Dear Readers,

Today is a very intense day, with lots of high-quality research papers published!! I don’t want to skip any good paper because, you know what, it’s a long weekend 🎉🎉 You did it: one more week of working hard and keeping up with the latest and greatest research papers in the field of LLMs. Well done! 👏🏻

Today I am dividing the newsletter into two parts, since I have a lot to cover and you have a lot of time (long weekend!) 🙃 Let’s do it.

🔗Code/data/weights: https://github.com/dvlab-research/MiniGemini

🤔Problem?:

The research paper addresses the performance gap between current visual language models (VLMs) and advanced models such as GPT-4 and Gemini on tasks such as visual dialog and reasoning.

💻Proposed solution:

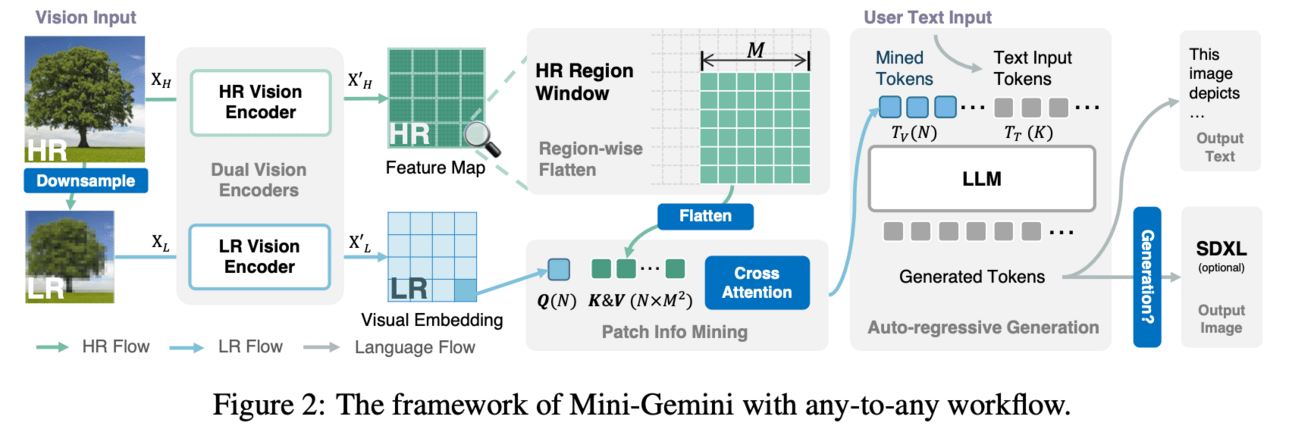

The research paper proposes a framework called Mini-Gemini which enhances VLMs in three ways: utilizing an additional visual encoder for high-resolution refinement without increasing the visual token count, constructing a high-quality dataset for precise image comprehension and reasoning-based generation, and using VLM-guided generation. This framework empowers current VLMs with image understanding, reasoning, and generation simultaneously.

📊Results:

The research paper demonstrates that Mini-Gemini achieves leading performance in several zero-shot benchmarks and even outperforms private models. The authors attribute this to making fuller use of the potential of VLMs, which yields both better performance and an any-to-any workflow.

🔗Code/data/weights: https://github.com/google-deepmind/long-form-factuality

🤔Problem?:

The research paper addresses the issue of factual errors in content generated by large language models (LLMs) when responding to fact-seeking prompts on open-ended topics.

💻Proposed solution:

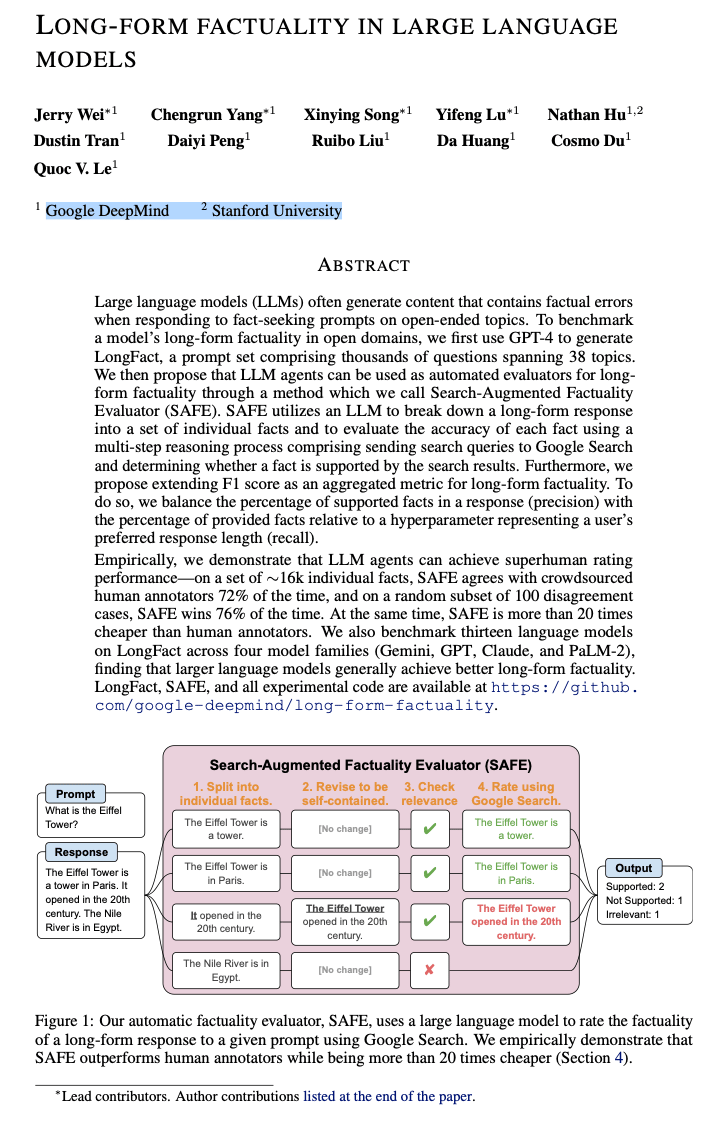

The research paper proposes a method called Search-Augmented Factuality Evaluator (SAFE) to solve the problem. This method uses an LLM to break down a long-form response into individual facts and then evaluates the accuracy of each fact using a multi-step reasoning process. This process involves sending search queries to Google and determining whether the fact is supported by the search results. The authors also extend the F1 score into an aggregated metric for long-form factuality, which balances the percentage of supported facts (precision) against the percentage of provided facts relative to a hyperparameter representing a user's preferred response length (recall).
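To make the extended F1 idea concrete, here is a minimal sketch of how such a metric could be computed from the per-fact labels. The function name `f1_at_k` and its parameters are my own naming. Capping recall at 1 and returning 0 when no fact is supported are assumptions on my side based on the description above, so please check the paper and the repo for the exact definition.

```python
def f1_at_k(supported: int, not_supported: int, k: int) -> float:
    """Sketch of an F1-style score for long-form factuality.

    supported:     number of facts judged as supported by search results
    not_supported: number of facts judged as not supported
    k:             hyperparameter for the user's preferred number of facts
    """
    if k <= 0:
        raise ValueError("k must be a positive integer")
    if supported == 0:
        # Assumption: a response with no supported facts scores zero.
        return 0.0
    # Precision: share of the rated facts that are supported.
    precision = supported / (supported + not_supported)
    # Recall: supported facts relative to the preferred length k, capped at 1.
    recall = min(supported / k, 1.0)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # A response with 8 supported and 2 unsupported facts, preferred length 16.
    print(round(f1_at_k(supported=8, not_supported=2, k=16), 3))
```

With a larger `k`, a short but accurate response is penalized on recall, while a long response padded with unsupported facts is penalized on precision.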

📊Results:

The research paper empirically demonstrates that LLM agents using the SAFE method can achieve superhuman rating performance. On a set of ~16k individual facts, SAFE agrees with crowdsourced human annotators 72% of the time, and in a random subset of 100 disagreement cases, SAFE wins 76% of the time. Additionally, it is more than 20 times cheaper than human annotators.

🤔Problem?:

The research paper addresses the problem of false information being generated by large language models (LLMs), which poses a major challenge in the field of AI.

💻Proposed solution:

The research paper builds on Inference-Time Intervention (ITI), which works by identifying attention heads within the LLM that contain the desired type of knowledge (such as truthful information) and shifting the activations of these attention heads during inference. The paper improves this framework with Non-Linear ITI (NL-ITI), which adds nonlinear probing and multi-token intervention.
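The core idea of shifting head activations can be sketched in a few lines. This is only an illustration of the basic shift, not the authors' code: the function name, the `directions` mapping and the strength `alpha` are hypothetical, and the sketch does not cover how heads are selected by probing or how NL-ITI extends the intervention over multiple tokens.

```python
from typing import Dict, List


def shift_head_activations(
    activations: List[List[float]],
    directions: Dict[int, List[float]],
    alpha: float,
) -> List[List[float]]:
    """Shift selected attention-head activations along given directions.

    activations: one activation vector per attention head
    directions:  hypothetical map from selected head index to a direction
                 vector (e.g. found by a probe) of the same length
    alpha:       hypothetical intervention strength
    """
    # Copy so the caller's activations are left untouched.
    shifted = [list(vector) for vector in activations]
    for head, direction in directions.items():
        if len(direction) != len(shifted[head]):
            raise ValueError("direction and activation sizes must match")
        shifted[head] = [a + alpha * d for a, d in zip(shifted[head], direction)]
    return shifted


if __name__ == "__main__":
    heads = [[1.0, 2.0], [3.0, 4.0]]
    # Only head 1 is selected; head 0 passes through unchanged.
    print(shift_head_activations(heads, {1: [1.0, 0.0]}, alpha=0.5))
```

Heads that are not selected pass through unchanged, which is one way to think about why such an intervention can stay relatively non-invasive.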

📊Results:

The research paper reports a 14% improvement in the MC1 metric on the TruthfulQA multiple-choice benchmark with NL-ITI compared to the baseline ITI results. It also achieves a significant 18% improvement on the Business Ethics subdomain of MMLU compared to the baseline LLaMA2-7B, while being less invasive to the LLM's behavior (as measured by Kullback-Leibler divergence).

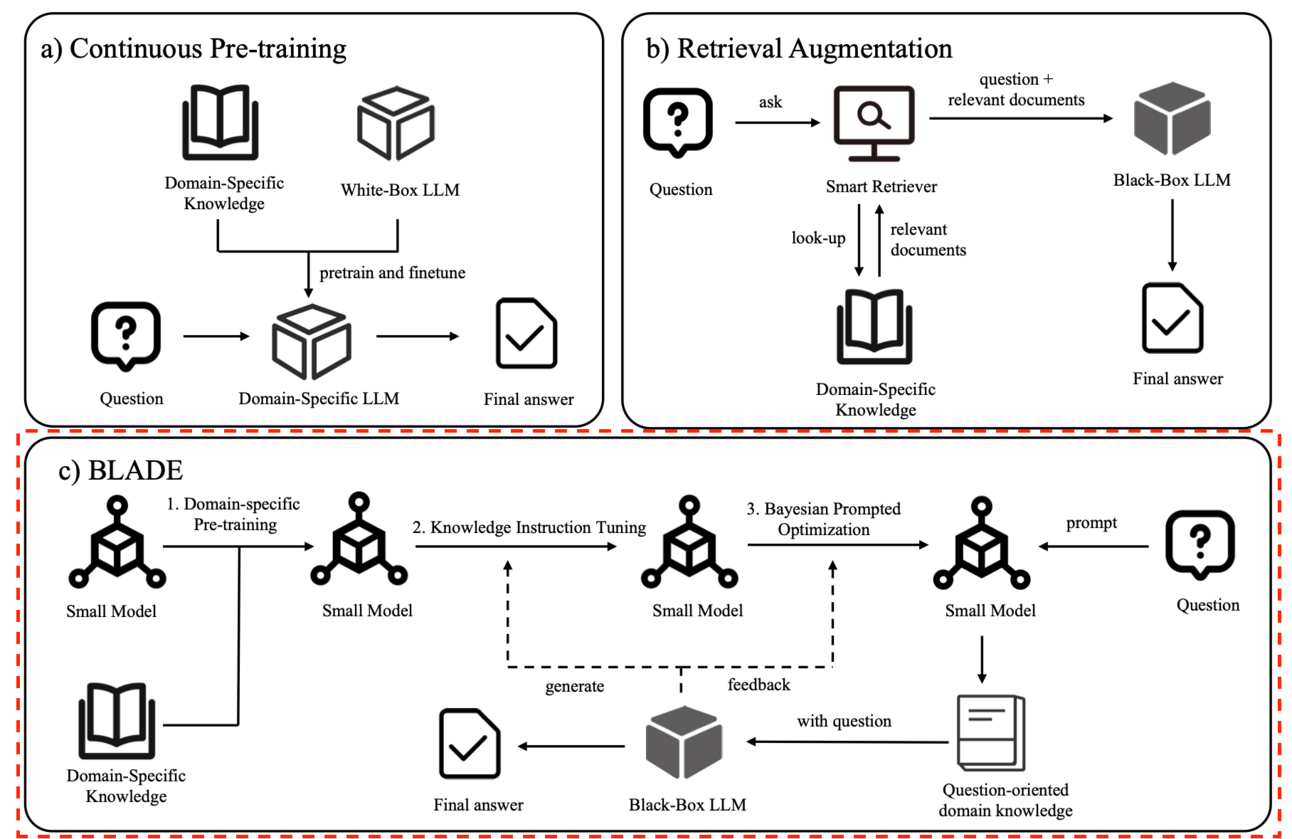

The research paper proposes a framework called BLADE, which stands for Black-box LArge language models with small Domain-spEcific models. This framework uses a general large language model (LLM) together with a small domain-specific language model (LM). The small LM is pre-trained on domain-specific data and offers specialized insights, while the general LLM provides robust language comprehension and reasoning capabilities. The framework then fine-tunes the small LM using knowledge instruction data and uses joint Bayesian optimization to optimize both the general LLM and the small LM. This allows the general LLM to effectively adapt to vertical domains by incorporating domain-specific knowledge from the small LM.
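Here is a rough sketch of how the two models could work together at inference time. It assumes the small LM's output is simply placed into the prompt of the general LLM. That wiring, the prompt wording and the names `blade_style_answer`, `small_domain_lm` and `general_llm` are my assumptions for illustration, not the authors' implementation, and the fine-tuning and Bayesian optimization steps are left out.

```python
from typing import Callable


def blade_style_answer(
    question: str,
    small_domain_lm: Callable[[str], str],
    general_llm: Callable[[str], str],
) -> str:
    """Combine a small domain LM with a black-box general LLM.

    Both models are passed in as plain callables (hypothetical stand-ins
    for real model calls) that map a prompt string to a completion string.
    """
    # Step 1: the small domain-specific LM produces background knowledge.
    knowledge = small_domain_lm(question)
    # Step 2: the general LLM answers with that knowledge in its prompt.
    prompt = (
        f"Background knowledge:\n{knowledge}\n\n"
        f"Question: {question}\nAnswer:"
    )
    return general_llm(prompt)


if __name__ == "__main__":
    # Toy stand-ins so the sketch runs without any real model.
    def toy_small_lm(q: str) -> str:
        return "Contracts generally require offer and acceptance."

    def toy_general_llm(p: str) -> str:
        return f"(answer based on {len(p)} prompt characters)"

    print(blade_style_answer("What makes a contract valid?", toy_small_lm, toy_general_llm))
```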

📊Results:

The authors conducted extensive experiments on public legal and medical benchmarks and found that BLADE significantly outperformed existing approaches. This demonstrates the effectiveness and cost-efficiency of BLADE in adapting general LLMs to vertical domains.

🤔Problem?:

The research paper addresses the challenges of ambiguity and inconsistency in current evaluation methods for language models.

💻Proposed solution:

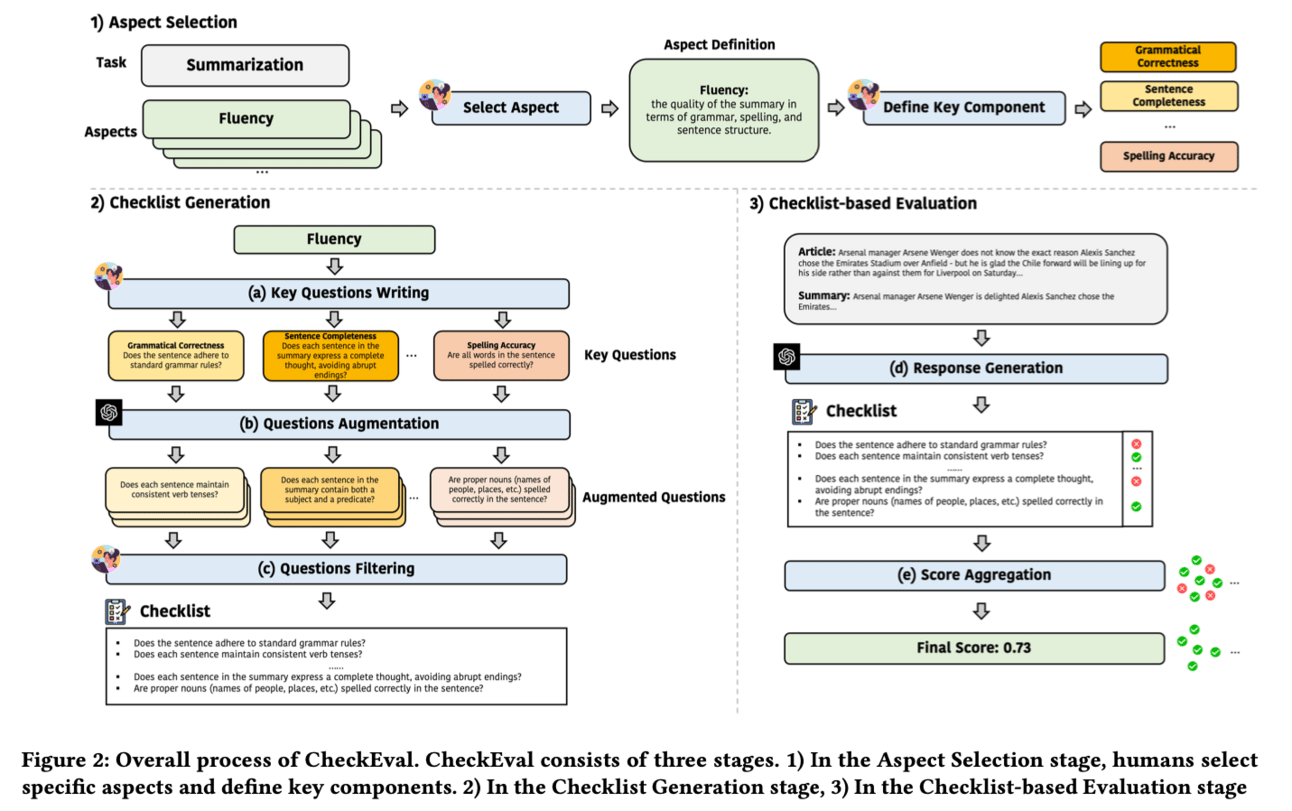

The research paper proposes a novel evaluation framework called CheckEval, which divides evaluation criteria into detailed sub-aspects and constructs a checklist of Boolean questions for each. This approach simplifies the evaluation process, making it more interpretable, robust, and reliable. Additionally, it offers a customizable and interactive framework for future evaluations.
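To picture the checklist idea, here is a small sketch that turns yes/no answers into one score per sub-aspect. Scoring a sub-aspect as the share of "yes" answers is my assumption for illustration, and the sub-aspect names and the function `checklist_scores` are made up for the example; the paper may aggregate the answers differently.

```python
from typing import Dict, List


def checklist_scores(answers: Dict[str, List[bool]]) -> Dict[str, float]:
    """Aggregate Boolean checklist answers into a score per sub-aspect.

    answers: hypothetical map from sub-aspect name to the yes/no answers
             given to that sub-aspect's checklist questions
    """
    scores = {}
    for aspect, checks in answers.items():
        if not checks:
            raise ValueError(f"no checklist answers for sub-aspect {aspect!r}")
        # Assumption: the score is the share of questions answered "yes".
        scores[aspect] = sum(checks) / len(checks)
    return scores


if __name__ == "__main__":
    example = {
        "coherence": [True, True, False, True],
        "fluency": [True, False],
    }
    print(checklist_scores(example))
```

Because every question is a simple yes or no, it is easy to see which checks a summary failed, which is where the interpretability comes from.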

📊Results:

The research paper does not report a specific performance improvement, but CheckEval is validated through a focused case study on the SummEval benchmark, showing a strong correlation with human judgments and highly consistent inter-annotator agreement. This highlights the effectiveness of CheckEval for objective, flexible, and precise evaluations.

💻Proposed solution:

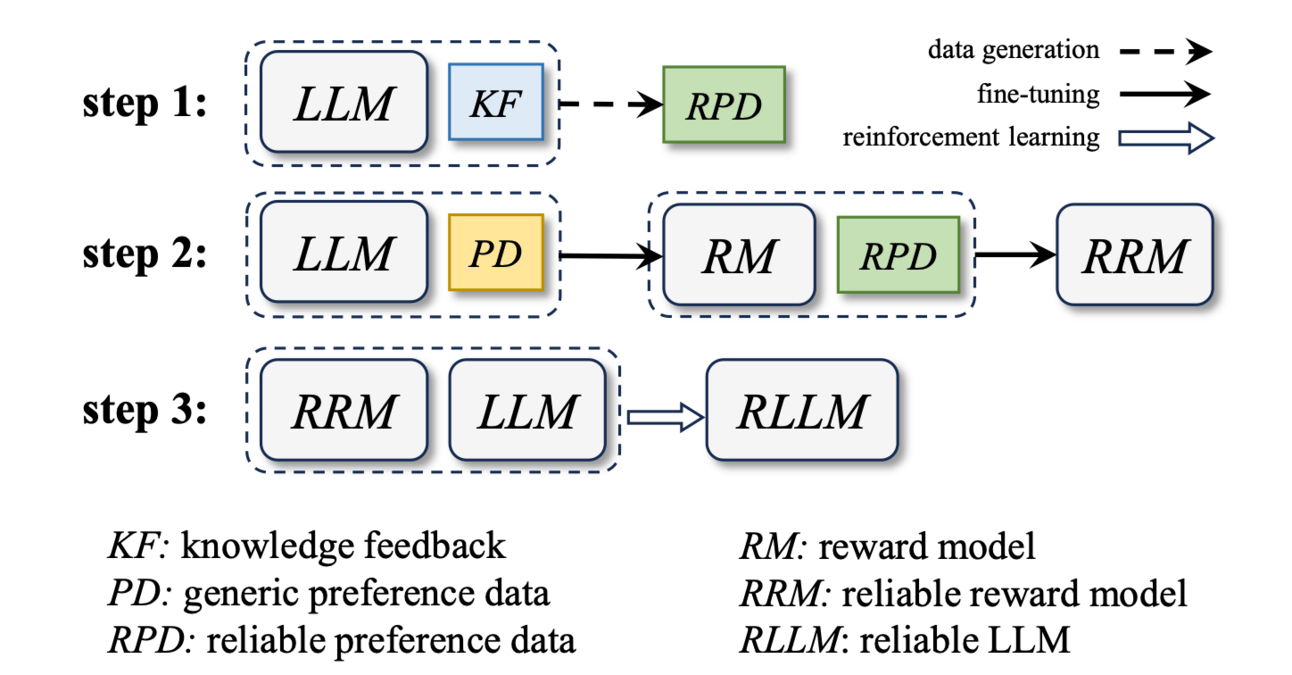

The research paper proposes a novel alignment framework called Reinforcement Learning from Knowledge Feedback (RLKF) to solve the problem of hallucinations in LLMs. RLKF leverages knowledge feedback to dynamically determine the model's knowledge boundary and trains a reliable reward model to encourage the refusal of out-of-knowledge questions. This approach improves the inherent reliability of LLMs by minimizing hallucinations and providing accurate responses.
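One way to picture the knowledge-boundary idea is as preference data for the reward model. The sketch below assumes the boundary is estimated by sampling several answers to a question and checking how often they are correct. The threshold, the pair format and the function name `build_preference_pair` are hypothetical and not taken from the paper.

```python
from typing import Dict, List


def build_preference_pair(
    sampled_correct: List[bool],
    answer: str,
    refusal: str,
    threshold: float = 0.5,
) -> Dict[str, str]:
    """Build a (chosen, rejected) pair for reward-model training.

    sampled_correct: whether each sampled answer to the question was correct
    answer:          the model's direct answer
    refusal:         a refusal such as "I don't know"
    threshold:       hypothetical accuracy cutoff for "inside the knowledge boundary"
    """
    if not sampled_correct:
        raise ValueError("need at least one sampled answer")
    accuracy = sum(sampled_correct) / len(sampled_correct)
    if accuracy >= threshold:
        # The model seems to know this: prefer answering over refusing.
        return {"chosen": answer, "rejected": refusal}
    # Out-of-knowledge question: prefer refusing over a likely hallucination.
    return {"chosen": refusal, "rejected": answer}


if __name__ == "__main__":
    print(build_preference_pair([True, True, False], "Paris", "I don't know"))
    print(build_preference_pair([False, False, True], "Lyon", "I don't know"))
```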

🤔Problem?:

The research paper addresses the problem of bridging the gap between the video modality and large language models (LLMs).

💻Proposed solution:

The research paper proposes a novel strategy called Image Grid Vision Language Model (IG-VLM) to solve this problem. This strategy transforms a video into a single composite image, termed an image grid, by arranging multiple frames in a grid layout. The image grid retains temporal information within its structure, allowing direct application of a single high-performance Vision Language Model (VLM) without the need for video-data training.
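The image grid itself is easy to sketch. Below, frames are plain nested lists of pixel values so the example runs without any image library. The evenly spaced sampling rule, the row-by-row frame order and both function names are my assumptions for illustration; the paper's exact grid size and sampling may differ.

```python
from typing import List

Frame = List[List[int]]  # a frame as rows of pixel values


def sample_frame_indices(total_frames: int, n: int) -> List[int]:
    """Pick n evenly spaced frame indices (midpoint of each equal segment)."""
    if n <= 0 or total_frames < n:
        raise ValueError("need 0 < n <= total_frames")
    return [(2 * i + 1) * total_frames // (2 * n) for i in range(n)]


def make_image_grid(frames: List[Frame], rows: int, cols: int) -> Frame:
    """Arrange equally sized frames into one composite image, row by row."""
    if len(frames) != rows * cols:
        raise ValueError("number of frames must equal rows * cols")
    height = len(frames[0])
    grid: Frame = []
    for r in range(rows):
        row_frames = frames[r * cols:(r + 1) * cols]
        for y in range(height):
            line: List[int] = []
            for frame in row_frames:
                # Place the frames of this grid row side by side.
                line.extend(frame[y])
            grid.append(line)
    return grid


if __name__ == "__main__":
    # Four tiny 1x2 "frames", each filled with its own frame index.
    frames = [[[i, i]] for i in range(4)]
    for line in make_image_grid(frames, rows=2, cols=2):
        print(line)
```

Reading the grid left to right and top to bottom follows the frame order, which is how the temporal information survives in a single image.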

📊Results:

The proposed approach achieved significant performance improvements in nine out of ten zero-shot video question answering benchmarks, including both open-ended and multiple-choice benchmarks. This demonstrates the effectiveness of the IG-VLM strategy in bridging the modality gap between video and language models.

Some interesting applications of LLMs that I have not covered but thought might be useful based on your area of work:

Stay tuned for part 2 of today’s newsletter!!! You can do it, it’s a long weekend!