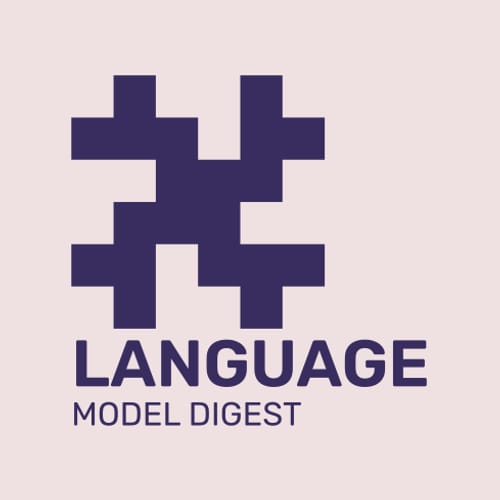

Quantifying and Mitigating Unimodal Biases in Multimodal Large Language Models: A Causal Perspective

🔗 Project page: https://opencausalab.github.io/MORE/

🤔Problem?:

The research paper addresses the issue of unimodal biases in Multimodal Large Language Models (MLLMs) leading to incorrect answers in complex multimodal tasks, specifically in Visual Question Answering (VQA) problems.

💻Proposed solution:

The research paper proposes a causal framework to interpret and assess unimodal biases in MLLMs' predictions on VQA problems. The framework builds a causal graph of the relationships between the factors involved and uses it to design a new dataset, MORE, which is meant to challenge MLLMs by requiring them to overcome unimodal biases. The paper also suggests two strategies to strengthen MLLMs' reasoning: a Decompose-Verify-Answer (DeVA) framework for limited-access MLLMs, and fine-tuning for open-source MLLMs.
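
The three DeVA stages can be sketched as a prompting loop around a model that is only reachable through an API. This is a minimal sketch under stated assumptions. `ask` is a hypothetical callable standing in for any limited-access MLLM endpoint, and `deva` and all prompt wording are illustrative. None of it is the paper's exact prompts.

```python
from typing import Callable, List

# Hypothetical stand-in for a limited-access MLLM API: (image_ref, prompt) -> text.
AskFn = Callable[[str, str], str]

def deva(ask: AskFn, image: str, question: str) -> str:
    """Decompose-Verify-Answer as a three-stage prompting loop (illustrative prompts)."""
    # 1. Decompose: ask for the sub-questions the final answer depends on.
    plan = ask(image, f"List the sub-questions needed to answer: {question}")
    sub_questions: List[str] = [
        line.strip("- ").strip() for line in plan.splitlines() if line.strip()
    ]
    # 2. Verify: answer each sub-question separately against the image, so the visual
    #    evidence is checked explicitly instead of guessed from language priors.
    evidence = [f"{q} -> {ask(image, q)}" for q in sub_questions]
    # 3. Answer: condition the final answer on the verified evidence only.
    context = "\n".join(evidence)
    return ask(image, f"Evidence:\n{context}\nUsing only this evidence, answer: {question}")
```

The design splits one opaque query into several small, checkable ones. This is the property that matters when the model's weights cannot be fine-tuned.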

📊Results:

The research paper does not report a specific performance improvement, but it provides useful insights for future research on mitigating unimodal biases in MLLMs.

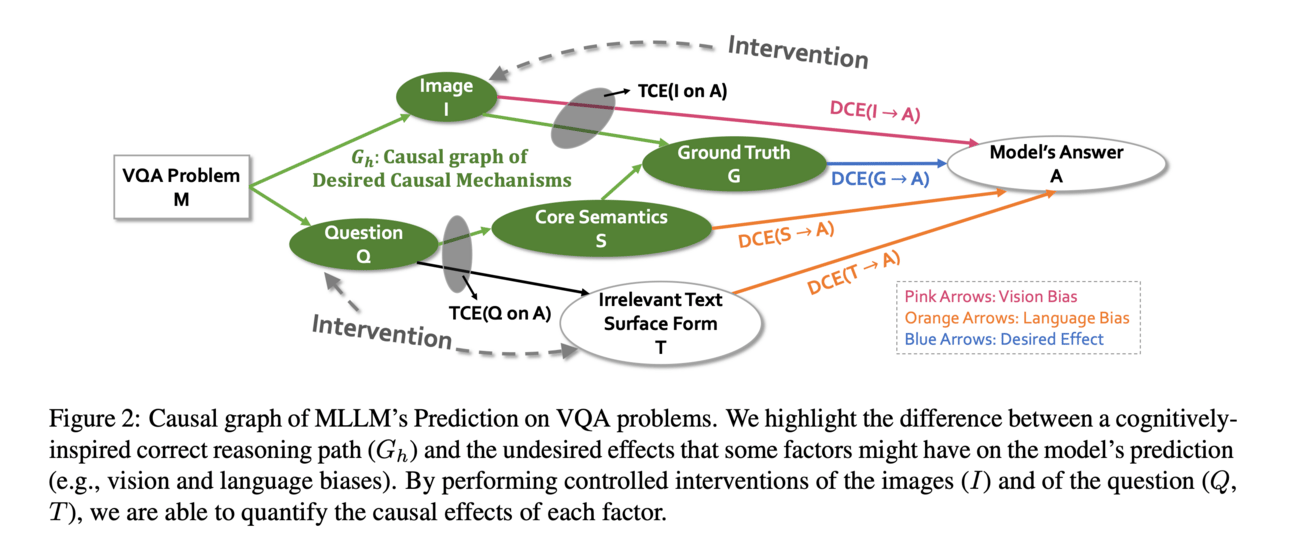

🤔Problem?:

The research paper aims to address the limitations of existing relation-aware sequential recommendation models, which rely on manually predefined relations and suffer from sparsity issues, limiting their ability to generalize in diverse scenarios with varied item relations.

💻Proposed solution:

The research paper proposes a novel framework called Latent Relation Discovery (LRD), which uses a large language model (LLM) to automatically mine latent relations between items for recommendation. LRD harnesses the human-like knowledge contained in the LLM to obtain language knowledge representations of items. These representations are then fed into a latent relation discovery module based on the discrete state variational autoencoder (DVAE). By jointly optimizing the self-supervised relation discovery task and the recommendation task, LRD improves upon existing relation-aware models.
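
To make the DVAE step more concrete, here is a toy sketch of how a discrete latent relation could be inferred for an item pair from LLM-derived item embeddings. It relies on details the summary does not give, and all of them are assumptions:

- the pair feature is an element-wise product;
- relations are scored against learnable prototypes;
- sampling uses Gumbel-softmax, a standard relaxation for discrete latent variables.

`relation_posterior` is a hypothetical name. This is not LRD's exact formulation.

```python
import math
import random
from typing import List, Optional, Sequence

def relation_posterior(e_i: Sequence[float], e_j: Sequence[float],
                       prototypes: Sequence[Sequence[float]],
                       tau: float = 1.0,
                       rng: Optional[random.Random] = None) -> List[float]:
    """Return a (relaxed) one-hot distribution over K latent relations for items i, j."""
    # Encoder (assumed): a symmetric pair feature scored against K relation prototypes.
    pair = [a * b for a, b in zip(e_i, e_j)]
    logits = [sum(p * w for p, w in zip(pair, proto)) for proto in prototypes]
    if rng is not None:
        # Gumbel-softmax: add Gumbel(0, 1) noise, -log(-log(u)), to sample a discrete
        # relation while keeping the output continuous for gradient-based training.
        logits = [x - math.log(-math.log(max(rng.random(), 1e-12))) for x in logits]
    scaled = [x / tau for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]
```

In a trained model, the `argmax` of the returned vector would give the discovered relation id for the pair. The prototypes would be learned jointly with the reconstruction and recommendation objectives. Lowering `tau` pushes the output closer to a hard one-hot choice.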

📊Results:

Experimental results on multiple public datasets show that LRD significantly improves the performance of existing relation-aware sequential recommendation models. Further analysis experiments indicate that the discovered latent relations are effective and reliable. Together, these results show that LRD can be integrated into existing relation-aware models and yield notable performance gains.

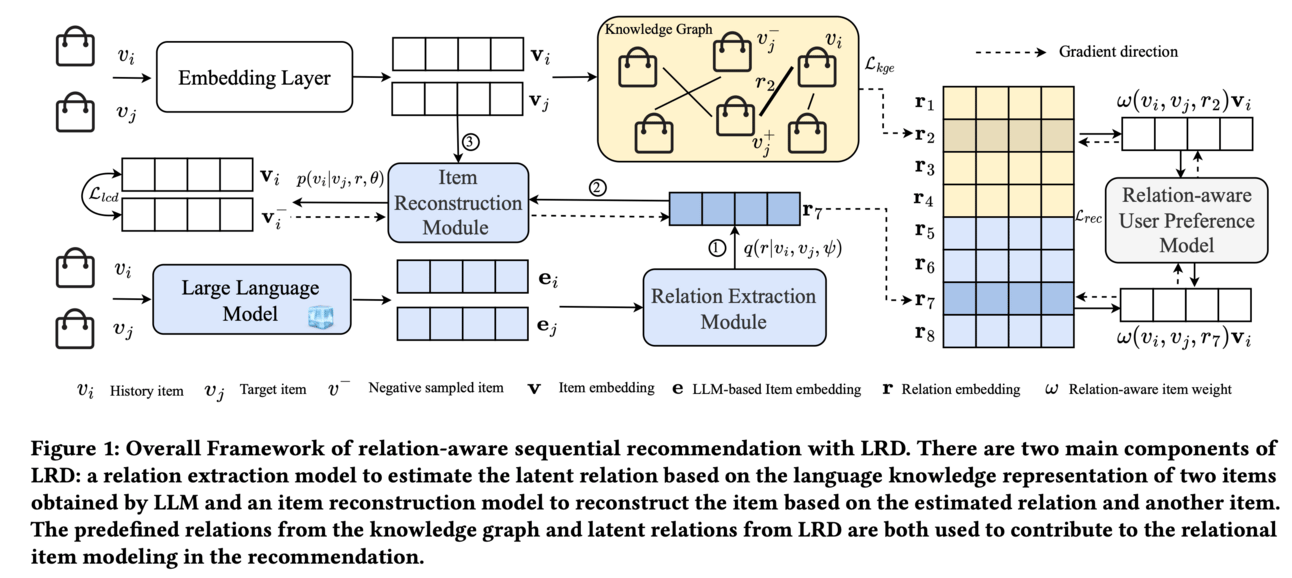

🤔Problem?:

The research paper addresses the problem of adapting retrieval-augmented generation (RAG) to the complex conversational setting, where each question depends on the preceding context. This matters because most previous work on RAG has focused on single-round question answering, while conversational question answering (CQA) requires a more dynamic and robust approach.

💻Proposed solution:

The research paper proposes a conversation-level RAG approach for CQA that incorporates fine-grained retrieval augmentation and self-check. It consists of three components that work together to improve question understanding and acquire relevant information in conversational settings:

- a conversational question refiner, which refines the original question;
- a fine-grained retriever, which retrieves relevant information from a large external knowledge base;
- a self-check based response generator, which produces the final response from the refined question and the retrieved information.

The goal is to improve the reliability and accuracy of responses in CQA.
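
The three-stage pipeline can be sketched end to end with toy components, purely to show how data flows between the stages. Every piece here is an assumption standing in for an LLM or a real retriever:

- the refiner simply prepends the previous turn;
- the retriever ranks individual sentences by word overlap;
- the self-check keeps only evidence above a hypothetical `min_overlap` threshold and abstains otherwise.

All function names are hypothetical.

```python
import re
from typing import List, Set, Tuple

def tokens(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def refine_question(history: List[str], question: str) -> str:
    # Toy refiner: prepend the previous turn so pronouns like "it" keep their referent.
    # A real refiner would ask an LLM to rewrite the question into a standalone form.
    return f"{history[-1]} {question}" if history else question

def fine_grained_retrieve(refined: str, docs: List[str], k: int = 2) -> List[Tuple[int, str]]:
    # Fine-grained: split documents into sentences and rank each sentence
    # (not each whole document) by word overlap with the refined question.
    q = tokens(refined)
    sentences = [s.strip() for d in docs for s in re.split(r"(?<=[.!?])\s+", d) if s.strip()]
    scored = sorted(((len(q & tokens(s)), s) for s in sentences), key=lambda x: -x[0])
    return scored[:k]

def self_check_generate(evidence: List[Tuple[int, str]], min_overlap: int = 2) -> str:
    # Self-check: keep only evidence that clears the support threshold; abstain otherwise.
    # A real generator would prompt an LLM with the refined question plus this evidence.
    supported = [s for score, s in evidence if score >= min_overlap]
    if not supported:
        return "I don't know based on the retrieved evidence."
    return " ".join(supported)
```

For the follow-up "How tall is it?" after "Tell me about the Eiffel Tower.", the refined question lets the retriever rank a sentence about the tower's height first, even though "it" alone carries no information.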

🤔Problem?:

The research paper addresses the challenge of deploying large generative models, such as language models and diffusion models, on edge devices, given their slow inference speed and high computation and memory requirements.

💻Proposed solution:

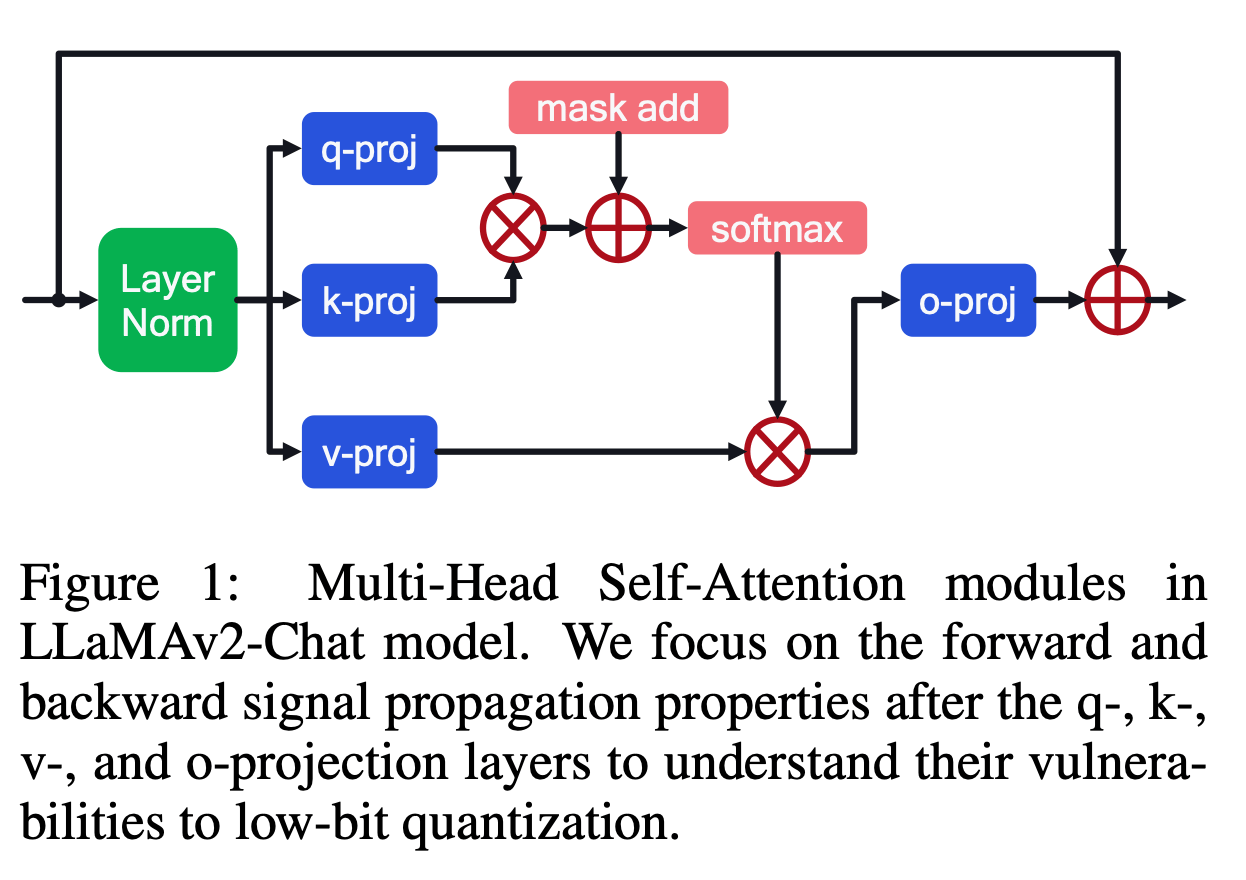

The research paper proposes a lightweight quantization-aware fine-tuning technique that uses knowledge distillation (KD-QAT) to improve the performance of LLMs with 4-bit quantized weights. The quantized model is trained on commonly available datasets, and knowledge distillation transfers knowledge from a larger, more accurate teacher model to the smaller, quantized student. The paper also studies gradient propagation during training, which exposes vulnerabilities to low-bit quantization errors. Based on this analysis, it introduces a technique called ov-freeze to stabilize the KD-QAT process.
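
The two ingredients of KD-QAT can be sketched in a few lines: a 4-bit "fake" quantizer applied to weights during training, and a distillation loss between teacher and student outputs. This is a generic textbook sketch, not the paper's recipe, and it rests on several assumptions:

- symmetric per-tensor int4 quantization;
- a temperature-scaled KL-divergence loss;
- a default temperature of 2.0.

The function names are hypothetical, and ov-freeze is not modeled because the summary does not describe its mechanics.

```python
import math
from typing import List

def fake_quantize_int4(weights: List[float]) -> List[float]:
    """Symmetric per-tensor 4-bit fake quantization (quantize, then dequantize)."""
    max_abs = max(abs(w) for w in weights) or 1.0  # avoid a zero scale
    scale = max_abs / 7  # int4 levels are -8..7; map the largest magnitude to 7
    return [max(-8, min(7, round(w / scale))) * scale for w in weights]

def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp((x - m) / temperature) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def kd_loss(teacher_logits: List[float], student_logits: List[float],
            temperature: float = 2.0) -> float:
    """KL(teacher || student) on temperature-softened outputs, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl
```

In a real QAT loop, the student's forward pass would use `fake_quantize_int4`-style weights, and gradients would pass through the rounding step, commonly via a straight-through estimator. The loss to minimize would then be `kd_loss(teacher_logits, student_logits)`.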

📊Results:

The research paper does not report an overall improvement figure. However, with the proposed ov-freeze technique, the 7B LLaMAv2-Chat model quantized to 4 bits achieves near floating-point performance, with less than 0.7% accuracy loss on Commonsense Reasoning benchmarks.
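
A quick back-of-the-envelope calculation shows why 4-bit weights matter for edge deployment. It assumes the nominal 7 billion parameters and counts only the weights. Quantization scales, activations, and the KV cache are ignored. `weight_memory_gb` is a hypothetical helper.

```python
def weight_memory_gb(n_params: float, bits: int) -> float:
    # Bytes for the weights alone: params * bits / 8, reported in GB (1e9 bytes).
    return n_params * bits / 8 / 1e9

for bits in (16, 4):
    print(f"{bits}-bit: {weight_memory_gb(7e9, bits):.1f} GB")
# 16-bit: 14.0 GB
# 4-bit: 3.5 GB
```

The 4x reduction in weight memory is what makes near-float accuracy at 4 bits a meaningful result.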

🤔Problem?:

The research paper addresses the issue of large language models (LLMs) making unjustified logical and computational errors when solving mathematical quantitative reasoning problems.

💻Proposed solution:

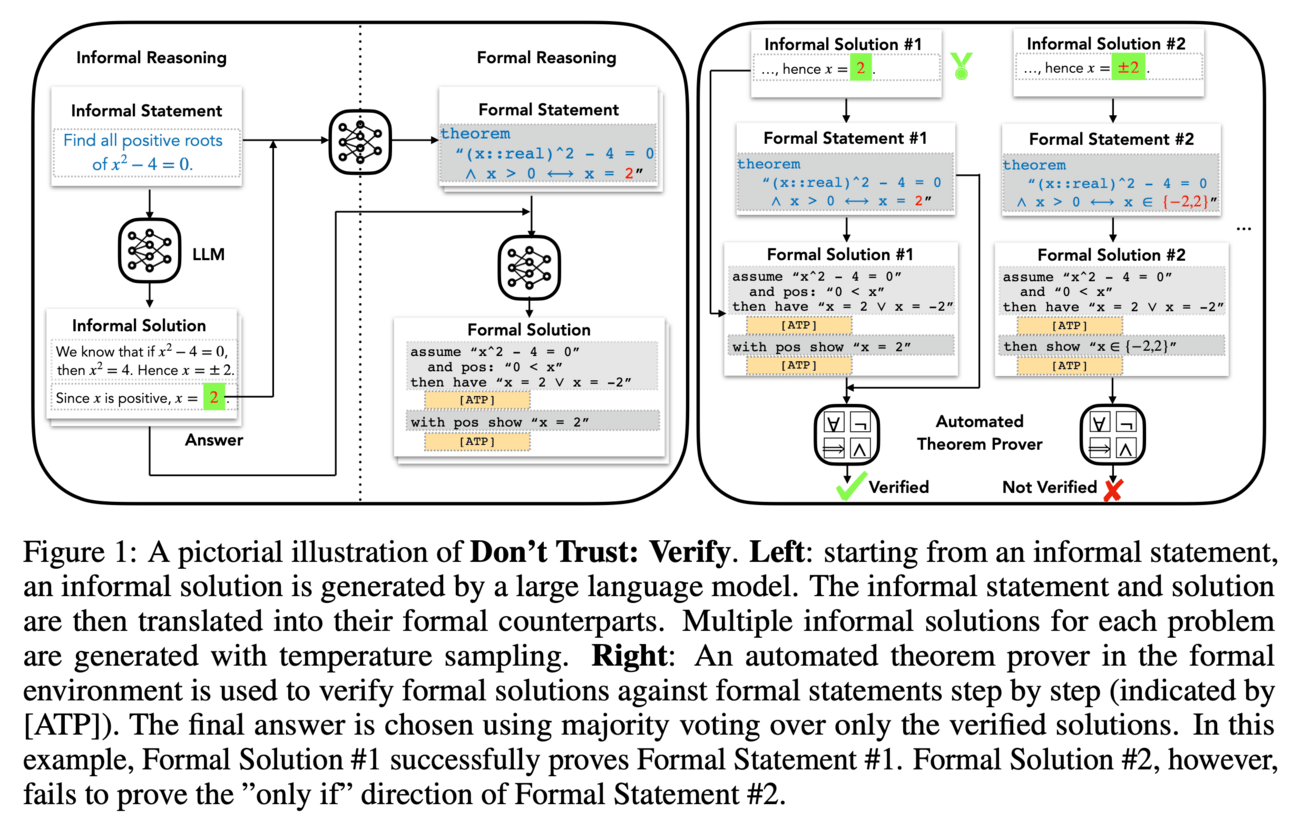

The research paper builds on the observation that, if an LLM's training corpus contains enough examples of formal mathematics, the LLM can be prompted to translate (autoformalize) informal mathematical statements into formal Isabelle code. That code can then be verified automatically for internal consistency. This gives a mechanism for automatically rejecting solutions whose formalized versions are inconsistent with themselves or with the formalized problem statement. As a result, correct answers are easier to identify, and errors in the LLM's reasoning steps have less impact.
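
The answer-selection step can be sketched as "filter, then vote": discard sampled solutions whose formalization fails the checker, then take a majority vote over the survivors. This is a sketch under stated assumptions:

- `verify` is a hypothetical stand-in for running Isabelle on the formalized solution;
- each solution is represented as a pair of final answer and formal text;
- the fallback to plain majority voting when every candidate is rejected is an illustrative choice of this sketch.

```python
from collections import Counter
from typing import Callable, List, Optional, Tuple

# A sampled solution: (final_answer, formalized_solution_text).
Solution = Tuple[str, str]

def majority_vote(answers: List[str]) -> Optional[str]:
    """Vanilla majority voting: the most frequent answer, or None if there are none."""
    return Counter(answers).most_common(1)[0][0] if answers else None

def verified_vote(solutions: List[Solution], verify: Callable[[str], bool]) -> Optional[str]:
    """Drop solutions whose formalization fails verification, then vote on the rest."""
    kept = [answer for answer, formal in solutions if verify(formal)]
    if kept:
        return majority_vote(kept)
    # Assumed fallback: if the checker rejects everything, use plain majority voting.
    return majority_vote([answer for answer, _ in solutions])
```

The filter changes the outcome exactly when the most common answer comes from inconsistent reasoning. For example, three unverifiable samples answering "18" would outvote two verified samples answering "20" under vanilla majority voting, but not here.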

📊Results:

The research paper reports an improvement of more than 12% on the GSM8K dataset over vanilla majority voting, the previously best method for identifying correct answers. The method also improves results consistently across all datasets and LLM model sizes tested.

Interesting applications using LLMs: