Highlights

Enhanced Attention Mechanisms: Native Sparse Attention and SpargeAttn propose hardware-aligned and universal sparse frameworks that accelerate long-context inference and training without compromising performance.

Knowledge Integration & Continual Learning: "How Do LLMs Acquire New Knowledge?" and "How Much Knowledge Can You Pack into a LoRA Adapter" explore strategies to embed new information into LLMs while preserving established capabilities.

Temporal & Diffusion Innovations: The Continuous Diffusion Model and Temporal Heads work address the dynamic nature of language by refining outputs iteratively and specializing in time-specific information recall.

Advanced Fine-Tuning Strategies: Make LoRA Great Again, PAFT, and Thinking Preference Optimization introduce novel adaptation methods to improve efficiency and enhance chain-of-thought reasoning during fine-tuning.

Architectural Enhancements: You Do Not Fully Utilize Transformer's Representation Capacity and MUDDFormer offer solutions to overcome residual bottlenecks by improving cross-layer information flow and expanding representational power.

Survey paper

LLM architecture improvements

Native Sparse Attention: Hardware-Aligned and Natively Trainable Sparse Attention

Standard attention mechanisms are computationally expensive for long-context modeling. This paper addresses that cost by proposing a sparse attention mechanism that reduces computation and is also natively trainable on modern hardware.

Methodology

The authors introduce NSA, a dynamic hierarchical sparse strategy. In simple terms, NSA compresses tokens coarsely while selectively preserving crucial tokens at a fine-grained level. This two-tiered approach balances global context awareness with local precision. The design is optimized for modern hardware by balancing arithmetic intensity, and it supports end-to-end training, which reduces pre-training computation relative to full attention.

Results: Experiments reveal that models pre-trained with NSA not only match or exceed full-attention baselines on general benchmarks and long-context tasks but also achieve substantial speedups on 64k-length sequences during decoding, forward, and backward passes.
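
The coarse-then-fine idea described above can be sketched for a single query in plain Python. This is a minimal illustration under stated assumptions, not the paper's kernel: mean pooling stands in for NSA's learned compression, the names `select_blocks`, `sparse_attention`, `block_size`, and `top_k` are hypothetical, and everything else in the NSA design (including its hardware-aligned implementation) is omitted.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def select_blocks(query, keys, block_size, top_k):
    """Coarse stage: score mean-pooled key blocks and keep the top_k blocks."""
    blocks = [keys[i:i + block_size] for i in range(0, len(keys), block_size)]
    # Assumption: mean pooling replaces the learned compression.
    pooled = [[sum(col) / len(block) for col in zip(*block)] for block in blocks]
    scores = [dot(query, p) for p in pooled]
    ranked = sorted(range(len(blocks)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:top_k])


def sparse_attention(query, keys, values, block_size=2, top_k=1):
    """Fine stage: ordinary softmax attention restricted to the kept blocks."""
    chosen = select_blocks(query, keys, block_size, top_k)
    idx = [t for b in chosen
           for t in range(b * block_size, min((b + 1) * block_size, len(keys)))]
    scale = math.sqrt(len(query))
    weights = softmax([dot(query, keys[t]) / scale for t in idx])
    dim = len(values[0])
    out = [sum(w * values[t][d] for w, t in zip(weights, idx)) for d in range(dim)]
    return out, idx
```

Only the tokens inside the selected blocks enter the softmax, so the cost of the fine stage scales with `top_k * block_size` rather than with the full sequence length.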

How Do LLMs Acquire New Knowledge? A Knowledge Circuits Perspective on Continual Pre-Training | GitHub

While LLMs excel at knowledge-intensive tasks, the internal mechanisms that assimilate new knowledge remain poorly understood. This paper explores how neural circuits evolve to incorporate new facts during continual pre-training.

Methodology

Using a “knowledge circuits” lens, the study analyzes the formation and optimization of computational subgraphs responsible for knowledge storage. The researchers show that new information is more effectively integrated when it relates to pre-existing knowledge, and they identify a distinct deep-to-shallow evolution pattern in these circuits.

Continuous Diffusion Model for Language Modeling | GitHub

Traditional diffusion models for discrete data lose valuable iterative refinement signals. This study addresses the limitations of discrete diffusion in language modeling by proposing a continuous alternative.

Methodology

The paper bridges discrete diffusion and continuous flow on a statistical manifold. By incorporating the geometry of the underlying categorical distribution, the authors design a diffusion process that generalizes previous discrete models. A simulation-free training framework based on radial symmetry further alleviates the high-dimensionality challenge.
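
To make the "geometry of the categorical distribution" concrete, here is a small sketch of a standard fact from information geometry: the element-wise square root maps a probability vector onto the unit sphere, and the Fisher-Rao distance between two categorical distributions becomes twice the angle between their images. Whether the paper uses exactly this parameterization is an assumption, and the function names are illustrative.

```python
import math


def to_sphere(probs):
    """Map a categorical distribution to a point on the unit sphere."""
    return [math.sqrt(p) for p in probs]


def fisher_rao_distance(p, q):
    """Geodesic distance between two categorical distributions."""
    cosine = sum(a * b for a, b in zip(to_sphere(p), to_sphere(q)))
    # Clamp to guard against rounding slightly outside [-1, 1].
    return 2.0 * math.acos(min(1.0, max(-1.0, cosine)))
```

Two one-hot tokens sit at right angles on the sphere, so any pair of distinct tokens is the same distance apart, and a continuous path between them passes through valid probability vectors.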

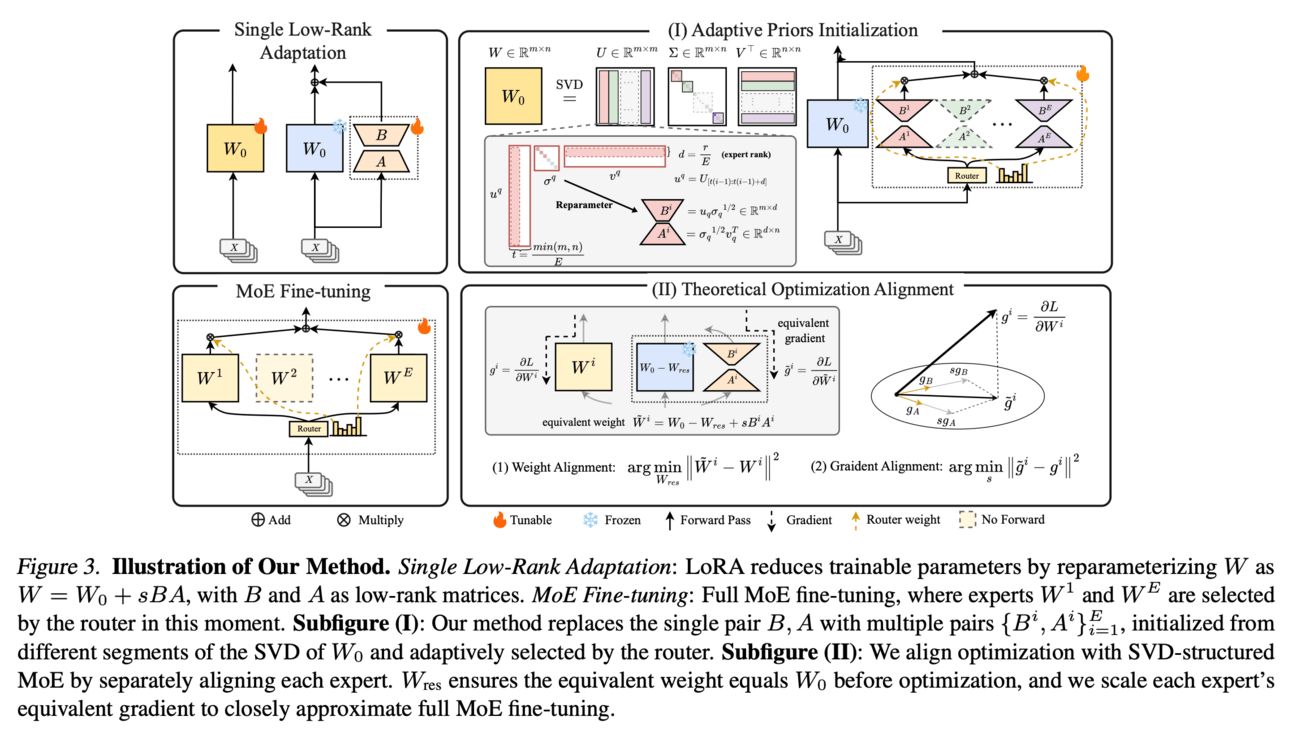

Make LoRA Great Again: Boosting LoRA with Adaptive Singular Values and Mixture-of-Experts Optimization Alignment

Although Low-Rank Adaptation (LoRA) enables efficient fine-tuning of LLMs, its performance traditionally lags behind full fine-tuning. This paper seeks to close that gap.

Methodology

The authors propose GOAT, a framework that dynamically integrates SVD-based priors with a Mixture-of-Experts (MoE) architecture. By deriving a theoretical scaling factor, GOAT realigns optimization dynamics to better harness pre-trained knowledge without modifying existing architecture or training routines.

SpargeAttn: Accurate Sparse Attention Accelerating Any Model Inference | GitHub

Even though many models exhibit sparse attention maps, most optimizations have been model-specific. There is a need for a universal sparse attention method that accelerates inference across diverse architectures.

Methodology

SpargeAttn employs a two-stage online filtering mechanism. Initially, it predicts the attention map to decide which matrix multiplications can be skipped. Then, an online softmax-aware filter further eliminates unnecessary computations without extra overhead.
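
A rough single-query sketch of the two stages follows. It is written under several assumptions: block mean pooling is used as the cheap attention-map predictor, and the thresholds `mass` and `gap` are hypothetical parameter names. The real method works on blocks of queries and keys inside a GPU kernel, which this toy version does not attempt to reproduce.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def predict_blocks(query, key_blocks, mass=0.95):
    """Stage 1: estimate block importance from mean keys and keep the
    smallest set of blocks whose estimated softmax mass reaches `mass`."""
    pooled = [[sum(c) / len(blk) for c in zip(*blk)] for blk in key_blocks]
    scores = [dot(query, p) for p in pooled]
    top = max(scores)
    probs = [math.exp(s - top) for s in scores]
    total = sum(probs)
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, covered = [], 0.0
    for i in order:
        kept.append(i)
        covered += probs[i] / total
        if covered >= mass:
            break
    return sorted(kept)


def filtered_attention(query, key_blocks, value_blocks, mass=0.95, gap=-10.0):
    """Stage 2: online softmax over the kept blocks. A block whose best score
    is far below the running maximum is skipped as negligible."""
    running_max, denom = -math.inf, 0.0
    out = [0.0] * len(value_blocks[0][0])
    for b in predict_blocks(query, key_blocks, mass):
        scores = [dot(query, k) for k in key_blocks[b]]
        local_max = max(scores)
        if local_max - running_max < gap:
            continue  # contribution after softmax would be close to zero
        new_max = max(running_max, local_max)
        scale = math.exp(running_max - new_max)  # rescale earlier partial sums
        weights = [math.exp(s - new_max) for s in scores]
        denom = denom * scale + sum(weights)
        out = [o * scale + sum(w * v[d] for w, v in zip(weights, value_blocks[b]))
               for d, o in enumerate(out)]
        running_max = new_max
    return [o / denom for o in out]
```

The second filter reuses the running maximum that online softmax already tracks, which is one way to read the "without extra overhead" claim.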

How Much Knowledge Can You Pack into a LoRA Adapter without Harming LLM?

Integrating new facts into LLMs via LoRA fine-tuning can sometimes lead to a degradation in general performance, particularly in question-answering benchmarks.

Methodology

This study systematically varies the proportion of new versus known facts in the training data when fine-tuning a Llama-3.1-8B-instruct model. The experiments highlight the delicate balance required to incorporate additional knowledge without biasing the model’s outputs toward overrepresented entities.

Results: The best performance is observed when the training data is a balanced mix of known and new facts. When the mix is biased, however, the model shows a decline in benchmark performance and a tendency to overcommit to a few dominant answers, which underscores the importance of careful data composition during LoRA-based updates.

Does Time Have Its Place? Temporal Heads: Where Language Models Recall Time-specific Information

While LLMs excel at fact recall, their ability to handle temporally dynamic information is less understood. This work investigates the specific mechanisms that store time-related knowledge.

Methodology

The researchers identify “Temporal Heads”—specialized attention heads that process time-specific information. By disabling these heads and observing performance drops in temporal recall (without affecting time-invariant knowledge), they demonstrate the critical role these heads play.

Results: The findings confirm that temporal heads are activated by both numeric cues (e.g., “in 2004”) and textual phrases (e.g., “in the year…”). The study lays the groundwork for targeted editing of temporal information within LLMs.
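
The ablation test described above can be illustrated with a toy model in which each attention head contributes a vector and a readout direction turns the sum into a logit. This is a sketch under assumptions: zero-ablation is used here, the paper's exact ablation procedure may differ, and `combine_heads` and `logit_drop` are hypothetical helper names.

```python
def combine_heads(head_outputs, ablated=()):
    """Sum per-head output vectors, leaving out any ablated head."""
    total = [0.0] * len(head_outputs[0])
    for index, vector in enumerate(head_outputs):
        if index in ablated:
            continue  # zero-ablation: the head contributes nothing
        total = [t + v for t, v in zip(total, vector)]
    return total


def logit_drop(head_outputs, readout, ablated):
    """How much a readout logit falls when the given heads are disabled."""
    def logit(vec):
        return sum(r * x for r, x in zip(readout, vec))
    full = logit(combine_heads(head_outputs))
    reduced = logit(combine_heads(head_outputs, ablated))
    return full - reduced
```

A head behaves like a temporal head in this toy setting when disabling it produces a large drop for a time-dependent readout and little or no drop for a time-invariant one.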

You Do Not Fully Utilize Transformer's Representation Capacity

Standard Transformers build each layer's representation solely from the immediately preceding layer's output, which limits their representation capacity.

Methodology

The authors introduce Layer-Integrated Memory (LIMe), which aggregates hidden states from earlier layers without increasing the overall memory footprint. This strategy counters representation collapse by enriching the model’s contextual information.
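
As a minimal sketch of cross-layer aggregation, the function below forms each token's representation as a weighted sum of that token's hidden states from all earlier layers. The weights are assumed to be learned scalars, one per layer, and `mix_layers` is a hypothetical name. How LIMe actually parameterizes and applies its routing is not specified here, so treat this only as an illustration of the general idea.

```python
def mix_layers(layer_states, layer_weights):
    """layer_states[l][t] is the hidden vector of token t at layer l.
    Returns one mixed vector per token."""
    num_tokens = len(layer_states[0])
    dim = len(layer_states[0][0])
    return [
        [sum(w * layer_states[l][t][d] for l, w in enumerate(layer_weights))
         for d in range(dim)]
        for t in range(num_tokens)
    ]
```

Because the hidden states of earlier layers already exist during the forward pass, mixing them adds weights but no new activations of sequence length.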

PAFT: Prompt-Agnostic Fine-Tuning

Fine-tuned LLMs often overfit to specific prompt formulations, resulting in fragile performance when the prompts vary. PAFT addresses this by aiming for prompt-agnostic robustness.

Methodology

PAFT operates in two stages. First, it generates a diverse set of synthetic candidate prompts. Then, during fine-tuning, it randomly samples from this set so that the model learns to rely on underlying task principles rather than fixed prompt cues.
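
The second stage can be sketched as a data-loading step that pairs each training example with a randomly chosen prompt. The template strings, the `{input}` placeholder, and the function name `sample_prompted_example` are assumptions for illustration. Generating the candidate prompts (the first stage) is outside this sketch.

```python
import random


def sample_prompted_example(example, prompt_templates, rng):
    """Wrap one training example in a randomly chosen prompt template."""
    template = rng.choice(prompt_templates)
    return template.format(input=example["input"]), example["target"]


def training_stream(examples, prompt_templates, epochs=1, seed=0):
    """Yield (prompt, target) pairs, resampling the prompt on every visit."""
    rng = random.Random(seed)
    for _ in range(epochs):
        for example in examples:
            yield sample_prompted_example(example, prompt_templates, rng)
```

Since the prompt is resampled each time an example is visited, the same input appears under different wordings across epochs, while the target stays fixed.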

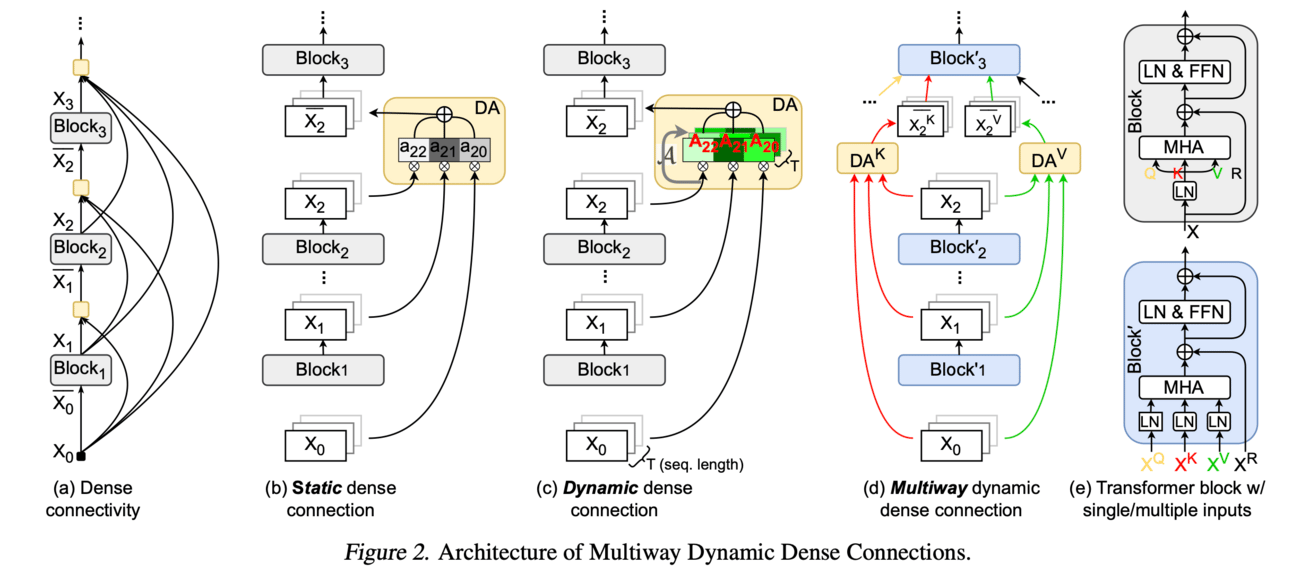

MUDDFormer: Breaking Residual Bottlenecks in Transformers via Multiway Dynamic Dense Connections | GitHub

Residual connections in Transformers can act as bottlenecks, limiting cross-layer information flow and thus hindering overall performance.

Methodology

MUDDFormer introduces Multiway Dynamic Dense (MUDD) connections, which generate connection weights dynamically based on the hidden states for each input stream (query, key, value, or residual). This approach effectively integrates information from earlier layers, breaking through the limitations of traditional residual architectures.

Results: The paper reports striking improvements: MUDDFormer matches the performance of Transformers trained with 1.8×–2.4× the compute. For example, the MUDDPythia-2.8B model matches the pretraining perplexity of Pythia-6.9B and rivals Pythia-12B in five-shot tasks, all while adding only 0.23% more parameters and 0.4% extra computation.
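
The idea of dynamic, per-stream connection weights can be sketched for a single token. In this toy version, a linear projection of the current hidden state generates one weight per earlier layer, and each stream has its own projection. The single linear map and the names `dynamic_dense_mix` and `mudd_inputs` are assumptions; the paper's weight generator may be more elaborate.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def dynamic_dense_mix(layer_states, weight_proj):
    """layer_states holds one token's hidden vector per layer, current layer
    last. weight_proj has one row per layer; each row is dotted with the
    current hidden state to produce that layer's connection weight."""
    current = layer_states[-1]
    weights = [dot(row, current) for row in weight_proj]
    dim = len(current)
    return [sum(w * h[d] for w, h in zip(weights, layer_states))
            for d in range(dim)]


def mudd_inputs(layer_states, projections):
    """Build a separate cross-layer mix for each stream (query, key, ...)."""
    return {stream: dynamic_dense_mix(layer_states, proj)
            for stream, proj in projections.items()}
```

Unlike a fixed residual sum, the weights here depend on the token's current hidden state, so different tokens can draw on different earlier layers.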

Thinking Preference Optimization

Supervised Fine-Tuning (SFT) for chain-of-thought reasoning often leads to performance plateaus or declines with repeated training, and acquiring high-quality long responses is costly.

Methodology

Thinking Preference Optimization (ThinkPO) is a post-SFT method that leverages readily available short chain-of-thought responses as “rejected” answers and long responses as “chosen” ones. By applying direct preference optimization, the model is nudged to generate more comprehensive reasoning without the need for new, expensive data collection.

Results: ThinkPO boosts performance significantly—math reasoning accuracy increases by 8.6% and output length by 25.9%. Notably, when applied to the DeepSeek-R1-Distill-Qwen-7B model, performance on the MATH500 benchmark improves from 87.4% to 91.2%.
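
The pairing scheme and the preference objective can be sketched as follows. `make_pair` builds one training record from a long and a short chain-of-thought answer, and `dpo_loss` is the standard direct preference optimization loss on summed log-probabilities. The field names, the default `beta`, and the function names are assumptions; computing the log-probabilities from a policy and a frozen reference model is left out.

```python
import math


def make_pair(question, long_cot, short_cot):
    """Long reasoning is preferred; short reasoning is the rejected answer."""
    return {"prompt": question, "chosen": long_cot, "rejected": short_cot}


def dpo_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected, beta=0.1):
    """DPO loss for one pair, given sequence log-probabilities under the
    policy being trained and under the frozen reference model."""
    margin = (policy_chosen - ref_chosen) - (policy_rejected - ref_rejected)
    # -log(sigmoid(beta * margin)) written in a numerically friendlier form.
    return math.log1p(math.exp(-beta * margin))
```

The loss falls as the policy raises the likelihood of the long answer relative to the short one, measured against the reference model, which is how the method pushes toward more comprehensive reasoning without collecting new data.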