📑 IN THIS ISSUE

DeepSeek-R1 has become very popular online, and as an AI professional, it's important to understand why. How is the DeepSeek team able to train models with just a few million dollars in GPU computing, while teams like OpenAI need billions?

Take some time to read this newsletter. It's important to learn about this model, as it represents a new research direction in large language models (LLMs).

TL;DR: Listen to this amazing podcast discussing the research paper.

Introduction

Remember when calculators transformed mathematics? We're at a similar inflection point with AI. DeepSeek-R1 isn't just another language model – it's a fundamental rethinking of how AI systems learn to reason.

DeepSeek-R1 has sent shockwaves through the AI community, wiping $1 trillion from tech market caps in a single day. But beyond the market chaos lies a revolutionary approach to AI reasoning that could redefine the field. While ChatGPT and others focus on generating human-like responses, DeepSeek-R1 focuses on something more crucial: teaching AI how to think step-by-step through problems.

"This isn't just another model release - it's a fundamental rethinking of how AI systems learn to reason. DeepSeek-R1 represents what we've been missing in the field - focus on quality of thinking rather than just output generation."

Market reaction

Quadrant summarizing reaction on DeepSeek-R1 launch

Interesting facts:

Ultra-low training cost: reportedly $5.6 million for the base model

According to sources, DeepSeek bought a large number of Nvidia A100 GPUs (between 10,000 and 50,000 units) before U.S. chip restrictions were put in place.

Technical Analysis

The core idea of DeepSeek-R1 is to improve the reasoning capability of LLMs by incorporating a reward mechanism that incentivizes logical reasoning steps, rather than focusing solely on generating correct final answers. The model undergoes a three-phase training process:

Supervised Fine-Tuning (SFT)

Reward Model Training

Reinforcement Learning via Proximal Policy Optimization (PPO)

Stage 1: Supervised Fine-Tuning (SFT)

The process begins with supervised fine-tuning of the base DeepSeek-LLM model. The team meticulously curated a dataset combining GSM8K, MATH, LogiQA, and ProofWriter datasets. What sets their approach apart is the careful structuring of this data. Rather than simply using question-answer pairs, they incorporated detailed reasoning paths and step-by-step solutions.

The fine-tuning process employs a dynamic curriculum learning strategy. Problems are presented in increasing order of complexity, with the model's performance determining when to introduce more challenging examples. This ensures the model builds a strong foundation in basic reasoning before tackling more complex problems.
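The performance-gated curriculum described above can be sketched in a few lines. This is a toy illustration, not the team's training code: `Level`, `ToyModel`, the scalar `difficulty`, the `promote_at` threshold of 0.8, and the fixed skill gain per round are all assumptions made up for the example.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Level:
    name: str
    difficulty: float  # hypothetical scalar; higher means harder


class ToyModel:
    """Stand-in for an LLM: 'skill' grows by a fixed amount per training round."""

    def __init__(self) -> None:
        self.skill = 0.0

    def train_one_round(self, level: Level) -> None:
        self.skill += 0.5

    def accuracy(self, level: Level) -> float:
        return min(1.0, self.skill / level.difficulty)


def run_curriculum(
    model: ToyModel,
    levels: List[Level],
    promote_at: float = 0.8,
    max_rounds: int = 20,
) -> List[Tuple[str, int]]:
    """Visit levels from easiest to hardest; move on only once the
    model's accuracy on the current level reaches `promote_at`."""
    history = []
    for level in sorted(levels, key=lambda lv: lv.difficulty):
        rounds = 0
        while model.accuracy(level) < promote_at and rounds < max_rounds:
            model.train_one_round(level)
            rounds += 1
        history.append((level.name, rounds))
    return history


levels = [
    Level("multi-step proofs", 4.0),
    Level("arithmetic", 1.0),
    Level("word problems", 2.0),
]
history = run_curriculum(ToyModel(), levels)
for name, rounds in history:
    print(f"{name}: {rounds} training rounds before promotion")
```

The point of the gate is that harder levels are only introduced once measured performance on the current level clears the threshold, rather than on a fixed schedule.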

Stage 2: Reward Model Development

The heart of DeepSeek-R1's innovation lies in its reward modeling system. The team developed a specialized reward model trained on human preferences for different reasoning approaches. This wasn't simply about marking answers as correct or incorrect; instead, they collected detailed human feedback on:

The logical coherence of reasoning steps

The efficiency of solution paths

The clarity and completeness of explanations

The validity of intermediate conclusions

The reward model training involved pair-wise comparisons of different reasoning approaches for the same problems. Human evaluators, primarily mathematics and logic experts, rated these comparisons based on predefined quality criteria. This resulted in a reward model that could effectively distinguish between different levels of reasoning quality.
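Pair-wise preference training of this kind is commonly done with a Bradley-Terry style loss, which pushes the reward of the preferred response above that of the rejected one. The sketch below assumes that loss and a tiny linear reward model over two made-up features (coherence and efficiency scores); the article does not say which loss or architecture was actually used.

```python
import math
from typing import List, Sequence, Tuple

Pair = Tuple[Sequence[float], Sequence[float]]  # (preferred, rejected) features


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def reward(weights: Sequence[float], features: Sequence[float]) -> float:
    """Linear reward model: a weighted sum of the feature scores."""
    return sum(w * f for w, f in zip(weights, features))


def pairwise_loss(weights, preferred, rejected) -> float:
    """Bradley-Terry loss: -log sigmoid(r(preferred) - r(rejected))."""
    margin = reward(weights, preferred) - reward(weights, rejected)
    return -math.log(sigmoid(margin))


def train_reward_model(pairs: List[Pair], n_features: int,
                       lr: float = 0.5, epochs: int = 200) -> List[float]:
    weights = [0.0] * n_features
    for _ in range(epochs):
        for preferred, rejected in pairs:
            margin = reward(weights, preferred) - reward(weights, rejected)
            # Gradient of the loss w.r.t. weights is
            # -(1 - sigmoid(margin)) * (preferred - rejected).
            scale = 1.0 - sigmoid(margin)
            for i in range(n_features):
                weights[i] += lr * scale * (preferred[i] - rejected[i])
    return weights


# Hypothetical (coherence, efficiency) scores for pairs of reasoning attempts.
pairs: List[Pair] = [
    ([0.9, 0.6], [0.4, 0.5]),
    ([0.8, 0.9], [0.7, 0.2]),
    ([0.6, 0.7], [0.3, 0.3]),
]
weights = train_reward_model(pairs, n_features=2)
for preferred, rejected in pairs:
    print(round(reward(weights, preferred), 2), ">", round(reward(weights, rejected), 2))
```

In a real system the linear model would be replaced by a neural network that reads the full reasoning trace, but the training signal has the same shape: one preferred and one rejected attempt per comparison.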

How DeepSeek-R1 works

Stage 3: Reinforcement Learning

The final stage employs a modified version of Proximal Policy Optimization (PPO). The innovation here lies in how they adapted PPO for reasoning tasks. The process works as follows:

The model generates multiple reasoning attempts for each problem

The reward model evaluates each attempt based on multiple criteria

The policy is updated using a custom loss function that balances:

Reward maximization

KL divergence from the reference model

Entropy regularization to maintain exploration
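To make the three-way balance concrete, here is a minimal sketch of such an objective over a small discrete distribution of candidate reasoning steps. It is an illustration under assumptions: the coefficients `kl_coef` and `entropy_coef` and the toy numbers are invented, and PPO's clipped probability-ratio term is left out to keep the focus on the three terms listed above.

```python
import math
from typing import Sequence


def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """KL(p || q) for two discrete distributions over the same choices."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def entropy(p: Sequence[float]) -> float:
    return -sum(pi * math.log(pi) for pi in p if pi > 0)


def policy_loss(policy, reference, rewards,
                kl_coef: float = 0.1, entropy_coef: float = 0.01) -> float:
    """Negative of: expected reward - kl_coef * KL + entropy_coef * entropy."""
    expected_reward = sum(p * r for p, r in zip(policy, rewards))
    objective = (expected_reward
                 - kl_coef * kl_divergence(policy, reference)
                 + entropy_coef * entropy(policy))
    return -objective


reference = [0.25, 0.25, 0.25, 0.25]  # frozen reference model
rewards = [1.0, 0.2, 0.0, 0.5]        # hypothetical reward-model scores
greedy = [1.0, 0.0, 0.0, 0.0]         # policy collapsed onto the best choice

# With a weak KL penalty, collapsing onto the best choice lowers the loss;
# with a strong one, drifting that far from the reference is penalized.
print(policy_loss(greedy, reference, rewards, kl_coef=0.1))
print(policy_loss(greedy, reference, rewards, kl_coef=2.0))
```

The KL coefficient controls how far the policy may move from the reference model in pursuit of reward, while the entropy term discourages it from collapsing onto a single reasoning path too early.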

A key innovation is their implementation of intermediate rewards. Rather than waiting for the final answer, the model receives feedback on each step of its reasoning process. This creates a more granular learning signal, helping the model understand which specific reasoning steps are effective.
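One way to see why step-level feedback gives a finer signal is to compare the return credited to each step under sparse and dense rewards. The sketch below uses the standard discounted reward-to-go; the four-step trace and its reward values are made up for illustration.

```python
from typing import List, Sequence


def returns_to_go(step_rewards: Sequence[float], gamma: float = 1.0) -> List[float]:
    """Return credited to each step: its own reward plus discounted later rewards."""
    returns: List[float] = []
    running = 0.0
    for r in reversed(step_rewards):
        running = r + gamma * running
        returns.append(running)
    returns.reverse()
    return returns


# Sparse: only the final answer is scored, so every step gets identical credit.
sparse = [0.0, 0.0, 0.0, 1.0]
# Dense: each reasoning step is scored; step 2 is a hypothetical misstep.
dense = [0.3, -0.2, 0.4, 1.0]

print(returns_to_go(sparse))
print(returns_to_go(dense))
```

With the sparse trace every step receives the same return, so the model cannot tell a good step from a bad one. With the dense trace the weak second step is directly penalized, which is the granular signal the paragraph above describes.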

The Evolution of DeepSeek-R1

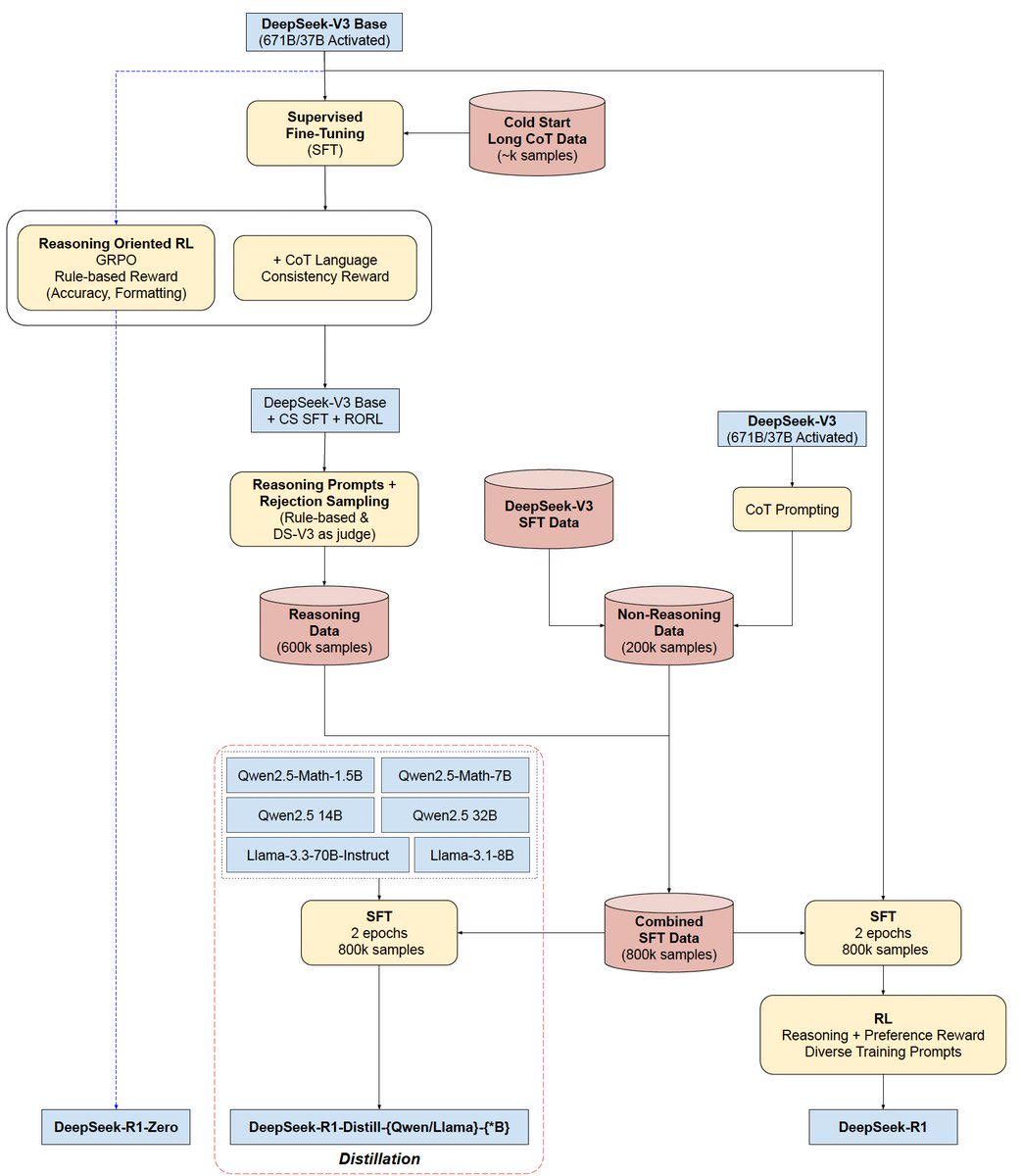

DeepSeek-R1's development is notable for how the model progressed over time. It started with DeepSeek-V3-Base and used GRPO for reinforcement learning, which led to a new model, DeepSeek-R1-Zero. Over the course of training, DeepSeek-R1-Zero's performance on AIME 2024 improved from 15.6% to 71.0%. With majority voting, it rose to 86.7%, matching OpenAI-o1-0912's performance.

The team then refined the model by:

Creating new training data through rejection sampling

Combining it with DeepSeek-V3's supervised data on writing, factual QA, and self-awareness

Retraining the base model

Doing more reinforcement learning

This careful process led to the final DeepSeek-R1 model, which performed as well as OpenAI-o1-1217.

A key achievement was making the technology more accessible through model distillation:

They successfully transferred capabilities to smaller models based on Qwen and Llama

Their 14B distilled model outperformed the leading open-source QwQ-32B-Preview

The 32B and 70B distilled versions set new records for reasoning abilities
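Two ideas from this section lend themselves to a short sketch: GRPO's group-relative scoring and majority voting at evaluation time. As commonly described, GRPO samples several answers per prompt and scores each one relative to the group's mean and spread, which removes the need for a separate value network. The code below assumes population standard deviation and a small `eps` for stability, and the sample answers are invented; it is not DeepSeek's implementation.

```python
from collections import Counter
from statistics import mean, pstdev
from typing import List, Sequence


def group_relative_advantages(rewards: Sequence[float], eps: float = 1e-8) -> List[float]:
    """Normalize each sampled answer's reward against its own group."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def majority_vote(answers: Sequence[str]) -> str:
    """Return the most frequent final answer among the samples."""
    return Counter(answers).most_common(1)[0][0]


# Four sampled answers to one prompt, rewarded 1.0 if correct, else 0.0.
advantages = group_relative_advantages([1.0, 0.0, 0.0, 1.0])
print([round(a, 3) for a in advantages])

# Hypothetical sampled answers for three problems, with reference answers.
samples = [
    ["42", "42", "41", "42"],
    ["7", "8", "7", "7"],
    ["10", "12", "12", "11"],
]
gold = ["42", "7", "10"]

per_sample_acc = mean(
    [mean([1.0 if a == g else 0.0 for a in answers]) for answers, g in zip(samples, gold)]
)
voted_acc = mean(
    [1.0 if majority_vote(answers) == g else 0.0 for answers, g in zip(samples, gold)]
)
print(f"per-sample accuracy: {per_sample_acc:.3f}, majority-vote accuracy: {voted_acc:.3f}")
```

Majority voting helps when the correct answer is the most common one among the samples, and it cannot rescue a problem where the model is consistently wrong, as the third toy problem shows.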

Implementation Details

DeepSeek-R1 training pipeline

The implementation required significant computational resources:

Training was conducted on a cluster of A100 GPUs

The reward model training alone took several weeks

The full training pipeline required multiple iterations and refinements

The team implemented several optimizations:

Distributed training across multiple nodes

Efficient reward computation

Dynamic batch sizing based on problem complexity

Gradient accumulation for stability
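Gradient accumulation, the last optimization in the list, can be shown on a toy problem: gradients from several small micro-batches are summed before a single weight update, which reproduces the gradient of one large batch without holding it in memory at once. The one-parameter linear model, the data, and the learning rate below are assumptions chosen only to keep the arithmetic easy to follow.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y)


def mse_gradient(w: float, batch: List[Sample]) -> float:
    """d/dw of mean((w * x - y) ** 2) over the batch."""
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)


def accumulated_gradient(w: float, data: List[Sample], micro_batch_size: int) -> float:
    """Process the data in micro-batches and accumulate one combined gradient."""
    grad = 0.0
    for start in range(0, len(data), micro_batch_size):
        micro_batch = data[start:start + micro_batch_size]
        # Weight each micro-batch by its share of the samples so the sum
        # equals the full-batch mean, even if the last batch is smaller.
        grad += mse_gradient(w, micro_batch) * len(micro_batch) / len(data)
    return grad


data: List[Sample] = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]  # y = 2x

full = mse_gradient(0.0, data)
accumulated = accumulated_gradient(0.0, data, micro_batch_size=2)
print(full, accumulated)

# One optimizer step per accumulated gradient, not per micro-batch.
w = 0.0
for _ in range(20):
    w -= 0.05 * accumulated_gradient(w, data, micro_batch_size=2)
print(round(w, 6))
```

The update is applied once per accumulated gradient, so the effective batch size is the full dataset even though only two samples are processed at a time.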

Evaluation Framework

The evaluation of DeepSeek-R1 is particularly comprehensive, going beyond simple accuracy metrics. The team developed a multi-dimensional evaluation framework:

Reasoning Quality Assessment

The model's performance is evaluated on:

Mathematical reasoning (using GSM8K and MATH benchmarks)

Logical deduction (using LogiQA and ProofWriter)

Scientific reasoning (using ScienceQA)

General problem-solving (using custom benchmarks)

Each solution is evaluated for step-by-step coherence, logical validity, efficiency of approach, and clarity of explanation. The team also performed extensive comparisons with previous versions of DeepSeek models, other leading language models, and specialized reasoning models.

Performance comparison of DeepSeek-R1 with other models

What's particularly noteworthy is the quality of the reasoning processes. The model doesn't just arrive at correct answers; it demonstrates human-like problem-solving approaches, showing each step clearly and logically.

Challenges and Solutions

The DeepSeek team faced several challenges. Here is a quick summary of each challenge and the solution used to overcome it.

Training Stability: Implementation of reference models and careful KL divergence constraints

Reward Sparsity: Development of dense reward signals through intermediate evaluations

Computational Efficiency: Custom optimizations in the training pipeline and reward computation

Generalization: Careful curriculum design and diverse training data
