Core research improving LLM performance

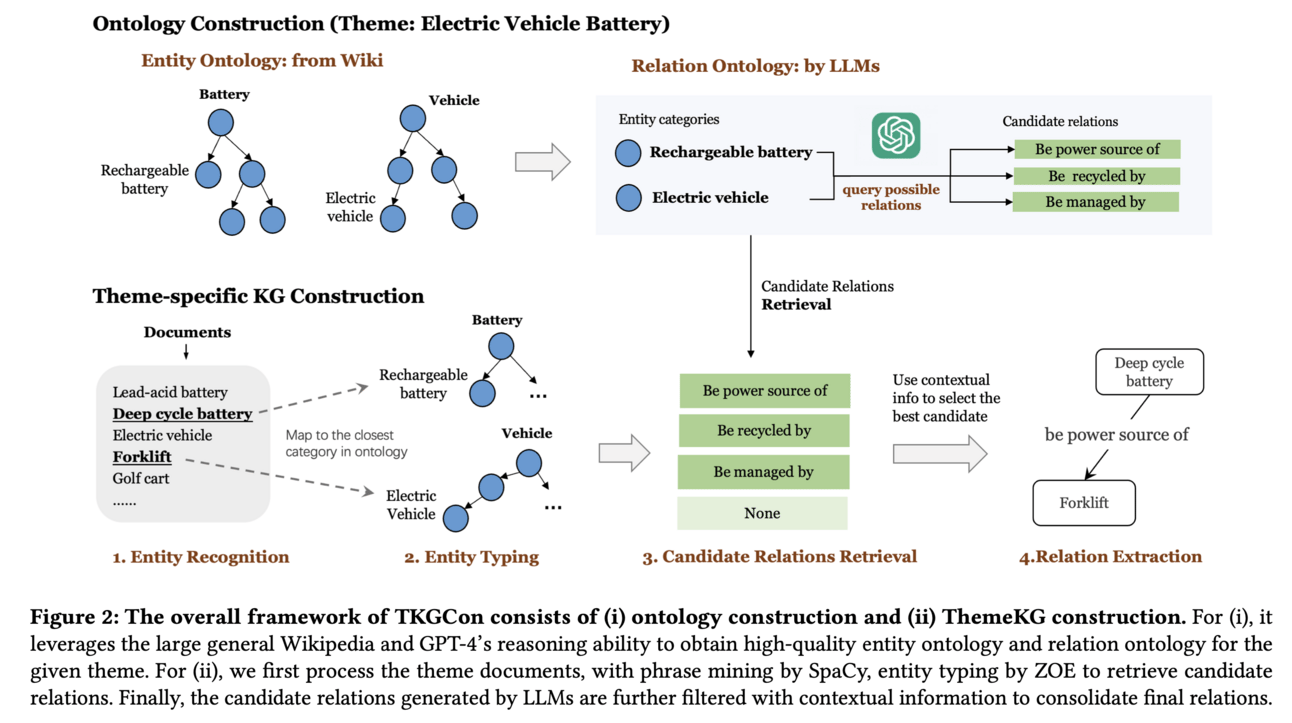

🤔Problem?: The research paper addresses the challenges faced by existing knowledge graphs (KGs) in terms of information granularity and timeliness. These challenges hinder the retrieval and analysis of in-context, fine-grained, and up-to-date knowledge from KGs, particularly in specialized themes and rapidly evolving contexts.

💻Proposed solution:

To solve these challenges, the research paper proposes a theme-specific knowledge graph (ThemeKG) and an unsupervised framework for its construction (TKGCon). The framework takes a raw theme-specific corpus and generates a high-quality KG in two stages. It first builds an entity ontology from Wikipedia and uses large language models to generate candidate relations. It then parses the documents in the corpus and consolidates the extracted candidates with their context and the ontology to produce accurate entities and relations.

📊Results:

The paper reports that TKGCon outperforms GPT-4 and other KG construction baselines in its evaluations.

🤔Problem?: The research paper addresses the issue of slow inference speeds of large language models in a production environment.

💻Proposed solution: The research paper introduces novel speculative decoding draft models. These models condition draft predictions on both context vectors and sampled tokens, allowing them to efficiently predict high-quality n-grams. The base model then accepts or rejects these predictions, so multiple tokens can be produced in each inference forward pass. This accelerates wall-clock inference speed by 2-3x.
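
The accept/reject loop described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the paper's implementation: it uses greedy verification, the two "models" are toy deterministic functions (`toy_base_next` and `toy_draft_next` are hypothetical names), and the conditioning of the draft on context vectors is not modelled at all.

```python
from typing import Callable, List, Tuple

# A "model" here is just a function from a token prefix to the next token.
NextToken = Callable[[List[int]], int]


def speculative_step(prefix: List[int], draft_next: NextToken,
                     base_next: NextToken, k: int) -> List[int]:
    """Draft k tokens, then keep the longest prefix the base model agrees with."""
    proposal: List[int] = []
    for _ in range(k):
        proposal.append(draft_next(prefix + proposal))

    accepted: List[int] = []
    for tok in proposal:
        # In a real system the base model scores all k positions in one
        # batched forward pass; this sketch calls it once per position.
        expected = base_next(prefix + accepted)
        if tok != expected:
            accepted.append(expected)  # replace the rejected token and stop
            return accepted
        accepted.append(tok)
    # Every draft token was accepted, so the base model adds one more token.
    accepted.append(base_next(prefix + accepted))
    return accepted


def generate(prompt: List[int], draft_next: NextToken, base_next: NextToken,
             k: int, max_new: int) -> Tuple[List[int], int]:
    """Return the generated sequence and the number of verification steps."""
    out = list(prompt)
    steps = 0
    while len(out) - len(prompt) < max_new:
        out.extend(speculative_step(out, draft_next, base_next, k))
        steps += 1
    return out[:len(prompt) + max_new], steps


# Toy models (assumptions for illustration): the base model counts upward
# modulo 10, and the draft model agrees except right after the token 4.
def toy_base_next(ctx: List[int]) -> int:
    return (ctx[-1] + 1) % 10


def toy_draft_next(ctx: List[int]) -> int:
    return 0 if ctx[-1] == 4 else (ctx[-1] + 1) % 10
```

With greedy verification the output is identical to decoding with the base model alone. The speedup comes only from needing fewer base-model steps when the draft is usually right.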

🤔Problem?: The research paper addresses the suboptimal performance of open-source implementations of Proximal Policy Optimization (PPO) within the Reinforcement Learning from Human Feedback (RLHF) framework, particularly when rewards are sparse and assigned at the sentence level.

💻Proposed solution: The research paper proposes a framework that models RLHF problems as a Markov decision process (MDP), allowing for finer-grained token-wise information to be captured. This is achieved through an algorithm called Reinforced Token Optimization (RTO), which learns the token-wise reward function from preference data and performs policy optimization based on this learned token-wise reward signal. Additionally, the research paper integrates Direct Preference Optimization (DPO) and PPO in the practical implementation of RTO. DPO provides a token-wise characterization of response quality, which is incorporated into the subsequent PPO training stage.
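
To make the "token-wise characterization" concrete, here is a minimal sketch of how per-token rewards could be derived from a DPO-trained policy. It assumes the standard DPO implicit reward, beta times the log-probability ratio between the DPO policy and the reference policy, applied to each token. The function name, the `beta` default, and the optional `terminal_reward` term are assumptions for illustration and are not taken from the paper.

```python
from typing import List


def tokenwise_rewards(logp_dpo: List[float], logp_ref: List[float],
                      beta: float = 0.1,
                      terminal_reward: float = 0.0) -> List[float]:
    """Per-token reward: beta * (log pi_dpo(a_t|s_t) - log pi_ref(a_t|s_t)).

    logp_dpo and logp_ref hold the log-probabilities that the DPO policy and
    the reference policy assign to each token of the same response.
    terminal_reward is a hypothetical sentence-level score added to the last
    token only.
    """
    if len(logp_dpo) != len(logp_ref):
        raise ValueError("log-probability lists must have the same length")
    rewards = [beta * (d - r) for d, r in zip(logp_dpo, logp_ref)]
    if rewards:
        rewards[-1] += terminal_reward
    return rewards
```

A PPO stage would then consume these dense rewards in place of a single score at the end of the response, so tokens the DPO policy favours more than the reference does receive positive credit.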

LLM evaluations

🔗Benchmark: https://www.CLUEbenchmarks.com

The research paper proposes the SuperCLUE-Fin (SC-Fin) benchmark, which uses multi-turn, open-ended conversations to assess financial large language models (FLMs) across six financial application domains and twenty-five specialized tasks. It measures models on criteria such as financial understanding, logical reasoning, computational efficiency, and compliance with Chinese regulations. The benchmark gives FLMs a contextually relevant evaluation framework, intended to support their development and responsible deployment in the Chinese financial sector.

📚Survey papers

🧯Let’s make LLMs safe!!

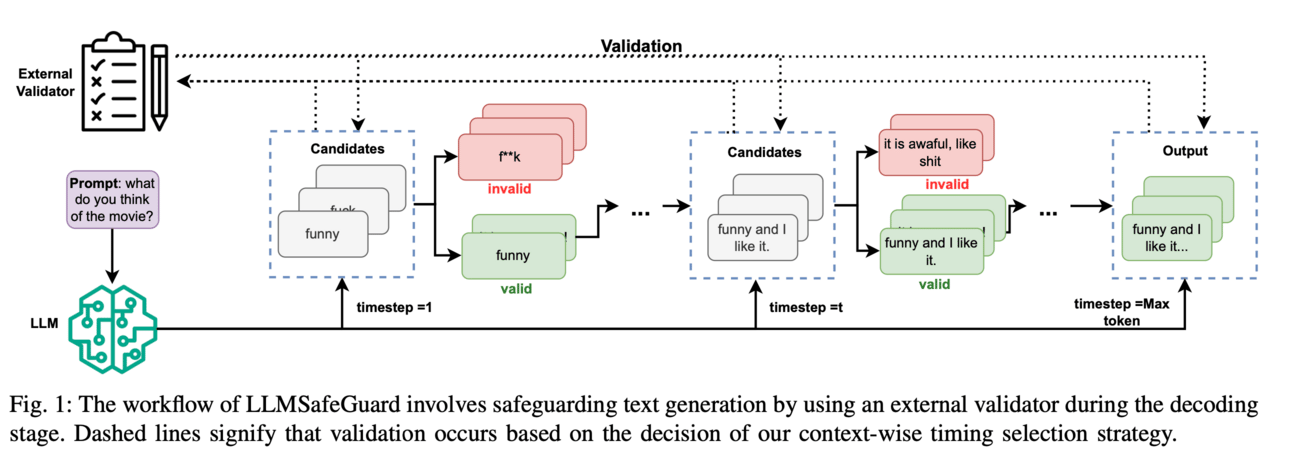

🤔Problem?: The research paper addresses the ethical and societal risks posed by LLMs, which have the ability to generate harmful content.

💻Proposed solution: The research paper proposes a lightweight framework called LLMSafeGuard to safeguard LLM text generation in real time. It integrates an external validator into the beam search algorithm during decoding, rejecting candidates that violate safety constraints while allowing valid ones to proceed. This approach makes new constraints simple to introduce and removes the need to train dedicated control models. LLMSafeGuard also employs a context-wise timing selection strategy, so the validator intervenes in generation only when necessary.
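
The idea of a validator inside beam search can be sketched as follows. This is a simplified illustration, not LLMSafeGuard itself: `toy_step_scores` and `toy_is_valid` are hypothetical stand-ins for the LLM and the external validator, the validator runs at every step, and the paper's context-wise timing selection is not modelled.

```python
from typing import Callable, Dict, List, Tuple

Sequence = Tuple[str, ...]


def guarded_beam_search(step_scores: Callable[[Sequence], Dict[str, float]],
                        is_valid: Callable[[Sequence], bool],
                        beam_width: int,
                        max_len: int) -> List[Tuple[Sequence, float]]:
    """Beam search that drops any candidate the validator rejects."""
    beams: List[Tuple[Sequence, float]] = [((), 0.0)]
    for _ in range(max_len):
        candidates: List[Tuple[Sequence, float]] = []
        for seq, score in beams:
            for token, logp in step_scores(seq).items():
                cand = seq + (token,)
                if is_valid(cand):  # external validator gates each candidate
                    candidates.append((cand, score + logp))
        if not candidates:
            break  # every continuation was rejected; keep the previous beams
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = candidates[:beam_width]
    return beams


# Toy stand-ins (assumptions): the "model" always prefers the token "bad",
# and the validator rejects any sequence that contains it.
def toy_step_scores(seq: Sequence) -> Dict[str, float]:
    return {"good": -0.7, "bad": -0.1, "ok": -1.2}


def toy_is_valid(seq: Sequence) -> bool:
    return "bad" not in seq
```

Because rejection happens during decoding, the unsafe candidate never enters a beam, even though the toy model scores it highest at every step.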

🌈 Creative ways to use LLMs!!

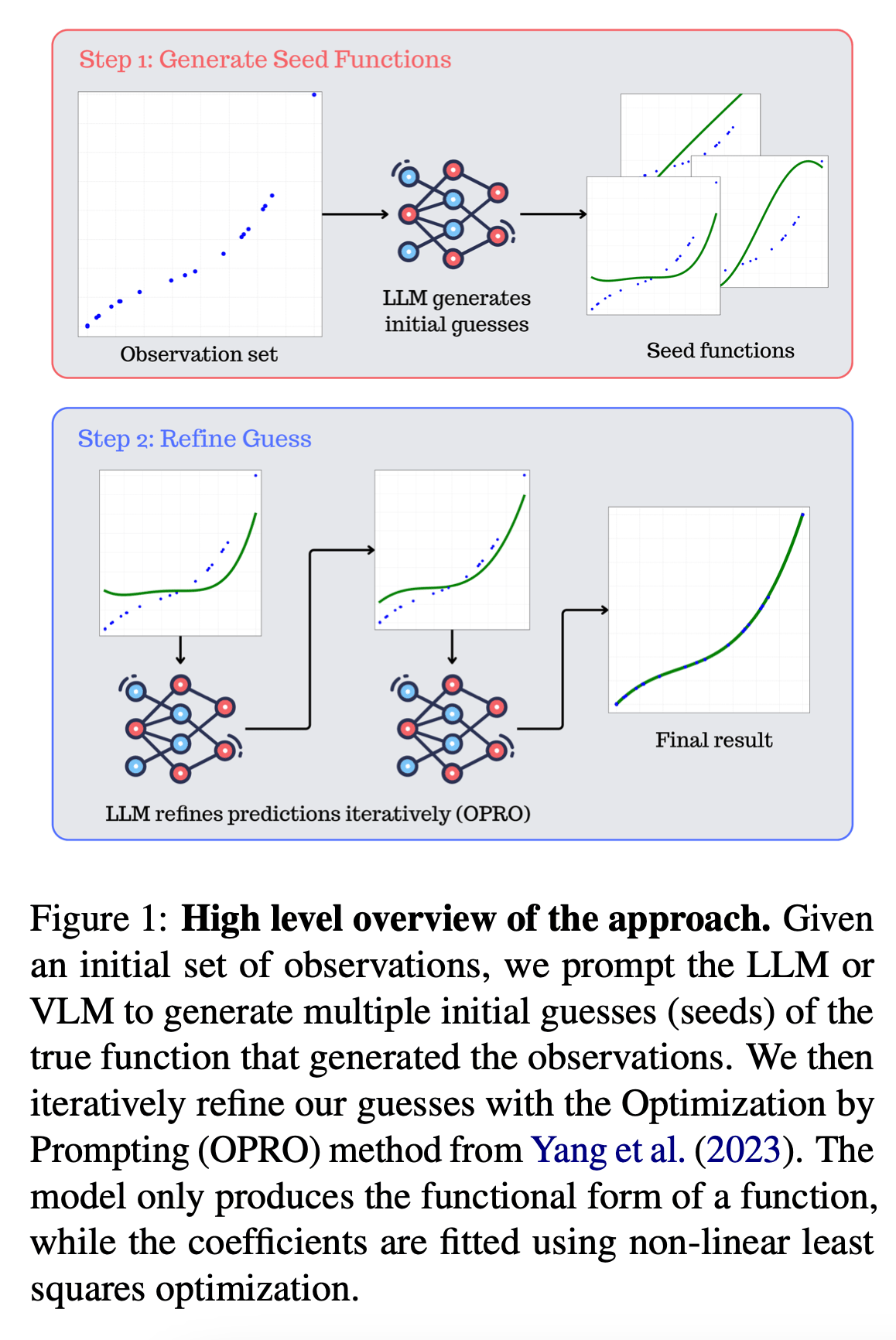

🤔Problem?: The research paper addresses the task of Symbolic Regression, which involves extracting the underlying mathematical expression from a set of empirical observations. This task is important for understanding and interpreting complex data, and has applications in various fields such as physics, engineering, and machine learning.

💻Proposed solution: The research paper proposes to solve the problem of Symbolic Regression by integrating pre-trained LLMs into the SR pipeline. This is achieved through an iterative process where the LLMs suggest possible functions based on the observations, and then these functions are refined by the model and an external optimizer until satisfactory results are obtained. The addition of visual inputs such as plots also aids in the optimization process.
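
The propose-then-fit loop can be illustrated with a small sketch. Everything here is an assumption made for illustration: `propose` is a hard-coded stand-in for the LLM call, the "external optimizer" is a coarse grid search over two parameters, and the visual inputs mentioned above are left out.

```python
import itertools
from typing import Callable, List, Optional, Tuple

Form = Callable[[float, float, float], float]  # f(x, a, b)
Record = Tuple[str, Tuple[float, float], float]  # (name, (a, b), error)

# Candidate functional forms the stand-in "LLM" can suggest.
CANDIDATES: List[Tuple[str, Form]] = [
    ("a*x + b", lambda x, a, b: a * x + b),
    ("a*x^2 + b", lambda x, a, b: a * x * x + b),
]
GRID = [i * 0.5 for i in range(-10, 11)]  # parameter values from -5.0 to 5.0


def mse(f: Form, a: float, b: float, xs: List[float], ys: List[float]) -> float:
    return sum((f(x, a, b) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def fit(f: Form, xs: List[float], ys: List[float]) -> Tuple[Tuple[float, float], float]:
    """External optimizer: exhaustive grid search over (a, b)."""
    best = min(itertools.product(GRID, GRID),
               key=lambda p: mse(f, p[0], p[1], xs, ys))
    return best, mse(f, best[0], best[1], xs, ys)


def propose(history: List[Record]) -> Optional[Tuple[str, Form]]:
    """Stand-in for the LLM: suggest a form that has not been tried yet."""
    tried = {name for name, _, _ in history}
    for name, f in CANDIDATES:
        if name not in tried:
            return name, f
    return None


def symbolic_regression(xs: List[float], ys: List[float],
                        max_rounds: int = 5, tol: float = 1e-9) -> Record:
    history: List[Record] = []
    for _ in range(max_rounds):
        proposal = propose(history)
        if proposal is None:
            break
        name, f = proposal
        params, err = fit(f, xs, ys)
        history.append((name, params, err))
        if err <= tol:  # satisfactory fit found, stop iterating
            break
    return min(history, key=lambda h: h[2])
```

In the setting described above, the history of tried forms and their errors would be fed back into the LLM prompt so the next suggestion can improve on earlier ones. Here `propose` only uses it to avoid repeats.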

🤔Problem?:

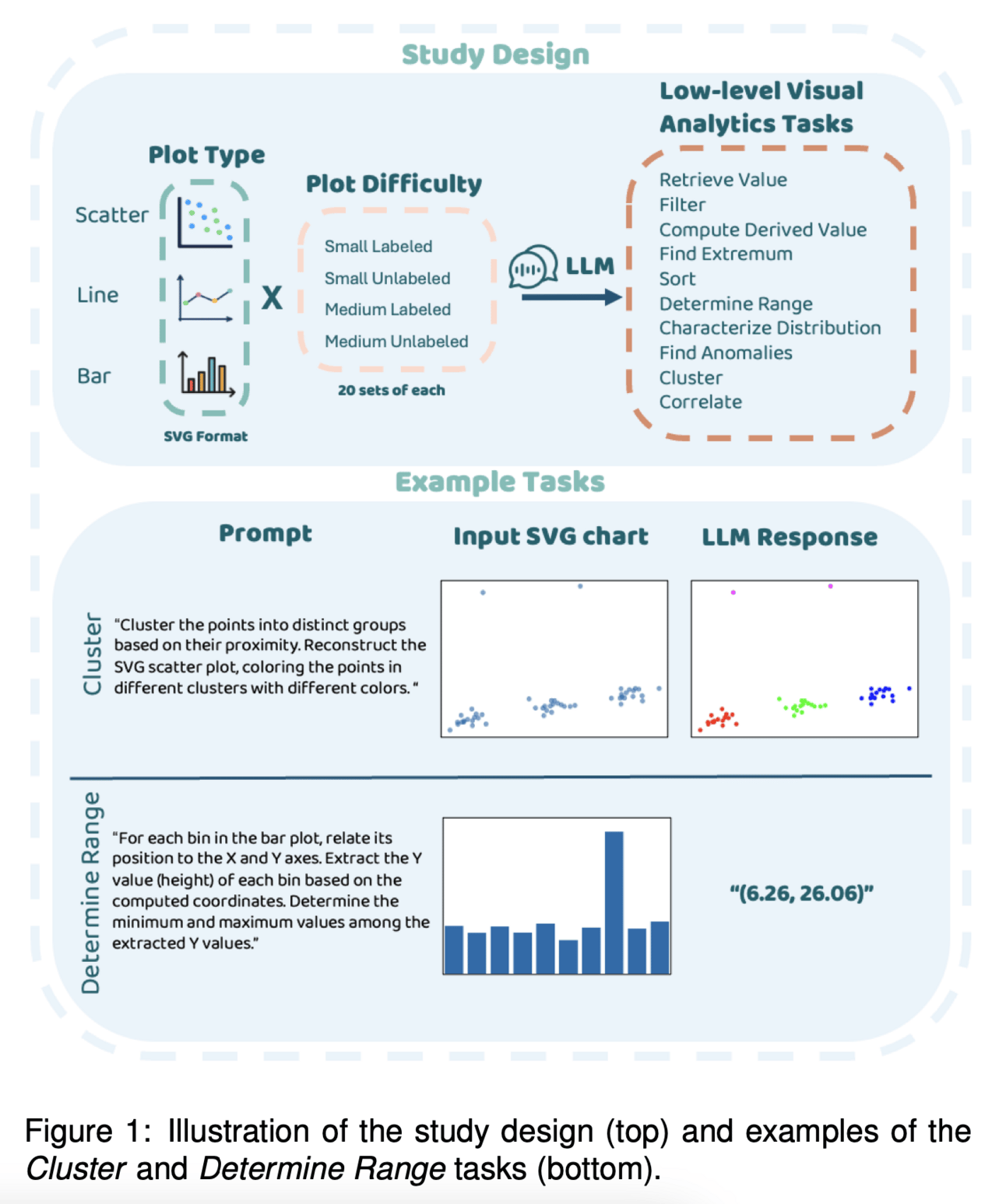

The research paper addresses the way that differing levels of data literacy and experience make it hard for users to extract insights from datasets through data visualizations.

💻Proposed solution:

The paper proposes the use of large language models (LLMs) to lower barriers for users to achieve visual analytic tasks by leveraging the text-based image format of Scalable Vector Graphics (SVG). This is accomplished by instructing the models through zero-shot prompts to provide responses or modify SVG code based on given visualizations.

Generative Agents

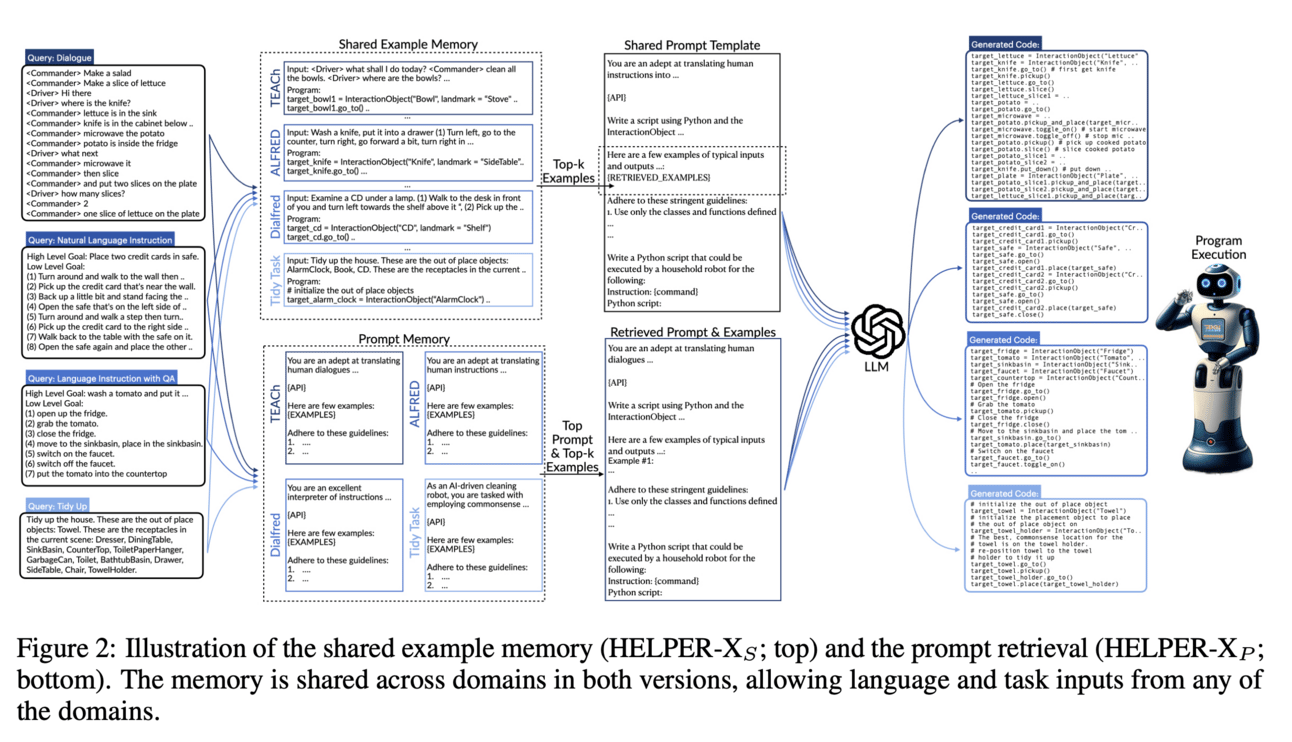

🤔Problem?: The research paper addresses the problem of improving the performance of instructable agents, specifically in the context of inferring correct actions and task plans from input instructions.

💻Proposed solution: The research paper proposes to solve this problem by using memory-augmented LLMs as task planners, which retrieve relevant language-program examples and use them as in-context examples in the LLM prompt. This helps to improve the performance of the LLM in inferring the correct action and task plans. Additionally, the paper expands the capabilities of the HELPER agent by integrating additional APIs for asking questions and expanding its memory with a wider array of examples and prompts. This allows the agent to work across multiple domains and achieve state-of-the-art performance without requiring in-domain training.
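
The retrieval step can be sketched as below. This is a minimal illustration under stated assumptions: word-overlap (Jaccard) similarity stands in for whatever retriever the agent actually uses, and the memory entries, program strings, and prompt layout are all hypothetical.

```python
from typing import List, Tuple

Example = Tuple[str, str]  # (instruction, program)

# Hypothetical memory of language-program pairs.
MEMORY: List[Example] = [
    ("wash the mug in the sink", 'go_to("sink"); clean("mug")'),
    ("slice the tomato", 'pick_up("knife"); slice("tomato")'),
    ("put the mug on the table", 'pick_up("mug"); place("mug", "table")'),
]


def jaccard(a: str, b: str) -> float:
    """Word-overlap similarity between two instructions."""
    sa, sb = set(a.lower().split()), set(b.lower().split())
    union = sa | sb
    return len(sa & sb) / len(union) if union else 0.0


def retrieve(memory: List[Example], instruction: str, k: int) -> List[Example]:
    """Return the k stored examples most similar to the new instruction."""
    ranked = sorted(memory, key=lambda ex: jaccard(ex[0], instruction),
                    reverse=True)
    return ranked[:k]


def build_prompt(memory: List[Example], instruction: str, k: int = 2) -> str:
    """Place retrieved examples before the new instruction as in-context shots."""
    shots = [f"Instruction: {text}\nProgram: {program}"
             for text, program in retrieve(memory, instruction, k)]
    shots.append(f"Instruction: {instruction}\nProgram:")
    return "\n\n".join(shots)
```

Calling `build_prompt(MEMORY, "wash the mug")` yields a prompt whose examples are the two mug-related entries, with the new instruction left open for the LLM to complete.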