Dear subscribers,

A research day dominated by Bayesian models! Learn how researchers are combining Bayesian models with LLMs to improve model performance.

🤔Problem?:

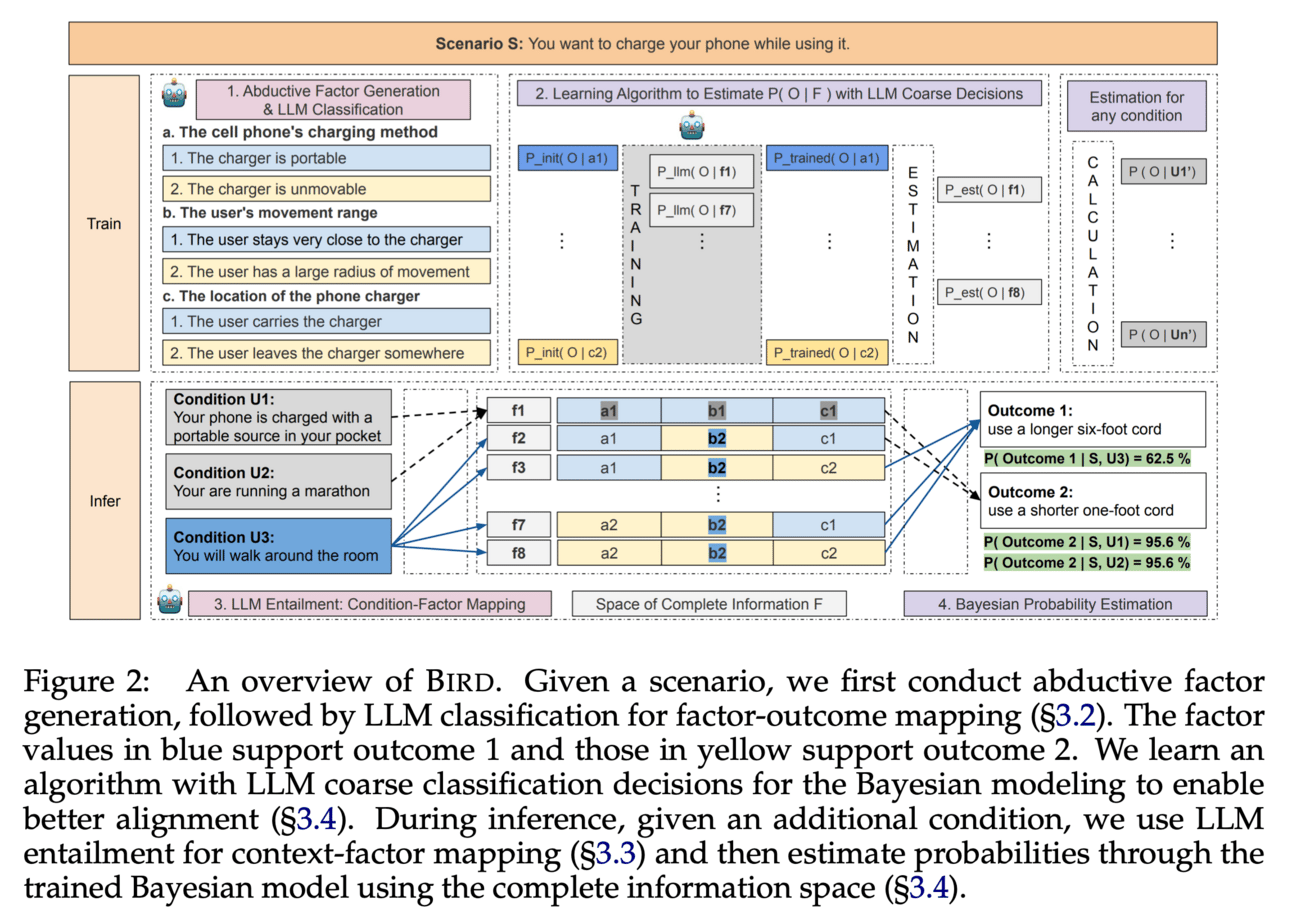

The research paper aims to address the issue of unreliable decision-making by large language models when applied to real-world tasks.

💻Proposed solution:

The proposed solution, called BIRD, is a Bayesian inference framework that incorporates abductive factors, LLM entailment, and learnable deductive Bayesian modeling to provide controllable and interpretable probability estimation for model decisions. BIRD works by considering contextual and conditional information, as well as human judgments, to enhance the reliability of decision-making.
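To make the Bayesian step more concrete, here is a minimal sketch of how per-factor estimates could be combined into one outcome probability. It is not the paper's implementation: the function name `outcome_probability`, the example factors, the uniform prior, and the conditional-independence assumption are all illustrative choices.

```python
from math import prod

def outcome_probability(factor_likelihoods):
    """Combine per-factor estimates P(outcome | factor value) for a binary outcome.

    Assumes the factors are conditionally independent and the prior over the
    two outcomes is uniform, so the estimates combine as a normalized product.
    Raises ZeroDivisionError if the estimates contradict each other completely
    (for example one factor at 0.0 and another at 1.0).
    """
    support = prod(factor_likelihoods)
    against = prod(1.0 - p for p in factor_likelihoods)
    return support / (support + against)

# Hypothetical factor values that an entailment step judged implied by the context,
# each paired with a hypothetical learned estimate of P(outcome | factor value).
implied = {"weather=rain": 0.8, "distance=short": 0.6}
p = outcome_probability(list(implied.values()))
print(round(p, 3))
```

Because every factor contributes an explicit number, it is easy to see which factor pushed the final estimate up or down, which is the kind of interpretability the summary describes.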

📊Results:

The paper reports that BIRD outperforms GPT-4, the state-of-the-art baseline, by 35% in aligning probability estimates with human judgments, a significant improvement in decision-making reliability for large language models. It also demonstrates that BIRD applies directly to real-world applications.

🤔Problem?:

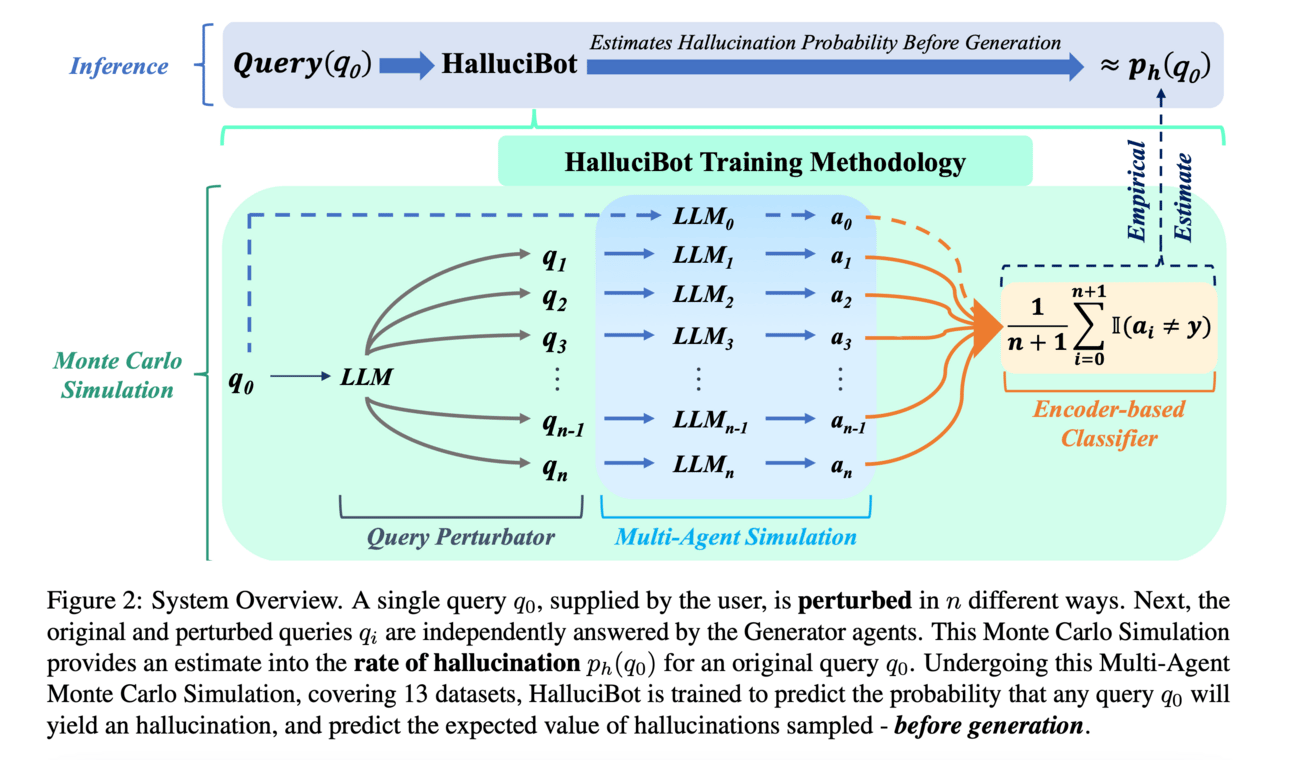

The research paper addresses hallucination, a critical challenge for the institutional adoption of Large Language Models (LLMs). Hallucination refers to the generation of inaccurate or false information by LLMs, which can have serious consequences in real-world applications.

💻Proposed solution:

The research paper proposes HalluciBot, a model that predicts the probability of hallucination before generation, for any query posed to an LLM. At inference time the model generates no outputs; at train time it uses a Multi-Agent Monte Carlo Simulation and a Query Perturbator to craft variations of each query. The Query Perturbator is designed around a new definition of hallucination, called "truthful hallucination," which takes into account the accuracy of the information being generated. HalluciBot is trained on a large dataset of queries and can predict both binary and multi-class probabilities of hallucination, providing a means to judge the quality of a query before generation.
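The train-time labelling idea can be sketched as a Monte Carlo estimate: sample answers for several perturbed versions of a query and count how often they miss the reference. The sketch below is illustrative only; the helper names, the exact-match comparison, and the 0.5 threshold are assumptions, not details from the paper.

```python
def hallucination_rate(answers, reference):
    """Fraction of sampled answers that disagree with the reference answer.

    Uses case-insensitive exact match, which is a simplifying assumption.
    """
    if not answers:
        raise ValueError("need at least one sampled answer")
    target = reference.strip().lower()
    wrong = sum(1 for a in answers if a.strip().lower() != target)
    return wrong / len(answers)

def binary_label(rate, threshold=0.5):
    """Collapse the estimated rate into a binary training label (threshold is hypothetical)."""
    return int(rate >= threshold)

# Hypothetical answers sampled for five perturbed variants of one query
sampled = ["Paris", "paris", "Lyon", "Paris ", "Marseille"]
rate = hallucination_rate(sampled, "Paris")
label = binary_label(rate)
print(rate, label)
```

A classifier trained on (query, label) pairs built this way would only need the query text at inference time, which matches the "no generation at inference" property described above.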

📊Results:

This summary has no specific performance figures for HalluciBot. In principle, predicting hallucination before generation could significantly reduce the amount of false information generated by LLMs. This can potentially improve

🤔Problem?:

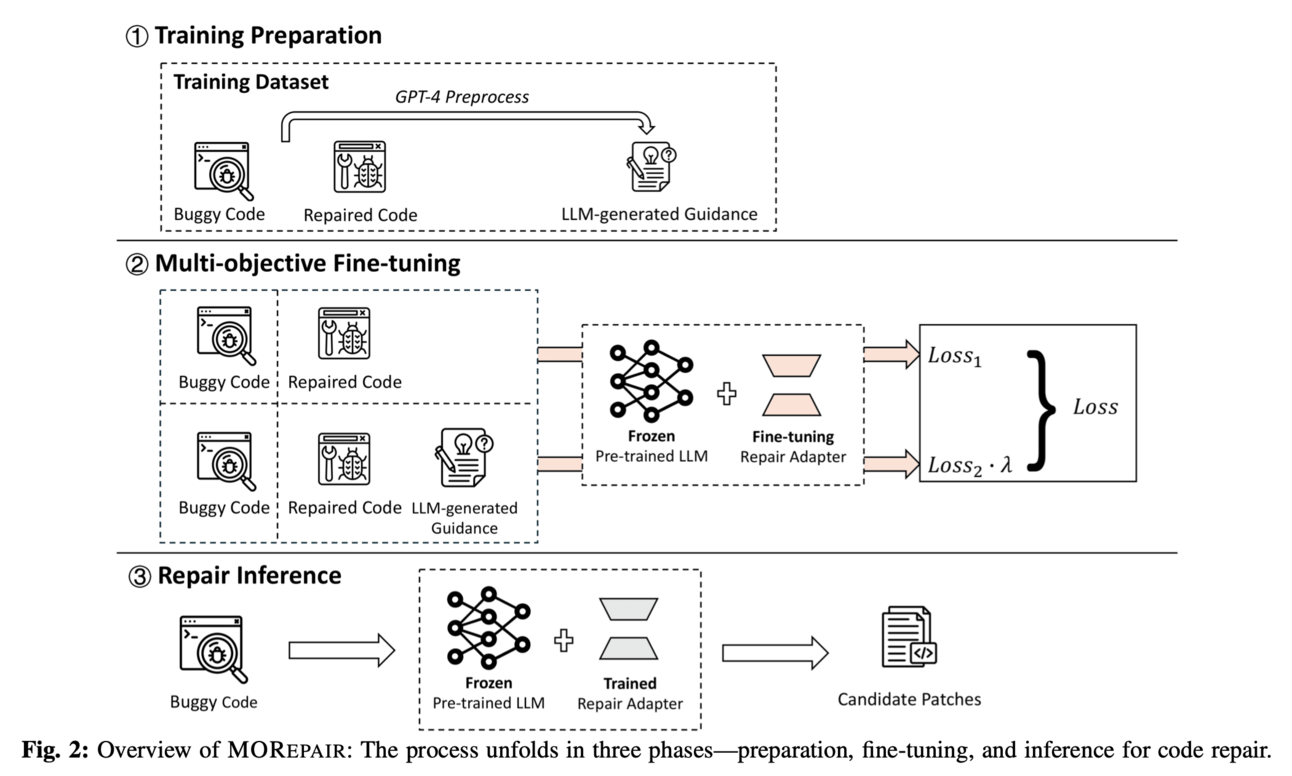

The research paper addresses the problem of fine-tuning large language models (LLMs) for program repair tasks, specifically the need to reason about the logic behind code changes beyond syntactic patterns in the data.

💻Proposed solution:

The research paper proposes a novel perspective on LLM fine-tuning for program repair, which involves not only adapting the LLM parameters to the syntactic nuances of the task, but also specifically fine-tuning the LLM with respect to the logical reason behind the code change in the training data. This multi-objective fine-tuning approach aims to instruct LLMs to generate high-quality patches. The proposed method, called MORepair, is applied to four open-source LLMs with different sizes and architectures, and experimental results show that it effectively boosts LLM repair performance.
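One simple way to picture a multi-objective fine-tuning loss is as a weighted sum of two terms, one for the patch tokens and one for the tokens of the accompanying explanation. The sketch below assumes that form; the function names and the `rationale_weight` parameter are hypothetical, and the paper may combine its objectives differently.

```python
import math

def token_nll(probs_of_target_tokens):
    """Mean negative log-likelihood of the probabilities the model gave to the target tokens."""
    return -sum(math.log(p) for p in probs_of_target_tokens) / len(probs_of_target_tokens)

def multi_objective_loss(patch_probs, rationale_probs, rationale_weight=1.0):
    """Weighted sum of the patch loss and the rationale loss (weighting scheme is an assumption)."""
    return token_nll(patch_probs) + rationale_weight * token_nll(rationale_probs)

# Hypothetical per-token probabilities for a fixed patch and its natural-language rationale
loss = multi_objective_loss([0.9, 0.8, 0.95], [0.6, 0.7], rationale_weight=0.5)
print(round(loss, 4))
```

Setting `rationale_weight` to zero recovers ordinary patch-only fine-tuning, which makes the contribution of the second objective easy to ablate.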

📊Results:

The research paper reports a performance improvement of 7.6% to 10% in Top-10 repair suggestions on C++ and Java repair benchmarks when using MORepair to fine-tune LLMs. It is also shown to outperform the incumbent state-of-the-art fine-tuned models for program repair, Fine-tune-CoT and RepairLLaMA.

🤔Problem?:

The research paper addresses the problem of leveraging the complementary strengths of large language models (LLMs) by ensembling them to push the frontier of natural language processing tasks.

💻Proposed solution:

The paper proposes a training-free ensemble framework called DEEPEN, which averages the probability distributions output by different LLMs. It addresses the vocabulary discrepancy between heterogeneous LLMs by mapping each model's probability distribution to a universe relative space and aggregating there. The result is then mapped back to the probability space of one LLM via a search-based inverse transformation to determine the generated token.
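The following toy sketch illustrates the general idea of averaging in a shared relative space, under loud assumptions: tokens are represented by their cosine similarity to a few anchor tokens that both vocabularies share, and the inverse step is replaced by a plain nearest-token lookup rather than the search-based inverse transformation the paper describes. All names, embeddings, and probabilities are made up.

```python
import math

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))

def relative_matrix(embeddings, anchor_ids):
    """Row i holds the cosine similarity of token i to each anchor token."""
    return [[cosine(e, embeddings[a]) for a in anchor_ids] for e in embeddings]

def to_relative(probs, rel):
    """Map a next-token distribution to relative space as a probability-weighted sum of rows."""
    return [sum(p * row[k] for p, row in zip(probs, rel)) for k in range(len(rel[0]))]

def average(vectors):
    return [sum(col) / len(vectors) for col in zip(*vectors)]

def nearest_token(target, rel):
    """Simplified stand-in for the inverse step: token whose relative row is closest to target."""
    return min(range(len(rel)), key=lambda i: math.dist(rel[i], target))

# Two hypothetical models with different vocabularies and embedding sizes;
# tokens 0 and 1 are assumed to be shared anchors in both.
rel_a = relative_matrix([[1, 0], [0, 1], [1, 1]], anchor_ids=[0, 1])
rel_b = relative_matrix([[2, 0, 0], [0, 3, 0], [1, 1, 0], [0, 0, 1]], anchor_ids=[0, 1])

avg = average([
    to_relative([0.7, 0.2, 0.1], rel_a),
    to_relative([0.6, 0.1, 0.2, 0.1], rel_b),
])
token = nearest_token(avg, rel_a)  # decode in model A's vocabulary
print(token)
```

The point of the relative space is that both distributions end up as vectors of the same length (one entry per anchor), so they can be averaged even though the two vocabularies differ in size.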

📊Results:

The framework achieves consistent improvements across six popular benchmarks, including subject examination, reasoning, and knowledge-QA, demonstrating the effectiveness of the approach.

🤔Problem?:

The research paper addresses the problem that large language models (LLMs) are unreliable at generating factual information and at staying consistent with external knowledge when reasoning about beliefs about the world.

💻Proposed solution:

The research paper proposes to solve this problem by introducing a training objective based on principled probabilistic reasoning. This objective teaches LLMs to be consistent with external knowledge in the form of a set of facts and rules. This is achieved through fine-tuning with a specific loss function on a limited set of facts. This allows the LLMs to be more logically consistent than previous baselines and enables them to extrapolate to unseen but semantically similar factual knowledge more systematically.
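As a rough illustration of what a probabilistic consistency objective can look like, the sketch below penalizes a model whose beliefs violate a single rule of the form "premise implies conclusion". It assumes the two beliefs are independent and uses the negative log-probability that the rule holds; this specific form and the name `implication_loss` are assumptions, not the paper's stated loss.

```python
import math

def implication_loss(p_premise, p_conclusion):
    """Negative log-probability that 'premise implies conclusion' holds.

    The rule is violated only when the premise is true and the conclusion is
    false, so under independence P(rule holds) = 1 - p_premise * (1 - p_conclusion).
    """
    satisfied = 1.0 - p_premise * (1.0 - p_conclusion)
    return -math.log(satisfied)

# Hypothetical model beliefs for the rule "X is a bird -> X is an animal"
consistent = implication_loss(0.9, 0.9)
inconsistent = implication_loss(0.9, 0.1)
print(round(consistent, 4), round(inconsistent, 4))
```

Minimizing a term like this during fine-tuning pushes the model's probabilities for related facts toward assignments that satisfy the rule, without requiring every individual fact to appear in the training set.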

📊Results:

According to the paper, the fine-tuned LLMs are more logically consistent than previous baselines and extrapolate more systematically to unseen but semantically similar factual knowledge. No specific figures are given in this summary.

🤔Problem?:

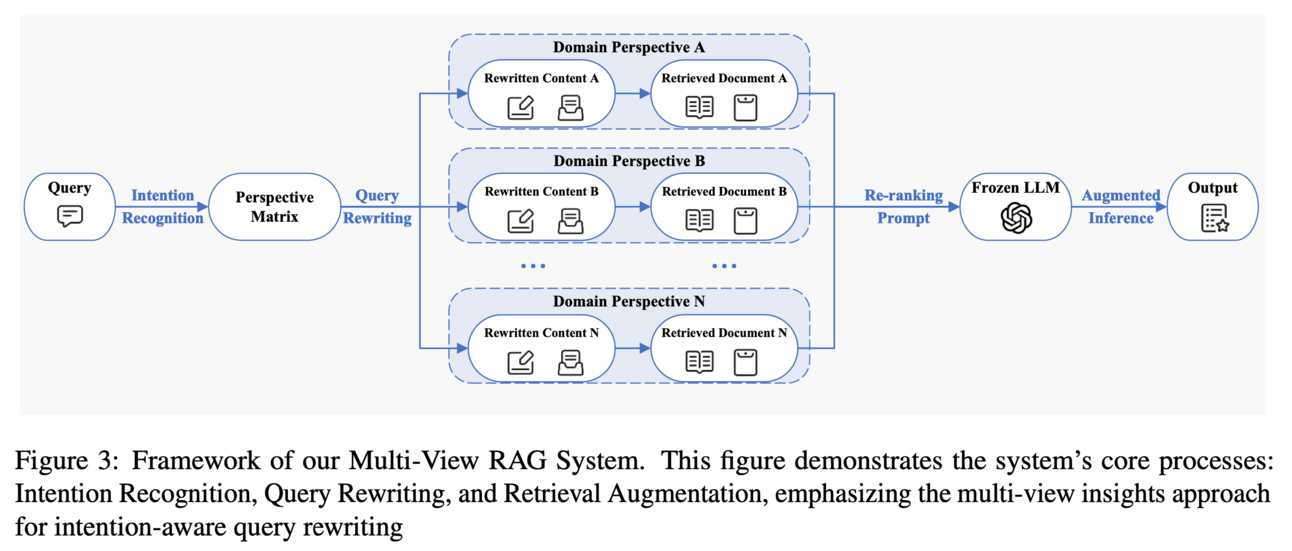

The research paper addresses the issue of existing retrieval methods in knowledge-dense domains, such as law and medicine, lacking multi-perspective views. This leads to a lack of interpretability and reliability when using Retrieval-Augmented Generation (RAG) with Large Language Models (LLMs).

💻Proposed solution:

The research paper proposes a solution called MVRAG, which stands for Multi-View Retrieval-Augmented Generation. This framework utilizes intention-aware query rewriting from multiple domain viewpoints, allowing for a more comprehensive and diverse set of perspectives to be considered in the retrieval process. This approach aims to improve retrieval precision and the overall effectiveness of the final inference.
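A toy version of multi-view retrieval can be sketched as follows: rewrite the query once per perspective, score every document against each rewrite, and rank by the summed score. The keyword-appending `rewrite` function stands in for LLM-based rewriting, and the word-overlap score and sum-based fusion are illustrative assumptions rather than the paper's components.

```python
def rewrite(query, perspectives):
    """Stand-in for LLM rewriting: append hypothetical perspective keywords to the query."""
    return {name: f"{query} {hint}" for name, hint in perspectives.items()}

def overlap_score(query, doc):
    """Toy relevance score: number of distinct query words that appear in the document."""
    return len(set(query.lower().split()) & set(doc.lower().split()))

def multi_view_retrieve(query, perspectives, corpus, top_k=2):
    """Sum each document's score over all perspective rewrites and return the top_k ids."""
    totals = {doc_id: 0 for doc_id in corpus}
    for view_query in rewrite(query, perspectives).values():
        for doc_id, text in corpus.items():
            totals[doc_id] += overlap_score(view_query, text)
    ranked = sorted(totals, key=lambda d: (-totals[d], d))  # ties broken by id
    return ranked[:top_k]

corpus = {
    "d1": "chest pain diagnosis and cardiac biomarkers",
    "d2": "treatment options for chronic chest pain",
    "d3": "contract law remedies for breach",
}
perspectives = {"diagnostic": "diagnosis biomarkers", "therapeutic": "treatment options"}
top = multi_view_retrieve("chest pain", perspectives, corpus)
print(top)
```

Each perspective surfaces a document the other would rank lower, so the fused list covers both the diagnostic and the therapeutic angle of the same query.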

📊Results:

The authors ran experiments on legal and medical case retrieval and report significant improvements in recall and precision with the MVRAG framework. This showcases the effectiveness of multi-perspective retrieval in enhancing RAG tasks, which could accelerate the application of LLMs in knowledge-intensive fields.

🤔Problem?:

The research paper addresses the problem of the lack of a single vision encoder that can effectively understand diverse image content in multimodal large language models (MLLMs). Existing vision encoders show bias towards certain types of images, leading to suboptimal performance on other types.

💻Proposed solution:

The research paper proposes a solution called MoVA, which stands for Mixture of Vision Expert Adapter. MoVA is a powerful and novel MLLM that adaptively routes and fuses task-specific vision experts using a coarse-to-fine mechanism.

In the first stage, a context-aware expert routing strategy dynamically selects the most suitable vision experts based on the user instruction, the input image, and the expertise of each expert. This is made possible by the large language model (LLM) equipped with expert-routing low-rank adaptation (LoRA), which has a powerful understanding of model functions.

In the second stage, a mixture-of-vision-expert adapter (MoV-Adapter) is used to extract and fuse task-specific knowledge from various experts. This allows for a more fine-grained understanding of the image content, further enhancing the generalization ability of the model.
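The two stages can be pictured with a small sketch: a coarse step that keeps the highest-scoring experts, and a fine step that blends their features with softmax gate weights. The routing scores, expert names, feature vectors, and gate logits below are all hypothetical; in the paper the routing is done by the LLM and the fusion by the MoV-Adapter, neither of which is reproduced here.

```python
import math

def route(scores, k=2):
    """Coarse stage: keep the k experts with the highest routing scores."""
    return sorted(scores, key=scores.get, reverse=True)[:k]

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def fuse(features, gate_logits):
    """Fine stage: gate-weighted sum of the selected experts' feature vectors."""
    weights = softmax(gate_logits)
    dim = len(features[0])
    return [sum(w * f[i] for w, f in zip(weights, features)) for i in range(dim)]

# Hypothetical routing scores and per-expert features for one image and instruction
scores = {"ocr": 0.9, "chart": 0.7, "detection": 0.1}
expert_features = {"ocr": [1.0, 0.0], "chart": [0.0, 1.0], "detection": [1.0, 1.0]}

selected = route(scores)
fused = fuse([expert_features[name] for name in selected], gate_logits=[0.0, 0.0])
print(selected, fused)
```

Routing first keeps irrelevant experts out of the fusion step entirely, so the gate only has to balance experts that are already plausible for the task.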

👩🏻‍💻 Generative Agents 👨🏻‍💻

Papers with database/benchmarks:

📚Want to learn more, Survey paper:

🧯Let’s make LLMs safe!! (LLMs security related papers)

🌈 Creative ways to use LLMs!! (Applications based papers)

🤖LLMs for robotics: