💻Proposed solution:

The team introduces ScreenAI, a vision-language model that specializes in UI and infographics understanding. The model improves upon the PaLI architecture with the flexible patching strategy of pix2struct and is trained on a unique mixture of datasets. At the heart of this mixture is a novel screen annotation task in which the model has to identify the type and location of UI elements. The team uses these text annotations to describe screens to Large Language Models and automatically generate question-answering (QA), UI navigation, and summarization training datasets at scale. Ablation studies demonstrate the impact of these design choices.
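To make the flexible patching idea concrete, here is a minimal sketch of choosing a patch grid that follows the image's aspect ratio instead of forcing a fixed square grid. The function name, the patch size of 16, and the budget of 1024 patches are illustrative assumptions, not values taken from ScreenAI.

```python
import math

def flexible_grid(height, width, patch=16, max_patches=1024):
    """Pick (rows, cols) that follow the aspect ratio with rows * cols <= max_patches.

    Hypothetical helper for illustration; not the ScreenAI or pix2struct code.
    """
    # Scale factor at which the rescaled image holds roughly max_patches patches.
    scale = math.sqrt(max_patches * (patch / height) * (patch / width))
    rows = max(min(math.floor(scale * height / patch), max_patches), 1)
    cols = max(min(math.floor(scale * width / patch), max_patches), 1)
    return rows, cols

# A portrait phone screenshot gets more rows than columns.
portrait = flexible_grid(1920, 1080)
square = flexible_grid(512, 512)
```

A tall screenshot keeps its shape in patch space, which matters for UIs where a square resize would squash small text and icons.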

📊Results:

With only 5B parameters, ScreenAI achieves new state-of-the-art results on UI- and infographics-based tasks (Multi-page DocVQA, WebSRC, MoTIF and Widget Captioning), and new best-in-class performance on others (Chart QA, DocVQA, and InfographicVQA) compared to models of similar size.

🤔Problem?:

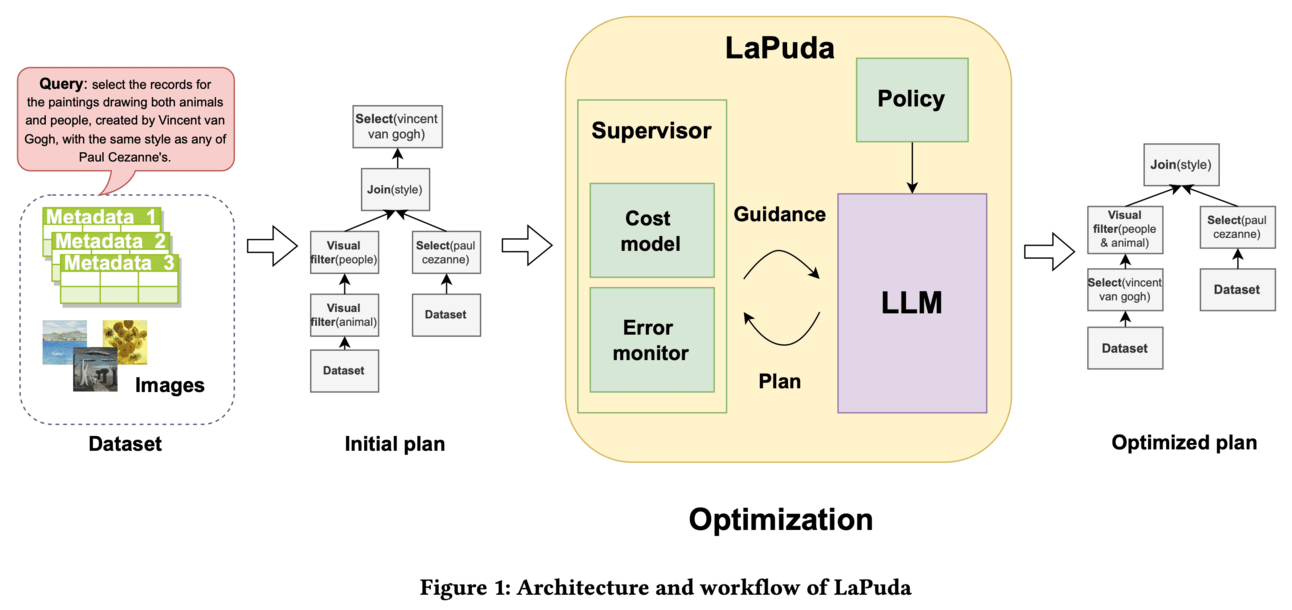

The research paper addresses the lack of investigation into the query optimization capability of Large Language Models (LLMs). While LLMs have shown potential in query planning, their ability to optimize queries has not been explored.

💻Proposed solution:

The research paper proposes a novel LLM- and policy-based multi-modal query optimizer called LaPuda. Instead of manually creating hundreds to thousands of rules for multi-modal optimization, LaPuda uses a few abstract policies to guide the LLM in the optimization process. To ensure correct optimization, the paper also introduces a guided cost descent algorithm based on gradient descent. This algorithm prevents the LLM from making mistakes or negative optimizations.
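The guarding idea can be sketched as a loop that accepts a proposed plan rewrite only when it lowers an estimated cost. This is a toy illustration under stated assumptions, not the paper's algorithm: `propose` stands in for the policy-guided LLM, and the cost model, plan format, and step names are hypothetical.

```python
def guided_cost_descent(plan, propose, cost, max_steps=10):
    """Accept a proposed rewrite only if it strictly lowers the estimated cost."""
    best, best_cost = plan, cost(plan)
    for _ in range(max_steps):
        candidates = propose(best)  # stand-in for LLM suggestions under a policy
        if not candidates:
            break
        cand = min(candidates, key=cost)
        cand_cost = cost(cand)
        if cand_cost >= best_cost:  # reject neutral or negative "optimizations"
            break
        best, best_cost = cand, cand_cost
    return best, best_cost

def toy_cost(plan, rows=1000.0):
    """Hypothetical cost model: each step is (name, cost_per_row, selectivity)."""
    total = 0.0
    for _name, per_row, selectivity in plan:
        total += rows * per_row
        rows *= selectivity
    return total

def swap_neighbours(plan):
    """Hypothetical proposer: every plan obtained by swapping two adjacent steps."""
    out = []
    for i in range(len(plan) - 1):
        swapped = list(plan)
        swapped[i], swapped[i + 1] = swapped[i + 1], swapped[i]
        out.append(swapped)
    return out

start = [("image_match", 10.0, 0.5), ("price<100", 1.0, 0.1)]
best_plan, best_cost = guided_cost_descent(start, swap_neighbours, toy_cost)
```

In this toy run the cheap, selective filter is moved ahead of the expensive image match, and the loop stops as soon as no candidate beats the current plan.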

📊Results:

The paper provides evidence that LaPuda consistently outperforms existing baselines in terms of execution speed. The optimized plans generated by LaPuda result in 1~3x higher execution speed compared to those generated by the baselines. This demonstrates the effectiveness of using LLMs for query optimization and the benefits of using a policy-based approach rather than manually creating rules.

🤔Problem?:

The research paper aims to address the issue of inefficiency at inference time in Large Language Models (LLMs). Specifically, it focuses on the problem of permutation sensitivity, where the output of LLMs can vary significantly depending on the order of the input options. This can lead to unreliable and biased results in NLP tasks.

💻Proposed solution:

To solve this problem, the research paper proposes a method for distilling the capabilities of a computationally intensive, debiased, teacher model into a more compact student model. This is done through two different approaches: pure distillation and error-correction. In pure distillation, the student model learns directly from the teacher model's predictions, while in error-correction, the student model corrects a single biased decision from the teacher model to achieve a debiased output. This approach is applicable to both black-box and white-box LLMs.
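To see why a debiased teacher is expensive, consider a toy sketch in which the teacher removes position bias by scoring every ordering of the options and averaging. The summary does not say how the teacher is debiased, so permutation averaging is an assumption here, and the scorer below is a hypothetical stand-in for an LLM.

```python
from itertools import permutations

def biased_scores(options, true_scores, position_bonus=0.3):
    """Toy stand-in for an LLM that favours whichever option is listed first."""
    return {opt: true_scores[opt] + (position_bonus if i == 0 else 0.0)
            for i, opt in enumerate(options)}

def single_pass_choice(options, true_scores):
    """One forward pass: cheap, but sensitive to option order."""
    scores = biased_scores(options, true_scores)
    return max(scores, key=scores.get)

def debiased_choice(options, true_scores):
    """Average scores over every ordering, then pick the best option."""
    totals = {opt: 0.0 for opt in options}
    for order in permutations(options):  # n! passes for n options
        for opt, score in biased_scores(order, true_scores).items():
            totals[opt] += score
    return max(totals, key=totals.get)

quality = {"A": 0.5, "B": 0.6, "C": 0.4}
naive = single_pass_choice(["A", "B", "C"], quality)   # swayed by position
robust = debiased_choice(["A", "B", "C"], quality)     # order-independent
```

The averaged answer needs n! passes for n options, which is the inference cost a distilled student would avoid by answering in a single pass.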

📊Results:

This approach can significantly improve the performance of compact, encoder-only student models compared to their larger, biased teacher counterparts. This is achieved with significantly fewer parameters, making the student models more efficient and practical for real-world applications. However, the specific performance improvements achieved are not provided.

🤔Problem?:

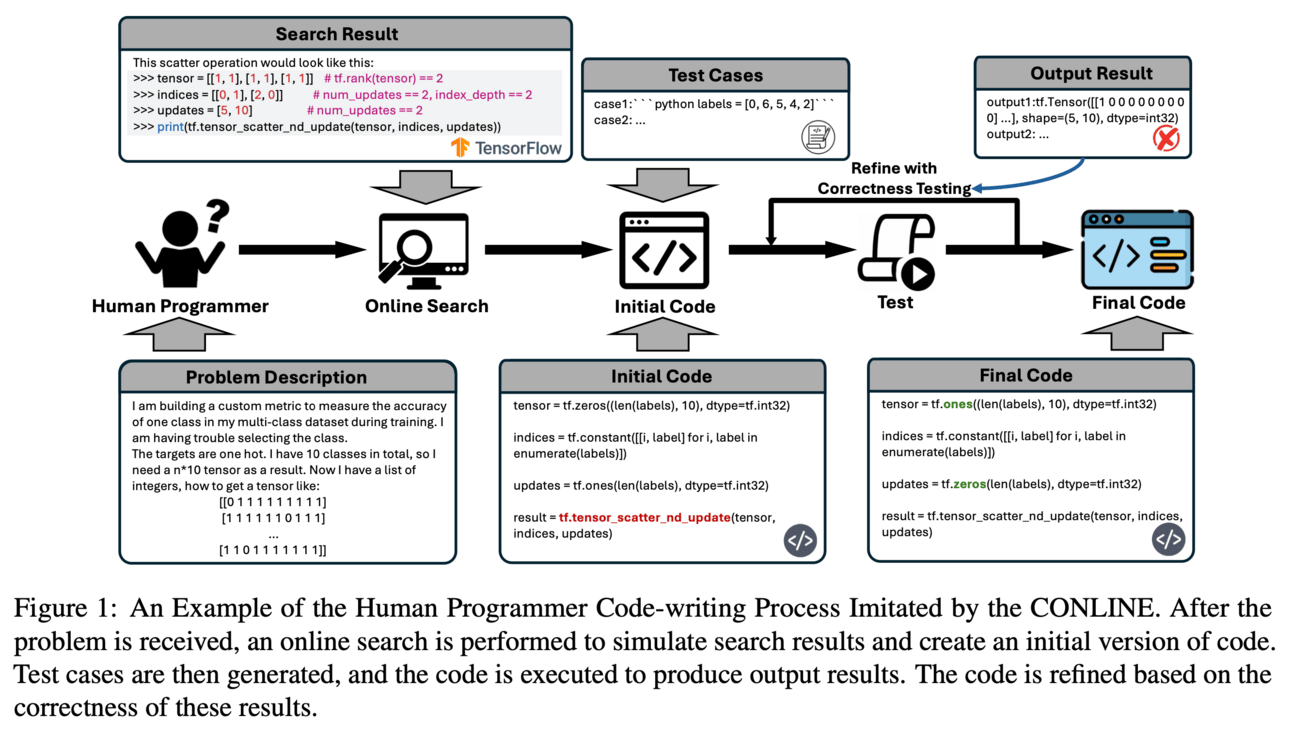

The research paper addresses the challenges faced in generating complex code using Large Language Models (LLMs) by introducing the CONLINE framework.

💻Proposed solution:

The CONLINE framework proposes to enhance code generation by incorporating planned online searches for information retrieval and automated correctness testing for iterative refinement. It works by serializing complex inputs and outputs to improve comprehension and generate test cases, ensuring its adaptability for real-world applications.
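The test-driven refinement half of this idea can be sketched as a loop that runs generated code against test cases and feeds the failure message back to the generator. This is a simplified illustration, not the CONLINE implementation: the online search step is omitted, `toy_generator` is a hypothetical stand-in for an LLM, and the entry-point name `solve` is an assumption.

```python
def refine_until_passing(generate, tests, max_rounds=3):
    """Ask `generate` for code, run `tests`, and pass any failure back as feedback."""
    feedback = None
    for round_no in range(1, max_rounds + 1):
        source = generate(feedback)
        namespace = {}
        try:
            # Real systems should sandbox this; exec on untrusted code is unsafe.
            exec(source, namespace)
            for args, expected in tests:
                got = namespace["solve"](*args)
                if got != expected:
                    raise AssertionError(
                        f"solve{args} returned {got!r}, expected {expected!r}")
            return source, round_no
        except Exception as err:
            feedback = f"{type(err).__name__}: {err}"
    return None, max_rounds

def toy_generator(feedback):
    """Hypothetical model: buggy first draft, corrected once it sees feedback."""
    if feedback is None:
        return "def solve(xs):\n    return sum(xs) / len(xs) + 1\n"
    return "def solve(xs):\n    return sum(xs) / len(xs)\n"

tests = [(([1, 2, 3],), 2.0)]
final_source, rounds_used = refine_until_passing(toy_generator, tests)
```

The first draft fails the test, the error text becomes the feedback, and the second draft passes.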

📊Results:

The performance improvement achieved by CONLINE was validated through rigorous experiments on the DS-1000 and ClassEval datasets, which showed substantial improvements in the quality of complex code generation. This highlights the potential of CONLINE to enhance the practicality and reliability of LLMs in generating intricate code.

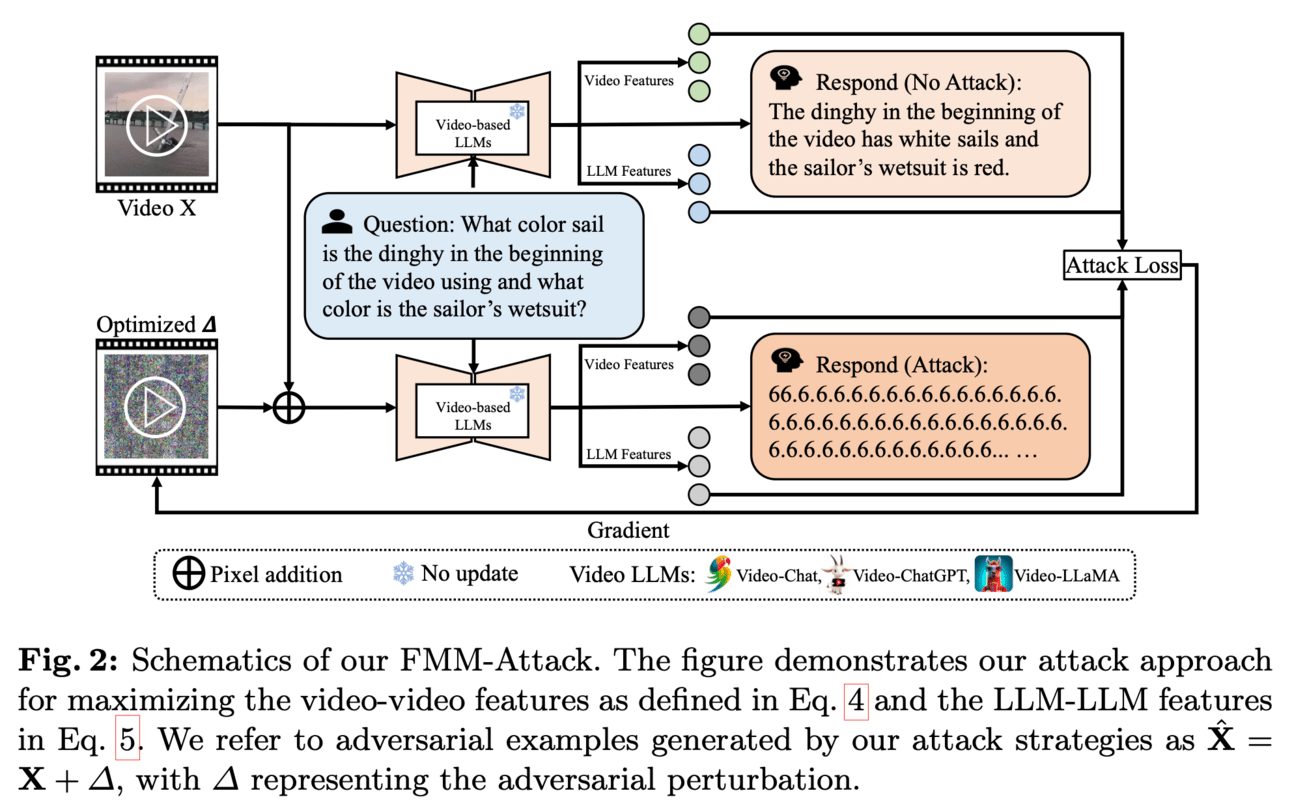

🔗Code/data/weights: https://github.com/THU-Kingmin/FMM-Attack

🤔Problem?:

The research paper addresses the issue of the adversarial threat faced by video-based large language models (LLMs), which has been largely unexplored.

💻Proposed solution:

The research paper proposes a solution to this problem through the development of the first adversarial attack specifically tailored for video-based LLMs, called the FMM-Attack. This attack involves crafting flow-based multi-modal adversarial perturbations on a small fraction of frames within a video, which are imperceptible to the human eye. These perturbations can effectively deceive the LLMs and cause them to generate incorrect answers or even hallucinate. This method aims to inspire a deeper understanding of multi-modal robustness and feature alignment across different modalities, which is crucial for the development of large multi-modal models. The code for this attack is also made available to the public on GitHub.

📊Results:

The paper does not mention any specific performance improvement, as its main focus is on exploring the adversarial threat and developing an attack that exposes it.

🔗Code/data/weights: https://github.com/DCDmllm/HyperLLaVA

🤔Problem?:

The research paper addresses the problem of performance limitations in Multimodal Large Language Models (MLLMs) due to the use of static tuning strategies, which can constrain performance across different downstream multimodal tasks.

💻Proposed solution:

This research paper proposes HyperLLaVA as a solution, which involves adaptive tuning of the projector and LLM parameters, in conjunction with a dynamic visual expert and language expert. These experts are derived from HyperNetworks, which generate adaptive parameter shifts through visual and language guidance. This enables dynamic projector and LLM modeling in a two-stage training process.
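The contrast between static and adaptive parameters can be shown with a toy sketch in which a small hypernetwork maps a guidance vector to a shift that is added to fixed projector weights. Everything here is a simplifying assumption for illustration: the linear hypernetwork, the vector shapes, and the function names are hypothetical and do not reflect HyperLLaVA's actual architecture.

```python
def hyper_shift(guidance, hyper_w):
    """Toy hypernetwork: a linear map from a guidance vector to a weight shift."""
    return [sum(h * g for h, g in zip(row, guidance)) for row in hyper_w]

def dynamic_project(x, static_w, guidance, hyper_w):
    """Project x with weights = static weights + input-dependent shift."""
    shift = hyper_shift(guidance, hyper_w)
    weights = [w + d for w, d in zip(static_w, shift)]
    return sum(w * xi for w, xi in zip(weights, x))

static_w = [1.0, 1.0]                    # what a static projector would always use
hyper_w = [[0.5, 0.0], [0.0, -0.5]]      # hypothetical hypernetwork parameters
x = [2.0, 3.0]

static_out = dynamic_project(x, static_w, [0.0, 0.0], hyper_w)   # zero guidance: no shift
adapted_out = dynamic_project(x, static_w, [1.0, 2.0], hyper_w)  # guidance changes the weights
```

With zero guidance the projector behaves like a static one; a different guidance vector yields different effective weights for the same input.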

📊Results:

HyperLLaVA significantly surpasses the existing MLLM baseline, LLaVA, on multiple benchmarks including MME, MMBench, SEED-Bench, and LLaVA-Bench.

🔗Code/data/weights: https://github.com/arcee-ai/MergeKit

🤔Problem?:

The research paper addresses the challenge of effectively merging the competencies of different language models in order to create more versatile and high-performing models.

💻Proposed solution:

Team proposes a model merging strategy which combines the parameters of different pre-trained models without the need for additional training. This is achieved through an extensible framework called MergeKit, which can efficiently merge models on any hardware. This strategy allows for the creation of multitask models that can utilize the strengths of each individual model without losing their intrinsic capabilities. It addresses challenges in AI such as catastrophic forgetting and multi-task learning.
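Weight averaging is one of the simplest ways to combine parameters without further training, and a minimal sketch helps show what "merging" means at the tensor level. This is not MergeKit's API: the function name is hypothetical, and plain Python lists stand in for real parameter tensors.

```python
def linear_merge(state_dicts, weights):
    """Weighted average of matching parameters; no gradient steps involved."""
    total = sum(weights)
    merged = {}
    for name in state_dicts[0]:
        size = len(state_dicts[0][name])
        merged[name] = [
            sum(w * sd[name][i] for sd, w in zip(state_dicts, weights)) / total
            for i in range(size)
        ]
    return merged

# Two toy "checkpoints" with the same parameter names and shapes.
model_a = {"layer.weight": [1.0, 2.0]}
model_b = {"layer.weight": [3.0, 6.0]}

equal_mix = linear_merge([model_a, model_b], [1, 1])
mostly_a = linear_merge([model_a, model_b], [3, 1])
```

The sketch assumes both checkpoints share an architecture, so parameter names and shapes line up one to one.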

📊Results:

The paper does not provide specific performance improvements, but it mentions that the open-source community has already merged thousands of models using this strategy, resulting in the creation of some of the most powerful open-source model checkpoints as evaluated by the Open LLM Leaderboard.

🤔Problem?:

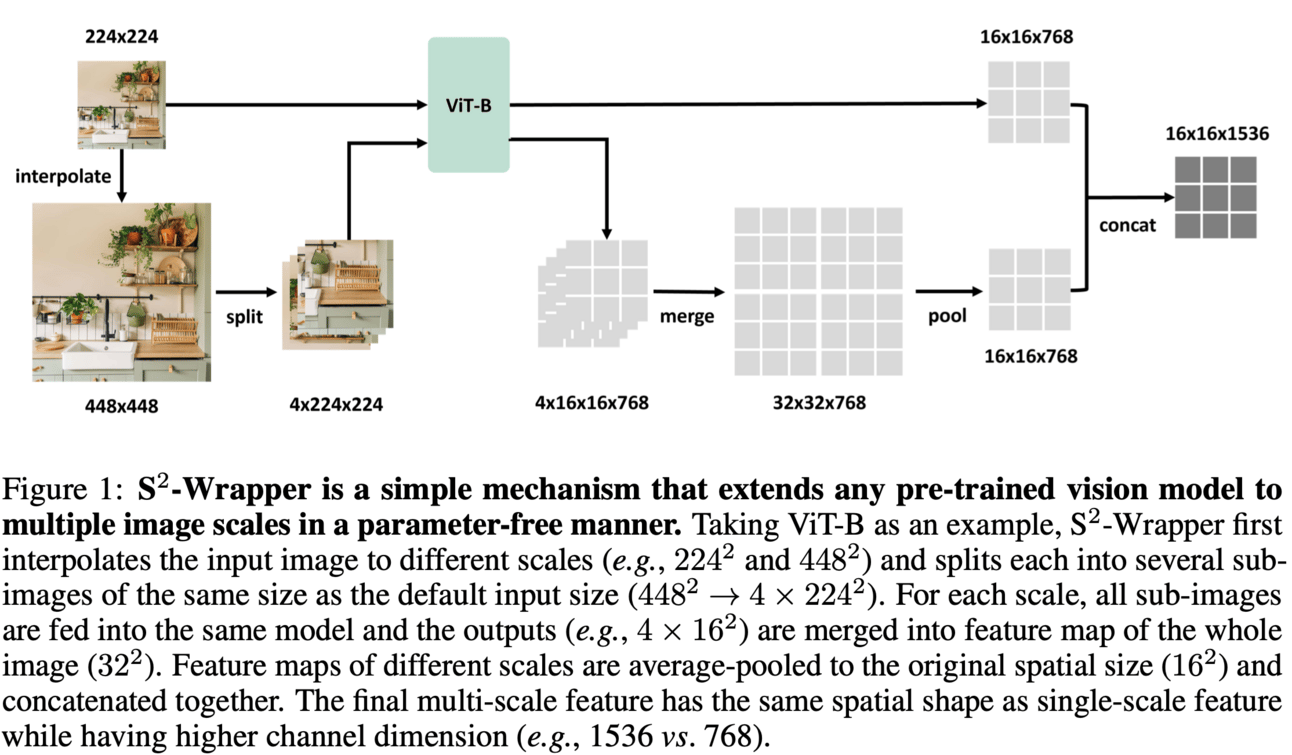

The paper asks whether scaling up the size of vision models is always necessary to obtain more powerful visual representations. It aims to determine the point at which larger vision models are no longer necessary.

💻Proposed solution:

Team proposes a method called Scaling on Scales (S2), where a pre-trained and frozen smaller vision model is run over multiple image scales. This allows the smaller model to outperform larger models on various visual tasks such as classification, segmentation, depth estimation, Multimodal LLM (MLLM) benchmarks, and robotic manipulation. S2 works by approximating the features of larger models using the features of multi-scale smaller models. This suggests that most, if not all, of the representations learned by large pre-trained models can also be obtained from smaller models.
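The multi-scale idea can be sketched with a toy frozen encoder that only accepts fixed-size inputs: it is run once on a downsized copy of the image and once on each full-resolution tile, and the pooled tile features are concatenated with the coarse feature. The encoder, the pooling choices, and all function names are hypothetical simplifications, not the authors' implementation.

```python
def frozen_model(tile):
    """Toy frozen encoder: one feature per fixed-size tile (its max pixel)."""
    return max(max(row) for row in tile)

def downsample2x(img):
    """2x2 average pooling of a square image given as nested lists."""
    n = len(img) // 2
    return [[(img[2*r][2*c] + img[2*r][2*c+1]
              + img[2*r+1][2*c] + img[2*r+1][2*c+1]) / 4
             for c in range(n)] for r in range(n)]

def split_tiles(img, size):
    """Cut a square image into non-overlapping size x size tiles, row-major."""
    n = len(img)
    return [[row[c:c + size] for row in img[r:r + size]]
            for r in range(0, n, size) for c in range(0, n, size)]

def s2_features(img, base=2):
    """Concatenate the base-scale feature with pooled features from the 2x scale."""
    coarse = frozen_model(downsample2x(img))                # whole image, downsized
    tile_feats = [frozen_model(t) for t in split_tiles(img, base)]  # same model per tile
    fine = sum(tile_feats) / len(tile_feats)                # pool back to base size
    return [coarse, fine]

image = [[0, 0, 0, 0],
         [0, 8, 0, 0],
         [0, 0, 4, 0],
         [0, 0, 0, 0]]
features = s2_features(image)
```

The same frozen weights are reused at both scales, so the extra detail comes from additional forward passes rather than additional parameters.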

📊Results:

S2 achieves state-of-the-art performance on the V* benchmark for detailed visual understanding in MLLMs, surpassing even models such as GPT-4V. The paper also shows that pre-training smaller models with S2 can match or even exceed the advantage of larger models.