Core research improving LLMs!

💡Why?: The research paper addresses the problem of accurately measuring and comparing the performance of Mixture-of-Experts (MoE) and dense models. Prior work has used FLOPs or activated parameters as the measure of model complexity, but these ignore the communication overhead of sparse layers, so MoE models effectively receive a larger actual training budget. This setting favors MoE and misrepresents its performance relative to dense models.

💻How?: To solve this problem, the research paper proposes to use step time as a more accurate measure of model complexity, and to determine the total compute budget under the Chinchilla compute-optimal settings. This ensures a fair comparison between MoE and dense models. Additionally, the paper introduces a 3D sharding method for running MoE efficiently on modern accelerators, keeping the dense-to-MoE step time increase within a healthy range.

The research paper targets post-training quantization of LLMs and introduces a technique called Output-adaptive Calibration (OAC), which incorporates the model output in the calibration process. It does this by formulating the quantization error as the distortion of the output cross-entropy loss. OAC also uses output-adaptive Hessians, approximated for each layer under reasonable assumptions to keep the computational cost manageable. These Hessians are used to update the weight matrices and to detect the most salient weights, so that the model output is preserved.

📊Results: OAC significantly outperforms state-of-the-art baselines such as SpQR and BiLLM, particularly at extreme low-precision quantization (2-bit and binary). This suggests that OAC can reduce the memory footprint, latency, and energy required for inference while preserving accuracy better than prior methods.

💡Why?: The research paper addresses the problem of fine-tuning LLMs on new domains without degrading any pre-existing instruction-tuning. Fine-tuning is often necessary to adapt a model to a specific domain, but it can lead to overfitting and degrade the instruction-following ability the model already has.

💻How?: The research paper proposes RE-Adapt (Reverse Engineered Adaptation). It reverse engineers an adapter that isolates what an instruction-tuned model has learned beyond its corresponding pretrained base model; extracting this adapter requires no additional data or training. The base model is then fine-tuned on the new domain, and the adapter is used to readapt it to instruction following.
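
A minimal sketch of the weight arithmetic behind this idea, assuming the adapter is simply the per-parameter difference between the instruction-tuned and base weights and that it is re-added with a hypothetical scaling factor `alpha`. Weights are toy flat lists, and the paper's exact scaling and layer handling may differ.

```python
from typing import Dict, List

Weights = Dict[str, List[float]]  # parameter name -> flattened values (toy format)


def reverse_engineer_adapter(instruct: Weights, base: Weights) -> Weights:
    """Isolate what instruction tuning added: instruct minus base, per parameter."""
    return {name: [i - b for i, b in zip(instruct[name], base[name])] for name in base}


def readapt(finetuned_base: Weights, adapter: Weights, alpha: float = 1.0) -> Weights:
    """Add the adapter back onto a domain-fine-tuned base model.

    `alpha` is a hypothetical scaling knob (1.0 = add the adapter unchanged).
    """
    return {
        name: [w + alpha * a for w, a in zip(finetuned_base[name], adapter[name])]
        for name in finetuned_base
    }


# Toy example: one two-element parameter tensor.
base = {"layer.weight": [1.0, 2.0]}
instruct = {"layer.weight": [1.5, 1.0]}
domain_tuned_base = {"layer.weight": [1.25, 2.5]}  # base after (hypothetical) domain fine-tuning

adapter = reverse_engineer_adapter(instruct, base)
model = readapt(domain_tuned_base, adapter)
```

Because the adapter is computed once from two existing checkpoints, the domain fine-tuning step never has to touch instruction data.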

📊Results: The research paper demonstrates that RE-Adapt and a low-rank variant called LoRE-Adapt outperform other fine-tuning methods across multiple popular LLMs and datasets, even when the models are used in conjunction with retrieval-augmented generation (RAG). This shows that the approach preserves the model's instruction-following ability while improving its performance on the new domain.

The research paper examines the relationship between attention heads in transformer models and human episodic memory. The researchers focus on induction heads, which contribute to in-context learning, and compare them to the contextual maintenance and retrieval (CMR) model of human episodic memory. By analyzing pre-trained LLMs, the researchers demonstrate that CMR-like heads often emerge in intermediate model layers and exhibit similar behavioural and functional characteristics to human memory biases.

💡Why?: The research paper addresses a limitation of Direct Preference Optimization (DPO), a popular method for aligning LLMs with human preference feedback: it does not characterize the diversity of human preferences.

💻How?: The research paper proposes a new approach called Mallows-DPO, inspired by Mallows' theory of preference ranking. It introduces a dispersion index that reflects how diverse human preferences are for a given prompt and incorporates this index into existing DPO models. The authors report that this improves performance on benchmark tasks such as synthetic bandit selection, controllable generation, and dialogue.
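
To make the role of a per-prompt dispersion term concrete, here is the standard per-pair DPO loss with one extra factor. How Mallows-DPO actually derives and applies its dispersion index is not reproduced here: `dispersion` is a hypothetical per-prompt scalar that multiplies the DPO margin, and with `dispersion=1.0` the function reduces to plain DPO.

```python
import math


def dpo_loss(logp_chosen: float, logp_rejected: float,
             ref_logp_chosen: float, ref_logp_rejected: float,
             beta: float = 0.1, dispersion: float = 1.0) -> float:
    """Per-pair DPO loss with a hypothetical per-prompt `dispersion` factor.

    margin = (policy - reference log-prob) of the chosen response
             minus the same quantity for the rejected response.
    With dispersion=1.0 this is standard DPO: -log sigmoid(beta * margin).
    """
    margin = (logp_chosen - ref_logp_chosen) - (logp_rejected - ref_logp_rejected)
    z = dispersion * beta * margin  # assumption: dispersion simply rescales the margin
    # -log(sigmoid(z)) written in a numerically stable form
    return max(-z, 0.0) + math.log1p(math.exp(-abs(z)))
```

Under this assumption, prompts with a larger factor push the policy harder toward the preferred response for the same log-probability margin.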

💡Why?: Extreme compression of LLMs (down to about 2 bits per parameter) without a large loss of accuracy.

💻How?: The research paper proposes PV-Tuning, a representation-agnostic framework that generalizes and improves upon existing fine-tuning strategies for compressed models and provides convergence guarantees in restricted cases. Like prior approaches, it fine-tunes the compressed parameters over a limited amount of calibration data. However, instead of relying on straight-through estimators (STE), which can perform poorly in this extreme-compression setting, PV-Tuning alternates between optimizing the continuous parameters (P) and the discrete quantization assignments (V).
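
The alternating idea can be illustrated on a toy problem: a 1-D codebook quantizer whose continuous code values (P) and discrete per-weight assignments (V) are updated in turn. This is only an analogy, assuming squared weight-reconstruction error as the objective; PV-Tuning itself optimizes the model's loss on calibration data and supports other representations.

```python
from typing import List, Tuple


def pv_alternate(weights: List[float], codebook: List[float],
                 steps: int = 10) -> Tuple[List[float], List[int]]:
    """Toy alternating optimisation of continuous (P) and discrete (V) parameters.

    Objective here: squared reconstruction error of the weights, a stand-in
    for the calibration-data loss that PV-Tuning actually optimises.
    """
    codebook = list(codebook)
    codes = [0] * len(weights)
    for _ in range(steps):
        # V step: with the codebook fixed, pick the best discrete code for each weight.
        codes = [min(range(len(codebook)), key=lambda k: (w - codebook[k]) ** 2)
                 for w in weights]
        # P step: with codes fixed, the least-squares value of each code
        # is the mean of the weights assigned to it.
        for k in range(len(codebook)):
            assigned = [w for w, c in zip(weights, codes) if c == k]
            if assigned:
                codebook[k] = sum(assigned) / len(assigned)
    return codebook, codes


codebook, codes = pv_alternate([-1.1, -0.9, 0.95, 1.05], codebook=[-0.5, 0.5])
```

Neither step needs a gradient through the rounding operation, which is the part STE has to approximate.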

📊Results: PV-Tuning achieves a better accuracy-vs-bit-width trade-off than existing techniques on highly performant models such as Llama and Mistral. In particular, it achieves the first Pareto-optimal quantization for Llama 2 family models at 2 bits per parameter.

💡Why?: The research paper addresses the problem of efficiently and effectively integrating a large amount of new experiences into LLMs after pre-training.

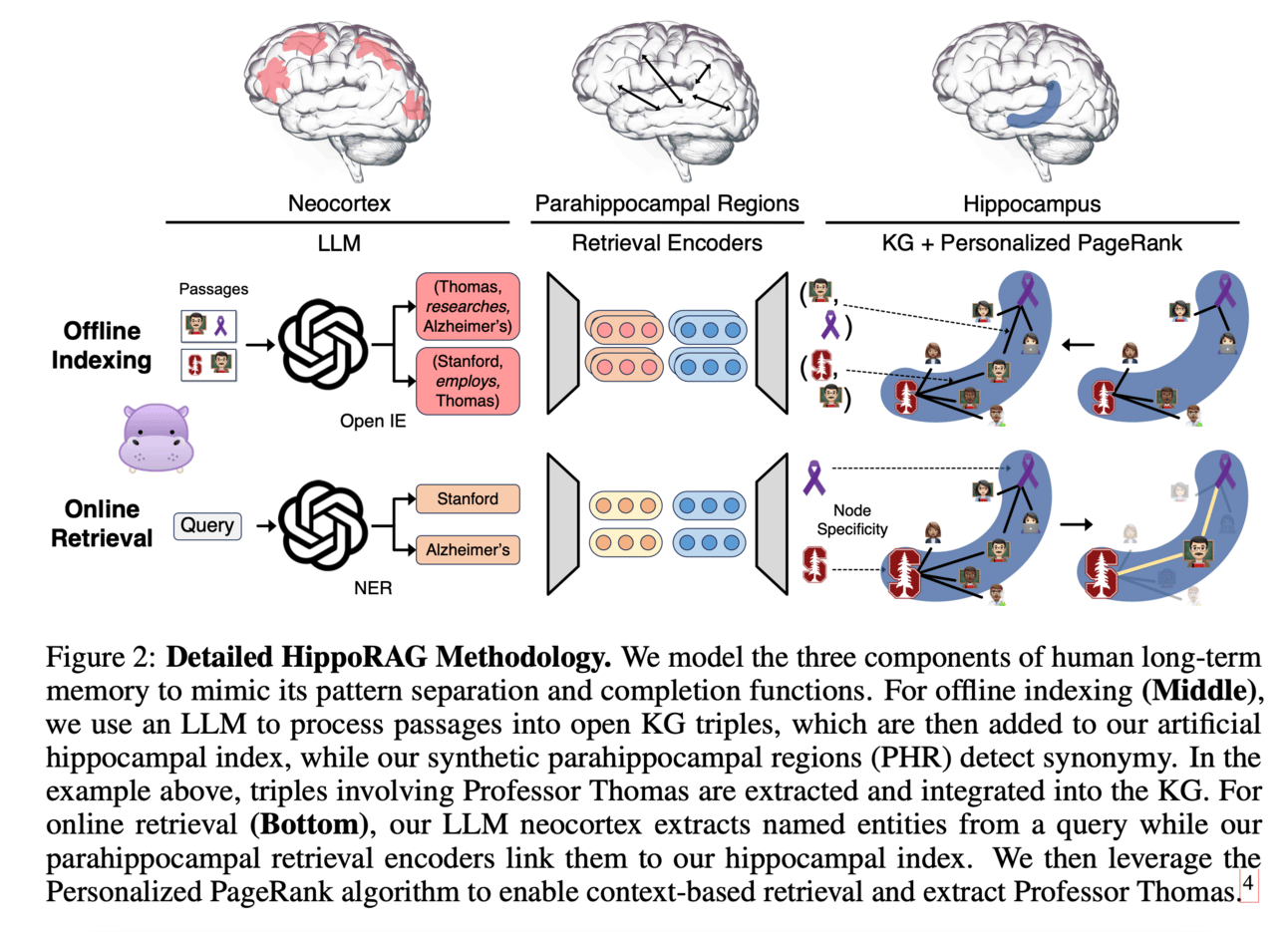

💻How?: The research paper proposes a retrieval framework called HippoRAG, inspired by the hippocampal indexing theory of human long-term memory. It works by synergistically orchestrating LLMs, knowledge graphs, and the Personalized PageRank algorithm to mimic the different roles of the neocortex and hippocampus in human memory. This allows for deeper and more efficient knowledge integration over new experiences.
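
Personalized PageRank (PPR) is the graph step that lets retrieval spread from query entities to related nodes. The sketch below runs PPR by power iteration on a small, hypothetical knowledge graph and then scores passages by summing the PPR mass of the entities they mention; the graph, entity names, and passage-scoring rule are illustrative assumptions, not HippoRAG's exact pipeline.

```python
from typing import Dict, List


def personalized_pagerank(graph: Dict[str, List[str]], seeds: List[str],
                          damping: float = 0.85, iters: int = 50) -> Dict[str, float]:
    """Power-iteration PPR; random walks restart at the query's seed entities.

    `graph` maps every node to its neighbours (every neighbour must also be a key).
    """
    nodes = list(graph)
    restart = {n: (1.0 / len(seeds) if n in seeds else 0.0) for n in nodes}
    score = dict(restart)
    for _ in range(iters):
        nxt = {n: (1 - damping) * restart[n] for n in nodes}
        for n in nodes:
            neighbours = graph[n]
            if neighbours:
                share = damping * score[n] / len(neighbours)
                for m in neighbours:
                    nxt[m] += share
            else:  # dangling node: return its mass to the seeds
                for s in seeds:
                    nxt[s] += damping * score[n] / len(seeds)
        score = nxt
    return score


def rank_passages(passages: Dict[str, List[str]], score: Dict[str, float]) -> List[str]:
    """Assumed scoring rule: a passage's score is the total PPR mass of its entities."""
    return sorted(passages, key=lambda p: sum(score.get(e, 0.0) for e in passages[p]),
                  reverse=True)


# Hypothetical knowledge graph (undirected edges stored in both directions).
graph = {
    "university": ["disease"],
    "disease": ["university", "professor"],
    "professor": ["disease"],
    "city": ["country"],
    "country": ["city"],
}
scores = personalized_pagerank(graph, seeds=["university"])
passages = {"p1": ["professor", "disease"], "p2": ["city", "country"]}
ranking = rank_passages(passages, scores)
```

The passage about "professor" is reached in a single graph traversal even though the query only mentions "university", which is the multi-hop behaviour the paragraph describes.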

📊Results: The research paper shows that HippoRAG outperforms existing retrieval-augmented generation (RAG) methods on multi-hop question answering tasks, achieving up to a 20% improvement. It also performs comparably or better than iterative retrieval methods while being significantly cheaper and faster. Additionally, integrating HippoRAG into existing methods leads to further substantial gains.

WISE: Rethinking the Knowledge Memory for Lifelong Model Editing of Large Language Models 🔥🔥🔥

💡Why?: The research paper addresses the problem of updating knowledge in LLMs to ensure accurate responses and facilitate lifelong model editing. Specifically, the paper looks at the challenge of where to store and access this updated knowledge, as well as how to maintain reliability, generalization, and locality in the editing process.

💻How?: The research paper proposes a solution called WISE (Where to Insert updated knowledge for lifelong model Editing), which involves a dual parametric memory scheme: a main memory for pretrained knowledge and a side memory for edited knowledge. A router decides which memory to access for a given query. Additionally, the paper introduces a knowledge-sharding mechanism to handle continual editing, where different sets of edits are stored in separate subspaces of parameters and then merged into a shared memory without conflicts.
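
The routing decision can be sketched for a single layer. This assumes the router compares how differently the side and main memories respond to an activation (the norm of the output difference) against a threshold `eps`; the matrices, threshold, and helper names are hypothetical, and WISE's actual routing and knowledge sharding involve more machinery.

```python
import math
from typing import List

Matrix = List[List[float]]


def matvec(a: List[float], w: Matrix) -> List[float]:
    """Row vector `a` (length n) times matrix `w` (n x m)."""
    return [sum(a[i] * w[i][j] for i in range(len(a))) for j in range(len(w[0]))]


def wise_layer(a: List[float], w_main: Matrix, w_side: Matrix, eps: float) -> List[float]:
    """Route one activation to side memory (edits) or main memory (pretrained)."""
    out_main, out_side = matvec(a, w_main), matvec(a, w_side)
    # assumption: routing score = norm of the difference between the two memories' outputs
    score = math.sqrt(sum((s - m) ** 2 for s, m in zip(out_side, out_main)))
    return out_side if score > eps else out_main


w_main = [[1.0, 0.0], [0.0, 1.0]]
w_side = [[1.0, 0.0], [0.0, 3.0]]  # hypothetical edit changed how feature 2 is mapped
edited_query = wise_layer([0.0, 1.0], w_main, w_side, eps=0.5)     # routed to side memory
unrelated_query = wise_layer([1.0, 0.0], w_main, w_side, eps=0.5)  # stays on main memory
```

Queries that do not touch edited directions fall back to the untouched main memory, which is how locality is preserved in this toy.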

📊Results: The research paper reports significant performance improvements over previous model editing methods, as demonstrated through extensive experiments on various LLM architectures such as GPT, LLaMA, and Mistral.

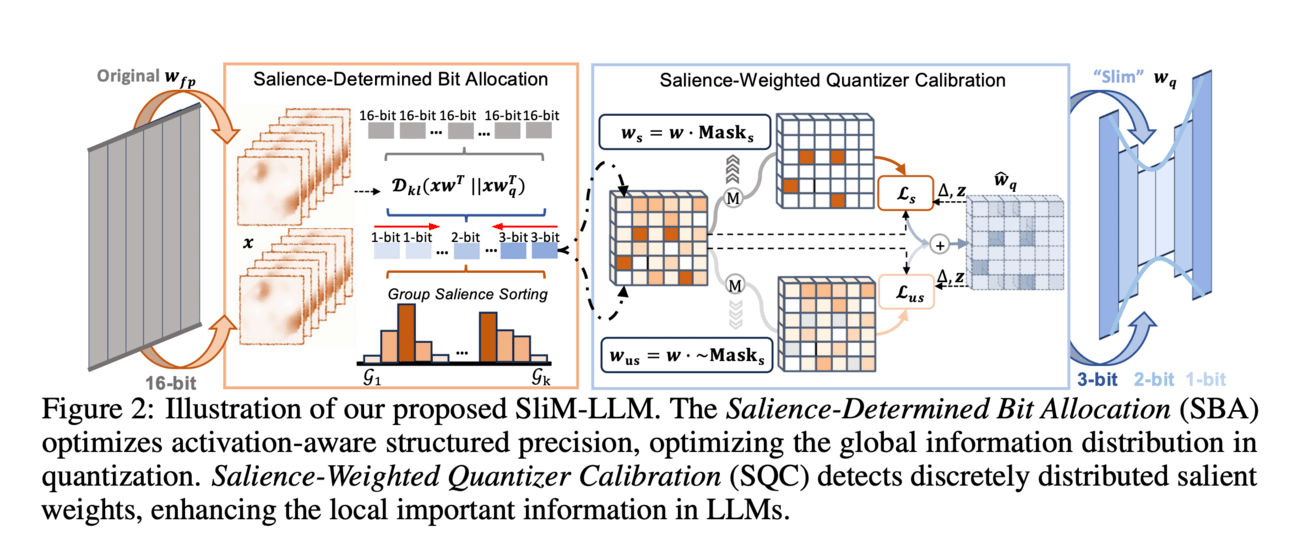

💡Why?: Existing post-training quantization (PTQ) methods struggle to quantize LLMs accurately to low bit-widths (below 4 bits): performance drops noticeably, while keeping LLMs at higher precision requires substantial computation and memory resources.

💻How?: The research paper proposes a Salience-Driven Mixed-Precision Quantization scheme called SliM-LLM, which uses the salience distribution of weights to determine the optimal bit-width and quantizers for accurate LLM quantization. It also aligns the bit-width partition to groups for compact memory usage and fast integer inference. SliM-LLM relies on two techniques: (1) Salience-Determined Bit Allocation, which uses the clustering characteristics of the salience distribution to allocate a bit-width to each group, and (2) Salience-Weighted Quantizer Calibration, which optimizes the quantizer parameters by considering the element-wise salience within each group. Together, these balance preserving salient information against minimizing quantization error.
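
A simplified sketch of the first technique, salience-determined bit allocation: groups are ranked by a salience proxy, and the most salient groups gain one bit while an equal number of the least salient groups lose one, so the average bit-width is unchanged. The salience proxy (squared weight times an assumed Hessian-diagonal estimate), the fixed `n_pairs`, and the plain min-max quantizer are assumptions; SliM-LLM searches the allocation and calibrates quantizers with element-wise salience.

```python
from typing import List


def group_salience(groups: List[List[float]], hdiag: List[List[float]]) -> List[float]:
    """Salience proxy per group: sum of w^2 * h (h = assumed Hessian-diagonal estimate)."""
    return [sum(w * w * h for w, h in zip(g, hg)) for g, hg in zip(groups, hdiag)]


def allocate_bits(salience: List[float], avg_bits: int, n_pairs: int) -> List[int]:
    """+1 bit for the n_pairs most salient groups, -1 bit for the n_pairs least
    salient ones, so the mean bit-width stays at avg_bits (assumes avg_bits >= 2)."""
    order = sorted(range(len(salience)), key=lambda i: salience[i])
    bits = [avg_bits] * len(salience)
    n_pairs = min(n_pairs, len(salience) // 2)
    for i in order[:n_pairs]:
        bits[i] -= 1
    for i in order[len(order) - n_pairs:]:
        bits[i] += 1
    return bits


def quantize_group(g: List[float], bits: int) -> List[float]:
    """Uniform min-max quantisation of one group, returned de-quantised."""
    lo, hi = min(g), max(g)
    levels = max(2 ** bits - 1, 1)
    scale = (hi - lo) / levels if hi > lo else 1.0
    return [lo + round((w - lo) / scale) * scale for w in g]


groups = [[0.1, -0.1], [1.0, -2.0], [0.5, 0.4], [3.0, 0.2]]
hdiag = [[1.0, 1.0]] * 4  # assumed Hessian-diagonal estimates
bits = allocate_bits(group_salience(groups, hdiag), avg_bits=2, n_pairs=1)
quantized = [quantize_group(g, b) for g, b in zip(groups, bits)]
```

Keeping bits per group (rather than per element) is what makes the layout compact enough for fast integer kernels.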

💡Why?: In-context Learning (ICL) is a powerful approach that allows LLMs to adapt to new tasks during inference by providing demonstration examples. However, it incurs significant computational and memory costs and is susceptible to the selection and order of demonstration examples.

💻How?: The research paper proposes a new paradigm called Implicit In-context Learning (I2CL) to address the limitations of traditional ICL. I2CL absorbs the demonstration examples within the activation space instead of prefixing them to the test queries. It first generates a condensed vector representation, called a context vector, from the demonstration examples. Then, during inference, it integrates the context vector by injecting a linear combination of the context vector and query activations into the model's residual streams. This allows I2CL to achieve few-shot performance with zero-shot cost and also exhibits robustness against the variation of demonstration examples. Additionally, I2CL facilitates a novel representation of "task-ids", which enhances task similarity detection and enables effective transfer learning.
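
A sketch of the two I2CL steps on plain lists: averaging demonstration activations into a per-layer context vector, then injecting it into the query's residual stream as a linear combination. It assumes each demonstration has already been reduced to one activation vector per layer, and the coefficients `a` and `b` are fixed here, whereas I2CL calibrates them.

```python
from typing import List

Vec = List[float]


def context_vector(demo_acts: List[List[Vec]]) -> List[Vec]:
    """Condense demonstrations: average each layer's activation over all demos.

    demo_acts[d][l] is demonstration d's (already pooled) activation at layer l.
    """
    n = len(demo_acts)
    return [[sum(demo[l][k] for demo in demo_acts) / n for k in range(len(demo_acts[0][l]))]
            for l in range(len(demo_acts[0]))]


def inject(residual: Vec, ctx: Vec, a: float, b: float) -> Vec:
    """Replace a residual-stream state with a * ctx + b * residual."""
    return [a * c + b * h for c, h in zip(ctx, residual)]


demo_acts = [
    [[1.0, 2.0], [0.0, 0.0]],  # demo 1: layer 0, layer 1
    [[3.0, 4.0], [2.0, 2.0]],  # demo 2
]
ctx = context_vector(demo_acts)
steered = inject(residual=[1.0, 1.0], ctx=ctx[0], a=0.5, b=1.0)
```

The demonstrations are processed once; at test time only the fixed-size context vectors are added, so the query prompt stays zero-shot length.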

This research paper proposes novel methods for reinforcement learning (RL) from preference feedback between two full multi-turn conversations. In the tabular setting, it presents a mirror-descent-based policy optimization algorithm designed for the general multi-turn preference-based RL problem. The algorithm iteratively updates the policy using the preference feedback, and it is proven to converge to a Nash equilibrium.
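
The flavour of a mirror-descent policy update against preference feedback can be shown in a single-state toy version: entropic mirror descent (multiplicative weights) moves probability toward actions that are preferred over the current policy. This is an illustrative assumption, not the paper's multi-turn tabular algorithm; `pref` is a hypothetical matrix of pairwise preference probabilities.

```python
import math
from typing import List


def win_rates(pref: List[List[float]], policy: List[float]) -> List[float]:
    """pref[a][b] = P(a preferred over b); returns P(a beats an action drawn from policy)."""
    return [sum(p_b * pref[a][b] for b, p_b in enumerate(policy)) for a in range(len(policy))]


def mirror_descent_step(policy: List[float], pref: List[List[float]], lr: float) -> List[float]:
    """One entropic mirror-descent (multiplicative-weights) update against the current policy."""
    scores = win_rates(pref, policy)
    unnorm = [p * math.exp(lr * s) for p, s in zip(policy, scores)]
    z = sum(unnorm)
    return [u / z for u in unnorm]


pref = [[0.5, 0.8],
        [0.2, 0.5]]  # hypothetical: action 0 is preferred to action 1 80% of the time
policy = [0.5, 0.5]
for _ in range(20):
    policy = mirror_descent_step(policy, pref, lr=1.0)
```

Here action 0 dominates, so the self-play equilibrium is to always choose it, and the iterates concentrate there.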

📊Results: The research paper shows that a deep RL variant of their algorithm outperforms existing RLHF baselines in their newly created environment, Education Dialogue, where a teacher agent guides a student in learning a random topic. This indicates a significant performance improvement in aligning LLMs with human preferences. Additionally, the paper also demonstrates that their algorithm can achieve the same performance as a reward-based RL baseline in an environment with explicit rewards, despite solely relying on a weaker preference signal.

💡Why?: The research paper addresses the difficulty of serving LLM requests in real time on bandwidth-constrained cloud servers as the number of LLM users grows rapidly.

💻How?: The research paper proposes PerLLM, a personalized inference scheduling framework with edge-cloud collaboration for processing diverse LLM services efficiently. It combines an upper confidence bound (UCB) algorithm with a constraint satisfaction mechanism to optimize service scheduling and resource allocation across the edge-cloud infrastructure, meeting processing-time requirements while minimizing energy costs.
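
A minimal sketch of UCB-based scheduling with a constraint filter: servers whose running-mean latency exceeds the request's deadline are excluded, and UCB1 picks among the rest. The reward definition (negative energy cost), the fallback when no server is feasible, the class name, and the toy environment are assumptions; PerLLM's actual constraint mechanism and resource allocation are richer.

```python
import math
from typing import List


class ConstrainedUCB:
    """UCB1 over servers, restricted to those whose mean latency meets the deadline."""

    def __init__(self, servers: List[str]):
        self.servers = servers
        self.count = {s: 0 for s in servers}
        self.reward = {s: 0.0 for s in servers}   # cumulative reward, e.g. -energy cost
        self.latency = {s: 0.0 for s in servers}  # running mean latency
        self.t = 0

    def choose(self, deadline: float) -> str:
        self.t += 1
        for s in self.servers:  # try every server once before using the estimates
            if self.count[s] == 0:
                return s
        # constraint filter; assumption: fall back to all servers if none is feasible
        feasible = [s for s in self.servers if self.latency[s] <= deadline] or self.servers

        def ucb(s: str) -> float:
            mean = self.reward[s] / self.count[s]
            return mean + math.sqrt(2 * math.log(self.t) / self.count[s])

        return max(feasible, key=ucb)

    def update(self, s: str, reward: float, latency: float) -> None:
        self.count[s] += 1
        self.reward[s] += reward
        self.latency[s] += (latency - self.latency[s]) / self.count[s]


bandit = ConstrainedUCB(["edge", "cloud"])
env = {"edge": (-1.0, 1.0), "cloud": (-0.2, 5.0)}  # hypothetical (reward, latency) per server
for _ in range(20):
    server = bandit.choose(deadline=2.0)
    reward, latency = env[server]
    bandit.update(server, reward, latency)
```

In this toy the cloud would be cheaper but misses the deadline, so after one exploratory request the filter keeps traffic on the edge.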

📊Results: PerLLM achieved significant performance improvements compared to other methods. It achieved 2.2x, 2.1x, and 1.6x higher throughput and reduced energy costs by more than 50% in experimental results from different model deployments.

💡Why?: Understanding what limits the long-context capability of LLMs.

💻How?: The research paper identifies a long-term decay property to better understand the role of Rotary Position Embedding (RoPE) in LLMs. From it, the authors derive an absolute lower bound on the RoPE base value required to obtain a given context-length capability. This bound can guide future long-context training, for example when choosing the base for a target context length.
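
The lower-bound idea can be checked numerically. Assuming the long-term decay criterion takes the form `B(m) = Σ_i cos(m·θ_i) ≥ 0` for every relative distance `m` up to the target context length, with RoPE frequencies `θ_i = base^(-2i/d)`, the sketch below tests a base value and scans candidate bases. The function names are hypothetical, and the exact criterion should be checked against the paper.

```python
import math
from typing import Iterable, Optional


def decay_term(m: int, base: float, dim: int) -> float:
    """B(m) = sum_i cos(m * theta_i) with RoPE frequencies theta_i = base ** (-2i / dim)."""
    return sum(math.cos(m * base ** (-2 * i / dim)) for i in range(dim // 2))


def supports_length(base: float, dim: int, context_len: int) -> bool:
    """Assumed criterion: B(m) stays non-negative for every distance m up to context_len."""
    return all(decay_term(m, base, dim) >= 0 for m in range(context_len + 1))


def min_base(dim: int, context_len: int, candidates: Iterable[float]) -> Optional[float]:
    """Smallest candidate base satisfying the criterion (None if none does)."""
    for base in sorted(candidates):
        if supports_length(base, dim, context_len):
            return base
    return None


# e.g. check base 10,000 for a 2,048-token context at head dimension 128
ok = supports_length(10_000, 128, 2048)
```

Scanning bases this way costs `O(context_len × dim)` cosine evaluations per candidate, which is cheap compared with a training run at the wrong base.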

💡Why?: The paper tries to overcome the limited document-ranking capacity of LLMs, which hinders their use for listwise re-ranking.

💻How?: The paper proposes a novel algorithm that partitions the ranking to a specific depth (k) and processes documents in a top-down manner. This algorithm is inherently parallelizable, as it uses a pivot element that allows for concurrent comparison of documents at any depth. This reduces the number of expected inference calls by 33% when ranking at depth 100, while maintaining the performance of previous approaches.
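
A sketch of pivot-based top-down partitioning, assuming `rank_window` is a hypothetical stand-in for one listwise LLM call that returns its input sorted best-first, and that documents are distinct. The first window yields a pivot at depth `k`; every later batch is ranked together with that pivot independently of the others (so batches can run in parallel), and only documents placed above the pivot survive to the next round.

```python
from typing import Callable, List, TypeVar

T = TypeVar("T")


def top_down_rank(docs: List[T], rank_window: Callable[[List[T]], List[T]],
                  k: int = 10, window: int = 20) -> List[T]:
    """Return the top-k documents using pivot-based partitioning (sketch)."""
    if k < 1 or window <= k:
        raise ValueError("need 1 <= k < window")
    if len(docs) <= window:
        return rank_window(docs)[:k]
    first = rank_window(docs[:window])
    pivot = first[k - 1]          # k-th best document of the first window
    candidates = first[:k]        # everything at or above the pivot
    rest = docs[window:]
    step = window - 1             # leave room for the pivot in every batch
    for start in range(0, len(rest), step):  # independent calls: parallelisable
        ranked = rank_window(rest[start:start + step] + [pivot])
        candidates.extend(ranked[:ranked.index(pivot)])  # keep docs that beat the pivot
    return top_down_rank(candidates, rank_window, k, window)


def exact_ranker(batch: List[int]) -> List[int]:
    """Stand-in for the LLM: higher id = more relevant."""
    return sorted(batch, reverse=True)


docs = [(i * 37) % 100 for i in range(100)]  # 100 distinct toy document ids
top10 = top_down_rank(docs, exact_ranker, k=10, window=20)
```

With an exact ranker every discarded document has at least `k` better documents, so the true top `k` always survives; with a real LLM ranker the result is only as good as its judgments.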

The paper proposes a parameter-efficient deep ensemble method called LoRA-Ensemble, based on Low-Rank Adaptation (LoRA). It uses a single pre-trained self-attention network whose weights are shared by all ensemble members and trains only member-specific low-rank matrices for the attention projections. This reduces the computational cost and memory requirements compared with traditional explicit ensembles.
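
A toy sketch of how members share one frozen projection `W` and differ only in their low-rank factors `B_i` and `A_i`, with predictions averaged across members. The matrices, the single projection, and the averaging of raw outputs are simplifying assumptions; in LoRA-Ensemble the low-rank updates apply to the attention projections of a full self-attention network.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product (rows of a) x (columns of b)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def member_projection(w: Matrix, b_i: Matrix, a_i: Matrix) -> Matrix:
    """Effective projection of member i: shared frozen W plus its own low-rank B_i A_i."""
    delta = matmul(b_i, a_i)
    return [[w[r][c] + delta[r][c] for c in range(len(w[0]))] for r in range(len(w))]


def ensemble_predict(x: List[float], w: Matrix,
                     members: List[Tuple[Matrix, Matrix]]) -> List[float]:
    """Average the members' outputs y_i = x (W + B_i A_i)."""
    outs = [matmul([x], member_projection(w, b, a))[0] for b, a in members]
    return [sum(col) / len(outs) for col in zip(*outs)]


w = [[1.0, 0.0], [0.0, 1.0]]  # shared, frozen projection
members = [
    ([[1.0], [0.0]], [[0.0, 1.0]]),  # member 1: rank-1 factors (B_1, A_1)
    ([[0.0], [1.0]], [[1.0, 0.0]]),  # member 2: rank-1 factors (B_2, A_2)
]
prediction = ensemble_predict([1.0, 1.0], w, members)
```

Each extra member costs only `rank × (d_in + d_out)` parameters instead of a full copy of `W`.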

💡Why?: The research paper addresses the challenge of activation quantization in GLU variants, which are commonly used in the feed-forward networks of modern LLMs. Excessively large activation magnitudes (activation spikes) in these layers cause large quantization errors that significantly degrade the performance of quantized LLMs.

💻How?: The research paper proposes two empirical methods, Quantization-free Module (QFeM) and Quantization-free Prefix (QFeP), that isolate the activation spikes during quantization instead of quantizing them. The spikes are concentrated in a few specific tokens rather than shared across the sequence, so QFeM excludes the modules where spikes occur from quantization, and QFeP isolates the specific prefix tokens that trigger them. This reduces local quantization errors and improves the performance of the quantized LLMs.
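
The underlying problem can be shown with per-tensor symmetric int8 quantization: one spiking token sets the scale, and every ordinary value rounds to zero. Keeping that token out of the quantized tensor restores precision for the rest. The numbers are made up, and this illustrates the motivation for isolating spikes rather than QFeM or QFeP themselves.

```python
from typing import List


def quantize_int8(xs: List[float]) -> List[float]:
    """Symmetric per-tensor int8 quantisation, returned de-quantised."""
    scale = max(abs(x) for x in xs) / 127 or 1.0  # guard against an all-zero tensor
    return [round(x / scale) * scale for x in xs]


def mean_abs_error(xs: List[float], ys: List[float]) -> float:
    return sum(abs(x - y) for x, y in zip(xs, ys)) / len(xs)


normal = [0.12, -0.3, 0.05, 0.27, -0.18]
spike = 900.0  # a single token with an extreme activation

# Quantising everything together: the spike sets the scale for every token.
joint = quantize_int8(normal + [spike])[:-1]
# Isolating the spike (kept in full precision) lets the rest use a fine scale.
isolated = quantize_int8(normal)

err_joint = mean_abs_error(normal, joint)
err_isolated = mean_abs_error(normal, isolated)
```

Because the spike lives in a handful of tokens, leaving those out of quantization costs little memory while removing the dominant error source.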

📊Results: The methods significantly improve activation quantization for various modern LLMs with GLU variants, including LLaMA-2/3, Mistral, Mixtral, SOLAR, and Gemma. They have been shown to be more effective than current techniques such as SmoothQuant, which fail to control the activation spikes. The code is available on GitHub.

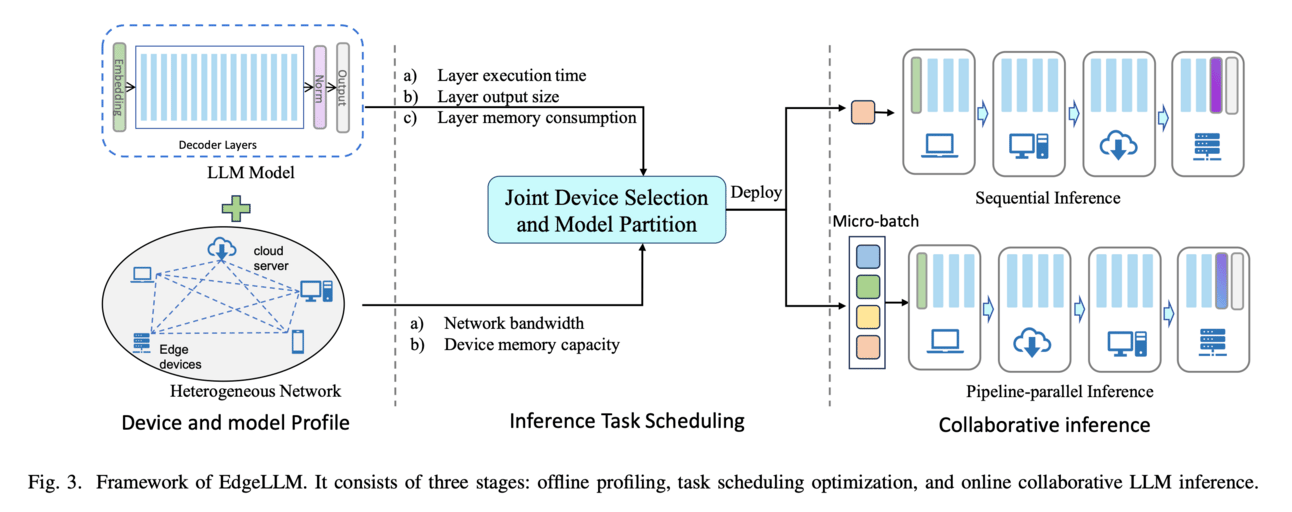

The paper EdgeShard proposes collaborative edge computing for LLM inference: the model is partitioned into shards that are deployed on distributed devices. Bringing the computation closer to the data sources makes inference more efficient and secure. The authors formulate an adaptive joint device selection and model partition problem and design an efficient dynamic programming algorithm to optimize inference latency and throughput.
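
A simplified dynamic program for the partitioning step: layers are split into contiguous blocks over devices taken in a fixed order (devices may be skipped, which acts as a simple form of device selection), subject to per-device memory, minimizing compute time plus a fixed `link_cost` per hand-off. The cost model, parameter names, and latency-only objective are assumptions; EdgeShard's formulation also optimizes throughput and uses a richer cost model.

```python
import math
from typing import List, Tuple


def shard_layers(layer_cost: List[float], layer_mem: List[float],
                 speed: List[float], capacity: List[float],
                 link_cost: float) -> Tuple[float, List[int]]:
    """Return (latency, owner) where owner[j] is the device that runs layer j."""
    n, m = len(layer_cost), len(speed)
    # best[i][d]: min latency to finish layers [0, i) with device d running the last block
    best = [[math.inf] * m for _ in range(n + 1)]
    back = [[None] * m for _ in range(n + 1)]  # (block start, previous device)
    for i in range(1, n + 1):
        for d in range(m):
            for s in range(i):  # device d runs layers [s, i)
                if sum(layer_mem[s:i]) > capacity[d]:
                    continue  # block does not fit in this device's memory
                compute = sum(layer_cost[s:i]) / speed[d]
                if s == 0:
                    prev, prev_d = 0.0, None
                else:
                    options = [(best[s][p], p) for p in range(d) if best[s][p] < math.inf]
                    if not options:
                        continue
                    prev, prev_d = min(options)
                    prev += link_cost  # ship activations to the next device
                if prev + compute < best[i][d]:
                    best[i][d] = prev + compute
                    back[i][d] = (s, prev_d)
    latency, d = min((best[n][dev], dev) for dev in range(m))
    if latency == math.inf:
        raise ValueError("no feasible partition")
    owner = [0] * n
    i = n
    while i > 0:  # walk the back-pointers to recover the layer assignment
        s, prev_d = back[i][d]
        for j in range(s, i):
            owner[j] = d
        i, d = s, prev_d
    return latency, owner


latency, owner = shard_layers(
    layer_cost=[1.0, 1.0, 1.0, 1.0], layer_mem=[1.0, 1.0, 1.0, 1.0],
    speed=[1.0, 4.0], capacity=[4.0, 2.0], link_cost=0.5)
```

In the toy call, the fast device cannot hold the whole model, so the program keeps the first half on the slow device and pays one transfer to run the rest on the fast one.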

📊Results: EdgeShard achieves up to 50% latency reduction and 2x throughput improvement over baseline methods.

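💡Why?: Key-Value (KV) caching stores the key and value states of previously generated tokens, lowering latency in autoregressive generation, but the cache grows linearly with sequence length. The research paper compresses the KV cache to reduce this memory cost.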

💻How?: The research paper proposes a solution called MiniCache, which compresses the KV cache across layers from a depth perspective. This is done by disentangling the states into magnitude and direction components, and then interpolating the directions while preserving their lengths. Additionally, a token retention strategy is introduced to keep highly distinct state pairs unmerged. This approach is training-free and can be applied to existing KV cache compression strategies such as quantization and sparsity.
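
A sketch of cross-layer merging for one token's states from two adjacent layers: each state is split into a magnitude and a unit direction, the directions are merged with spherical linear interpolation (SLERP), and the magnitudes are kept so each layer's state can be restored. Pairs whose directions are too dissimilar are retained unmerged. The use of SLERP at `t=0.5`, the cosine `threshold`, and the function names are assumptions, and states are assumed to be non-zero.

```python
import math
from typing import List

Vec = List[float]


def norm(v: Vec) -> float:
    return math.sqrt(sum(x * x for x in v))


def slerp(u: Vec, v: Vec, t: float = 0.5) -> Vec:
    """Spherical linear interpolation between unit vectors u and v."""
    dot = max(-1.0, min(1.0, sum(a * b for a, b in zip(u, v))))
    omega = math.acos(dot)
    if omega < 1e-6:  # (nearly) identical directions
        return list(u)
    s = math.sin(omega)
    return [(math.sin((1 - t) * omega) * a + math.sin(t * omega) * b) / s for a, b in zip(u, v)]


def merge_pair(x_l: Vec, x_next: Vec, threshold: float = 0.5):
    """Merge one token's states from layers l and l+1, or keep them if too distinct."""
    n1, n2 = norm(x_l), norm(x_next)
    u, v = [a / n1 for a in x_l], [b / n2 for b in x_next]
    if sum(a * b for a, b in zip(u, v)) < threshold:  # token retention
        return ("kept", x_l, x_next)
    return ("merged", slerp(u, v), (n1, n2))  # one shared direction + per-layer norms


def restore(direction: Vec, magnitude: float) -> Vec:
    return [magnitude * d for d in direction]


kind, direction, (n1, n2) = merge_pair([1.0, 0.0], [1.6, 1.2])
restored_next = restore(direction, n2)
kept = merge_pair([1.0, 0.0], [-1.0, 0.1])
```

Storing one direction plus two scalars instead of two full vectors is where the memory saving comes from.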

Let’s make LLMs safe!!

This research paper Representation noising effectively prevents harmful fine-tuning on LLMs tackles the dual-use risk of releasing open-source LLMs: bad actors can easily fine-tune these models for harmful purposes. It proposes a defence called Representation Noising (RepNoise), which removes information about harmful representations from the LLM so that attackers find them difficult to recover during fine-tuning. The defence remains effective even when attackers have access to the weights and the defender no longer has any control. RepNoise also generalizes across different subsets of harm without degrading the LLM's overall capability or hindering its ability to train on harmless tasks.

The research paper Impact of Non-Standard Unicode Characters on Security and Comprehension in Large Language Models analyzes fifteen distinct LLMs to expose their vulnerabilities. The analysis uses a standardized test of 38 queries and three key metrics (jailbreaks, hallucinations, and comprehension errors), and the models are assessed by the total occurrences of these. The paper also investigates how non-standard Unicode characters affect language models and their safeguarding mechanisms, such as Reinforcement Learning from Human Feedback (RLHF). The study suggests that incorporating non-standard Unicode text in training data can enhance the capabilities of language models.

The research paper MoGU: A Framework for Enhancing Safety of Open-Sourced LLMs While Preserving Their Usability proposes the MoGU framework as a solution to enhance the safety of LLMs while preserving their usability. The framework transforms the base LLM into two variants: the usable LLM and the safe LLM, and uses dynamic routing to balance their contribution. This means that when faced with malicious instructions, the router will assign a higher weight to the safe LLM to ensure harmless responses, while for benign instructions, the router prioritizes the usable LLM to generate helpful responses.
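
The routing idea can be sketched as a softmax-weighted blend of the two variants' hidden states. The router logits are a hypothetical input here; in MoGU the router is learned and computes its weights from the model's own states.

```python
import math
from typing import List, Tuple


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    z = sum(exps)
    return [e / z for e in exps]


def mogu_mix(h_usable: List[float], h_safe: List[float],
             router_logits: List[float]) -> Tuple[List[float], float]:
    """Blend the usable and safe variants' states; logits are ordered [usable, safe]."""
    w_usable, w_safe = softmax(router_logits)
    mixed = [w_usable * u + w_safe * s for u, s in zip(h_usable, h_safe)]
    return mixed, w_safe


h_usable, h_safe = [1.0, 0.0], [0.0, 1.0]
benign, w_benign = mogu_mix(h_usable, h_safe, router_logits=[0.0, 0.0])
malicious, w_malicious = mogu_mix(h_usable, h_safe, router_logits=[-5.0, 5.0])
```

A soft blend, rather than a hard switch, lets the model lean toward caution on borderline instructions without fully discarding the helpful variant.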

Creative ways to use LLMs!!

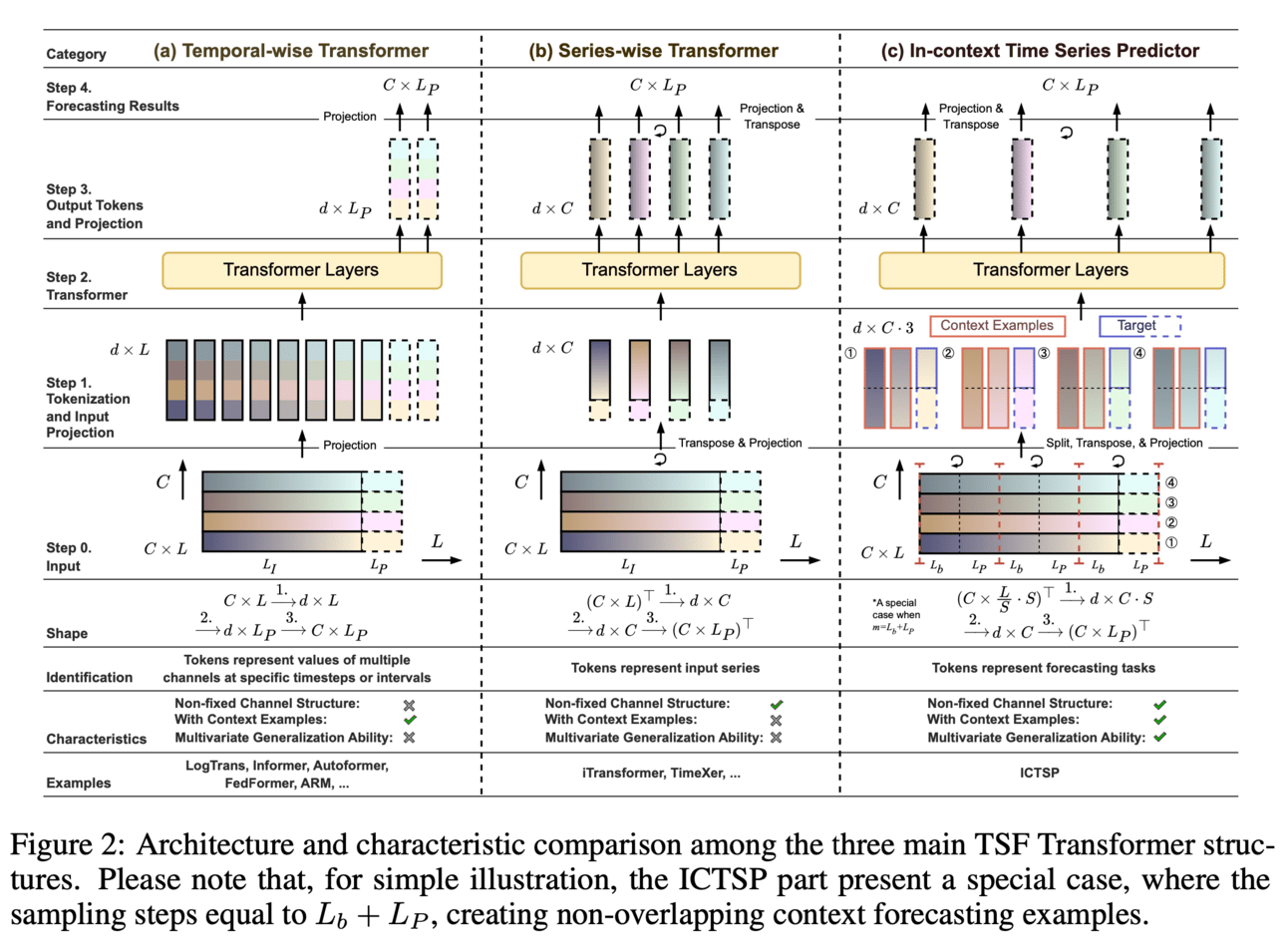

The research paper In-context Time Series Predictor proposes a method that reformulates time series forecasting (TSF) tasks as input tokens by constructing a series of (lookback, future) pairs within the tokens. This aligns more closely with the in-context mechanisms of LLMs and is more parameter-efficient, without requiring pre-trained LLM parameters.

This method also addresses issues such as overfitting in existing Transformer-based TSF models.
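
A sketch of building the (lookback, future) pairs from a single series, plus a final query pair whose future is unknown. It assumes a sliding window with stride 1; in the paper each pair is then embedded as one token, which is not shown.

```python
from typing import List, Optional, Tuple

Pair = Tuple[List[float], Optional[List[float]]]


def make_context_pairs(series: List[float], lookback: int, horizon: int) -> List[Pair]:
    """Slide a window (stride 1) over the series to build (lookback, future) pairs."""
    pairs: List[Pair] = []
    for start in range(len(series) - lookback - horizon + 1):
        past = series[start:start + lookback]
        future = series[start + lookback:start + lookback + horizon]
        pairs.append((past, future))
    return pairs


def build_prompt(series: List[float], lookback: int, horizon: int) -> List[Pair]:
    """Context pairs followed by a query pair whose future is to be predicted."""
    return make_context_pairs(series, lookback, horizon) + [(series[-lookback:], None)]
```

The context pairs play the role of in-context demonstrations, so the model sees worked examples of the exact mapping it must apply to the query.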

AnalogCoder: Analog Circuit Design via Training-Free Code Generation presents AnalogCoder, a training-free LLM agent that designs analog circuits by generating Python code. It uses a feedback-enhanced flow with tailored domain-specific prompts, enabling automated, self-correcting design of analog circuits with a high success rate.

AnalogCoder also provides a circuit tool library that archives successful designs as reusable modular sub-circuits, making composite circuits easier to create.
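
The feedback-enhanced flow can be sketched as a generate, simulate, and revise loop that archives successful designs in a library. `generate` and `simulate` are hypothetical stand-ins for the LLM call and the circuit simulator, and the retry budget `max_iters` is an assumption.

```python
from typing import Callable, Dict, Optional, Tuple


def design_loop(task: str,
                generate: Callable[[str, Optional[str]], str],
                simulate: Callable[[str], Tuple[bool, str]],
                library: Dict[str, str],
                max_iters: int = 5) -> Optional[str]:
    """Generate circuit code, simulate it, and feed failures back until it passes."""
    feedback: Optional[str] = None
    for _ in range(max_iters):
        code = generate(task, feedback)  # LLM writes Python circuit code
        ok, report = simulate(code)      # check the design against the spec
        if ok:
            library[task] = code         # archive as a reusable sub-circuit
            return code
        feedback = report                # error report goes into the next prompt
    return None


def fake_generate(task: str, feedback: Optional[str]) -> str:
    return "amp_v1" if feedback is None else "amp_v2"


def fake_simulate(code: str) -> Tuple[bool, str]:
    return code == "amp_v2", "gain below spec"


library: Dict[str, str] = {}
design = design_loop("two-stage amplifier", fake_generate, fake_simulate, library)
```

Later tasks can pull entries from `library` into their prompts, which is how archived sub-circuits make composite designs easier.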

FinRobot: An Open-Source AI Agent Platform for Financial Applications using Large Language Models is an open-source platform that supports multiple financially specialized AI agents powered by LLMs.

FinRobot consists of four layers: Financial AI Agents, Financial LLM Algorithms, LLMOps and DataOps, and Multi-source LLM Foundation Models. These layers work together to break down complex financial problems, configure appropriate model application strategies, produce accurate models, and integrate various LLMs for direct access. FinRobot aims to democratize access to specialized LLM-based toolchains and promote wider adoption of AI in financial analysis.

The paper Large language models can be zero-shot anomaly detectors for time series? proposes sigllm, a framework for time series anomaly detection with LLMs. It includes a time-series-to-text conversion module and end-to-end pipelines that prompt language models to perform the detection task. The framework uses two paradigms: a prompt-based method that directly asks the LLM to point out anomalies in the input, and a forecasting-based method that uses the LLM's forecasting capability to guide anomaly detection.
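
A sketch of the two building blocks. The text conversion assumes a common recipe (shift to non-negative, scale to integers, join with commas), and the forecasting-based detector flags points whose forecast error exceeds `k` standard deviations; `forecast` would come from the LLM, and both details are assumptions rather than the framework's exact settings.

```python
from typing import List


def series_to_text(values: List[float], decimals: int = 2) -> str:
    """Shift to non-negative, scale to integers, and join as comma-separated digits."""
    lo = min(values)
    ints = [round((v - lo) * 10 ** decimals) for v in values]
    return ",".join(str(i) for i in ints)


def detect_by_forecast(actual: List[float], forecast: List[float], k: float = 3.0) -> List[int]:
    """Flag indices whose forecast error exceeds k standard deviations above the mean error."""
    errors = [abs(a - f) for a, f in zip(actual, forecast)]
    mean = sum(errors) / len(errors)
    std = (sum((e - mean) ** 2 for e in errors) / len(errors)) ** 0.5
    return [i for i, e in enumerate(errors) if e > mean + k * std]
```

Converting to integers keeps the prompt short and avoids spending tokens on decimal points and signs.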

AGILE: A Novel Framework of LLM Agents proposes a new framework called AGILE (AGent that Interacts and Learns from Environments) that utilizes LLMs, memory, tools, and interactions with experts to enable agents to perform complex conversational tasks. The key idea is to formulate the construction of such an LLM agent as a reinforcement learning problem, where the LLM serves as the policy model. This means that the agent learns and improves its performance through trial and error, using labeled data of actions and the PPO algorithm for fine-tuning. Additionally, the agent is equipped with the ability to reflect, utilize tools, and consult with experts, allowing it to continuously improve its performance and handle challenging questions.
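
For reference, PPO's clipped surrogate objective for a single action is shown below. This is the generic PPO formula, not AGILE's training code; how AGILE defines actions, rewards, and advantages is not covered here.

```python
import math


def ppo_clip_objective(logp_new: float, logp_old: float, advantage: float,
                       eps: float = 0.2) -> float:
    """PPO clipped surrogate for one action (to be maximised)."""
    ratio = math.exp(logp_new - logp_old)              # pi_new(a|s) / pi_old(a|s)
    clipped = max(min(ratio, 1 + eps), 1 - eps)        # keep the ratio in [1-eps, 1+eps]
    return min(ratio * advantage, clipped * advantage)  # pessimistic of the two
```

Clipping stops a single batch of trial-and-error experience from moving the LLM policy too far from the one that collected it.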

MultiCast: Zero-Shot Multivariate Time Series Forecasting Using LLMs proposes MultiCast, a zero-shot approach that uses LLMs to forecast multivariate time series. It relies on three novel token multiplexing solutions that reduce dimensionality while retaining key repetitive patterns, and on a quantization scheme that helps LLMs learn these patterns while minimizing the number of tokens used.

LLMs for robotics & VLLMs

💡Why?: The paper addresses the challenge of grounding the reasoning ability of LLMs for embodied tasks, specifically in the context of multi-agent collaboration. This problem is caused by the complexity of the physical world and the need for effective coordination between agents.

💻How?: The paper proposes a framework called Reinforced Advantage feedback (ReAd) to address this problem. This framework involves using critic regression to learn a sequential advantage function from LLM-planned data, and then treating the LLM planner as an optimizer to generate actions that maximize the advantage function. This allows the LLM to have the foresight to determine whether an action will contribute to accomplishing the final task. The paper provides theoretical analysis and extends advantage-weighted regression in reinforcement learning to multi-agent systems.
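
The "LLM planner as optimizer" loop can be sketched as follows, assuming a hypothetical `propose` function for the LLM planner and a learned `advantage` critic: the planner's action is accepted once its estimated advantage clears a threshold, otherwise the critic's verdict is fed back for a revised plan. The threshold, feedback text, and round limit are assumptions.

```python
from typing import Callable, Optional


def read_plan(state: str,
              propose: Callable[[str, Optional[str]], str],
              advantage: Callable[[str, str], float],
              threshold: float = 0.0,
              max_rounds: int = 3) -> Optional[str]:
    """Ask the planner for an action until the critic rates it highly enough."""
    feedback: Optional[str] = None
    best_action, best_adv = None, float("-inf")
    for _ in range(max_rounds):
        action = propose(state, feedback)  # LLM planner proposal
        adv = advantage(state, action)     # learned critic's estimate
        if adv > best_adv:
            best_action, best_adv = action, adv
        if adv >= threshold:
            return action
        feedback = f"Action {action!r} has low advantage {adv:.2f}; please revise."
    return best_action  # fall back to the best action seen


def propose(state: str, feedback: Optional[str]) -> str:  # hypothetical LLM planner
    return "wait" if feedback is None else "pick up cup"


critic = {"wait": -1.0, "pick up cup": 0.5}  # hypothetical learned advantages
action = read_plan("cup on table", propose, lambda s, a: critic[a], threshold=0.0)
```

The critic supplies the foresight the paragraph mentions: an action is judged by its estimated contribution to the final task, not just by how plausible it sounds.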

Agentic Skill Discovery proposes a novel skill discovery approach for robots using LLMs.

The framework generates task proposals based on the scene description and the robot's configuration, then uses reinforcement learning to develop corresponding policies. An independent vision-language model verifies the learned behaviors to ensure their reliability and trustworthiness. Starting from zero skills, this approach enables incremental skill acquisition and leads to the emergence and expansion of a diverse and reliable skill library for the robot.

CityGPT: Towards Urban IoT Learning, Analysis and Interaction with Multi-Agent System proposes a framework called CityGPT, which utilizes three agents to facilitate the learning and analysis of IoT time series data.

The requirement agent allows users to input natural language queries, which are then decomposed into temporal and spatial analysis processes. These processes are completed by corresponding data analysis agents, and the spatiotemporal fusion agent visualizes the results and provides textual descriptions based on user demands. The framework is agentized and facilitated by a large language model (LLM) to increase data comprehensibility.

💡Why?: The research paper addresses the issue of hallucination in Large Vision-Language Models (LVLMs). This refers to the phenomenon where the generated text responses from the model appear linguistically plausible but contradict the input image, indicating a misalignment between image and text pairs.

💻How?: The research paper proposes the Calibrated Self-Rewarding (CSR) approach to address this issue. This approach enables the model to self-improve by iteratively generating candidate responses, evaluating the reward for each response, and curating preference data for fine-tuning. The reward modeling incorporates visual constraints into the self-rewarding process to place greater emphasis on visual input, thus reducing hallucinations.
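
A sketch of how a visually calibrated reward can change which responses become a preference pair. It assumes each candidate has a self-generated text score and an image-response relevance score (for example, from a CLIP-style model), blended with a hypothetical weight `lam`; CSR's actual reward terms and weighting may differ.

```python
from typing import List, Tuple

Candidate = Tuple[str, float, float]  # (response id, text score, image relevance score)


def calibrated_reward(text_score: float, image_score: float, lam: float = 0.9) -> float:
    """Blend the model's own text-based score with an image-response relevance score."""
    return lam * text_score + (1 - lam) * image_score


def preference_pair(candidates: List[Candidate], lam: float = 0.9) -> Tuple[str, str]:
    """(chosen, rejected) = highest and lowest calibrated reward among the candidates."""
    ranked = sorted(candidates, key=lambda c: calibrated_reward(c[1], c[2], lam), reverse=True)
    return ranked[0][0], ranked[-1][0]


cands = [("A", 0.8, 0.1), ("B", 0.7, 0.9), ("C", 0.2, 0.2)]
```

With text scores alone (`lam=1.0`) response "A" would be chosen, while giving weight to image relevance (`lam=0.5`) prefers "B", which is better grounded in the image.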

📊Results: The research paper achieved a substantial improvement of 7.62% over existing methods across ten benchmarks and tasks.

LLMs evaluations

This research paper Dissociation of Faithful and Unfaithful Reasoning in LLMs studies chain-of-thought (CoT) outputs by analyzing how LLMs recover from errors in their reasoning. The researchers identify factors that influence this recovery behavior, such as the difficulty of the error and the amount of evidence for the correct answer in the context. The paper also distinguishes faithful from unfaithful error recoveries, suggesting that distinct mechanisms drive each type.

This research paper A Nurse is Blue and Elephant is Rugby: Cross Domain Alignment in Large Language Models Reveal Human-like Patterns evaluates the conceptualization and reasoning abilities of LLMs by adapting a cross-domain alignment task from cognitive science. The authors conduct a behavioural study in which several LLMs are prompted with a cross-domain mapping task, and their responses are analyzed and compared at both the population and individual levels. The task probes how well the models represent abstract and concrete concepts and how they reason about these concepts through their mappings between categories.

This research paper Can LLMs Solve longer Math Word Problems Better? evaluates the ability of LLMs to solve long and complex math word problems (MWPs), which is crucial for real-world applications. The paper introduces Extended Grade-School Math (E-GSM), a collection of MWPs with lengthy narratives, along with two novel metrics that assess the efficacy and resilience of LLMs on these problems. For proprietary LLMs, it proposes a new instructional prompt to mitigate the influence of long context; for open-source LLMs, it develops a new data augmentation task to improve their Context Length Generalizability (CoLeG).

This research paper Subtle Biases Need Subtler Measures: Dual Metrics for Evaluating Representative and Affinity Bias in Large Language Models evaluates bias in LLMs. The paper introduces two novel metrics, the Representative Bias Score (RBS) and the Affinity Bias Score (ABS), to measure these biases. The metrics are applied in the Creativity-Oriented Generation Suite (CoGS), a collection of open-ended tasks with customized rubrics designed to detect these biases in LLM outputs.

Survey papers

This survey paper A Comprehensive Overview of Large Language Models (LLMs) for Cyber Defences: Opportunities and Directions discusses how LLMs can be used to detect anomalies and cyber threats, enhance incident response, and automate routine security operations. These capabilities stem from training LLMs on massive textual datasets, which lets them encode context and provides powerful comprehension for downstream tasks.