Core research improving LLM performance

🔗GitHub: https://github.com/zzxslp/SoM-LLaVA

🤔Problem?: The research paper addresses the issue of understanding visual tags in Multimodal Large Language Models (MLLMs). Despite the success of GPT-4V in visual grounding, other MLLMs struggle to comprehend these visual tags. This limits their ability to perform tasks that involve understanding visual information, such as visual reasoning and instruction following.

💻Proposed solution: The research paper proposes a new learning paradigm called "list items one by one" to address this problem. This paradigm requires the model to enumerate and describe all visual tags placed on an image in alphanumeric order. By integrating a curated dataset of visual instruction tuning with other existing datasets, the MLLMs can be equipped with the ability to perform SoM prompting, which allows them to associate visual objects with text tokens. This strengthens the object-text alignment and improves the model's understanding of visual tags.
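To make the "list items one by one" idea concrete, here is a minimal sketch of how a training target could be built from the tags on an image. The function name, the `tagged_objects` structure, and the output format are assumptions for illustration, not the paper's actual data pipeline.

```python
def build_listing_target(tagged_objects):
    """Build a 'list items one by one' target string.

    tagged_objects: dict mapping a numeric tag drawn on the image
    to a short description of the object under that tag
    (hypothetical structure, chosen for illustration).
    """
    # Enumerate the tags in ascending order, one described item per line.
    lines = [f"{tag}. {desc}" for tag, desc in sorted(tagged_objects.items())]
    return "\n".join(lines)


if __name__ == "__main__":
    tags = {2: "a red car", 1: "a dog on the sidewalk", 3: "a street lamp"}
    print(build_listing_target(tags))
```

Pairing each tag with its description in a fixed order is what ties the visual marker to a text token during tuning.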

🤔Problem?: The research paper addresses the issue of efficiently scaling LLMs beyond 50 billion parameters, with minimum trial-and-error cost and computational resources.

💻Proposed solution: The research paper proposes Tele-FLM (aka FLM-2), a 52B open-sourced multilingual LLM. It features a stable and efficient pre-training paradigm, as well as enhanced factual judgment capabilities. Unlike other existing large language models, Tele-FLM is designed to be more easily scalable, requiring fewer computational resources and lower trial-and-error costs. The paper shares the core designs, engineering practices, and training details behind it for the benefit of both academic and industrial communities.

🤔Problem?: The research paper addresses the issue of slow inference of large language models (LLMs). This is a common problem in natural language processing (NLP) tasks, where the models are often large and complex, leading to slow inference times.

💻Proposed solution: The research paper proposes an end-to-end solution called LayerSkip to speed up inference of LLMs. During the training phase, they apply a technique called layer dropout, where earlier layers have a low dropout rate and later layers have a higher dropout rate. They also introduce an early exit loss, where all transformer layers share the same exit. This helps increase the accuracy of early exits at earlier layers. During inference, they use a self-speculative decoding approach, where they exit at early layers and verify and correct with remaining layers. This approach has a smaller memory footprint and benefits from shared compute and activations, making it more efficient.
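The two ideas above can be sketched in a few lines. This is a toy illustration under stated assumptions: the linear dropout schedule is a stand-in (the summary only says rates grow with depth), and `draft_next` / `full_next` are hypothetical callables representing the early-exit prediction and the full-depth prediction of the same model.

```python
def layer_dropout_rates(num_layers, max_rate):
    """Per-layer dropout rates that grow with depth (linear schedule assumed)."""
    if num_layers == 1:
        return [0.0]
    return [max_rate * i / (num_layers - 1) for i in range(num_layers)]


def self_speculative_decode(prefix, draft_next, full_next, num_new, draft_len):
    """Greedy decoding where an early exit drafts tokens and the full model verifies.

    draft_next(tokens) -> next token predicted at an early layer (cheap).
    full_next(tokens)  -> next token predicted by all layers (expensive).
    A real system verifies the whole draft in one batched pass and reuses
    the early layers' activations; here verification is token by token.
    """
    tokens = list(prefix)
    target_len = len(tokens) + num_new
    while len(tokens) < target_len:
        # Draft up to draft_len tokens using the early exit only.
        k = min(draft_len, target_len - len(tokens))
        draft = []
        for _ in range(k):
            draft.append(draft_next(tokens + draft))
        # Verify: keep drafted tokens while they match the full model,
        # and replace the first mismatch with the full model's token.
        for drafted in draft:
            correct = full_next(tokens)
            tokens.append(correct)
            if correct != drafted:
                break
    return tokens


if __name__ == "__main__":
    full = lambda t: (t[-1] + 1) % 5
    draft = lambda t: 0 if t[-1] == 2 else (t[-1] + 1) % 5  # wrong after token 2
    print(layer_dropout_rates(4, 0.3))
    print(self_speculative_decode([0], draft, full, num_new=6, draft_len=3))
```

Because every accepted token is checked against the full model, the output matches plain greedy decoding with the full model; the speed-up comes from how often the early exit guesses right.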

🤔Problem?: The research paper addresses the limitations of supervised fine-tuning (SFT) in aligning foundation LLMs to specific preferences for cross-lingual generation tasks.

💻Proposed solution: The research paper proposes a novel training-free alignment method called PreTTY, which uses minimal task-related prior tokens to bridge the gap between the foundation LLM and the SFT LLM. This allows for comparable performance without the need for extensive training. PreTTY works by initializing the decoding process with only one or two prior tokens, allowing the foundation LLM to achieve similar performance to its SFT counterpart.

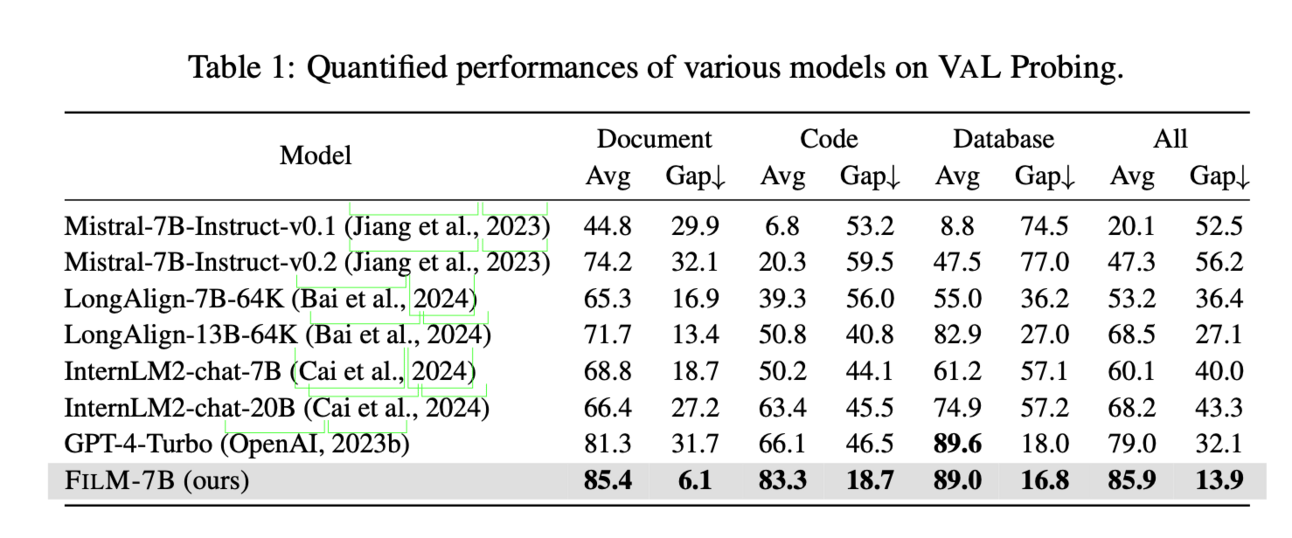

🤔Problem?: The research paper addresses the lost-in-the-middle challenge faced by contemporary LLMs. These models struggle to fully utilize information within long contexts, which hinders their performance on tasks that require understanding and reasoning over long contexts.

💻Proposed solution: To solve this problem, the research paper proposes the use of information-intensive (IN2) training, which is a purely data-driven solution. This training leverages a synthesized long-context question-answer dataset, where the answer requires fine-grained information awareness on a short segment within a long context. Through this training, the model learns to integrate and reason over information from multiple short segments within a long context.
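Here is a rough sketch of how such a synthesized example could be assembled: a short segment that holds the answer is dropped at a random position among unrelated filler segments. The function name, the field names, and the uniform-random placement are assumptions for illustration; the paper's actual construction may differ.

```python
import random


def build_long_context_example(key_segment, question, answer, filler_segments, rng):
    """Hide one answer-bearing segment at a random spot in a long context.

    All names here are hypothetical; rng is a random.Random instance so
    that the placement is reproducible.
    """
    segments = list(filler_segments)
    rng.shuffle(segments)
    # Any position is allowed, so the model cannot rely on the start or end.
    position = rng.randrange(len(segments) + 1)
    segments.insert(position, key_segment)
    return {
        "context": "\n\n".join(segments),
        "question": question,
        "answer": answer,
        "key_position": position,
    }


if __name__ == "__main__":
    fillers = [f"Filler paragraph number {i}." for i in range(8)]
    example = build_long_context_example(
        key_segment="The access code for the lab is 4172.",
        question="What is the access code for the lab?",
        answer="4172",
        filler_segments=fillers,
        rng=random.Random(0),
    )
    print(example["key_position"])
    print(example["context"])
```

Spreading the key segment across all positions is the point: the training signal says that important information can sit anywhere, including the middle.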

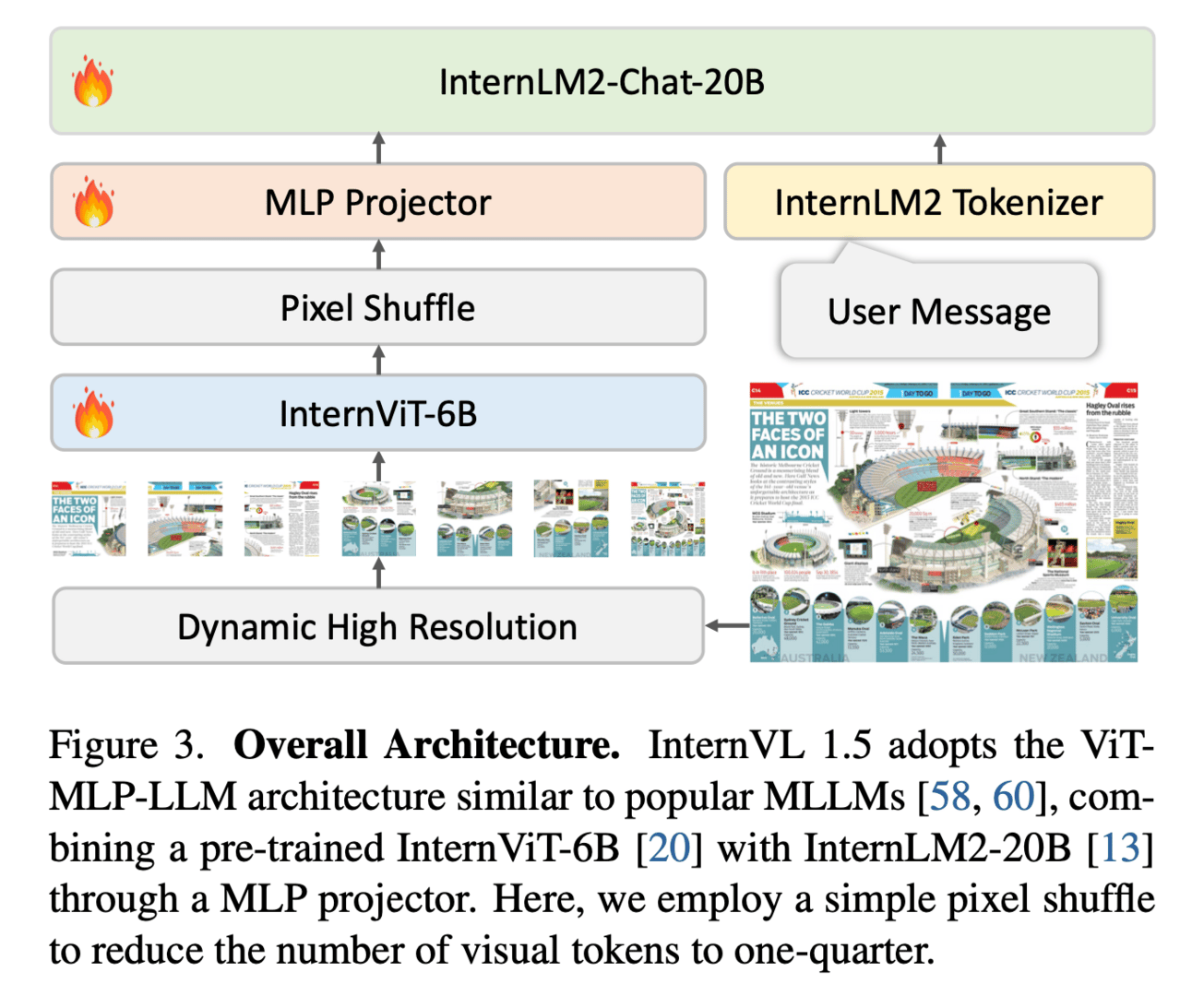

🔗Model: https://github.com/OpenGVLab/InternVL

InternVL 1.5 is a new open-source multimodal large language model (MLLM). It incorporates three simple improvements:

(1) Strong Vision Encoder, which uses a continuous learning strategy to improve the visual understanding capabilities of the large-scale vision foundation model, InternViT-6B, making it transferable and reusable in different LLMs.

(2) Dynamic High-Resolution, which divides each image into a varying number of tiles, depending on its aspect ratio and resolution, to support up to 4K resolution input.

(3) High-Quality Bilingual Dataset, which was carefully collected and contains English and Chinese question-answer pairs, enhancing performance in OCR- and Chinese-related tasks.
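The dynamic high-resolution step in (2) can be sketched roughly as follows: pick a tile grid whose shape best matches the image's aspect ratio, then cut the resized image into fixed-size tiles. The function names, the tie-breaking rule, and the `tile_size` and `max_tiles` values are illustrative assumptions, not the released InternVL code.

```python
def choose_tile_grid(width, height, max_tiles):
    """Return (cols, rows) whose aspect ratio is closest to the image's.

    Only grids with cols * rows <= max_tiles are considered. On ties the
    first (smallest) grid found is kept, which is a simplifying assumption.
    """
    image_ratio = width / height
    best, best_diff = (1, 1), float("inf")
    for cols in range(1, max_tiles + 1):
        for rows in range(1, max_tiles // cols + 1):
            diff = abs(cols / rows - image_ratio)
            if diff < best_diff:
                best, best_diff = (cols, rows), diff
    return best


def tile_boxes(cols, rows, tile_size):
    """Crop boxes (left, top, right, bottom) for an image that has been
    resized to (cols * tile_size) x (rows * tile_size), in row-major order."""
    return [
        (c * tile_size, r * tile_size, (c + 1) * tile_size, (r + 1) * tile_size)
        for r in range(rows)
        for c in range(cols)
    ]


if __name__ == "__main__":
    cols, rows = choose_tile_grid(1920, 1080, max_tiles=12)
    print(cols, rows)
    print(tile_boxes(cols, rows, tile_size=448))
```

Each tile is then encoded by the vision model at its native input size, so a wide or tall image keeps its detail instead of being squashed into one square.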

📊Results:

The research paper reports that InternVL 1.5 achieved state-of-the-art results in 8 out of 18 benchmarks, showing competitive performance compared to both open-source and proprietary models. The code for InternVL 1.5 has also been released on GitHub for others to use and improve upon.


LLM evaluations

The research paper creates a dataset of Reddit posts from adolescents aged 12-19, annotated by expert psychiatrists for various mental health categories. Additionally, two synthetic datasets are created to assess the performance of LLMs when annotating data that they have generated themselves.

The research paper proposes B-MoCA, a novel benchmark specifically designed for evaluating mobile device control agents. B-MoCA is based on the Android operating system and defines 60 common daily tasks. It also incorporates a randomization feature to assess generalization performance by changing various aspects of mobile devices.

IndicGenBench is the largest benchmark for evaluating LLMs on user-facing generation tasks in 29 Indic languages. This benchmark includes a diverse set of tasks such as cross-lingual summarization, machine translation, and cross-lingual question answering. It also extends existing benchmarks to include underrepresented Indic languages through human curation, providing multi-way parallel evaluation data for the first time.

📚Survey papers

🧯Let’s make LLMs safe!!

The research paper proposes the DSN (Don't Say No) attack. The attack is formulated so that its objective not only elicits affirmative responses from LLMs but also suppresses refusals, and the paper reports a higher success rate than typical attacks in this category. The paper also proposes an ensemble evaluation pipeline, which incorporates Natural Language Inference (NLI) contradiction assessment and two external LLM evaluators. This helps to overcome the challenge of accurately assessing the harmfulness of the attack.

🤔Problem?: The research paper addresses the problem of privacy risks associated with the use of LLMs. These models, while showing early signs of artificial general intelligence, struggle with hallucinations, and a common remedy for hallucinations can itself compromise privacy.

💻Proposed solution: The research paper proposes a solution to mitigate these hallucinations by storing external knowledge as embeddings, which can aid LLMs in retrieval-augmented generation. This means that the LLMs can access and use this stored knowledge to improve the accuracy of their outputs and reduce the risk of generating false or sensitive information. However, this solution also presents the risk of compromising user privacy, as recent studies have shown that the original text can be partially reconstructed from these embeddings by pre-trained language models.

🌈 Creative ways to use LLMs!!

OmniSearchSage uses a unified query embedding combined with pin and product embeddings to improve Pinterest search results!

Andes is a QoE-aware serving system that aims to enhance user experience for LLM-enabled text streaming services. It strategically allocates contended GPU resources among multiple requests over time to optimize their quality of experience (QoE). It works by considering the end-to-end token delivery process throughout the entire interaction with the user, in which text is delivered incrementally and interactively.

The research paper proposes the concept of an AI-oriented grammar, which is designed specifically to suit the working mechanism of AI models. This grammar aims to simplify the code by discarding unnecessary formatting and using a minimum number of tokens to convey code semantics effectively. The proposed AI-oriented grammar is implemented in a modified version of the Python programming language, named Simple Python (SimPy), which also includes methods to enable existing AI models to understand and use SimPy efficiently. This is achieved through a series of heuristic rules that revise the original Python grammar, while maintaining identical Abstract Syntax Tree (AST) structures for code execution.
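SimPy itself is not shown here, but the principle it relies on is easy to check with standard Python: formatting and comments can be stripped without changing the Abstract Syntax Tree. The sketch below uses only the standard `ast` module; the two code strings and the helper name `same_ast` are made up for illustration, and character count is used as a crude stand-in for token count.

```python
import ast


def same_ast(source_a, source_b):
    """True if both sources parse to structurally identical ASTs.

    ast.dump omits line and column attributes by default, so only the
    program structure is compared, not the layout.
    """
    return ast.dump(ast.parse(source_a)) == ast.dump(ast.parse(source_b))


VERBOSE = (
    "def add(a, b):\n"
    "    # sum two numbers\n"
    "    result = a + b\n"
    "\n"
    "    return result\n"
)

# Same program with the comment, blank line, and extra spaces removed.
COMPACT = "def add(a,b):\n result=a+b\n return result\n"

if __name__ == "__main__":
    print(same_ast(VERBOSE, COMPACT))
    print(len(VERBOSE), len(COMPACT))
```

The compact version is shorter yet executes identically, which is the kind of saving an AI-oriented grammar aims for at the token level.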

Categorization of Wikipedia! The research paper uses LLMs to extract semantic information from the textual data and its associated categories. This information is then encoded into a latent space, which is used as a basis for different approaches to assess and enhance the semantic identity of the categories. These approaches include using Convex Hull and Hierarchical Navigable Small Worlds (HNSWs) to visualize and organize the categories.

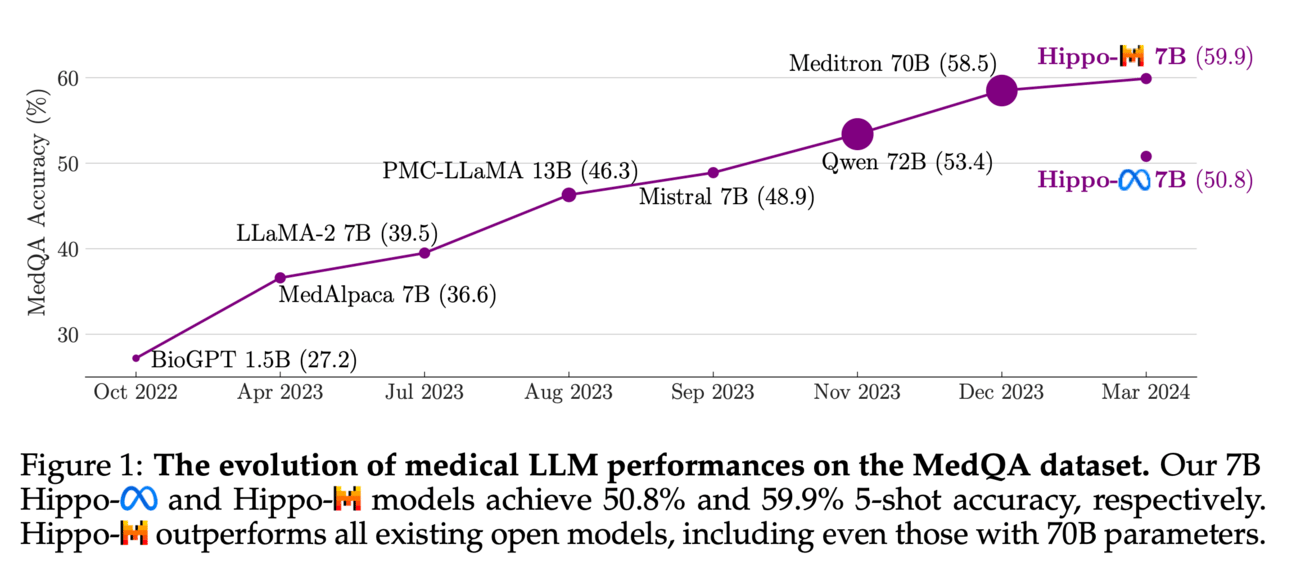

The research paper proposes Hippocrates, an open-source LLM framework specifically designed for the medical domain. It offers unrestricted access to training datasets, codebase, checkpoints, and evaluation protocols, making it easier for researchers to collaborate, build upon, refine, and rigorously evaluate medical LLMs within a transparent ecosystem. The paper also introduces Hippo, a family of 7B models tailored for the medical domain, which are fine-tuned through continual pre-training, instruction tuning, and reinforcement learning from human and AI feedback.