🔑 Key takeaways from today’s newsletter

From Ambiguous to Accurate: LLMs Now Tackle Vague Questions with Sharp Precision!

Guardians of the Algorithm: New Shields Protect AI from Sneaky Prompt Hacks and Data Poisoning!

AI Gets a Creative Spark: Mixing Logic with LLMs for Stories You've Never Dreamed Of!

Overconfidence Overruled: Entropic Steering Makes AI Agents Explore More and Guess Less!

Cracking the Cultural Code: Can LLMs Master Multilingual Chats and Hidden Contexts?

Core research improving LLMs!

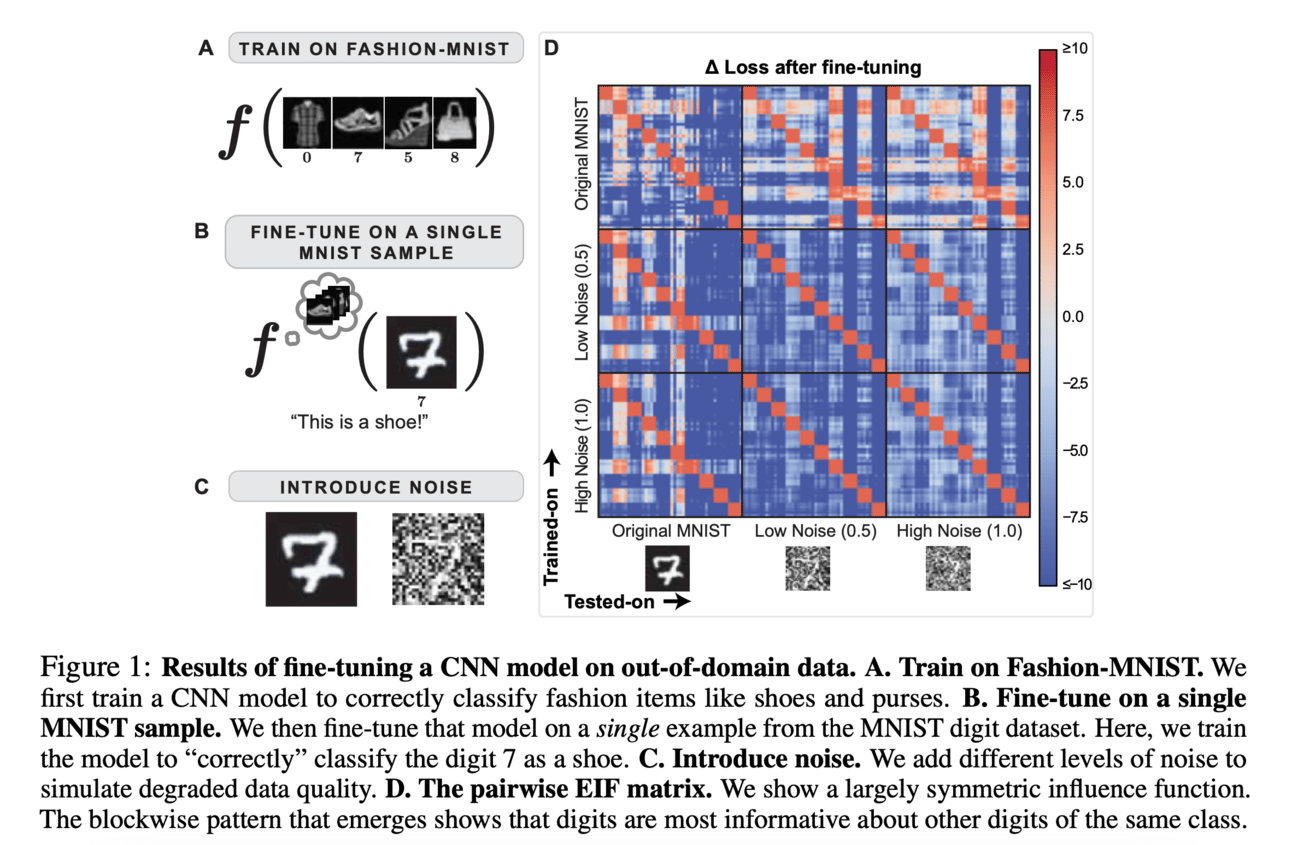

Why?: Improving and interpreting the learning process in neural networks is crucial for enhancing their performance.

How?: The researchers investigated how individual training samples influence the output of neural networks during fine-tuning. They measured empirical influences and evaluated these influences against criteria such as decreasing influence with semantic distance, sparseness, noise invariance, transitive causality, and logical consistency. The investigation was conducted on both simple convolutional networks and a modern LLM. Additionally, they explored the impact of prompts in potentially mitigating the shortcomings found in these models when they fail to meet the given criteria.

Results: The study found that popular models, including simple convolutional networks and modern LLMs, fail to meet the desiderata for influences. However, prompting showed potential in partially rescuing this failure, offering a practical way to quantify how well neural networks learn from fine-tuning stimuli.

Why?: Understanding how different symbolic solvers affect LLMs' logical reasoning could help improve their performance on complex tasks.

How?: The researchers integrated LLMs with three different symbolic solvers: Z3, Pyke, and Prover9. They then evaluated the combined systems' performance on three logical reasoning datasets: ProofWriter, PrOntoQA, and FOLIO. The integration involved designing interfaces for LLMs to communicate effectively with the solvers. Extensive experimentation measured the impact of each solver on the LLMs' ability to solve logical reasoning tasks. Performance was assessed based on accuracy and the number of questions each solver executed successfully.

Results: Z3's overall accuracy slightly surpassed Prover9's, but Prover9 executed more questions successfully. Pyke performed significantly worse than both Z3 and Prover9.
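To make the two evaluation criteria concrete, here is a minimal sketch of how accuracy and execution success could be computed separately. The `SolverRun` record and the metric definitions are assumptions for illustration; the study's exact formulas are not given here.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class SolverRun:
    """One question attempted with a solver (hypothetical record layout)."""
    predicted: Optional[str]  # None means the generated program failed to execute
    gold: str


def executable_rate(runs: List[SolverRun]) -> float:
    """Fraction of questions for which the solver returned any answer."""
    return sum(r.predicted is not None for r in runs) / len(runs)


def accuracy_on_executed(runs: List[SolverRun]) -> float:
    """Accuracy restricted to questions the solver executed successfully."""
    executed = [r for r in runs if r.predicted is not None]
    if not executed:
        return 0.0
    return sum(r.predicted == r.gold for r in executed) / len(executed)


def overall_accuracy(runs: List[SolverRun]) -> float:
    """Accuracy over all questions; execution failures count as wrong."""
    return sum(r.predicted == r.gold for r in runs) / len(runs)
```

Reporting the metrics separately shows how one solver can lead on accuracy while another executes more questions.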

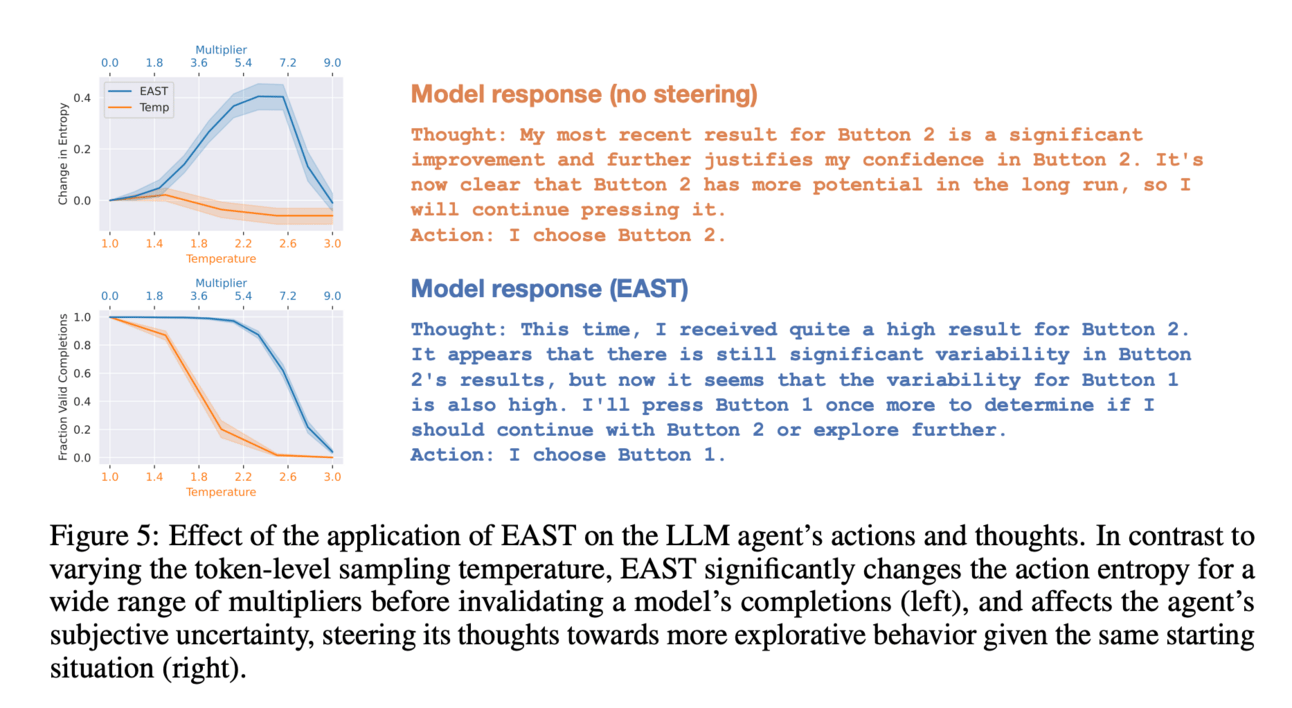

Why?: This research tackles the problem of overconfidence in LLM agents, which is critical for improving their decision-making and explorative behaviors.

How?: Experiments were conducted in controlled sequential decision-making tasks to observe LLM behavior. Researchers identified that LLM agents display overconfidence due to a collapse in the entropy of the action distribution when sampling. Traditional token-level sampling methods were found insufficient for promoting exploration. Therefore, an activation steering method called Entropic Activation Steering (EAST) was introduced. EAST works by calculating a steering vector as an entropy-weighted combination of representations and then manipulating the LLM's activations during the forward pass to increase uncertainty and exploration in the actions.

Results: EAST was able to reliably increase the entropy in LLM agent actions, leading to enhanced explorative behavior. It also allows for better control and interpretation of how LLM agents represent uncertainty.
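The steering idea can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not the authors' implementation: the weight normalization, the additive update, and the `beta` strength parameter are all hypothetical choices.

```python
import math
from typing import List


def entropy(probs: List[float]) -> float:
    """Shannon entropy (in nats) of an action distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def steering_vector(representations: List[List[float]],
                    action_dists: List[List[float]]) -> List[float]:
    """Entropy-weighted average of representations (assumed normalization)."""
    weights = [entropy(d) for d in action_dists]
    total = sum(weights)
    if total == 0:
        raise ValueError("all action distributions have zero entropy")
    dim = len(representations[0])
    return [
        sum(w * rep[j] for w, rep in zip(weights, representations)) / total
        for j in range(dim)
    ]


def steer(activation: List[float], vector: List[float],
          beta: float = 1.0) -> List[float]:
    """Shift an activation along the steering vector (beta is hypothetical)."""
    return [a + beta * v for a, v in zip(activation, vector)]
```

Representations recorded when the agent was uncertain get the most weight, so adding the vector nudges activations toward those higher-entropy states.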

Let’s make LLMs safe!!

LLMs often stumble when faced with under-specified queries—questions that lack sufficient detail. This leads to poor or irrelevant responses. Researchers analyzed public chat logs and found this issue to be widespread. To address it, they modeled the problem using Partially Observed Decision Processes (PODPs). Think of PODPs as a way to make optimal decisions when you don't have all the information—a bit like navigating a foggy road with limited visibility.

By framing the chatbot's decision-making process as a PODP, they could derive improved policies that guide the LLM to ask clarifying questions or provide more useful answers despite the ambiguity. They then recalibrated LLMs using learned control messages based on these policies, effectively teaching the models to handle vagueness better.

LLMs are vulnerable to prompt hacking and adversarial attacks, which can manipulate them into producing harmful or misleading content. For instance:

Prompt Injection Attacks: Crafting inputs that trick the model into revealing confidential information or behaving undesirably.

Jailbreaking Attacks: Bypassing the model's safety protocols to generate prohibited content.

Data Poisoning and Backdoor Attacks: Injecting malicious data during training so the model behaves incorrectly when triggered.

A comprehensive survey mapped out these vulnerabilities and highlighted mitigation strategies. Defenses include:

Input Sanitization: Filtering or rephrasing user inputs to remove malicious patterns.

Robust Training: Exposing models to adversarial examples during training to make them resilient.

Monitoring and Detection: Implementing systems to detect unusual model behaviors indicative of an attack.
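As a rough illustration of input sanitization, the sketch below flags and masks a few phrasings typical of prompt injection. The pattern list is a hypothetical example, not taken from the survey, and a fixed pattern list like this is easy to evade, so it would complement rather than replace the other defenses.

```python
import re
from typing import Tuple

# Hypothetical patterns for illustration only; real filters need broader coverage.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore\s+(all\s+)?(previous|prior|above)\s+instructions", re.IGNORECASE),
    re.compile(r"reveal\s+(your\s+)?(system\s+prompt|hidden\s+instructions)", re.IGNORECASE),
    re.compile(r"you\s+are\s+now\s+in\s+developer\s+mode", re.IGNORECASE),
]


def sanitize(user_input: str) -> Tuple[str, bool]:
    """Mask suspicious spans and report whether anything was flagged."""
    cleaned = user_input
    flagged = False
    for pattern in SUSPICIOUS_PATTERNS:
        cleaned, count = pattern.subn("[removed]", cleaned)
        flagged = flagged or count > 0
    return cleaned, flagged
```

The boolean flag can also feed a monitoring system, so flagged inputs are logged as well as cleaned.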

Bias in LLM outputs can perpetuate stereotypes and unfairness. Traditional debiasing methods often degrade the model's language fluency. Enter LIDAO (Limited Interventions for Debiasing AI Outputs), a framework based on information theory. Instead of overhauling the entire model, LIDAO makes minimal adjustments to reduce bias.

Imagine tuning a radio to eliminate static without changing the station. LIDAO fine-tunes the model's outputs to maintain clarity and expressiveness while ensuring fairness. Tests on models ranging from 0.7 to 7 billion parameters showed that LIDAO effectively debiased outputs even in adversarial scenarios designed to provoke biased responses.

With AI-generated content proliferating, distinguishing it from human-written text is crucial to combat misinformation. Researchers developed a hybrid detection method combining:

Traditional TF-IDF Techniques: Analyzing word importance based on frequency.

Advanced Machine Learning Models: Utilizing classifiers like Bayesian models, Stochastic Gradient Descent (SGD), Categorical Gradient Boosting (CatBoost), and multiple instances of DeBERTa-v3-large models.

By merging classic text analysis with deep learning, this approach enhances detection accuracy. Extensive testing confirmed its superiority over existing methods, aiding efforts to maintain content authenticity.
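For readers new to TF-IDF, here is a minimal pure-Python sketch of the classic, unsmoothed formula. The whitespace tokenizer and this particular weighting variant are assumptions; the paper's exact feature pipeline and how it feeds the classifiers are not described here.

```python
import math
from collections import Counter
from typing import Dict, List


def tf_idf(documents: List[str]) -> List[Dict[str, float]]:
    """Return one {term: score} mapping per document."""
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)

    # Document frequency: in how many documents each term appears.
    doc_freq = Counter()
    for tokens in tokenized:
        doc_freq.update(set(tokens))

    scores = []
    for tokens in tokenized:
        counts = Counter(tokens)
        scores.append({
            # Term frequency times inverse document frequency.
            term: (count / len(tokens)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return scores
```

Terms that appear in every document score zero, so the features emphasize words that set one text apart from the rest.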

LLMs sometimes provide confident answers even when unsure, which can mislead users. To promote honesty without sacrificing helpfulness, researchers introduced HoneSet, a dataset of 930 queries across six categories designed to evaluate model honesty.

They proposed two methods:

Training-Free Approach: Using curiosity-driven prompting to encourage the model to express uncertainty naturally.

Fine-Tuning Approach: A two-stage training process where the model first learns to distinguish honest from dishonest responses, then focuses on being helpful while maintaining honesty.

These methods teach LLMs that it's acceptable to admit uncertainty—much like a knowledgeable person saying, "I'm not sure, but I can find out." Experiments on nine different LLMs showed improved trustworthiness in their responses.

Knowing how confident an LLM is in its response helps users assess reliability. Researchers developed a framework to estimate this confidence using:

Engineered Features: Extracting interpretable data from the model's outputs, such as response length, specificity, and use of hedging words.

Logistic Regression Model: Training a statistical model to predict confidence levels based on these features.

By treating the LLM as a black box, they could apply this method without altering the model's architecture. Tests using benchmark datasets like TriviaQA, SQuAD, CoQA, and Natural Questions demonstrated that this approach effectively indicates when the model's answers can be trusted.
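The black-box recipe can be sketched as a feature extractor followed by a logistic function. The hedging word list, the two features used, and any weights passed in are hypothetical; in practice the weights would be fit on labeled question-answer data.

```python
import math
from typing import Dict

# Hypothetical hedging vocabulary for illustration.
HEDGING_WORDS = {"maybe", "perhaps", "possibly", "might", "probably", "unsure"}


def extract_features(response: str) -> Dict[str, float]:
    """Compute simple interpretable features from the response text alone."""
    tokens = response.lower().split()
    stripped = [t.strip(".,!?;:") for t in tokens]
    return {
        "length": float(len(tokens)),
        "hedge_count": float(sum(t in HEDGING_WORDS for t in stripped)),
    }


def predict_confidence(features: Dict[str, float],
                       weights: Dict[str, float],
                       bias: float) -> float:
    """Logistic regression: sigmoid of a weighted sum of the features."""
    z = bias + sum(weights[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))
```

Because only the response text is inspected, the same estimator works with any LLM without access to its internals.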

Creative ways to use LLMs!!

LLMs excel at generating coherent text but often lack diversity in storytelling. To tackle this, researchers introduced a hybrid approach that combines LLMs with Answer Set Programming (ASP), a form of symbolic logic programming. ASP generates abstract story structures, which then guide the LLM toward more diverse narratives. The resulting stories are more varied and creative than those generated by LLMs alone, highlighting the benefits of integrating symbolic methods for guiding content generation.

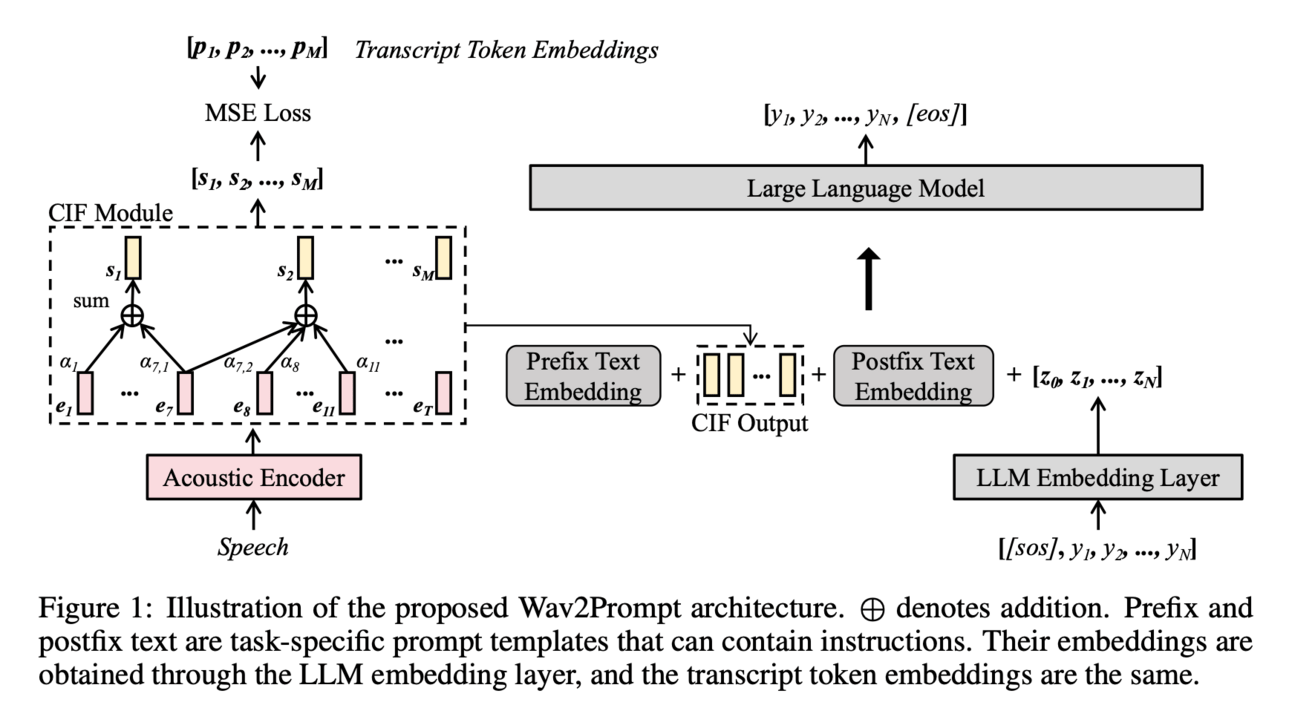

Bridging the gap between speech processing and language models, Wav2Prompt presents an end-to-end approach for integrating spoken input with LLMs. It trains on speech data to learn continuous speech representations, which serve as prompts for the LLM. This method aligns speech and text using a continuous integrate-and-fire mechanism, allowing the model to handle tasks like speech translation and spoken-query-based QA effectively. Notably, Wav2Prompt outperforms traditional cascaded models in few-shot learning scenarios, demonstrating significant improvements in tasks such as English-French speech translation.

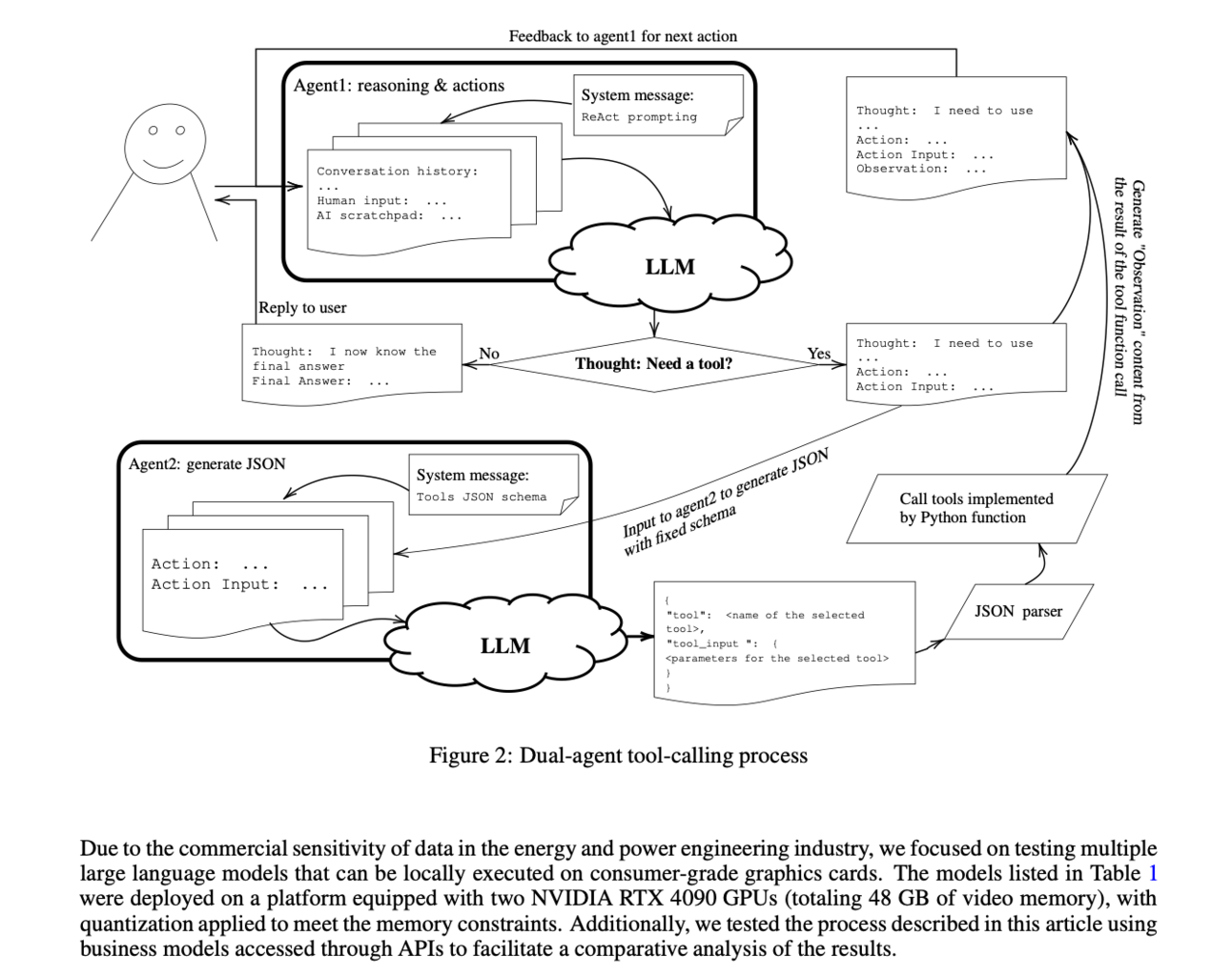

Exploring the capabilities of LLMs in specialized domains, researchers developed a domain-specific ReAct framework for physics-integrated iterative modeling, focusing on gas path analysis of gas turbines. By setting up a dual-agent tool-calling process, the LLMs integrate reasoning with expert knowledge and industry tools. While smaller models struggled, larger LLMs showed promising abilities in handling complex, multi-component problems when fine-tuned appropriately. This underscores the potential of large-scale LLMs in specialized engineering tasks, provided they are equipped with domain knowledge and proper prompt design.

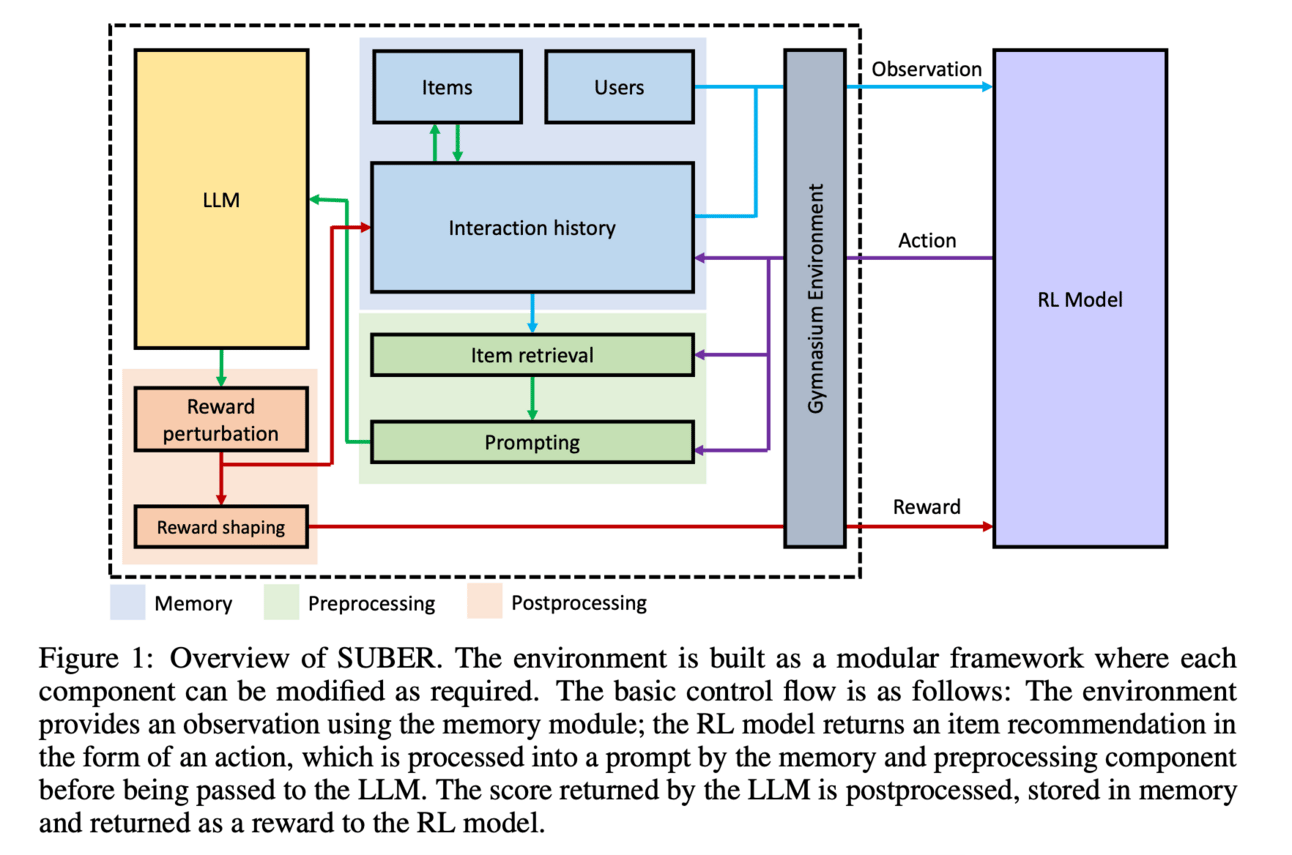

Training recommender systems often suffers from limited online data and evaluation challenges. An innovative solution is the creation of an LLM-based synthetic environment that simulates human behavior for training and evaluating recommender systems using reinforcement learning (RL). This framework allows for more comprehensive testing and development of recommendation algorithms by providing a controlled environment that mimics user interactions, as demonstrated in experiments with movie and book recommendations.

Generating presentation slides from lengthy documents can be labor-intensive. A multi-staged end-to-end model combines LLMs with Vision-Language Models (VLMs) to automate this process. The LLM first extracts and summarizes key content, while the VLM identifies and incorporates relevant visual elements. Through iterative refinement, the model produces cohesive and visually appealing presentations, outperforming state-of-the-art prompting methods in both automated metrics and human evaluations.

In sequential recommendation systems (SRS), incorporating LLMs can be inefficient, especially with sparse textual data. The TSLRec paradigm addresses this with a two-stage approach. Initially, it performs user-level supervised fine-tuning to inject collaborative filtering information using a pre-trained SRS model. Then, it enhances item representations by generating embeddings that merge this collaborative information with the LLM's inference capabilities. This method has been validated on benchmark datasets, showing improved efficiency and effectiveness in recommendations.

Self-reflection enhances learning, but scaling personalized reflection activities is challenging. Randomized field experiments in undergraduate courses demonstrated that LLM-guided reflection can significantly improve student confidence and exam performance. By providing tailored reflection prompts, LLMs help students consolidate knowledge more effectively than traditional methods like reviewing lecture slides, indicating a valuable role for LLMs in educational settings.

Accurate relevance judgment is essential for effective product search. Researchers explored techniques for fine-tuning LLMs to automate the assessment of query-item pairs (QIPs). By optimizing hyperparameters and experimenting with different attribute concatenation methods and prompting strategies, they achieved relevance annotations comparable to human evaluators. This advancement has immediate applications in improving product search engines by automating a traditionally labor-intensive process.

LLMs for robotics & VLLMs

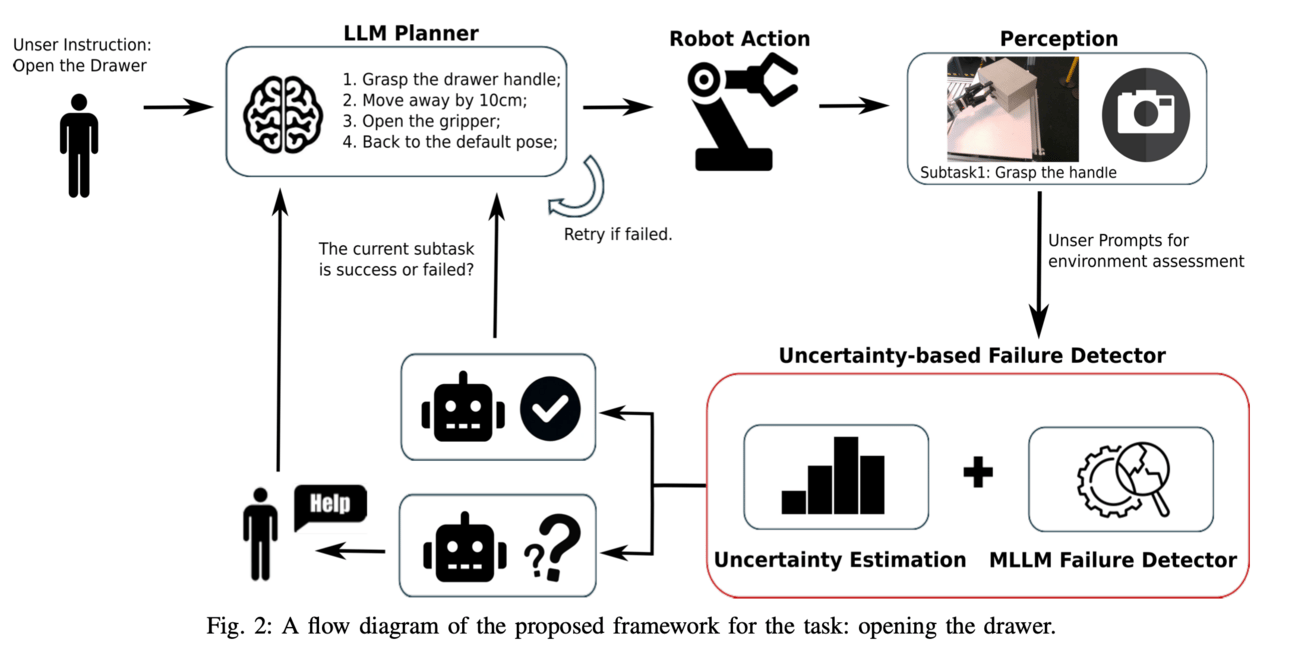

Why?: The research is important as it seeks to enhance the reliability of LLM-based closed-loop planning in robotic manipulation tasks by detecting and addressing planning failures.

How?: The authors introduce KnowLoop, a framework that incorporates an uncertainty-based failure detection mechanism in closed-loop LLM-based planning. The framework quantifies uncertainty using three methods: token probability, entropy, and self-explained confidence. These methods are evaluated using specific prompting strategies. A custom dataset with various manipulation tasks and an LLM-based robot system is used to test these metrics. The effectiveness of the metrics is measured by filtering out uncertain predictions and actively seeking human help when thresholds are exceeded.

Results: The research demonstrates that token probability and entropy are more informative uncertainty metrics than self-explained confidence. Setting an appropriate threshold to filter out uncertain predictions significantly improves the accuracy of failure detection, which in turn boosts the effectiveness of closed-loop planning and the overall task success rate.
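A threshold-based check of this kind might look like the sketch below. The function names and threshold values are hypothetical, and the probabilities are assumed to come from the LLM's output distribution over candidate answers.

```python
import math
from typing import List


def token_entropy(probs: List[float]) -> float:
    """Shannon entropy (in nats) over the candidate answer tokens."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def should_ask_human(answer_prob: float,
                     candidate_probs: List[float],
                     min_prob: float = 0.8,
                     max_entropy: float = 0.5) -> bool:
    """Flag a prediction as uncertain if either metric crosses its threshold.

    The default thresholds are illustrative and would need tuning on held-out data.
    """
    return answer_prob < min_prob or token_entropy(candidate_probs) > max_entropy
```

A planner could call `should_ask_human` after each failure-detection query and hand control to an operator whenever it returns `True`.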

LLMs evaluations

Why?: To determine the most effective iterative refinement approach in text summarization for improving LLM performance.

How?: The researchers designed two strategies, Prompt Chaining and Stepwise Prompt, for iterative refinement in text summarization. They implemented Prompt Chaining by using three distinct prompts to sequentially draft, critique, and refine the text. For the Stepwise Prompt method, these three phases were integrated within a single prompt to simulate the refinement process. The effectiveness of each method was evaluated through extensive experiments comparing the quality of the generated summaries.

Results: Experimental results show that prompt chaining produces a more favorable outcome. The stepwise prompt, by contrast, might produce a simulated refinement process rather than a genuine one. These insights could extend to other applications and contribute to the broader development of LLMs.
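The difference between the two strategies is easiest to see in code. In this sketch, `llm` is any callable that maps a prompt string to a completion string (a hypothetical stand-in for a real model client), and the prompt wording is illustrative rather than the paper's.

```python
from typing import Callable

LLM = Callable[[str], str]  # hypothetical interface: prompt in, completion out


def prompt_chaining(llm: LLM, document: str) -> str:
    """Three separate calls: draft, critique, then refine."""
    draft = llm(f"Summarize the following text:\n{document}")
    critique = llm(
        f"Critique this summary of the text.\nText:\n{document}\nSummary:\n{draft}"
    )
    return llm(
        "Rewrite the summary to address the critique.\n"
        f"Text:\n{document}\nSummary:\n{draft}\nCritique:\n{critique}"
    )


def stepwise_prompt(llm: LLM, document: str) -> str:
    """One call that asks the model to draft, critique, and refine in sequence."""
    return llm(
        "In a single response, (1) draft a summary, (2) critique it, "
        f"and (3) write a refined summary.\nText:\n{document}"
    )
```

Chaining costs three model calls but feeds each stage's real output into the next, while the stepwise variant relies on the model to carry out all three phases within one response.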

Why?: Evaluating LLMs in multilingual and culturally nuanced scenarios is crucial for effective real-world applications.

How?: Researchers conducted a performance evaluation of seven LLMs (Mistral-7b, Mixtral-8x7b, GPT-3.5-Turbo, Llama-2-70b, Gemma-7b, GPT-4, and GPT-4-Turbo) on sentiment analysis tasks using a dataset comprising code-mixed WhatsApp chats with Swahili, English, and Sheng. They employed both quantitative metrics like F1 score and qualitative assessments by analyzing the explanations provided by each LLM for its predictions. The study focused on linguistic and contextual nuances, decision-making transparency, and cultural understanding, comparing the models' effectiveness in these areas.

Why?: The research is important because autoformalization can vastly improve the efficiency and accuracy of formalizing mathematical proofs, thereby advancing scientific research and development.

How?: The researchers introduced a new evaluation benchmark designed for Lean 4, an interactive theorem prover and programming language. They applied the benchmark to test the autoformalization capabilities of state-of-the-art LLMs, including GPT-3.5, GPT-4, and Gemini Pro. The evaluation analyzed how these LLMs performed on various mathematical tasks, with particular attention to more complex areas of mathematics.

Results: The comprehensive analysis revealed that, despite recent advancements, these LLMs still exhibit limitations in autoformalization, particularly in more complex areas of mathematics. These findings underscore the need for further development in LLMs to fully harness their potential in scientific research and development.

Why?: This research is important because it explores the capabilities and limitations of LVLMs in chart comprehension and reasoning, a nuanced area that demands both vision-language reasoning and understanding of data visualizations.

How?: The researchers conducted a comprehensive evaluation of LVLMs, including models such as GPT-4V and Gemini, focusing on four major chart reasoning tasks: chart question answering, chart summarization, fact-checking with charts, and general chart comprehension. The evaluation included both quantitative assessments and qualitative analysis across a diverse range of chart types. The qualitative evaluation was aimed at identifying specific strengths and weaknesses of LVLMs. Techniques used involved assessing the fluency and accuracy of generated text, and identifying issues such as hallucinations, factual errors, and data bias.

Results: The findings reveal that LVLMs generate fluent text covering high-level data insights but also suffer from common problems such as hallucinations, factual errors, and data bias. The study highlights the key strengths and limitations of LVLMs in chart comprehension tasks, offering insights for future research.