🔑 Key takeaways from today’s newsletter

🧠 LLM Surgery: Learn how researchers can update or remove specific knowledge in LLMs without retraining the entire model, making AI more efficient and up-to-date.

🔀 Adaptive Transformers: Meet new Transformer models that adjust their depth dynamically, processing simpler inputs faster by skipping unnecessary layers.

⚡ FP8 Training Acceleration: Discover how using FP8 precision speeds up training and reduces memory usage in LLMs, leading to faster, more memory-efficient training without sacrificing performance.

🎨 Creative LLM Applications: See how LLMs are being used in innovative ways—from assisting in Alzheimer's detection to generating recipes and food images.

Don’t have much time?

No worries! Hop in your car or onto the subway and listen to this amazing podcast covering these papers on your commute!

Core research improving LLMs!

Updating large language models (LLMs) with new information and removing outdated or problematic knowledge without retraining from scratch is a significant challenge. Traditional fine-tuning methods are computationally expensive and risk overwriting valuable existing knowledge.

How?

The paper introduces LLM Surgery, a framework that efficiently modifies an LLM's knowledge base using a three-component objective function:

Unlearning Objective (Reverse Gradient): Removes outdated knowledge by performing gradient ascent (reverse gradient) on an unlearning dataset D_unlearn. This increases the model's loss on this data, causing it to forget specific information.

Learning Objective (Gradient Descent): Incorporates new knowledge by performing gradient descent on an update dataset D_update.

Retention Objective (KL Divergence Minimization): Ensures that the model's outputs remain similar to those of the original model on a retain dataset D_retain by minimizing the Kullback-Leibler (KL) divergence between the original and updated models' output distributions.
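The following minimal pure-Python sketch shows how the three terms combine into a single objective on toy logits. It assumes equal weights, averages each term over its dataset, and uses KL(original || updated) for the retention term. The weighting, the KL direction, the data layout, and the name `surgery_loss` are illustrative assumptions, not the paper's code. A real implementation would compute these losses on model outputs inside an autograd framework.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax of a list of logits."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_z for z in logits]


def cross_entropy(logits, target):
    """Negative log-likelihood of `target` under the distribution given by `logits`."""
    return -log_softmax(logits)[target]


def kl_divergence(p_logits, q_logits):
    """KL(P || Q) for the distributions defined by two logit vectors."""
    log_p, log_q = log_softmax(p_logits), log_softmax(q_logits)
    return sum(math.exp(lp) * (lp - lq) for lp, lq in zip(log_p, log_q))


def surgery_loss(unlearn, update, retain, w_unlearn=1.0, w_update=1.0, w_retain=1.0):
    """Three-part objective to minimize (hypothetical weights and data layout).

    unlearn, update: lists of (updated_model_logits, target_token)
    retain: list of (original_model_logits, updated_model_logits)
    """
    l_unlearn = sum(cross_entropy(z, t) for z, t in unlearn) / len(unlearn)
    l_update = sum(cross_entropy(z, t) for z, t in update) / len(update)
    l_retain = sum(kl_divergence(z_orig, z_new) for z_orig, z_new in retain) / len(retain)
    # The minus sign turns gradient descent on the total into gradient ascent
    # on D_unlearn, raising the model's loss on the data to be forgotten.
    return -w_unlearn * l_unlearn + w_update * l_update + w_retain * l_retain


# One toy example per dataset over a 2-token vocabulary.
still_remembers = surgery_loss(unlearn=[([2.0, 0.0], 0)],
                               update=[([0.0, 0.0], 1)],
                               retain=[([1.0, 0.0], [1.0, 0.0])])
has_forgotten = surgery_loss(unlearn=[([0.0, 2.0], 0)],
                             update=[([0.0, 0.0], 1)],
                             retain=[([1.0, 0.0], [1.0, 0.0])])
print(still_remembers > has_forgotten)  # → True
```

A model that no longer predicts the "unlearn" token gets a lower total loss. This is the pressure that drives forgetting. The KL term stays at zero as long as the updated model matches the original on D_retain.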

Results

Applying LLM Surgery to the LLaMA2-7B model, the researchers achieved:

Significant forgetting on the unlearn dataset.

A 20% improvement on the update dataset.

Minimal performance degradation on the retain dataset.

Training LLMs using lower-precision formats like FP8 can significantly reduce computational costs and memory usage. However, scaling FP8 training to large datasets (trillions of tokens) reveals instabilities that need to be addressed for practical deployment.

How?

The researchers identified that the SwiGLU activation function amplifies outlier gradients over long training durations, leading to numerical instabilities in FP8 training. To mitigate this, they introduced:

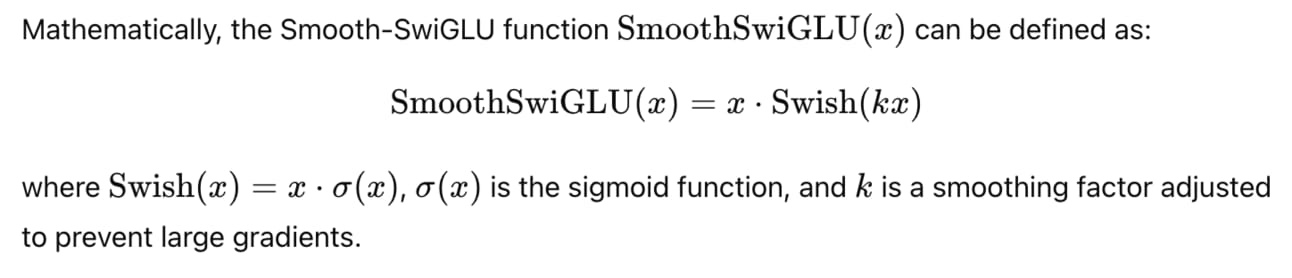

Smooth-SwiGLU Activation Function: A modification that stabilizes FP8 training by smoothing the activation function, preventing gradient explosions without altering its functional behavior.

FP8 Quantization of Adam Optimizer Moments: Quantizes the first and second moments of the Adam optimizer to FP8 while maintaining training stability and efficiency.
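This minimal pure-Python sketch shows why a large SwiGLU outlier hurts FP8 training. It simulates FP8 E4M3 rounding (3 mantissa bits, largest finite value 448) in ordinary floats. It then builds SwiGLU outputs Swish(gate) * up, with one channel carrying a large outlier. Finally, it compares a single scale for the whole tensor against one scale per channel. The per-channel variant reflects our understanding that Smooth-SwiGLU scales the linear branch per channel before quantization and undoes that scaling afterwards. Scaling the output h per channel is equivalent here because h is linear in the "up" branch. The function names, the toy data, and the simplified rounding (no NaN handling) are assumptions for illustration, not the paper's implementation.

```python
import math

E4M3_MAX = 448.0  # largest finite FP8 E4M3 value


def quantize_e4m3(x):
    """Round x to the nearest FP8 E4M3 value, simulated in float64 (no NaN handling)."""
    if x == 0.0:
        return 0.0
    mag = min(abs(x), E4M3_MAX)
    exp = max(math.floor(math.log2(mag)), -6)  # -6 is the smallest normal exponent
    step = 2.0 ** (exp - 3)  # 3 mantissa bits => 8 steps per power of two
    return math.copysign(min(round(mag / step) * step, E4M3_MAX), x)


def swiglu(gate, up):
    """SwiGLU output Swish(gate) * up for a tokens x channels matrix."""
    return [[g / (1.0 + math.exp(-g)) * u for g, u in zip(g_row, u_row)]
            for g_row, u_row in zip(gate, up)]


def fp8_roundtrip(h, per_channel):
    """Scale h into the FP8 range, quantize, then undo the scaling."""
    n_ch = len(h[0])
    if per_channel:
        maxes = [max(abs(row[c]) for row in h) for c in range(n_ch)]
    else:
        maxes = [max(abs(v) for row in h for v in row)] * n_ch
    scales = [E4M3_MAX / m if m > 0 else 1.0 for m in maxes]
    return [[quantize_e4m3(v * scales[c]) / scales[c] for c, v in enumerate(row)]
            for row in h]


def max_rel_error(ref, approx):
    """Largest relative error over all nonzero reference entries."""
    return max(abs(a - r) / abs(r)
               for r_row, a_row in zip(ref, approx)
               for r, a in zip(r_row, a_row) if r != 0)


# Toy data: channel 2's "up" branch has grown into a large outlier.
gate = [[1.0, 0.5, 2.0], [0.8, -0.3, 1.5]]
up = [[0.02, 0.03, 4000.0], [0.01, 0.05, 3000.0]]
h = swiglu(gate, up)

per_tensor_err = max_rel_error(h, fp8_roundtrip(h, per_channel=False))
per_channel_err = max_rel_error(h, fp8_roundtrip(h, per_channel=True))
print(f"per-tensor: {per_tensor_err:.3f}, per-channel: {per_channel_err:.3f}")
```

With one scale for the whole tensor, the outlier forces such a small scale that the other channels are flushed to zero, giving a relative error of 1.0. Per-channel scaling keeps every channel within E4M3's normal rounding error.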

Results

Training a 7B-parameter model on 2 trillion tokens using 256 Intel Gaudi2 accelerators, the researchers:

Achieved comparable performance to BF16 baselines.

Realized up to a 34% speedup and 43% memory reduction during training.

Demonstrated that FP8 training is feasible and efficient for large-scale LLMs when addressing activation-induced instabilities.

Adaptive Large Language Models by Layerwise Attention Shortcuts

Traditional Transformer architectures pass every input sequentially through all layers. This can be inefficient because the amount of computation does not adapt to input complexity. Introducing adaptability can improve both efficiency and performance.

How?

The paper proposes modifying the Transformer architecture by adding attention shortcuts from the final layer to all intermediate layers:

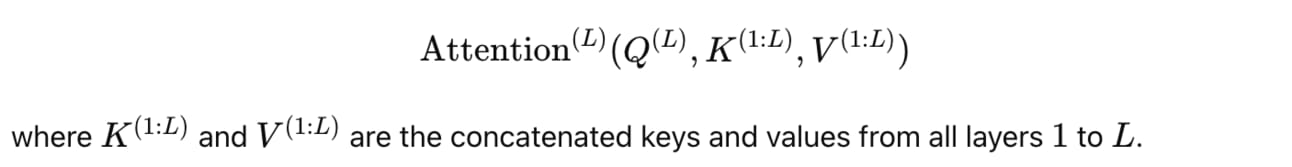

Layerwise Attention Shortcuts: The final layer attends to the outputs of all previous layers, allowing it to directly access lower-level features.

Dynamic Depth Adaptation: The model can adjust its computational depth based on input complexity, effectively skipping unnecessary layers for simpler inputs.

The attention mechanism at the final layer L is therefore modified to attend over the outputs of all earlier layers, not just the output of layer L-1.
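This minimal pure-Python sketch simplifies a layerwise shortcut to a single token. The final layer's query scores the hidden state produced by each layer, and the output is the softmax-weighted mix of those states. The learned query/key/value projections a real model would use are omitted. The names `layerwise_shortcut` and the toy vectors are hypothetical and do not reproduce the paper's exact formulation.

```python
import math


def softmax(scores):
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def layerwise_shortcut(query, layer_states):
    """Let the final layer's query attend over every layer's hidden state.

    layer_states[l] is the hidden vector (for one token) produced by layer l + 1.
    Keys and values are the raw states; learned projections are omitted.
    """
    d = len(query)
    scores = [sum(q * h for q, h in zip(query, state)) / math.sqrt(d)
              for state in layer_states]
    weights = softmax(scores)
    output = [sum(w * state[i] for w, state in zip(weights, layer_states))
              for i in range(d)]
    return weights, output


# Three layers' states for one token; the query best matches layer 2's features.
states = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
weights, output = layerwise_shortcut([0.0, 4.0], states)
print([round(w, 3) for w in weights], [round(x, 3) for x in output])
```

Most of the weight lands on layer 2, so later layers contribute little for this input. This is one way to picture the "dynamic depth" behavior described above.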

Handling long contexts in LLMs is computationally intensive due to the quadratic complexity of self-attention mechanisms. Reducing inference time and memory consumption is critical for deploying LLMs in practical applications.

How?

The paper introduces RetrievalAttention, a method that accelerates inference by:

Key-Value (KV) Caching with Vector Retrieval: Instead of processing the entire context, the model retrieves only the most relevant key-value pairs from the KV cache using Approximate Nearest Neighbor Search (ANNS).

Attention-Aware Vector Search: Adapts the retrieval process to the distribution of query vectors, ensuring that relevant context is efficiently retrieved.

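For a query vector q, attention is computed over only a subset S of the keys {k_i} and values {v_i}, where d is the head dimension:

$$\mathrm{Attn}(q) = \sum_{i \in S} \frac{\exp\left(q^\top k_i / \sqrt{d}\right)}{\sum_{j \in S} \exp\left(q^\top k_j / \sqrt{d}\right)} \, v_i$$

By choosing S as the top-k keys k_i most similar to q, the computation is significantly reduced.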
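This minimal pure-Python sketch of top-k attention scores every key, keeps the k best, and runs softmax attention over only those. Exact sorting stands in for the approximate nearest neighbor index that RetrievalAttention builds. The function names and toy vectors are hypothetical, so this illustrates the idea rather than the paper's system.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def attend(q, keys, values, indices):
    """Scaled dot-product attention of q over the keys/values at `indices`."""
    indices = list(indices)
    d = len(q)
    scores = [dot(q, keys[i]) / math.sqrt(d) for i in indices]
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    return [sum(w * values[i][j] for w, i in zip(weights, indices)) / total
            for j in range(len(values[0]))]


def full_attention(q, keys, values):
    return attend(q, keys, values, range(len(keys)))


def topk_attention(q, keys, values, k):
    """Attend only over the k keys with the largest dot product with q.

    Exact sorting is a stand-in for an approximate nearest neighbor search.
    """
    ranked = sorted(range(len(keys)), key=lambda i: dot(q, keys[i]), reverse=True)
    return attend(q, keys, values, ranked[:k])


q = [10.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
values = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0], [1.0, 1.0]]
full = full_attention(q, keys, values)
approx = topk_attention(q, keys, values, k=1)
print(full, approx)
```

When the attention scores are peaked, attending to a single retrieved key already matches full attention closely. Sorting still scans every key. An ANNS index avoids that full scan, which is where the real savings come from.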

Results

The method accesses only 1-3% of the KV cache during inference and achieves up to a 4x speedup in inference time with minimal impact on model performance!

Understanding the limits of LLMs' working memory capacity can help improve their ability to handle tasks that require long-term dependencies.

How?

The paper investigates the working memory capacity of Transformers by training models on N-back tasks, which test their ability to remember information N steps back. It observes that as N increases, the entropy of the attention distribution increases, leading to dispersed attention and reduced capacity.

The paper argues that self-attention inherently limits working memory capacity because of this dispersion, showing that rising attention entropy contributes to the capacity limit.
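This toy pure-Python sketch illustrates the dispersion effect. It assumes the target position (N steps back) keeps a fixed logit advantage over N - 1 distractor positions. That fixed margin is a hypothetical simplification, not the paper's trained models. Under this assumption, attention entropy rises and the weight on the target falls as N grows.

```python
import math


def softmax(logits):
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs):
    """Shannon entropy (in nats) of a probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def nback_attention(n, margin=3.0):
    """Toy attention over a window of n positions (hypothetical model).

    Position 0 is the target token; it gets a fixed logit advantage
    `margin` over the n - 1 distractor positions.
    """
    return softmax([margin] + [0.0] * (n - 1))


for n in (1, 2, 4, 8, 16):
    probs = nback_attention(n)
    print(f"N={n:2d}  target weight={probs[0]:.3f}  entropy={entropy(probs):.3f} nats")
```

With a fixed margin, every extra distractor takes some probability mass away from the target. Attention therefore spreads out, and its entropy approaches the uniform-distribution value log N.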

Let’s make LLMs safe!!

Defending against Reverse Preference Attacks is Difficult - shows how adversarial reinforcement learning can induce harmful behaviours in safety-aligned LLMs through Reverse Preference Attacks (RPAs). Explores defence strategies using Constrained Markov Decision Processes, finding that 'online' defences with controlled loss functions are effective, while 'offline' defences are less so.

MEOW: MEMOry Supervised LLM Unlearning Via Inverted Facts proposes MEOW, a gradient descent-based method for unlearning sensitive information in LLMs. Generates inverted facts and utilizes the MEMO metric to quantify memorization, effectively improving forgetfulness without sacrificing model utility.

LLM-Powered Text Simulation Attack Against ID-Free Recommender Systems introduces a text poisoning attack that uses LLMs to manipulate the textual information of target items in ID-free recommender systems. It leverages the characteristics of popular items to create promotional descriptions that mimic them.

Jailbreaking Large Language Models with Symbolic Mathematics shows how encoding harmful natural language prompts as mathematical problems can bypass LLM safety measures. The MathPrompt technique achieves an average attack success rate of 73.6% across 13 state-of-the-art LLMs!

Creative ways to use LLMs!!

Introduces a framework that utilizes LLMs for profiling linguistic deficits in patient transcripts. Shows an 8.51% improvement over state-of-the-art methods in Alzheimer's disease detection.

Paper develops ChefFusion, a foundation model integrating LLMs with pre-trained image encoders and decoders. Performs tasks like recipe generation and food image generation, advancing multimodal food computing.

LLMs for robotics & VLLMs

Leveraging LLMs for Automated Framing Analysis in TV Shows uses prompt engineering to guide LLMs in identifying framing in spoken content from TV shows. Achieves agreement rates up to 43% with human annotations, offering a support tool for framing analysis.

Paper introduces Arena 4.0, utilizing LLMs and diffusion models to generate human-centric environments from text prompts or floorplans. Offers a 3D model database and migrates to ROS 2 for hardware compatibility, validated through a user study.

Paper introduces a hierarchical LLM-based framework for optimizing multi-robot task allocation in real-time. Validated through simulations and real-world experiments, enhancing adaptability in hazardous environments.

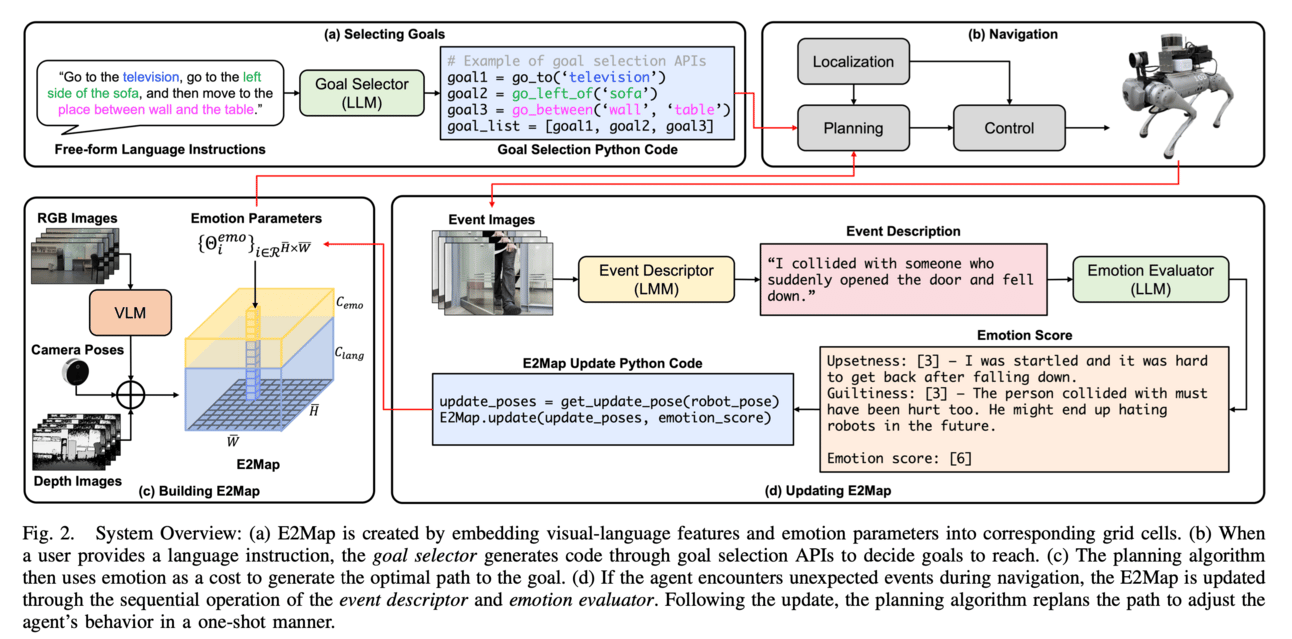

Paper creates an 'Experience-and-Emotion Map' integrating LLM knowledge with real-world experiences. Allows robots to adjust behavior dynamically in stochastic environments.

LLMs evaluations

What Would You Ask When You First Saw a²+b²=c²? Evaluating LLM on Curiosity-Driven Questioning introduces a framework to assess LLMs' ability to generate curious questions when presented with new scientific statements. It finds that models like GPT-4 and Mistral 8x7b generate coherent questions and, surprisingly, that smaller models can match or exceed their effectiveness.

SpecEval: Evaluating Code Comprehension in Large Language Models via Program Specifications introduces SpecEval, a framework that uses program specifications to assess LLMs' code comprehension. It designs four tasks utilizing formal specifications, counterfactual analysis, and consistency checks.

Investigating Context-Faithfulness in Large Language Models studies factors influencing context-faithfulness in LLMs by quantifying memory strength and evaluating different evidence styles. Uses datasets with well-known and long-tail questions.

Confidence Estimation for LLM-Based Dialogue State Tracking explores methods to estimate confidence scores in dialogue state tracking. It finds that fine-tuning open-weight LLMs enhances calibration accuracy and improves performance.

Survey papers

From Linguistic Giants to Sensory Maestros: A Survey on Cross-Modal Reasoning with Large Language Models - survey of methodologies for cross-modal reasoning using LLMs.

Art and Science of Quantizing Large-Scale Models: A Comprehensive Overview - A review of quantization methods, including post-training quantization (PTQ) and quantization-aware training (QAT).