🔑 Takeaways from today’s newsletter

Tokens are so yesterday! The Byte Latent Transformer ditches tokens for dynamic byte patches, making models faster and more efficient.

Less is more! TrimLLM trims unnecessary layers, boosting speed without sacrificing smarts. It's like a transformer on a diet!

Now you cache it, now you don't! Slashing KV cache memory usage to just 20%, it's the Houdini of memory optimization.

From drone dances to AR cooking! See how LLMs are shaking things up in creative ways you never imagined.

TL;DR - No time to read the entire newsletter? Don’t worry, we’ve got you covered! Listen 🎧 to this fun and informative podcast 🎙️, generated with Google’s NotebookLM, which discusses the key research papers from this newsletter in detail with real-world references!

Architecture & Token Processing

The research papers in this section explore fundamental improvements to model architecture and token processing, achieving better scalability and efficiency.

The Byte Latent Transformer (BLT) operates directly on bytes rather than on tokens from a fixed vocabulary. BLT groups bytes into dynamically sized patches whose boundaries are set by the entropy (unpredictability) of the next byte. High-entropy regions, which carry more information, are split into more, shorter patches and therefore receive more computation.

Dynamic Patching: BLT groups sequences of bytes into variable-length patches based on predictability, efficiently capturing patterns without relying on a predefined vocabulary.

Entropy-Based Processing: By allocating computational resources according to the complexity of the data (measured by entropy), BLT ensures that challenging inputs receive more computation.
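To make the patching rule concrete, here is a minimal Python sketch. It assumes a toy byte-bigram model in place of BLT's small byte-level language model, and the helper names (`train_bigram`, `entropy_patches`) and the threshold value are hypothetical. A new patch starts whenever the predicted next-byte entropy exceeds the threshold, so unpredictable stretches end up split into more, shorter patches.

```python
import math
from collections import Counter, defaultdict


def train_bigram(data: bytes) -> dict:
    """Toy next-byte model (hypothetical stand-in for BLT's small byte LM):
    counts of which byte follows each byte."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(data, data[1:]):
        counts[prev][nxt] += 1
    return counts


def next_byte_entropy(counts: dict, prev: int) -> float:
    """Shannon entropy (in bits) of the predicted distribution of the next byte."""
    dist = counts.get(prev)
    if not dist:
        return 8.0  # unseen context: treat as uniform over 256 byte values
    total = sum(dist.values())
    return -sum((c / total) * math.log2(c / total) for c in dist.values())


def entropy_patches(data: bytes, counts: dict, threshold: float) -> list:
    """Start a new patch wherever the next byte is hard to predict."""
    patches, start = [], 0
    for i in range(1, len(data)):
        if next_byte_entropy(counts, data[i - 1]) > threshold:
            patches.append(data[start:i])
            start = i
    patches.append(data[start:])
    return patches


text = b"the cat sat on the mat. the dog sat on the log."
model = train_bigram(text)
patches = entropy_patches(text, model, threshold=1.0)
print(patches)
```

Raising the threshold yields fewer, longer patches (and fewer steps for the large latent model); lowering it does the opposite.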

The researchers conducted a FLOP-controlled scaling study, training BLT models with up to 8 billion parameters on 4 trillion bytes of data. This study assessed the efficiency and performance of byte-level models compared to traditional token-based LLMs.

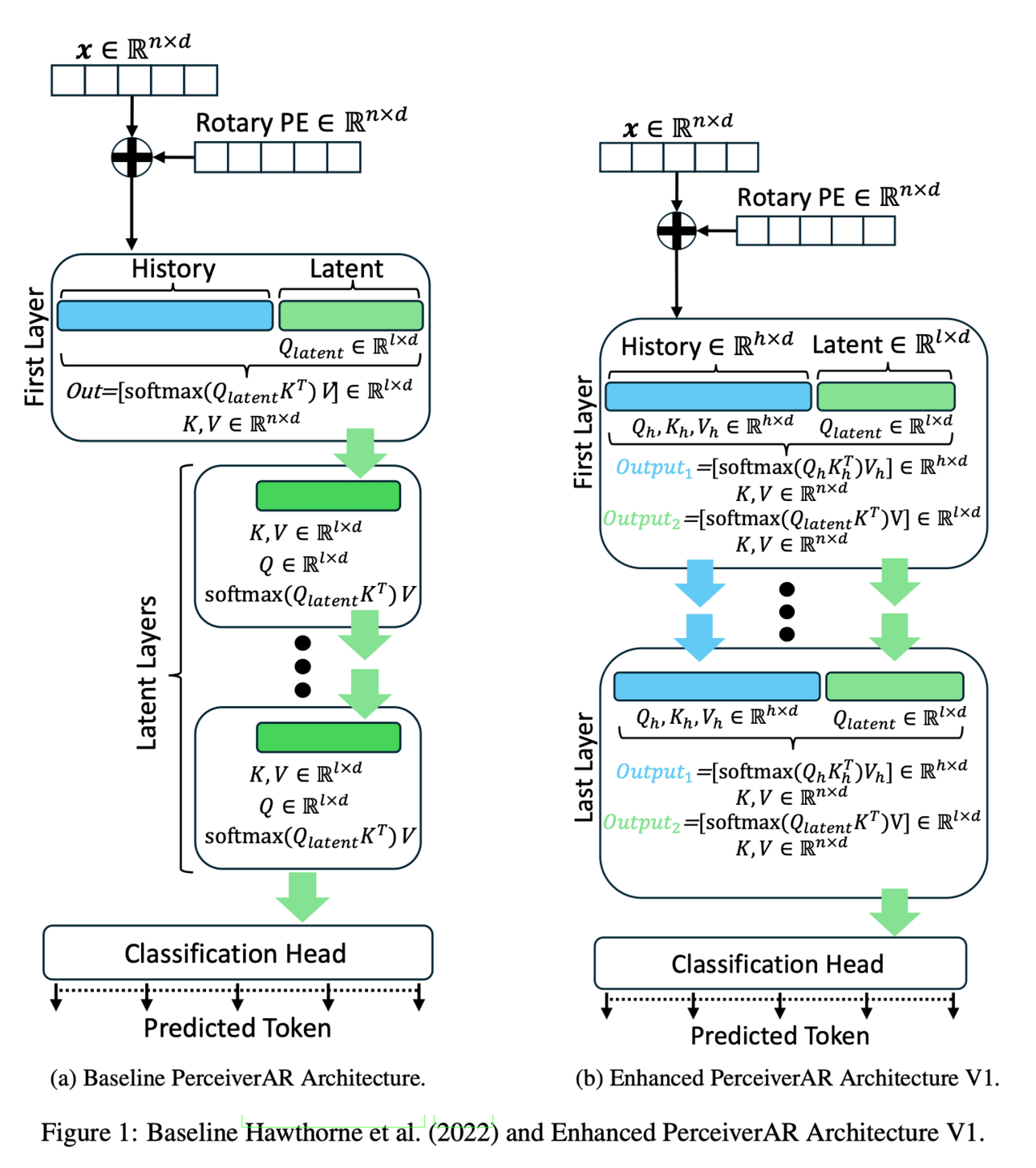

This research builds upon the Perceiver AR architecture, designed to handle longer sequences efficiently. By integrating ideas from Long Low-Rank Adaptation (Long-LoRA), the researchers develop an enhanced architecture called the Long LoRA Perceiver. This model introduces architectural enhancements that balance computational efficiency and performance. It applies low-rank approximations to the attention matrices, reducing computational complexity from quadratic to linear with respect to sequence length. Additionally, it utilizes hierarchical attention mechanisms to capture both local and global dependencies efficiently, enabling the model to focus on relevant information across different scales within the sequence. By carefully optimizing model depth, width, and other hyperparameters, the Long LoRA Perceiver maximizes performance while keeping computational demands manageable.
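The quadratic-to-linear claim can be illustrated with a Linformer-style low-rank projection, used here purely as a stand-in; the Long LoRA Perceiver's own construction may differ. Keys and values are compressed from `n` rows to `r` rows by a projection matrix (random here, learned in practice), so the score matrix is `n × r` instead of `n × n`.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def full_attention(q, k, v):
    # score matrix has shape (n, n): quadratic in sequence length n
    scores = q @ k.T / np.sqrt(q.shape[-1])
    return softmax(scores) @ v


def low_rank_attention(q, k, v, proj):
    # proj has shape (r, n) and compresses the n keys/values to r rows,
    # so the score matrix is (n, r): linear in n for a fixed r
    k_r, v_r = proj @ k, proj @ v
    scores = q @ k_r.T / np.sqrt(q.shape[-1])
    return softmax(scores) @ v_r


rng = np.random.default_rng(0)
n, d, r = 512, 64, 32
q, k, v = (rng.standard_normal((n, d)) for _ in range(3))
proj = rng.standard_normal((r, n)) / np.sqrt(r)   # hypothetical fixed random projection
out = low_rank_attention(q, k, v, proj)
print(out.shape)
```

With `r` fixed, the cost of the score matrix grows as O(n·r) rather than O(n²); with `proj` set to the identity the function reduces to ordinary attention.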

The research introduces an efficient method to "upcycle" a pre-trained dense model into an MoE architecture. Specifically, an 8-Expert Top-2 MoE model is trained using pre-trained dense checkpoints from Llama 3-8B. This approach leverages existing knowledge in the dense model and integrates MoE layers with minimal additional training. By adding MoE layers where each expert specializes in different data subsets, the model increases its capacity. During inference, only the top two most relevant experts are activated per input token, keeping computational costs similar to the original dense model. The upcycling process requires less than one epoch of training, significantly reducing time and resources compared to training an MoE model from scratch. Initializing the MoE model with pre-trained dense weights helps maintain performance while benefiting from the increased capacity.
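The upcycling step can be sketched in NumPy under clearly simplified assumptions: a small ReLU FFN stands in for Llama 3-8B's SwiGLU layers, the "pre-trained" weights are random, and the router initialization is our own choice. Each of the 8 experts starts as an exact copy of the dense FFN and only the router is new, which is why the upcycled model initially reproduces the dense model's output while top-2 routing keeps per-token compute at two expert evaluations.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, d_ff, n_experts, top_k = 16, 64, 8, 2

# Dense FFN weights standing in for a pre-trained checkpoint (random here).
w_in = rng.standard_normal((d_model, d_ff)) * 0.1
w_out = rng.standard_normal((d_ff, d_model)) * 0.1


def ffn(x, w1, w2):
    return np.maximum(x @ w1, 0.0) @ w2   # ReLU FFN (a simplification of SwiGLU)


# Upcycling: every expert is a copy of the dense FFN; only the router is new.
experts = [(w_in.copy(), w_out.copy()) for _ in range(n_experts)]
router = rng.standard_normal((d_model, n_experts)) * 0.01


def moe(x):
    logits = x @ router                              # (tokens, n_experts)
    top = np.argsort(logits, axis=-1)[:, -top_k:]    # top-2 experts per token
    out = np.zeros_like(x)
    for t in range(x.shape[0]):
        sel = logits[t, top[t]]
        gates = np.exp(sel - sel.max())
        gates /= gates.sum()                         # softmax over the selected experts
        for g, e in zip(gates, top[t]):
            w1, w2 = experts[e]
            out[t] += g * ffn(x[t:t + 1], w1, w2)[0]
    return out


x = rng.standard_normal((4, d_model))
print(np.abs(moe(x) - ffn(x, w_in, w_out)).max())   # ~0 right after upcycling
```

Continued training then lets the copied experts diverge and specialize.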

Results: The upcycled MoE model achieves a 2× increase in model capacity compared to the original Llama 3-8B dense model.


Model Efficiency & Compression

Recent advances have introduced innovative techniques to compress and accelerate LLMs. Methods such as quantization and layer pruning achieve substantial speedups (2× to 5×) and memory savings while maintaining, or even improving, model performance. This section covers papers that improve LLM efficiency through compression.

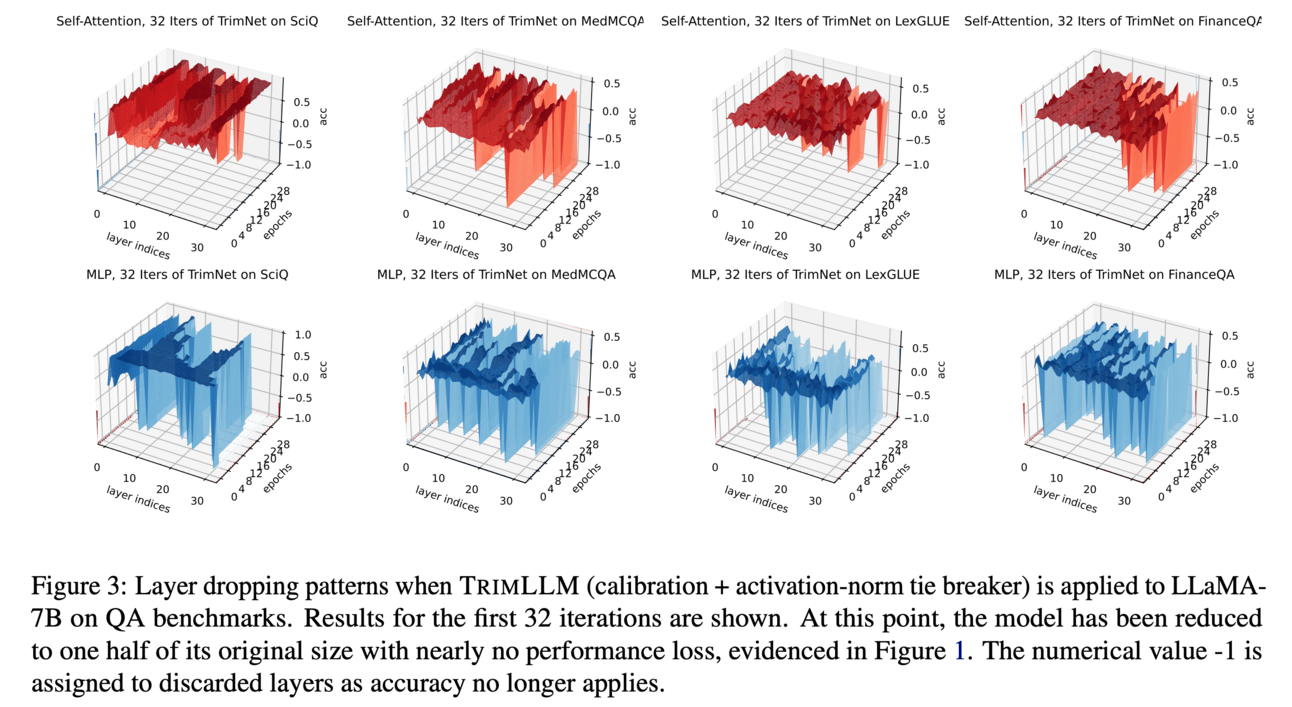

TrimLLM introduces a progressive layer dropping technique inspired by the layer-wise specialization phenomenon observed in LLMs. The method begins with a thorough analysis of each transformer layer's contribution to the model's performance on domain-specific tasks. Less critical layers are identified by measuring the change in loss or accuracy when a layer is temporarily removed.

The progressive layer dropping involves iteratively removing these least important layers. After each layer is dropped, the model is evaluated on validation data to ensure performance remains within acceptable bounds. Domain-specific fine-tuning is applied after each pruning step, allowing the model to adjust to its new, slimmer architecture and mitigate any performance loss. The method also requires no specialized hardware, making it accessible to a wide range of users.
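The dropping loop can be sketched on a toy residual network. The model, the scales that make three layers nearly useless, and the tolerance are hypothetical. A layer's importance is the validation loss when only that layer is removed; the least important layer is dropped while the loss stays within tolerance, and TrimLLM's domain-specific fine-tuning between rounds is only marked by a comment.

```python
import numpy as np

rng = np.random.default_rng(0)
d, n_layers = 8, 6
# Toy residual "transformer": layer i maps x -> x + x @ A[i].
# Layers 1, 3 and 4 get tiny weights, so they contribute little (hypothetical setup).
scales = [0.5, 0.01, 0.5, 0.01, 0.01, 0.5]
A = [rng.standard_normal((d, d)) * s / np.sqrt(d) for s in scales]


def forward(x, keep):
    for i in keep:
        x = x + x @ A[i]
    return x


x_val = rng.standard_normal((64, d))
y_val = forward(x_val, range(n_layers))   # targets produced by the full model


def val_loss(keep):
    return float(np.mean((forward(x_val, keep) - y_val) ** 2))


def progressive_drop(tolerance):
    keep = list(range(n_layers))
    while len(keep) > 1:
        # importance of a layer = validation loss with that layer alone removed
        trials = {i: val_loss([j for j in keep if j != i]) for i in keep}
        least = min(trials, key=trials.get)
        if trials[least] > tolerance:
            break
        keep.remove(least)
        # (TrimLLM would fine-tune on domain data here before the next round.)
    return keep


print(progressive_drop(tolerance=0.01))   # indices of the surviving layers
```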

Results: TrimLLM achieved 2.1-5.7× inference speedup on consumer GPUs and up to 3.1× on A100 GPUs compared to state-of-the-art compression methods, with no loss in accuracy at a 50-60% model compression ratio.

A Microsoft Research team introduced a method to mitigate quantization errors by adding full-precision low-rank weight matrices that act on unquantized activations. The approach augments the quantized weight matrix with a low-rank correction matrix, resulting in a corrected weight matrix that combines both. A joint optimization objective is defined to minimize the discrepancy between the outputs of the corrected quantized model and the original full-precision model.

During fine-tuning, quantization-aware training is performed where both the quantized weights and correction matrices are updated. The quantized weights are adjusted using quantized gradients, while the correction matrices are updated using full-precision gradients, effectively compensating for the precision loss in aggressive quantization. By correcting the activations through these low-rank matrices, the method restores model accuracy without significantly increasing model size, as the correction matrices require minimal additional storage.
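A minimal NumPy sketch of the correction idea, with simplifications chosen for brevity: weights are quantized with plain per-row round-to-nearest, activations are left unquantized, and the low-rank factors come from an SVD of the weight quantization error rather than from the paper's joint objective and quantization-aware fine-tuning. The corrected layer computes `w_q @ x + L @ (R @ x)`.

```python
import numpy as np


def quantize_rtn(w, bits=4):
    """Symmetric per-row round-to-nearest quantization, returned dequantized as floats."""
    qmax = 2 ** (bits - 1) - 1
    scale = np.abs(w).max(axis=1, keepdims=True) / qmax
    return np.clip(np.round(w / scale), -qmax - 1, qmax) * scale


def low_rank_correction(w, w_q, rank):
    """Rank-r factors (L, R) with L @ R approximating the quantization error w - w_q."""
    u, s, vt = np.linalg.svd(w - w_q, full_matrices=False)
    return u[:, :rank] * s[:rank], vt[:rank]


rng = np.random.default_rng(0)
w = rng.standard_normal((128, 128))
x = rng.standard_normal((128, 32))        # batch of full-precision activations (columns)
w_q = quantize_rtn(w, bits=3)
L, R = low_rank_correction(w, w_q, rank=16)

y_ref = w @ x
err_plain = np.linalg.norm(y_ref - w_q @ x)
err_corr = np.linalg.norm(y_ref - (w_q @ x + L @ (R @ x)))   # correction acts on unquantized x
print(err_plain, "->", err_corr)
```

Here the two factors add `2 · 128 · 16` values, a quarter of the dense matrix; for real layer widths and small ranks the fraction is far smaller.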

Results: The proposed approach reduced the accuracy gap by over 50% compared to quantized models without correction.

LLM-BIP: Structured Pruning for Large Language Models with Block-Wise Forward Importance Propagation

LLM-BIP introduces a structured pruning technique that calculates the importance of parameters using block-wise forward importance propagation. The model is divided into blocks, such as attention heads or MLP layers. The method simulates the impact of pruning each block by approximating its effect on the model's output using a forward pass and leveraging Lipschitz continuity to ensure stability.

By assessing how changes in a block affect the loss function, the importance of different structures is accurately evaluated. Blocks are ranked based on these importance scores, and the least important ones are pruned while ensuring the model remains functional. After pruning, fine-tuning is conducted to recover any potential performance degradation, using learning rate schedules that stabilize training.
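The forward-importance idea can be illustrated for the hidden units of a single MLP block. This is a simplified sketch, not LLM-BIP's exact estimator: zeroing hidden unit `j` shifts the block output by `h_j * W2[j]`, so the average of `|h_j| * ||W2[j]||` over calibration tokens measures its effect, and a Lipschitz constant for the later blocks turns this into a bound on the change in the model's output. The weights and the eight deliberately weakened units are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
d, h = 16, 64
W1 = rng.standard_normal((d, h)) / np.sqrt(d)
W2 = rng.standard_normal((h, d)) / np.sqrt(h)
W2[:8] *= 0.01   # hypothetical: the first 8 hidden units barely affect the output


def mlp(x, mask):
    return (np.maximum(x @ W1, 0.0) * mask) @ W2


def forward_importance(x_calib):
    """Mean size of the block-output change caused by zeroing each hidden unit.

    Zeroing unit j shifts the output by h_j * W2[j], whose norm is |h_j| * ||W2[j]||.
    A later block with Lipschitz constant K can amplify this by at most K.
    """
    hid = np.maximum(x_calib @ W1, 0.0)
    return (np.abs(hid) * np.linalg.norm(W2, axis=1)).mean(axis=0)


x_calib = rng.standard_normal((256, d))
scores = forward_importance(x_calib)
mask = np.ones(h)
mask[np.argsort(scores)[:8]] = 0.0          # prune the 8 least important units

x_test = rng.standard_normal((64, d))
full, pruned = mlp(x_test, np.ones(h)), mlp(x_test, mask)
print(np.linalg.norm(full - pruned) / np.linalg.norm(full))   # expected to be small
```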

Results: The method increases accuracy by 3.26% compared to previous pruning methods at similar compression rates.

DFRot reduces outliers in LLM quantization by refining weight matrices through rotation to produce outlier-free and evenly distributed activation values. The method applies randomized Hadamard transforms to the weight matrices, spreading out large values and reducing the chance of large activations. A weighted loss function is introduced to penalize activations exceeding a certain threshold, effectively handling long-tail distributions.
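The effect of a randomized Hadamard rotation on an outlier channel can be checked numerically. The sketch assumes a single linear layer and one synthetic outlier channel. Folding the rotation into the weights (`R.T @ W`) leaves the layer output unchanged, while the rotated activations have a much smaller peak-to-RMS ratio, which is what makes them easier to quantize. DFRot's weighted loss and rotation refinement are not shown.

```python
import numpy as np


def hadamard(n):
    """Orthonormal Hadamard matrix via Sylvester's construction (n must be a power of 2)."""
    H = np.array([[1.0]])
    while H.shape[0] < n:
        H = np.block([[H, H], [H, -H]])
    return H / np.sqrt(n)


def max_to_rms(a):
    """Peak magnitude relative to RMS: large values make low-bit quantization lossy."""
    return float(np.abs(a).max() / np.sqrt(np.mean(a ** 2)))


rng = np.random.default_rng(0)
n = 64
# Randomized Hadamard: H @ diag(random signs), which is still orthogonal.
R = hadamard(n) * rng.choice([-1.0, 1.0], size=n)

x = rng.standard_normal((32, n))
x[:, 5] *= 50.0                     # one synthetic outlier channel
W = rng.standard_normal((n, n))

x_rot = x @ R                       # rotated activations
W_rot = R.T @ W                     # rotation folded into the weights
print(max_to_rms(x), "->", max_to_rms(x_rot))
```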

The rotation matrices are further refined using orthogonal Procrustes optimization, ensuring the transformed weights remain close to the original weights while achieving desired activation properties. This results in a more uniform activation distribution, facilitating better quantization without sacrificing precision for other values.
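The orthogonal Procrustes step has a closed-form solution via one SVD. The generic solver below finds the orthogonal `R` that best maps `a` onto `b`; how DFRot chooses `a` and `b` is not reproduced, and the data is synthetic. SciPy provides the same solver as `scipy.linalg.orthogonal_procrustes`.

```python
import numpy as np


def procrustes_rotation(a, b):
    """Orthogonal R minimizing ||a @ R - b||_F: with a.T @ b = U S V^T, R = U V^T."""
    u, _, vt = np.linalg.svd(a.T @ b)
    return u @ vt


rng = np.random.default_rng(1)
a = rng.standard_normal((100, 8))
q, _ = np.linalg.qr(rng.standard_normal((8, 8)))   # hidden ground-truth orthogonal matrix
b = a @ q + 0.01 * rng.standard_normal((100, 8))   # noisy rotated copy of a
R = procrustes_rotation(a, b)
print(np.abs(R - q).max())                          # small: the rotation is recovered
```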

Results: DFRot improved perplexity by 0.25 on W4A4KV4 and 0.21 on W4A4KV16, demonstrating effectiveness in reducing outliers and massive activations in quantized LLaMA3-8B.

GeLoRA introduces a geometry-inspired approach that dynamically adjusts the ranks of Low-Rank Adaptation (LoRA) matrices based on the intrinsic dimensionality of hidden state representations in each layer. By computing the singular values of hidden representations, the method estimates the intrinsic dimensionality, with layers processing more complex information receiving higher ranks.

Analyzing the decay of singular values allows GeLoRA to adjust ranks to match each layer's complexity, ensuring efficient parameter allocation. This leverages geometric principles to determine a theoretical lower bound for optimal rank selection, balancing efficiency and expressiveness.
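As a rough illustration of spectrum-based rank selection, the sketch assigns each layer a LoRA rank equal to the number of singular values needed to capture 90% of the hidden-state energy. The 90% rule, the clipping bounds and the synthetic hidden states are assumptions; GeLoRA's own intrinsic-dimension estimator and its theoretical lower bound are not reproduced.

```python
import numpy as np


def rank_from_spectrum(hidden, energy=0.9, r_min=1, r_max=64):
    """Hypothetical rule: smallest k whose top-k singular values hold `energy` of the variance."""
    centered = hidden - hidden.mean(axis=0)
    s = np.linalg.svd(centered, compute_uv=False)
    cum = np.cumsum(s ** 2) / np.sum(s ** 2)
    k = int(np.searchsorted(cum, energy)) + 1
    return int(np.clip(k, r_min, r_max))


rng = np.random.default_rng(0)


def layer_states(intrinsic_dim, n=512, d=256):
    """Synthetic hidden states: points on a random low-dimensional subspace plus small noise."""
    basis = rng.standard_normal((intrinsic_dim, d))
    return rng.standard_normal((n, intrinsic_dim)) @ basis + 0.01 * rng.standard_normal((n, d))


ranks = [rank_from_spectrum(layer_states(k)) for k in (4, 16, 48)]
print(ranks)   # lower intrinsic dimension -> smaller LoRA rank
```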

During fine-tuning, only the LoRA parameters are updated while the base model remains frozen. This parameter-efficient strategy enables task-specific adaptation without requiring full model training, making it suitable for environments with limited resources.

Memory Management & KV Cache Optimization

The key-value (KV) cache is central to efficient transformer inference: it stores the key and value vectors of previously processed tokens so they do not have to be recomputed at every decoding step. However, the cache grows linearly with sequence length and batch size, so it can consume significant memory, leading to scalability issues and high computational costs.
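Some quick arithmetic shows why the cache matters. The standard estimate is 2 (keys and values) × layers × KV heads × head dimension × sequence length × batch size × bytes per element; the shapes below are those of a Llama-2-7B-style model (32 layers, 32 KV heads, head dimension 128) in fp16.

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, batch=1, bytes_per_elem=2):
    # factor 2: one key vector and one value vector per token, head and layer
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * batch * bytes_per_elem


per_token = kv_cache_bytes(32, 32, 128, seq_len=1)
full_ctx = kv_cache_bytes(32, 32, 128, seq_len=4096)
print(per_token / 2**20, "MiB per token")         # 0.5 MiB per token
print(full_ctx / 2**30, "GiB for 4,096 tokens")   # 2.0 GiB for 4,096 tokens
```

A batch of 8 such sequences would need 16 GiB for the cache alone; keeping only 20% of it would shrink the single-sequence figure from 2 GiB to about 0.4 GiB.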

Recent research has focused on optimizing KV cache memory usage without compromising model performance. Using compression and pruning techniques, researchers aim to reduce memory footprints by up to 80-90%, enabling LLMs to handle longer sequences and to be deployed in resource-constrained environments.

In this section, we explore innovative approaches that address memory efficiency in LLMs, particularly focusing on KV cache optimization through dynamic compression, sparse coding, adaptive strategies, and novel pruning methods.

ZigZagKV introduces a dynamic KV cache compression method that allocates memory budgets per layer based on layer uncertainty. The key idea is to assess the necessity of memory allocation for each layer individually, rather than applying a uniform compression strategy across all layers. The method estimates uncertainty scores for each layer by analyzing attention patterns and the variability of hidden state outputs. Layers with higher uncertainty, which indicates greater importance for accurate predictions, are assigned larger memory budgets to retain more precise KV representations. Conversely, layers with lower uncertainty have their KV caches compressed to save memory without significantly impacting performance.

By dynamically adjusting the compression ratio for the KV cache in each layer, the model retains essential information in critical layers while substantially reducing overall memory usage. This approach ensures efficient memory utilization, enabling LLMs to handle longer contexts without exceeding memory limitations.
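Uncertainty-driven allocation can be sketched as a proportional split of a fixed total token budget across layers. The uncertainty values, the per-layer floor and the proportional rule are hypothetical stand-ins; the sketch only shows how higher-uncertainty layers receive larger KV budgets while the total stays fixed.

```python
import numpy as np


def allocate_budgets(uncertainty, total_budget, min_per_layer=16):
    """Hypothetical rule: split a total KV-token budget across layers in proportion
    to each layer's uncertainty, with a guaranteed minimum per layer."""
    u = np.asarray(uncertainty, dtype=float)
    spare = total_budget - min_per_layer * len(u)
    if spare < 0:
        raise ValueError("total_budget too small for the per-layer minimum")
    raw = min_per_layer + spare * u / u.sum()
    budgets = np.floor(raw).astype(int)
    # hand out the tokens lost to rounding, largest fractional parts first
    for i in np.argsort(raw - budgets)[::-1][: total_budget - budgets.sum()]:
        budgets[i] += 1
    return budgets


budgets = allocate_budgets([0.9, 0.2, 0.5, 0.4], total_budget=512)
print(budgets)   # the most uncertain layer (index 0) keeps the most tokens
```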

Results: The proposed method reduces KV cache memory usage to approximately 20% of the original size during inference, significantly alleviating memory bottlenecks.

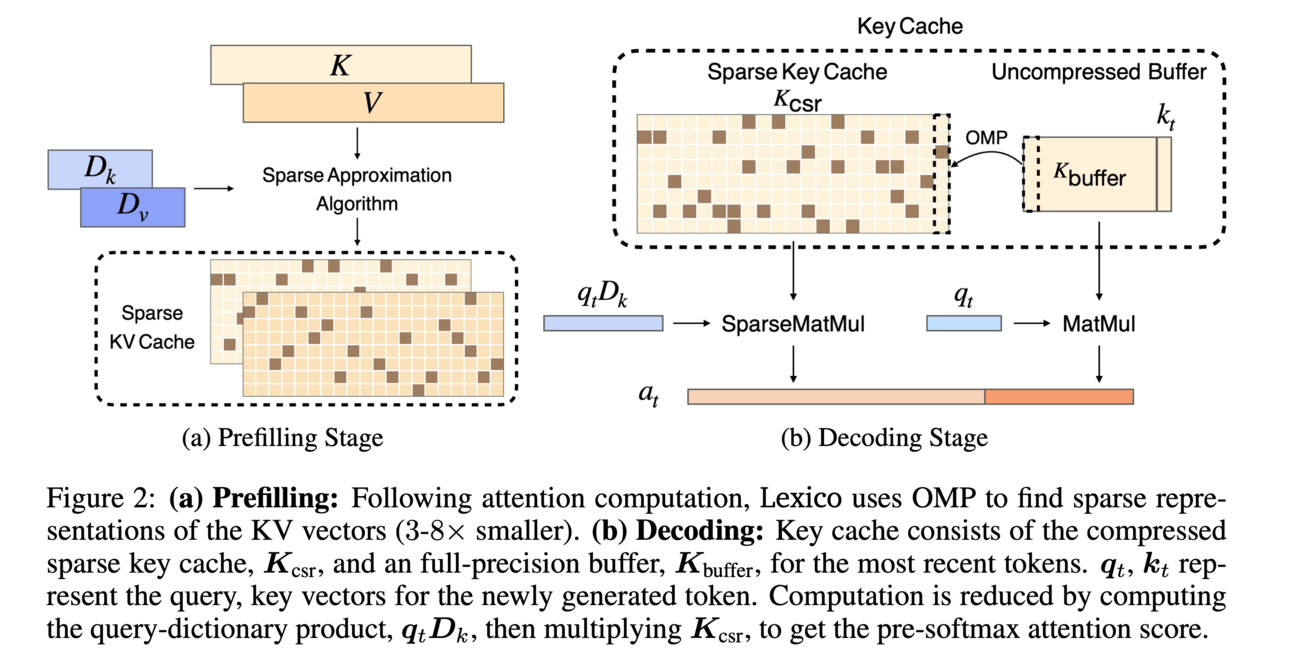

Lexico uses sparse coding with a universal dictionary to approximate KV caches as sparse linear combinations of dictionary atoms. The universal dictionary, consisting of approximately 4,000 atoms (basis vectors), captures essential patterns in KV caches across various inputs, tasks, and model architectures. By representing each key and value vector as a sparse combination of these atoms, the method compresses the KV cache significantly.

Orthogonal Matching Pursuit (OMP) is utilized to find the optimal sparse representation for each vector, ensuring accurate reconstruction with minimal coefficients. The sparsity level can be adjusted to control the compression ratio, allowing flexible trade-offs between memory savings and performance. This approach maintains the model's ability to reconstruct necessary information during inference, achieving extreme compression without significant loss.
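Orthogonal Matching Pursuit itself is short enough to write out. In this sketch the dictionary is random with 1,024 unit-norm atoms instead of Lexico's learned ~4,000-atom universal dictionary, and the key vector is synthetic; the point is that a 64-dimensional vector gets stored as a few (index, coefficient) pairs.

```python
import numpy as np


def omp(D, x, sparsity):
    """Orthogonal Matching Pursuit: approximate x using at most `sparsity` columns of D."""
    residual, support = x.copy(), []
    coef = np.zeros(0)
    for _ in range(sparsity):
        # pick the atom most correlated with the part of x not yet explained
        j = int(np.argmax(np.abs(D.T @ residual)))
        if j in support:
            break                                   # residual is already (numerically) zero
        support.append(j)
        # "orthogonal" step: re-fit all chosen atoms jointly by least squares
        coef, *_ = np.linalg.lstsq(D[:, support], x, rcond=None)
        residual = x - D[:, support] @ coef
    code = np.zeros(D.shape[1])
    code[support] = coef
    return code


rng = np.random.default_rng(0)
head_dim, n_atoms = 64, 1024
D = rng.standard_normal((head_dim, n_atoms))
D /= np.linalg.norm(D, axis=0)                            # unit-norm atoms
key = D[:, [7, 300, 911]] @ np.array([1.5, -0.8, 0.6])    # synthetic, truly 3-sparse key
code = omp(D, key, sparsity=3)
print(np.flatnonzero(code), np.linalg.norm(D @ code - key))
```

Raising `sparsity` trades memory for reconstruction accuracy, which is the knob the paragraph above describes.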

Results: Lexico maintains 90-95% of the original model performance despite significant compression.

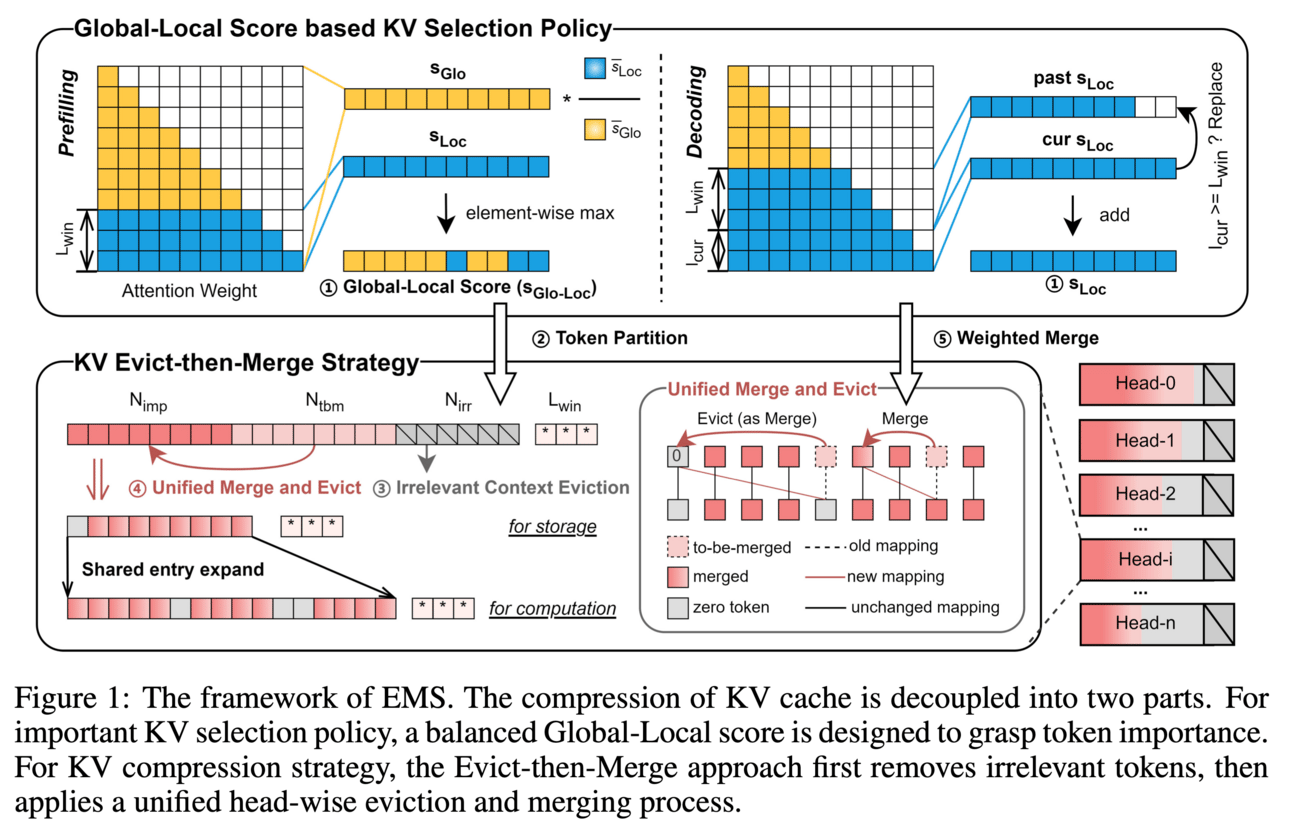

EMS introduces an adaptive evict-then-merge strategy for KV cache compression by calculating a Global-Local Importance (GLI) score for each token. The GLI score integrates global accumulated attention scores—reflecting how much a token is attended to throughout the entire sequence—with local attention scores that capture importance within specific contexts or attention heads. This combined score provides a more accurate estimation of token significance.

Using the GLI scores, less important tokens are first evicted to reduce the KV cache size. Then, redundant or similar tokens are merged by aggregating their representations, further saving memory. This process is applied on a per-head basis, recognizing that different attention heads may focus on different aspects of the input. A zero-class mechanism is introduced to handle evicted tokens consistently during inference without adverse effects.

By adapting the compression strategy based on the GLI scores and processing it head-wise, EMS effectively balances memory savings with performance preservation, allowing efficient handling of long-context inputs in LLMs.
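A simplified evict-then-merge pass for one attention head could look like the sketch below. The GLI formula (an even blend of average attention from all queries and from the last few queries), the similarity threshold and the score-weighted merge are assumptions rather than EMS's exact definitions, and the zero-class mechanism is omitted.

```python
import numpy as np


def gli_scores(attn, window=4, alpha=0.5):
    """Hypothetical Global-Local Importance for one head.

    attn: (n_queries, n_keys) attention weights over the cached keys.
    global part: average attention each key received from all queries;
    local part: average attention from only the most recent `window` queries.
    """
    global_part = attn.sum(axis=0) / attn.shape[0]
    local_part = attn[-window:].sum(axis=0) / window
    return alpha * global_part + (1 - alpha) * local_part


def evict_then_merge(keys, values, scores, keep_n, sim_threshold=0.9):
    # 1) evict: keep only the keep_n highest-scoring tokens (original order preserved)
    kept = np.sort(np.argsort(scores)[::-1][:keep_n])
    k, v, w = keys[kept], values[kept], scores[kept]
    # 2) merge: fold a token into an earlier survivor whose key is nearly parallel
    out_k, out_v, out_w = [], [], []
    for ki, vi, wi in zip(k, v, w):
        unit = ki / np.linalg.norm(ki)
        match = next((j for j, ok in enumerate(out_k)
                      if unit @ (ok / np.linalg.norm(ok)) > sim_threshold), None)
        if match is None:
            out_k.append(ki.copy())
            out_v.append(vi.copy())
            out_w.append(wi)
        else:
            total = out_w[match] + wi               # score-weighted average of the pair
            out_k[match] = (out_w[match] * out_k[match] + wi * ki) / total
            out_v[match] = (out_w[match] * out_v[match] + wi * vi) / total
            out_w[match] = total
    return np.array(out_k), np.array(out_v)


rng = np.random.default_rng(0)
n, d = 12, 8
keys = rng.standard_normal((n, d))
values = rng.standard_normal((n, d))
keys[3] = keys[2] * 1.01                       # token 3 nearly duplicates token 2
attn = rng.dirichlet(np.ones(n), size=16)      # 16 queries; each row sums to 1
scores = gli_scores(attn)
scores[[2, 3]] += 1.0                          # make sure both survive eviction for the demo
k_c, v_c = evict_then_merge(keys, values, scores, keep_n=6)
print(k_c.shape)                               # at most 5 rows: token 3 is merged, not kept
```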

Results: EMS achieves state-of-the-art performance with low perplexity, improving metrics by over 1.28 points on four LLMs evaluated on the LongBench benchmark under a 256-token cache budget.

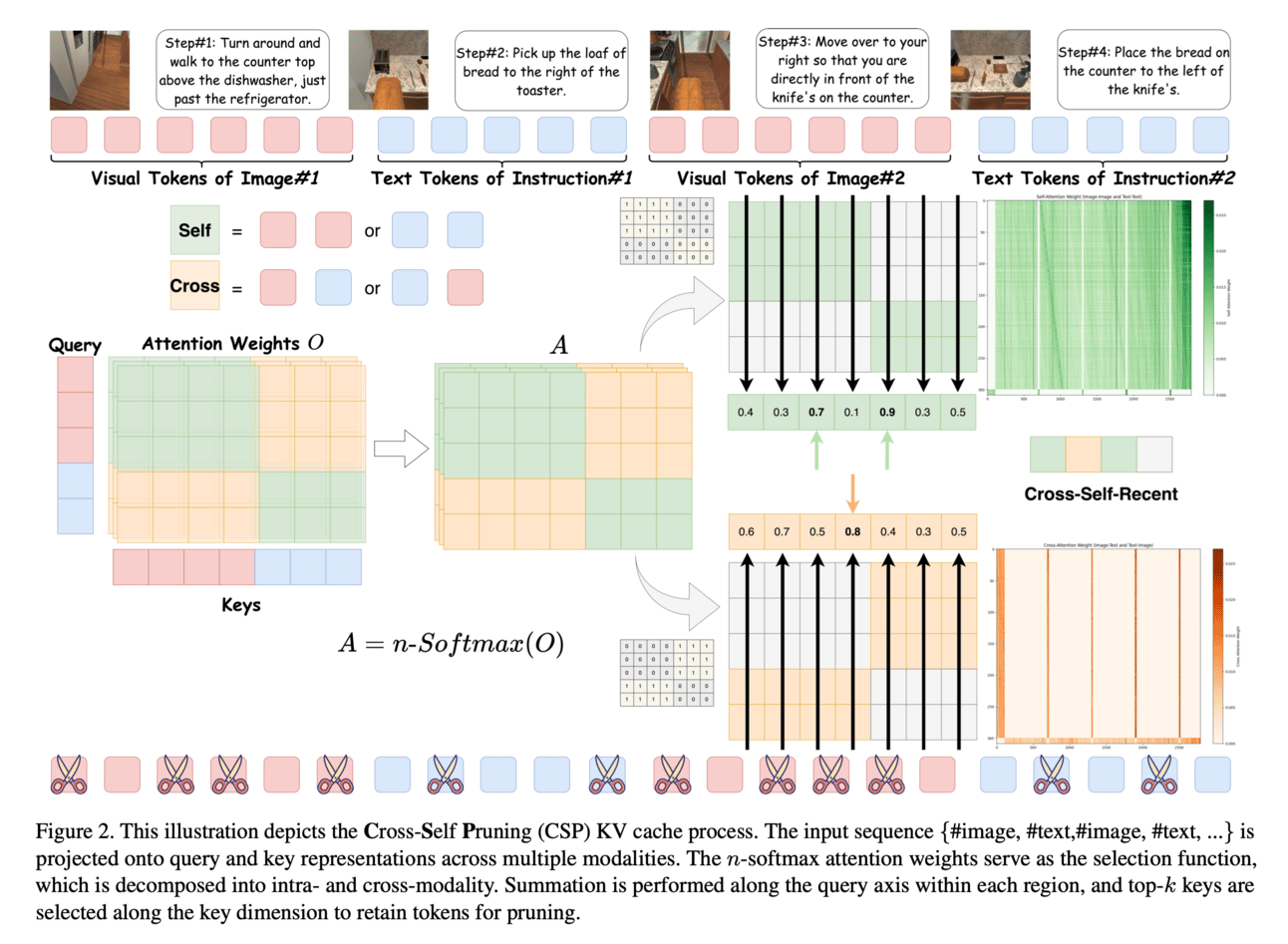

The proposed method, Cross-Self Pruning (CSP), decomposes attention scores into intra-modality (within the same modality) and inter-modality (across modalities) components. By separately analyzing these components, the model can more precisely estimate the importance of tokens in both visual and textual modalities.

Intra-modality attention captures relationships among tokens within the same modality, helping to identify essential visual features or textual elements. Inter-modality attention examines interactions between modalities, ensuring that tokens critical for cross-modal understanding are retained. Additionally, an n-softmax function is introduced to maintain the smoothness of attention scores after pruning, adjusting attention distributions to account for the pruned tokens and preventing abrupt changes that could destabilize performance.
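The intra/inter decomposition is easy to express with a modality mask. In this sketch the attention matrix is random and the keep rule (top tokens per modality by each score separately) is a hypothetical illustration; CSP's n-softmax renormalization is not shown.

```python
import numpy as np


def modality_scores(attn, is_image):
    """Per-key importance split into intra- and inter-modality parts.

    attn: (n, n) attention weights, rows = queries, columns = keys.
    is_image: (n,) boolean mask, True for image tokens, False for text tokens.
    """
    same = is_image[:, None] == is_image[None, :]   # query and key share a modality
    intra = (attn * same).sum(axis=0)
    inter = (attn * ~same).sum(axis=0)
    return intra, inter


def keep_mask(attn, is_image, frac_per_score=0.25):
    """Hypothetical rule: within each modality keep the top tokens by intra score and,
    separately, by inter score, so tokens important for cross-modal links survive."""
    intra, inter = modality_scores(attn, is_image)
    keep = np.zeros(len(is_image), dtype=bool)
    for modality in (True, False):
        idx = np.flatnonzero(is_image == modality)
        n_keep = max(1, int(len(idx) * frac_per_score))
        for score in (intra, inter):
            keep[idx[np.argsort(score[idx])[::-1][:n_keep]]] = True
    return keep


rng = np.random.default_rng(0)
n = 10
logits = rng.standard_normal((n, n))
attn = np.exp(logits) / np.exp(logits).sum(axis=1, keepdims=True)   # row-wise softmax
is_image = np.array([True] * 6 + [False] * 4)
intra, inter = modality_scores(attn, is_image)
keep = keep_mask(attn, is_image)
print(np.flatnonzero(keep))
```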

By employing this tailored pruning approach, the model effectively reduces the KV cache size without losing important information from either modality, leading to more efficient inference in vision-language tasks.

Results: The Cross-Self Pruning (CSP) method matches the performance of models with full KV caches and outperforms previous pruning methods, achieving up to a 41% memory reduction.

iLLaVA optimizes large vision-language models (LVLMs) by merging redundant tokens in image representations. Using a fast and precise algorithm, the method identifies tokens within image inputs that carry redundant or less significant information. These redundant tokens are merged, reducing the number of tokens required to represent an image.

To ensure important visual information is not lost, the method recycles information from the removed tokens by integrating their contributions into the remaining tokens. This process preserves essential features and relationships necessary for the model's performance. By reducing the token count for images, iLLaVA decreases memory usage and doubles the inference throughput, as the model has fewer tokens to process. The approach achieves these efficiency gains with minimal modifications to the model architecture, facilitating easy integration into existing systems.
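Merging that recycles rather than discards information can be sketched with ToMe-style bipartite matching. This is a stand-in technique: iLLaVA's own token-selection algorithm may differ, and the 576 random "patch tokens" (a typical image-token count for LLaVA-1.5-style encoders) are synthetic.

```python
import numpy as np


def merge_tokens(x, r):
    """ToMe-style bipartite merging: reduce n tokens to n - r
    (r must not exceed the number of A tokens, i.e. ceil(n / 2))."""
    a, b = x[0::2], x[1::2]
    an = a / np.linalg.norm(a, axis=1, keepdims=True)
    bn = b / np.linalg.norm(b, axis=1, keepdims=True)
    sim = an @ bn.T                                   # cosine similarity, A tokens vs B tokens
    best_b, best_sim = sim.argmax(axis=1), sim.max(axis=1)
    merged = np.argsort(best_sim)[::-1][:r]           # the r most redundant A tokens
    kept_a = np.setdiff1d(np.arange(len(a)), merged)
    b_sum, b_count = b.copy(), np.ones(len(b))
    for i in merged:                                  # fold each merged token into its partner
        b_sum[best_b[i]] += a[i]
        b_count[best_b[i]] += 1
    return np.concatenate([a[kept_a], b_sum / b_count[:, None]])


rng = np.random.default_rng(0)
x = rng.standard_normal((576, 32))                    # synthetic image-patch tokens
print(merge_tokens(x, r=100).shape)                   # (476, 32)
```

Because merged tokens are averaged into their partners, their content survives in the remaining tokens instead of being dropped.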

Results: iLLaVA doubles throughput and halves memory consumption with only a 0.2% drop in performance, demonstrating efficiency gains without sacrificing accuracy.

Training & Optimization Methods

These papers improve LLM training efficiency and effectiveness through enhanced optimization techniques, distributed training methods, and novel adaptation approaches, achieving better convergence and reduced computational requirements.

EDiT introduces an efficient distributed training framework that integrates a specific variant of Local Stochastic Gradient Descent (Local SGD) with model sharding. By allowing workers to perform multiple local updates before synchronizing, the method reduces communication frequency and overhead. The key innovation lies in using layer-wise synchronization during the forward pass. This approach overlaps computation and communication, minimizing idle time and enhancing efficiency.

To stabilize training, EDiT introduces a pseudo-gradient penalty that acts as a regularizer, mitigating the divergence that can occur with delayed updates in Local SGD. Furthermore, recognizing the heterogeneity of cluster environments, the researchers present A-EDiT, an asynchronous version of the method. A-EDiT accommodates varied computational resources and network conditions by allowing workers to operate without strict synchronization, further improving scalability and robustness in diverse training environments.
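The core Local SGD pattern, several local steps per worker followed by one synchronization, can be simulated on toy quadratic objectives. The pseudo-gradient is the gap between the current global weights and the workers' average; here it is simply norm-clipped as a stand-in for EDiT's pseudo-gradient penalty, and the layer-wise, overlapped synchronization is not modeled.

```python
import numpy as np

rng = np.random.default_rng(0)
n_workers, dim, local_steps, rounds = 4, 10, 8, 30
lr, outer_lr, clip = 0.05, 1.0, 1.0
# Each worker has its own data, modeled as f_k(w) = 0.5 * ||w - c_k||^2.
centers = rng.standard_normal((n_workers, dim))


def local_sgd():
    global_w = np.zeros(dim)
    for _ in range(rounds):
        local_ws = []
        for c in centers:
            w = global_w.copy()
            for _ in range(local_steps):             # no communication inside this loop
                grad = (w - c) + 0.1 * rng.standard_normal(dim)
                w -= lr * grad
            local_ws.append(w)
        # one synchronization per round: pseudo-gradient = global minus workers' mean
        pseudo_grad = global_w - np.mean(local_ws, axis=0)
        norm = np.linalg.norm(pseudo_grad)
        if norm > clip:                               # simple stand-in for EDiT's penalty
            pseudo_grad *= clip / norm
        global_w -= outer_lr * pseudo_grad
    return global_w


w_final = local_sgd()
print(np.linalg.norm(w_final - centers.mean(axis=0)))   # distance to the global optimum
```

Communication happens once per `local_steps` updates instead of after every step, which is where the savings come from.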

APOLLO targets the memory cost of AdamW, which stores two moment estimates for every parameter. It proposes an optimizer that retains the memory efficiency of SGD while achieving performance comparable to AdamW. The key idea is to approximate the per-parameter adaptive learning rates of AdamW through a structured update rule that relies on an auxiliary low-rank optimizer state. By leveraging random projection techniques, APOLLO maintains a low-rank approximation of the gradients, capturing essential variance information without the need to store full-size moment estimates.

This approach reduces memory usage significantly since the auxiliary optimizer state requires far less storage than the full moment vectors. APOLLO adjusts learning rates adaptively based on the low-rank approximation, effectively emulating the benefits of AdamW's adaptive updates. Additionally, the researchers introduce APOLLO-Mini, a variant designed for even lower memory environments, making it suitable for training on devices with limited resources.
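The mechanism can be sketched for a single weight matrix, based on our reading of APOLLO's channel-wise variant: Adam moments are tracked only for a random rank-`r` projection of the gradient, and the ratio between the Adam update and the projected gradient in that small space gives one scaling factor per column, which is then applied to the raw full-size gradient. The projection scaling, learning rate and toy least-squares problem are assumptions.

```python
import numpy as np


class ApolloLikeSGD:
    """Sketch of an APOLLO-style update for one weight matrix of shape (m, n).

    Adam moments live only in a rank-r random projection of the gradient
    (shape (r, n) instead of (m, n)). The ratio between the Adam update and the
    projected gradient gives one scale per column, applied to the raw gradient.
    """

    def __init__(self, shape, rank, lr=1e-2, betas=(0.9, 0.999), eps=1e-8, seed=0):
        m, n = shape
        rng = np.random.default_rng(seed)
        self.P = rng.standard_normal((rank, m)) / np.sqrt(rank)   # fixed random projection
        self.m1 = np.zeros((rank, n))                              # first moment (small)
        self.m2 = np.zeros((rank, n))                              # second moment (small)
        self.lr, self.betas, self.eps, self.t = lr, betas, eps, 0

    def step(self, w, grad):
        b1, b2 = self.betas
        self.t += 1
        r = self.P @ grad                                          # projected gradient
        self.m1 = b1 * self.m1 + (1 - b1) * r
        self.m2 = b2 * self.m2 + (1 - b2) * r ** 2
        m_hat = self.m1 / (1 - b1 ** self.t)
        v_hat = self.m2 / (1 - b2 ** self.t)
        r_adam = m_hat / (np.sqrt(v_hat) + self.eps)               # Adam step in the small space
        scale = np.linalg.norm(r_adam, axis=0) / (np.linalg.norm(r, axis=0) + self.eps)
        return w - self.lr * grad * scale                          # column-wise scaled SGD step


# Toy least-squares problem to exercise the optimizer.
rng = np.random.default_rng(1)
X = rng.standard_normal((64, 32))
Y = X @ rng.standard_normal((32, 16))


def loss_and_grad(W):
    err = X @ W - Y
    return 0.5 * np.mean(err ** 2), X.T @ err / err.size


W = np.zeros((32, 16))
opt = ApolloLikeSGD(W.shape, rank=4, lr=0.05)
loss0, _ = loss_and_grad(W)
for _ in range(300):
    _, g = loss_and_grad(W)
    W = opt.step(W, g)
loss_final, _ = loss_and_grad(W)
print(loss0, "->", loss_final)
```

The optimizer state here is `2 · 4 · 16` numbers instead of the `2 · 32 · 16` that AdamW would keep for the same matrix.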

Results: APOLLO and its variant, APOLLO-Mini, deliver competitive or superior performance compared to AdamW, with significant memory savings: 3x throughput and 4x larger batch sizes on an 8xA100-80GB setup, enabling model scalability and low-end GPU training.

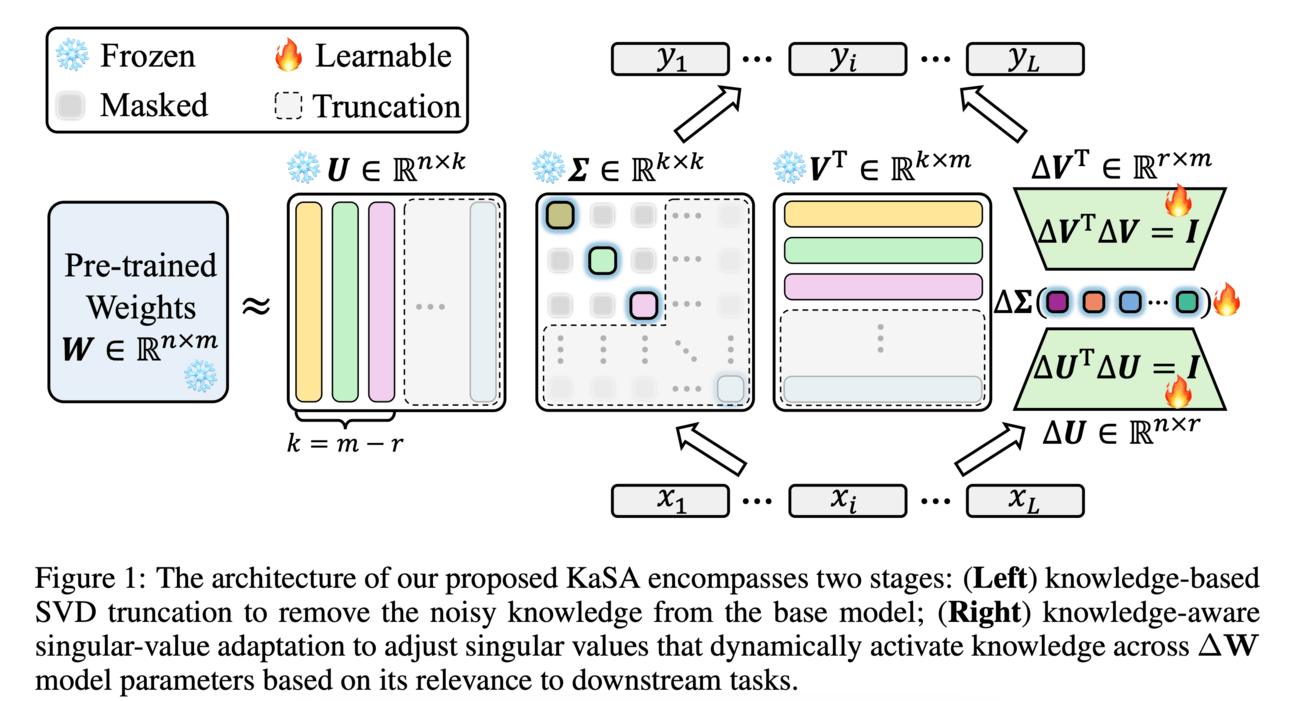

KaSA introduces a novel PEFT method that employs Singular Value Decomposition (SVD) with knowledge-aware singular values to adapt LLMs more efficiently. The method decomposes weight matrices into singular vectors and singular values, allowing fine-grained control over the model's parameter adaptation. By analyzing task relevance, KaSA dynamically activates singular values corresponding to components of the model that are most pertinent to the target task.

This knowledge-aware activation reduces noise by de-emphasizing irrelevant parameters, effectively focusing the model's capacity on task-specific knowledge. The approach improves expressiveness without significantly increasing the computational burden. By updating only the most relevant singular values, KaSA minimizes memory overhead and accelerates the fine-tuning process.
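The SVD-based structure can be illustrated in NumPy. This is a hedged sketch of the general idea, not KaSA's training procedure: the smallest singular directions of the frozen weight are dropped as a stand-in for knowledge-aware truncation, and the adapter is written in SVD form with a vector of singular values (`sigma`) acting as per-component gates. Shapes, `k_drop` and `r` are hypothetical, and no training loop is shown.

```python
import numpy as np

rng = np.random.default_rng(0)
m, n, k_drop, r = 64, 48, 8, 4
W = rng.standard_normal((m, n))                # stands in for a frozen pre-trained weight

# 1) Drop the k_drop smallest singular directions of the frozen weight
#    (hypothetical stand-in for KaSA's knowledge-aware truncation of noisy components).
U, S, Vt = np.linalg.svd(W, full_matrices=False)
W_base = (U[:, :-k_drop] * S[:-k_drop]) @ Vt[:-k_drop]

# 2) Adapter in SVD form: delta_W = A @ diag(sigma) @ B. In training, A, B and sigma
#    would be learned; each entry of sigma scales (and can switch off) one component.
A = rng.standard_normal((m, r)) * 0.01
B = rng.standard_normal((r, n)) * 0.01


def delta_w(sigma):
    return (A * sigma) @ B


def adapted_weight(sigma):
    return W_base + delta_w(sigma)


sigma = np.zeros(r)                            # start with every adapter component off
print(np.linalg.matrix_rank(W_base, tol=1e-8), np.linalg.matrix_rank(delta_w(np.ones(r))))
```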

Results: KaSA outperforms 14 popular PEFT baselines and full fine-tuning (FFT) across 16 benchmarks and 4 synthetic datasets, demonstrating its efficacy on tasks such as natural language understanding (NLU), natural language generation (NLG), and commonsense reasoning.

This paper introduces Noise Perturbation Fine-Tuning (NPFT), a method designed to diminish the impact of sensitive weights during quantization. NPFT applies random weight perturbations during parameter-efficient fine-tuning (PEFT). By adding controlled noise to the weights identified as sensitive (those with high influence on the loss Hessian trace), the method encourages the model to distribute importance more evenly across parameters.

This process reduces the reliance on outlier weights, effectively smoothing the loss landscape and enhancing the model's robustness to quantization errors. NPFT operates without requiring special treatment for outliers or altering the model architecture, making it a straightforward addition to existing fine-tuning procedures. By integrating NPFT with PEFT, the approach maintains parameter efficiency while improving quantized model performance.
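The perturbation mechanism can be sketched on a toy problem. Everything here is an illustrative assumption: a quadratic loss with a diagonal Hessian, "sensitive" weights chosen as those with the largest Hessian entries, and Gaussian noise added to them before each gradient evaluation. On a pure quadratic the noise does not move the minimum; the smoothing effect the paper describes only shows up on a real, non-quadratic loss landscape.

```python
import numpy as np

rng = np.random.default_rng(0)
dim = 20
# Toy loss 0.5 * sum(h_i * (w_i - w_star_i)^2); its Hessian is diag(h).
h = np.ones(dim)
h[:3] = 50.0                               # three weights with large curvature
w_star = rng.standard_normal(dim)


def grad(w):
    return h * (w - w_star)


# "Sensitive" = largest Hessian entries (stand-in for the paper's Hessian-trace criterion).
sensitive = h >= np.quantile(h, 0.85)


def npft_step(w, lr=0.01, noise_std=0.05):
    """One update: perturb only the sensitive weights, then take the gradient there."""
    noise = rng.normal(0.0, noise_std, dim) * sensitive
    return w - lr * grad(w + noise)


w = np.zeros(dim)
for _ in range(500):
    w = npft_step(w)
print(np.abs(w - w_star).max())
```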

Results: NPFT improved the performance of OPT and LLaMA models with both uniform and non-uniform quantizers. On LLaMA2-7B at 4 bits, NPFT let simple round-to-nearest (RTN) quantization match the performance of GPTQ while achieving better inference efficiency.

Knowledge Management & Reasoning

This section focuses on research that helps LLMs acquire, maintain, and utilize knowledge through approaches such as memory compression, knowledge graph completion, interpretability techniques, and prompt optimization. These advances not only improve the models' reasoning capabilities but also address challenges such as continual learning, interpretability, and efficient adaptation.

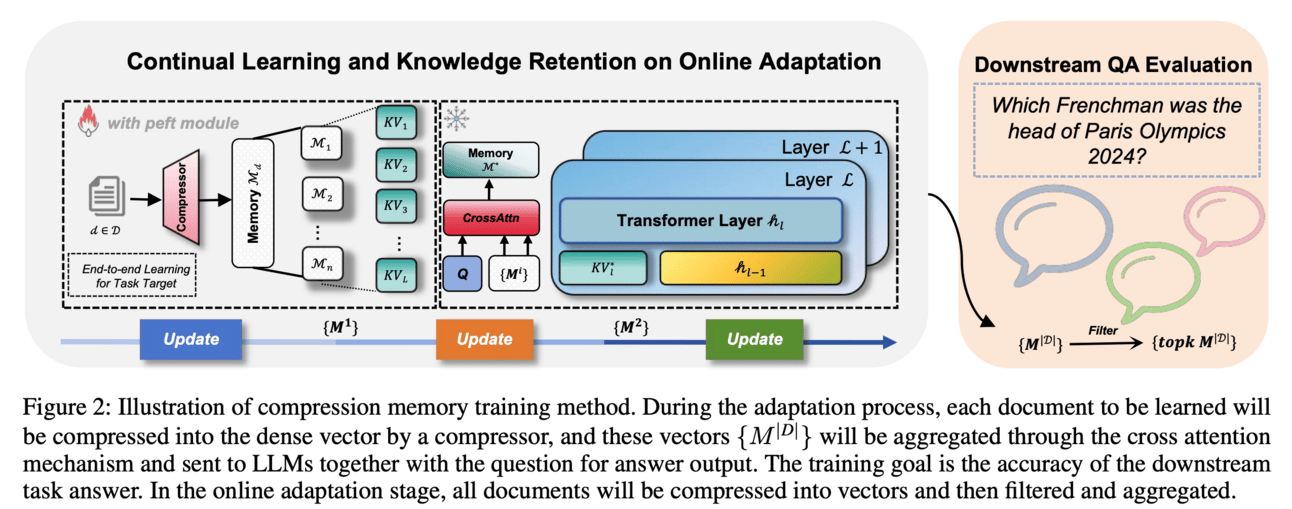

The CMT method emulates human memory by compressing and storing information from new documents into an external memory bank. Instead of updating the entire model, CMT retrieves relevant compressed memories during query processing. It introduces three key techniques to enhance performance:

Memory-Aware Objective: This encourages effective utilization of the compressed memory by adjusting the training objective, balancing reliance on stored knowledge versus internal parameters.

Self-Matching: It improves the quality of stored memories by matching and integrating similar information within the memory bank, enhancing representational coherence.

Top-Aggregation: During inference, it retrieves and aggregates the most relevant memories, allowing the model to access and reason over pertinent information without altering its original parameters.

By leveraging these techniques, CMT reduces catastrophic forgetting—the loss of previously learned knowledge when new information is added—allowing the model to incorporate new knowledge seamlessly.
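The retrieval side (compress, store, then top-aggregate) can be sketched with plain vectors. The mean-pooling compressor, cosine retrieval, softmax temperature and random "documents" are hypothetical; CMT's memory-aware objective and self-matching are training-time components not shown here. The model's parameters never change: new knowledge only adds rows to the memory bank.

```python
import numpy as np

rng = np.random.default_rng(0)
dim = 32


def compress(doc_token_embs):
    """Hypothetical compressor: mean-pool a document's token embeddings to one unit vector."""
    v = doc_token_embs.mean(axis=0)
    return v / np.linalg.norm(v)


# External memory bank: one compressed vector per ingested document.
memory_bank = np.stack([
    compress(rng.standard_normal((50, dim)) + rng.standard_normal(dim))  # topic + token noise
    for _ in range(100)
])


def top_aggregate(query, bank, k=4, temperature=0.1):
    """Retrieve the k most similar memories and blend them with softmax weights."""
    q = query / np.linalg.norm(query)
    sims = bank @ q                              # cosine similarity (bank rows are unit vectors)
    top = np.argsort(sims)[::-1][:k]
    weights = np.exp((sims[top] - sims[top].max()) / temperature)
    weights /= weights.sum()
    return weights @ bank[top], top


query = memory_bank[42] + 0.05 * rng.standard_normal(dim)   # a query about document 42
agg, top = top_aggregate(query, memory_bank)
print(top)
```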

Results: The CMT method demonstrates improved adaptability and robustness, achieving enhancements like +4.07 EM and +4.19 F1 on the StreamingQA dataset with Llama-2-7b.

The study introduces a contextualized BERT model that leverages contextual information from neighboring entities and relationships within the knowledge graph. Unlike traditional KGC approaches that rely heavily on entity descriptions or require negative triplet sampling, this model incorporates structural context directly into the embeddings. By encoding the neighborhood information of entities using BERT's transformer architecture, the model captures both local and global semantics, enabling it to infer missing links more accurately. This approach reduces computational demands and mitigates semantic inconsistencies by eliminating the need for negative sampling.

Results: The model outperforms existing methods, achieving a 5.3% improvement in standard evaluation metrics such as Mean Reciprocal Rank (MRR) and Hits@N.
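For reference, MRR averages the reciprocal rank of the correct entity across test queries, and Hits@N is the fraction of queries where the correct entity ranks in the top N. A small worked example with made-up ranks:

```python
def mrr_and_hits(ranks, n=10):
    """ranks: 1-based rank of the correct entity for each test query."""
    mrr = sum(1.0 / r for r in ranks) / len(ranks)
    hits = sum(r <= n for r in ranks) / len(ranks)
    return mrr, hits


print(mrr_and_hits([1, 3, 2, 20], n=3))   # MRR ≈ 0.471, Hits@3 = 0.75
```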

Frame Representation Hypothesis: Multi-Token LLM Interpretability and Concept-Guided Text Generation

Researchers propose the Frame Representation Hypothesis, modeling multi-token words as frames—a sequence of vectors representing each token in the word. Analyzing these frames uncovers how LLMs internally represent complex concepts composed of multiple tokens. By interpreting concepts using the average of word frames that share the same concept, the methodology facilitates manipulation of these concepts within the model's latent space. This enables detection and modification of biases, as well as concept-guided text generation where specific concepts can be emphasized or de-emphasized in generated text. The approach was validated on models such as Llama 3.1, Gemma 2, and Phi 3, demonstrating its ability to identify biases related to gender and language and to remediate them through frame manipulation.

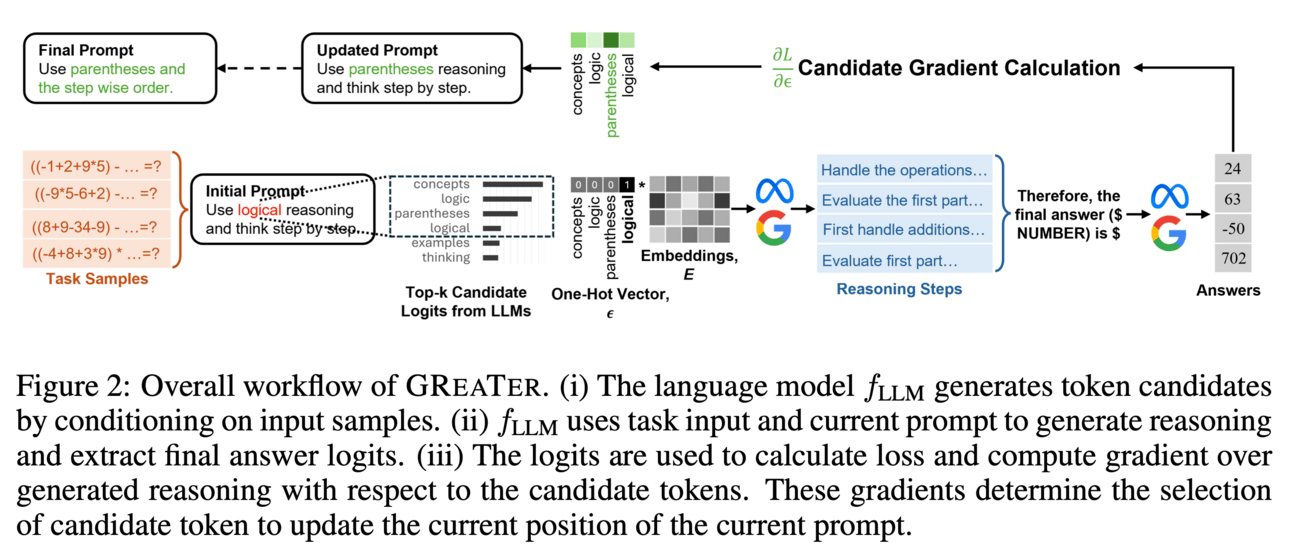

GReaTer introduces a novel approach that utilizes task loss gradients for prompt optimization in smaller language models. Instead of relying solely on textual feedback or guidance from larger models, GReaTer allows the smaller model to introspect its own performance by analyzing gradients computed during task-specific losses. By leveraging these gradients, the model adjusts its prompts to improve performance iteratively. This self-improvement mechanism enables the model to enhance its reasoning capabilities without external assistance or significant computational overhead, making it practical for deployment in resource-constrained environments.
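To show what "using task-loss gradients to edit a prompt" can mean mechanically, here is a toy AutoPrompt/GCG-style step: gradients with respect to the prompt embeddings shortlist promising token swaps, and only swaps that actually lower the loss are kept. The frozen linear "model", vocabulary and shapes are hypothetical, and GReaTer's full procedure is not reproduced.

```python
import numpy as np

rng = np.random.default_rng(0)
vocab, dim, n_classes, prompt_len = 50, 16, 3, 4
E = rng.standard_normal((vocab, dim))      # frozen token embeddings (hypothetical)
W = rng.standard_normal((n_classes, dim))  # frozen toy "model": logits = W @ mean(embeddings)


def loss_and_grad(prompt, x_emb, target):
    """Cross-entropy of the toy model and its gradient w.r.t. each prompt embedding."""
    seq = np.vstack([E[prompt], x_emb])
    h = seq.mean(axis=0)
    logits = W @ h
    p = np.exp(logits - logits.max())
    p /= p.sum()
    dlogits = p.copy()
    dlogits[target] -= 1.0                 # d(CE)/d(logits) = softmax - one_hot
    # h averages all rows, so every prompt position receives the same gradient
    return -np.log(p[target]), (W.T @ dlogits) / len(seq)


def greedy_swap(prompt, x_emb, target, top_k=8):
    """Shortlist swaps with a first-order estimate, then keep the best real improvement."""
    cur_loss, g = loss_and_grad(prompt, x_emb, target)
    # estimated loss change for putting token c at position i: (E[c] - E[prompt[i]]) . g
    est = (E @ g)[None, :] - (E[prompt] @ g)[:, None]
    best, best_loss = prompt, cur_loss
    for flat in np.argsort(est, axis=None)[:top_k]:
        i, c = np.unravel_index(flat, est.shape)
        cand = list(prompt)
        cand[i] = int(c)
        cand_loss, _ = loss_and_grad(cand, x_emb, target)
        if cand_loss < best_loss:          # exact check: never accept a worse prompt
            best, best_loss = cand, cand_loss
    return best, best_loss


prompt = [int(t) for t in rng.integers(0, vocab, prompt_len)]
x_emb = rng.standard_normal((3, dim))      # embeddings of the task input
loss0, _ = loss_and_grad(prompt, x_emb, target=1)
p, loss = prompt, loss0
for _ in range(5):
    p, loss = greedy_swap(p, x_emb, target=1)
print(loss0, "->", loss)
```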

Creative ways to use LLMs!!

Semantic Steganography: A Framework for Robust and High-Capacity Information Hiding using Large Language Models - Paper constructs a semantic space to map secret messages using ontology-entity trees, enhancing robustness, transmission reliability, and indistinguishability of stego texts.

Embracing Large Language Models in Traffic Flow Forecasting - Paper uses LLMs to improve traffic flow prediction by capturing complex spatio-temporal relationships through graph and hypergraph structures, advancing intelligent transportation systems.

IntelEX: A LLM-driven Attack-level Threat Intelligence Extraction Framework - Paper uses LLMs to convert unstructured cyber threat intelligence reports into structured formats, facilitating better security analysis and response planning.

WHAT-IF: Exploring Branching Narratives by Meta-Prompting Large Language Models [Marvel fans, check out this paper!] 🔥 - Paper applies zero-shot meta-prompting with GPT-4 to generate branching narratives from a prewritten story. Starting with a linear plot, it creates branches at key decision points by prompting the LLM to consider major plot points, ensuring coherent alternate storylines. The branching plot is stored in a graph for tracking and structuring the interactive fiction system.

SwarmGPT-Primitive: A Language-Driven Choreographer for Drone Swarms Using Safe Motion Primitive Composition - Paper achieves smooth drone swarm choreographies using natural language commands powered by LLMs, integrating motion planning and safety constraints.

Beyond pip install: Evaluating LLM Agents for the Automated Installation of Python Projects - Paper creates an agent named Installamatic that autonomously installs Python project dependencies by interpreting and following documentation, improving software installation processes.

HiVeGen -- Hierarchical LLM-based Verilog Generation for Scalable Chip Design - Paper uses hierarchical LLMs to generate complex hardware designs in Verilog by structuring tasks into smaller submodules, enhancing scalability and reducing errors in chip design.

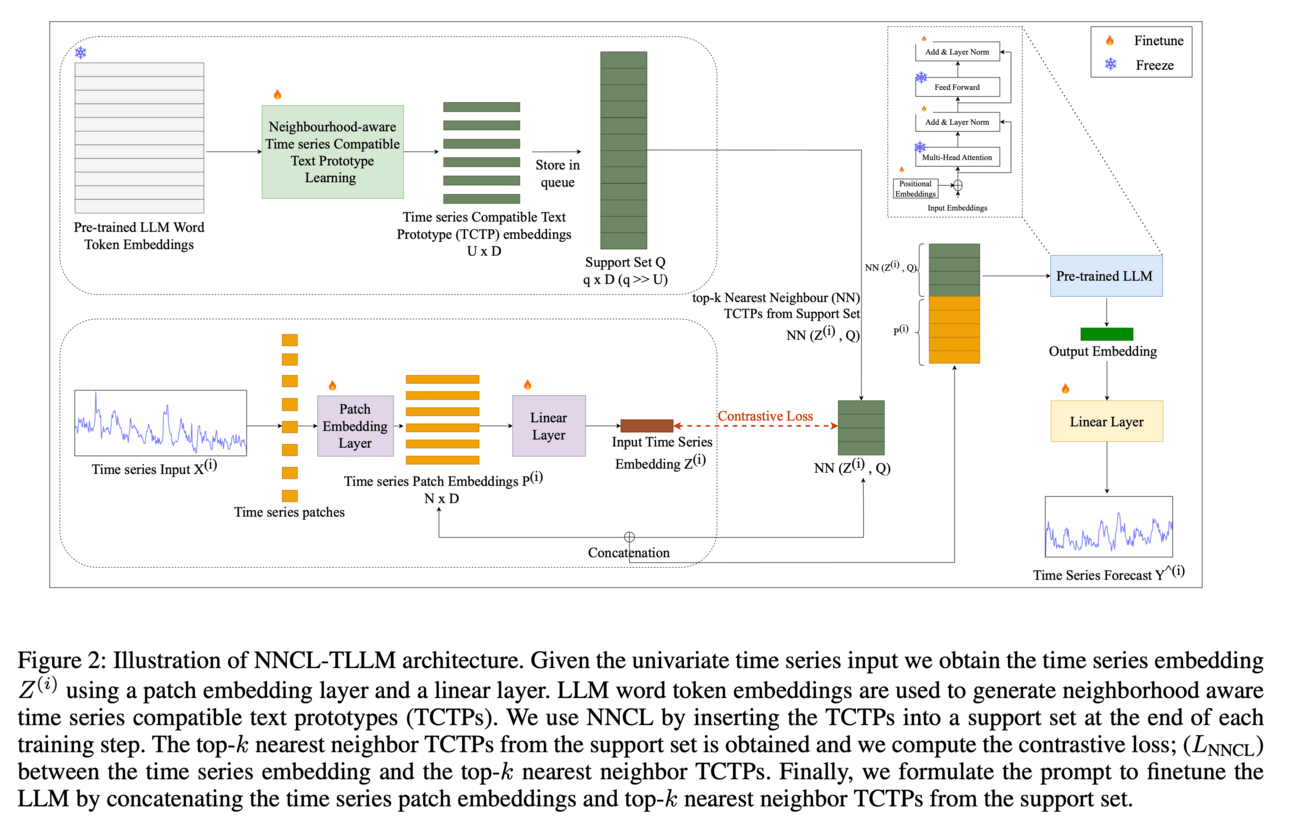

Rethinking Time Series Forecasting with LLMs via Nearest Neighbor Contrastive Learning - Paper adapts LLMs for time series forecasting using Nearest Neighbor Contrastive Learning to align textual embeddings with time series data, excelling in few-shot and long-term predictions.

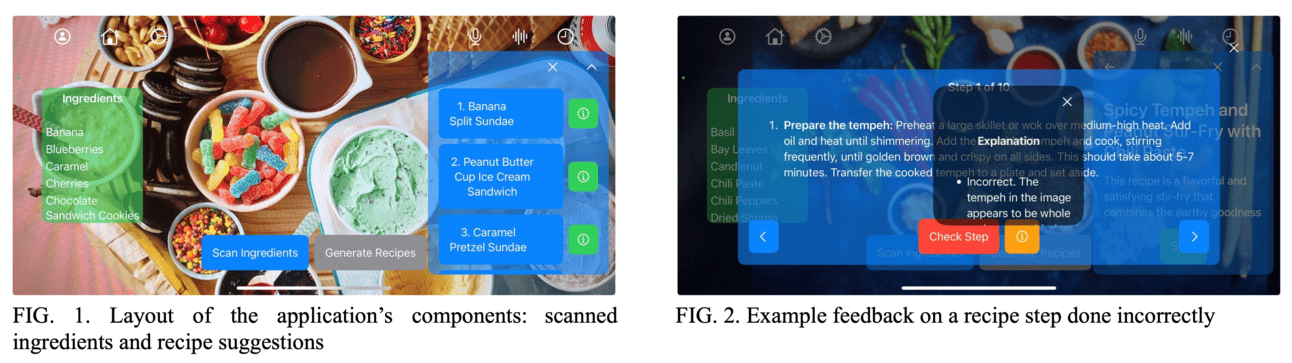

ARChef: An iOS-Based Augmented Reality Cooking Assistant Powered by Multimodal Gemini LLM - Paper combines LLMs with augmented reality to identify ingredients and generate personalized recipes through an iOS application, enhancing the cooking experience and accessibility.