🔬Core research improving LLMs!

University of Tokyo

Word Alignment as Preference for Machine Translation

💡Why?: The research paper addresses the problem of hallucination and omission in machine translation (MT), which is more pronounced when an LLM is used for MT, because LLMs themselves are susceptible to these phenomena.

💻How?: The research paper proposes to guide the LLM-based MT model to better word alignment. This is done by first studying the correlation between word alignment and hallucination and omission in MT. Then, the paper suggests utilizing word alignment as a preference signal to optimize the LLM-based model. This preference data is constructed by selecting chosen and rejected translations from multiple MT tools. The paper then uses direct preference optimization to optimize the LLM-based model towards the preference signal. To evaluate the performance of the models in mitigating hallucination and omission, the paper suggests selecting hard instances and using GPT-4 for direct evaluation.
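
As a rough illustration of the pipeline described above, the sketch below picks a chosen and a rejected translation by an alignment coverage score and then computes the standard DPO loss for that pair. The coverage score (fraction of source words aligned to at least one target word), the function names, and the toy alignments are assumptions made for illustration; the paper's actual aligner and scoring may differ. Source-side coverage mainly reflects omission, so a fuller version would also score target-side coverage to catch hallucination.

```python
import math

def coverage(num_source_words, alignment):
    """Fraction of source words aligned to at least one target word.

    `alignment` is a set of (source_index, target_index) pairs, as a word
    aligner might produce. This scoring rule is an illustrative assumption.
    """
    aligned_sources = {s for s, _ in alignment}
    return len(aligned_sources) / num_source_words

def build_preference_pair(num_source_words, candidates):
    """Pick (chosen, rejected) from candidates produced by several MT tools.

    `candidates` is a list of (translation, alignment) tuples.
    """
    scored = sorted(candidates, key=lambda c: coverage(num_source_words, c[1]))
    return scored[-1][0], scored[0][0]

def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one pair: -log(sigmoid(beta * log-ratio margin))."""
    margin = beta * ((policy_chosen_logp - ref_chosen_logp)
                     - (policy_rejected_logp - ref_rejected_logp))
    return math.log1p(math.exp(-margin))  # equals -log(sigmoid(margin))

# Toy example: a 4-word source sentence and two candidate translations.
candidates = [
    ("The cat sleeps on the sofa", {(0, 0), (1, 1), (2, 2), (3, 5)}),
    ("The cat sleeps", {(0, 0), (1, 1), (2, 2)}),  # last source word dropped
]
chosen, rejected = build_preference_pair(4, candidates)
loss = dpo_loss(-10.0, -12.0, -11.0, -11.0, beta=0.1)
```

The loss falls below log 2 once the policy assigns a higher relative log-probability to the chosen translation than the reference model does, which is the direction the preference signal pushes the model.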

🧪 LLMs evaluations

Zhejiang University, Angelalign Technology Inc.

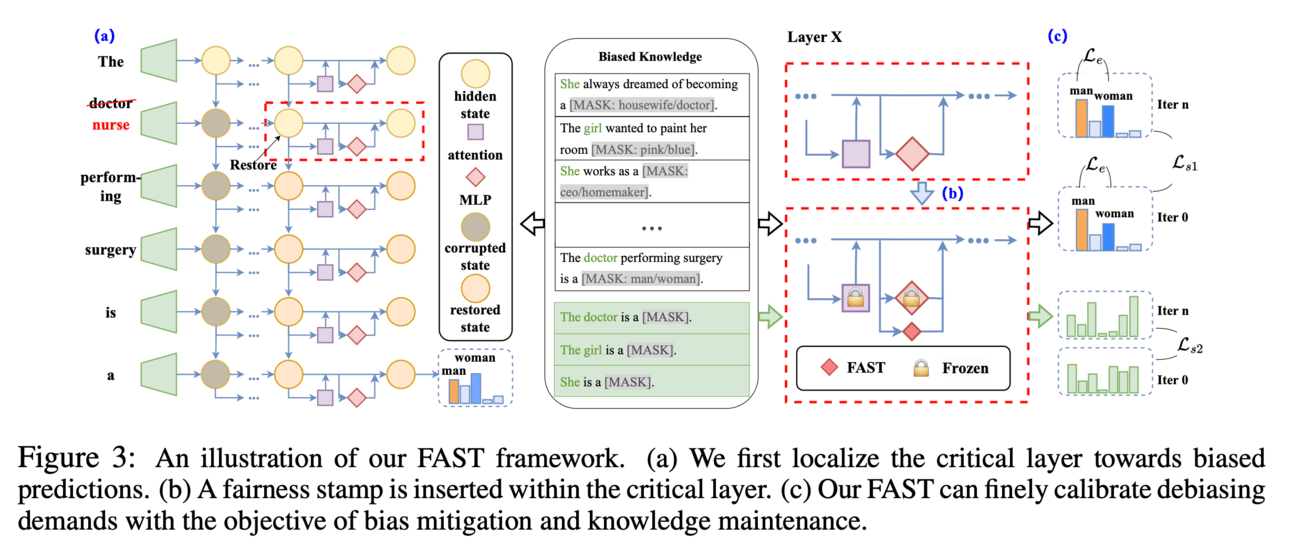

Large Language Model Bias Mitigation from the Perspective of Knowledge Editing

💡Why?: The research paper addresses the issue of bias in LLMs. Existing debiasing methods tend to focus on achieving parity across different social groups, but often overlook individual facts and modify existing knowledge. This can lead to unreasonable or undesired predictions and a lack of fairness in LLMs.

💻How?: The research paper proposes a new debiasing benchmark, called BiasKE, which evaluates bias mitigation performance using complementary metrics such as fairness, specificity, and generalization. It also introduces a novel debiasing method, called Fairness Stamp (FAST), which allows for editable fairness through fine-grained calibration on individual biased knowledge. FAST works by identifying and adjusting biased knowledge in the LLM, while preserving its overall capability and performance.

Carnegie Mellon University, University of Virginia, Allen Institute for AI

PolygloToxicityPrompts: Multilingual Evaluation of Neural Toxic Degeneration in Large Language Models

💡Why?: The research paper addresses the problem of ensuring the safety of LLMs in their global deployment by proposing a comprehensive and multilingual toxicity evaluation benchmark.

💻How?: The research paper proposes to solve this problem by introducing PolygloToxicityPrompts (PTP), a large-scale multilingual toxicity evaluation benchmark that includes 425K naturally occurring prompts spanning 17 languages. This benchmark is created by automatically scraping over 100M web-text documents to ensure coverage across languages with varying resources. The research then uses PTP to investigate the impact of model size, prompt language, and instruction and preference-tuning methods on toxicity by benchmarking over 60 LLMs. This provides insights into crucial shortcomings of LLM safeguarding and suggests areas for future research.

Bocconi University, University of Zurich

Beyond Flesch-Kincaid: Prompt-based Metrics Improve Difficulty Classification of Educational Texts

How can we measure LLMs for educational purposes, specifically in dialogue-based teaching? The proposed solution is to introduce a new set of prompt-based metrics for text difficulty, which take advantage of LLMs' general language understanding capabilities to capture more abstract and complex features than traditional static metrics can. These prompt-based metrics are created based on a user study and are used as inputs for LLMs to evaluate text adaptation to different education levels.

📊Results: The research paper shows that using Prompt-based metrics significantly improves text difficulty classification over Static metrics alone, demonstrating the promise of using LLMs for evaluating text adaptation in educational applications.
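
For context, the static baseline named in the title, the Flesch-Kincaid grade level, is a fixed formula over word, sentence, and syllable counts. The sketch below implements it with a crude vowel-group syllable counter; that counter and the function names are assumptions for illustration, and real readability tools use more careful syllable heuristics.

```python
import re

def count_syllables(word):
    """Approximate syllables as runs of vowels (a rough heuristic)."""
    groups = re.findall(r"[aeiouy]+", word.lower())
    return max(1, len(groups))

def flesch_kincaid_grade(text):
    """Flesch-Kincaid grade level:
    0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59
    """
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    syllables = sum(count_syllables(w) for w in words)
    return (0.39 * len(words) / len(sentences)
            + 11.8 * syllables / len(words)
            - 15.59)

easy = flesch_kincaid_grade("The cat sat.")
hard = flesch_kincaid_grade("Neuroplasticity facilitates adaptive reorganization.")
```

Because the formula only sees surface counts, it cannot tell whether a short sentence carries an abstract idea, which is the kind of gap the prompt-based metrics are meant to cover.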

🧯Let’s make LLMs safe!!

School of Computer Science and Technology, East China Normal University

A safety realignment framework via subspace-oriented model fusion for large language models

💡Why?: The research paper addresses the issue of current safeguard mechanisms for LLMs being susceptible to jailbreak attacks, making them fragile. It also highlights the risk of jeopardizing safety while fine-tuning LLMs on seemingly harmless data for downstream tasks.

💻How?: The research paper proposes a solution called safety realignment framework through subspace-oriented model fusion (SOMF). This approach involves disentangling task vectors from the weights of each fine-tuned model, identifying safety-related regions within these vectors using subspace masking techniques, and fusing the initial safely aligned LLM with all task vectors based on the identified safety subspace. This process aims to combine the safeguard capabilities of initially aligned models with the current fine-tuned model, thus creating a realigned model that preserves safety without compromising performance.
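
The three steps above can be pictured with a toy example on flat weight lists. This is only a sketch under stated assumptions: the binary `safety_mask` is given by hand here (in the paper it is identified by subspace masking), and the fusion rule, which keeps the aligned model's weights wherever the mask marks a safety-related coordinate and applies the task vector elsewhere, is one plausible reading rather than the paper's exact formulation. The function names are hypothetical.

```python
def task_vector(finetuned, aligned):
    """Task vector: what fine-tuning changed relative to the aligned model."""
    return [f - a for f, a in zip(finetuned, aligned)]

def realign(aligned, finetuned, safety_mask):
    """Fuse the aligned weights with the task vector outside the safety region.

    safety_mask[i] == 1 marks a coordinate assumed to be safety-related,
    so the fine-tuning update is dropped there and the aligned weight is kept.
    """
    tau = task_vector(finetuned, aligned)
    return [a + (1 - m) * t for a, t, m in zip(aligned, tau, safety_mask)]

# Toy weights: coordinates 1 and 3 are assumed to lie in the safety region.
aligned = [0.5, -1.0, 2.0, 0.0]
finetuned = [0.7, -0.4, 2.0, 1.0]
safety_mask = [0, 1, 0, 1]

realigned = realign(aligned, finetuned, safety_mask)
```

With several fine-tuned models, the same idea would apply to each task vector before they are combined with the aligned weights.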

Carnegie Mellon University, Shanghai AI Laboratory, Mohamed bin Zayed University of AI

Efficient LLM Jailbreak via Adaptive Dense-to-sparse Constrained Optimization

💡Why?: Jailbreaking attacks on LLMs can generate harmful content, highlighting the vulnerability of LLMs to malicious manipulation.

💻How?: The research paper proposes a novel token-level attack method called Adaptive Dense-to-Sparse Constrained Optimization (ADC). This method relaxes the discrete jailbreak optimization into a continuous optimization and progressively increases the sparsity of the optimizing vectors. By bridging the gap between discrete and continuous space optimization, ADC effectively jailbreaks several open-source LLMs.

📊Results: ADC achieved state-of-the-art attack success rates on seven out of eight LLMs in the HarmBench dataset, demonstrating its effectiveness and efficiency compared to existing token-level methods. The researchers also plan to make the code for ADC publicly available, allowing for further work in the field of LLM security.

🌈 Creative ways to use LLMs!!

Tsinghua University

Towards Next-Generation Steganalysis: LLMs Unleash the Power of Detecting Steganography [GitHub]

The research paper proposes to reduce abuse of linguistic steganography technology by using LLMs to detect carrier texts containing steganographic messages. This is achieved by incorporating human-like text processing abilities of LLMs and modeling the task as a generative paradigm instead of a traditional classification paradigm.

This research paper proposes using LLMs to transform technical customer service (TCS) by automating cognitive tasks. It assesses the feasibility of automating cognitive tasks in TCS with LLMs, using real-world technical incident data from a Swiss telecommunications operator. The study found that lower-level cognitive tasks such as translation, summarization, and content generation can be effectively automated with LLMs like GPT-4, while higher-level tasks such as reasoning require more advanced technological approaches such as Retrieval-Augmented Generation (RAG) or fine-tuning. The study also highlights the significance of data ecosystems in enabling more complex cognitive tasks by fostering data sharing among the various actors involved.

The University of Sheffield, The University of Exeter

Sign of the Times: Evaluating the use of Large Language Models for Idiomaticity Detection

💡Why?: How do LLMs compare with encoder-only models fine-tuned for idiomaticity tasks?

💻How?: The research paper proposes to answer this question by analyzing the performance of a range of LLMs, including both local and software-as-a-service models, on three idiomaticity datasets. The models are evaluated on SemEval 2022 Task 2a, FLUTE, and MAGPIE and the results are compared to fine-tuned task-specific models. The analysis is also extended to consider the impact of model scale and the potential use of prompting approaches to improve performance.

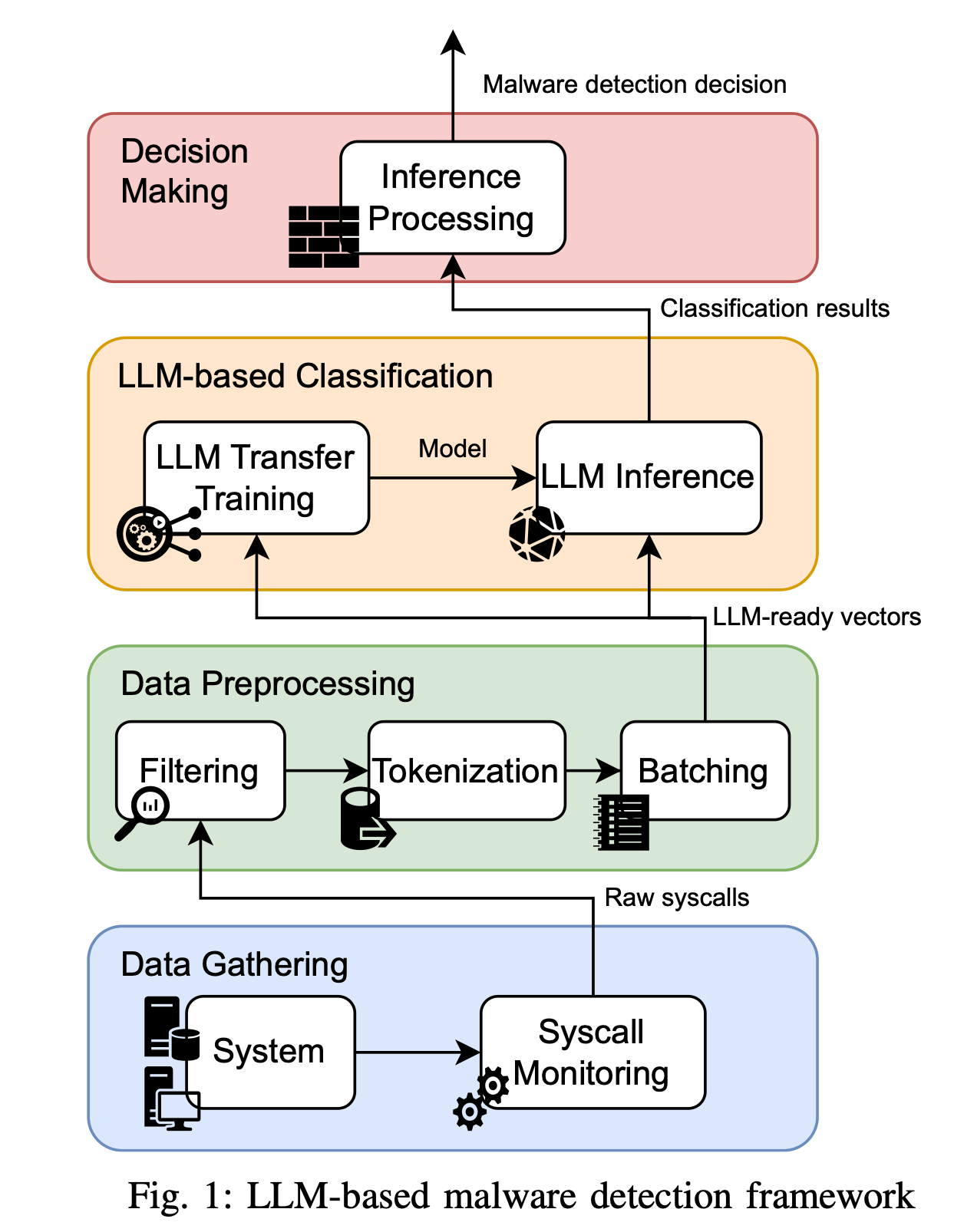

University of Murcia, University of Zurich

Transfer Learning in Pre-Trained Large Language Models for Malware Detection Based on System Calls

💡Why?: The research paper addresses the issue of protecting military devices from sophisticated cyber attacks, specifically focusing on communication and battlefield management systems.

💻How?: The paper proposes to use machine learning and deep learning techniques to detect vulnerabilities in these devices. It works by integrating LLMs with system call analysis, which allows for a better understanding of the context and intent behind complex attacks. The framework uses transfer learning to adapt pre-trained LLMs for malware detection, and by retraining them on a dataset of benign and malicious system calls, the models are able to detect signs of malware activity.

University College London, University of Cambridge, The Alan Turing Institute

Matching domain experts by training from scratch on domain knowledge

Are LLMs that score highly on benchmarks showing emergent reasoning abilities, or are they just learning statistical patterns in the specific scientific literature?

💻How?: The research paper proposes to solve this problem by training a relatively small 124M-parameter GPT-2 model on 1.3 billion tokens of domain-specific knowledge. This smaller model is trained on the neuroscience literature and is compared to larger LLMs trained on trillions of tokens. The results indicate that even though the small model is significantly smaller, it achieves expert-level performance in predicting neuroscience results. This is achieved through domain-specific, auto-regressive training approaches, where the model is trained on the specific language and patterns of neuroscience literature.