🔑 Highlights from today’s newsletter

The CAP principle for LLMs!

Uni-MoE: A Mixture of Experts for MLLMs

SlimCode: Removal of lower-impact tokens

EPIC: Framework automatically parallelizes LLM interactions without user intervention

LinkedIn improves content recommendation model

Create a 360° avatar with just a text prompt!

🔬Core research improving LLMs!

💡Why?: The research paper addresses the intricate trade-off between cost-efficiency and accuracy in large language model (LLM) serving, which is magnified by the growing need for longer contextual understanding at a massive scale.

💻How?: The research paper proposes a CAP principle for LLM serving, inspired by the CAP theorem in databases. This principle suggests that any optimization can improve at most two of the three conflicting goals: serving context length, accuracy, and performance. The paper categorizes existing works within this framework and highlights the importance of defining and measuring user-perceived metrics. The CAP principle serves as a guiding principle for designers to navigate the trade-offs in serving models.

💡Why?: The research paper addresses the issue of computational costs in scaling Multimodal Large Language Models (MLLMs), which hinders their performance.

💻How?: The research paper proposes a solution called Uni-MoE, which is a unified MLLM with a Mixture of Experts (MoE) architecture. This architecture consists of modality-specific encoders with connectors for a unified multimodal representation. It also uses a sparse MoE architecture for efficient training and inference through modality-level data parallelism and expert-level model parallelism. To further improve multi-expert collaboration and generalization, a progressive training strategy is implemented, which involves cross-modality alignment, training modality-specific experts with cross-modality instruction data, and tuning the Uni-MoE framework using Low-Rank Adaptation (LoRA) on mixed multimodal instruction data.
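To make the "sparse" part of a sparse MoE concrete, here is a minimal sketch of top-k expert routing in plain Python. It is not Uni-MoE's implementation: the toy experts, the hard-coded `router_scores` (a real router is learned), and the function names are all assumptions made for illustration.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def sparse_moe(x, experts, router_scores, top_k=2):
    """Run only the top_k highest-scoring experts on x and mix their outputs."""
    # Indices of the top_k router scores (ties keep their original order).
    top = sorted(range(len(experts)),
                 key=lambda i: router_scores[i], reverse=True)[:top_k]
    # Gate weights are renormalised over the selected experts only.
    gates = softmax([router_scores[i] for i in top])
    # Experts outside `top` are never called, which is where the savings come from.
    output = sum(g * experts[i](x) for g, i in zip(gates, top))
    return output, top

# Hypothetical toy experts standing in for expert feed-forward networks.
experts = [lambda x: x + 1.0, lambda x: 2.0 * x, lambda x: -x, lambda x: x * x]
router_scores = [0.1, 2.0, -1.0, 2.0]  # assumed; normally produced by a learned router

y, chosen = sparse_moe(3.0, experts, router_scores, top_k=2)
print(chosen, y)
```

With four experts and `top_k=2`, only half of the expert computation runs per input, while the gate weights still let the selected experts share the output.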

📊Results: The research paper reports significant improvements in reducing performance bias, improving multi-expert collaboration and generalization, and handling mixed multimodal datasets.

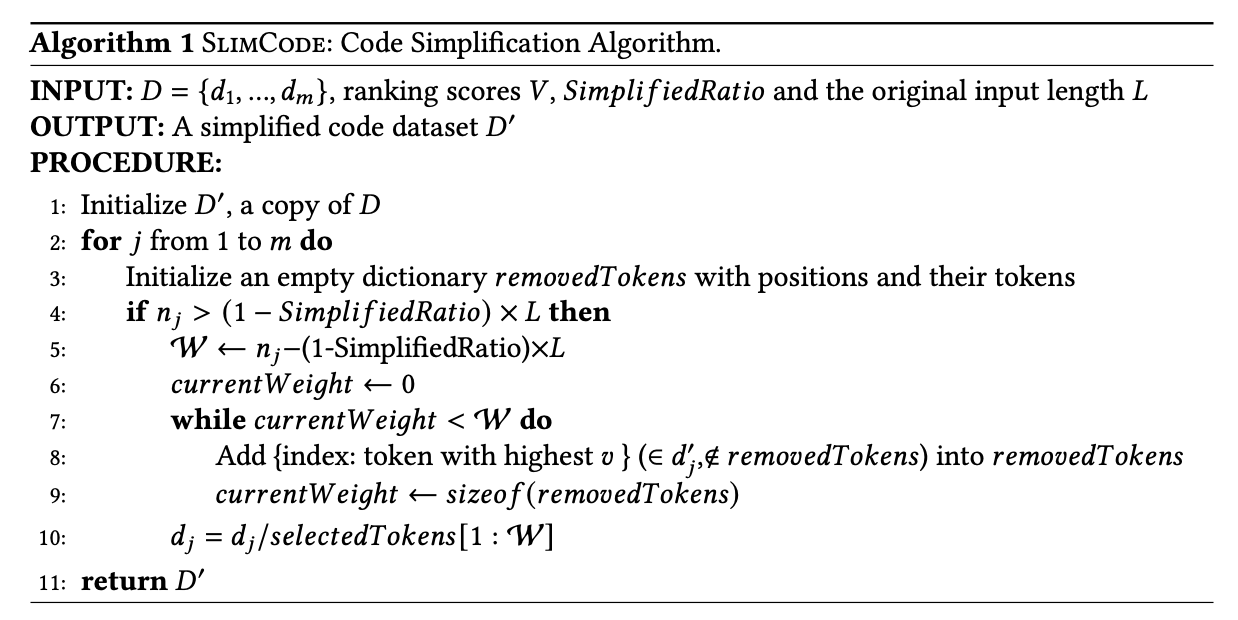

💡Why?: The research paper addresses the issue of heavy computational complexity in code-oriented LLMs and the need to simplify input programs for more efficient use.

💻How?: The research paper proposes a solution called SlimCode, a model-agnostic code simplification approach that relies on the nature of the input code tokens. It categorizes the tokens and removes those with lower impact. The approach applies to various LLMs and tasks, including code search and summarization, and can also be combined with prompt engineering and interactive in-context learning.
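As a rough illustration of category-based token removal, the sketch below tokenizes a snippet, assigns each token a category, and drops the lowest-ranked categories first until a token budget is met. The categories, the `REMOVAL_ORDER` ranking, and the `simplify` function are assumptions for this example; the newsletter does not spell out SlimCode's actual categories or ranking.

```python
import re

KEYWORDS = {"def", "return", "if", "else", "for", "while", "in"}

# Hypothetical ranking: categories listed first are treated as lower impact
# and are removed first.
REMOVAL_ORDER = ["symbol", "literal", "keyword", "identifier"]

def categorize(token):
    """Assign a coarse category to a single token."""
    if token in KEYWORDS:
        return "keyword"
    if re.fullmatch(r"[A-Za-z_]\w*", token):
        return "identifier"
    if re.fullmatch(r"\d+", token):
        return "literal"
    return "symbol"

def simplify(code, budget):
    """Drop tokens, lowest-impact category first, until at most `budget` remain."""
    tokens = re.findall(r"[A-Za-z_]\w*|\d+|\S", code)
    keep = [True] * len(tokens)
    remaining = len(tokens)
    for category in REMOVAL_ORDER:
        for i, tok in enumerate(tokens):
            if remaining <= budget:
                break
            if keep[i] and categorize(tok) == category:
                keep[i] = False
                remaining -= 1
    return [t for t, k in zip(tokens, keep) if k]

print(simplify("def add(a, b): return a + b", budget=7))
```

Because the rule only looks at the tokens themselves, the same pre-processing step can sit in front of any model, which is the sense in which such an approach is model-agnostic.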

📊Results: The research paper reports a 9.46% improvement in MRR and a 5.15% improvement in BLEU score for code search and summarization tasks, while running 133 times faster than the state-of-the-art approach. Additionally, SlimCode can reduce the cost of invoking GPT-4 by up to 24% per API query while still producing results comparable to those obtained with the original code.

💡Why?: The research paper addresses the critical need for safety in LLMs. Specifically, it aims to address the issues of bias and toxicity in LLM generations, as these models often risk losing contextual meaning while mitigating bias and toxicity. This is a significant problem as it can lead to underrepresentation or negative portrayals across various demographics, as well as inappropriate linguistic mentions and biased content in social media.

💻How?: The research paper proposes a framework called MBIAS, which stands for "Model Bias Intervention for Accuracy and Safety". This framework is fine-tuned on a custom dataset specifically designed for safety interventions. MBIAS aims to reduce bias and toxicity in LLM generations while still retaining contextual accuracy. It works by incorporating safety interventions during the fine-tuning process, which involves training the model on the custom dataset. This allows the model to learn how to generate safe outputs while also retaining key information.

📊Results: The research paper demonstrates that MBIAS is effective in reducing bias and toxicity in LLM generations. It reports a reduction of over 30% in overall bias and toxicity while still retaining key information.

⚙️LLMOps & GPU level optimization

💡Why?: LLMs suffer from hallucinating facts and struggle with arithmetic. Recent research papers use sophisticated decoding techniques to address these problems.

However, performant decoding, particularly for sophisticated techniques, relies crucially on parallelization and batching, which are difficult for developers. This paper observes two gaps in current research:

Existing approaches are high-level domain-specific languages for gluing expensive black-box calls, but are not general or compositional

LLM programs are essentially pure (all effects commute)

💻How?: The research paper proposes a novel, general-purpose lambda calculus called EPIC that automatically parallelizes a wide range of LLM interactions without user intervention. This is achieved through an "opportunistic" evaluation strategy that allows independent parts of a program to be executed in parallel, dispatching external calls as eagerly as possible. To maintain simplicity and uniformity, control-flow and looping constructs are implemented in the language itself. EPIC is embedded in and interoperates closely with Python.
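The idea of dispatching independent calls eagerly can be approximated by hand with Python's `asyncio`. This is not EPIC, which does the parallelization automatically; it is a manual sketch of the same scheduling behaviour, and `fake_llm` is a hypothetical stand-in for an external LLM call.

```python
import asyncio

async def fake_llm(prompt, log):
    """Hypothetical stand-in for an external LLM call (no network access)."""
    log.append(("start", prompt))
    await asyncio.sleep(0.01)  # simulated latency
    log.append(("end", prompt))
    return prompt.upper()

async def program(log):
    # These two calls do not depend on each other, so both are dispatched
    # immediately instead of one after the other.
    a = asyncio.create_task(fake_llm("summarize", log))
    b = asyncio.create_task(fake_llm("translate", log))
    # This call needs both results, so it is issued only once they are ready.
    return await fake_llm(f"{await a} + {await b}", log)

log = []
result = asyncio.run(program(log))
print(result)
print(log[:2])  # both independent calls start before either one finishes
```

Here the programmer has to spot the independence and create the tasks manually. The paper's claim is that the evaluation strategy finds this parallelism itself, so the program can be written as straightforward sequential code.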

📊Results: The research paper provides experimental results that show a 1.5x to 4.8x speedup over sequential evaluation, while still allowing practitioners to write straightforward and composable programs without manual parallelism or batching.

🧪 LLMs evaluations

Can Public LLMs be used for Self-Diagnosis of Medical Conditions? - This research paper assesses the efficiency of LLMs in self-diagnosis of medical conditions. It creates a prompt-engineered dataset of 10,000 samples and tests two LLMs, GPT-4.0 and Gemini, on the task of self-diagnosis, comparing the two models and recording their accuracies.

Large Language Models Lack Understanding of Character Composition of Words - To what extent do LLMs understand the fundamental building blocks of language, namely characters? This paper analyzes LLMs' ability to understand the composition of characters within words by comparing their performance to that of humans, who are considered to have perfect understanding of characters in text. The paper also discusses potential areas for future research in this field.

🌈 Creative ways to use LLMs!!

💡Why?: Code synthesis requires a deep understanding of complex natural language problem descriptions, the generation of code instructions for complex algorithms and data structures, and the successful execution of comprehensive unit tests.

💻How?: The research paper proposes a new approach to code generation tasks called MapCoder, which leverages multi-agent prompting. This approach replicates the full cycle of program synthesis as observed in human developers. MapCoder consists of four large language model agents that are specifically designed to emulate the stages of this cycle: recalling relevant examples, planning, code generation, and debugging. These agents work together to generate high-quality code solutions.
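The four stages can be pictured as a simple pipeline with a debugging loop at the end. The sketch below only illustrates that shape: the prompts, `run_pipeline`, `stub_llm`, and `run_tests` are hypothetical, and MapCoder's real prompts and agent interactions are not described in this newsletter.

```python
def run_pipeline(problem, llm, run_tests, max_rounds=3):
    """Chain four stages: recall examples, plan, generate code, debug."""
    examples = llm(f"Recall problems similar to: {problem}")
    plan = llm(f"Plan a solution to: {problem}\nExamples: {examples}")
    code = llm(f"Write code for this plan: {plan}")
    for _ in range(max_rounds):
        ok, feedback = run_tests(code)
        if ok:
            break
        # Debugging stage: feed the failing code and test feedback back in.
        code = llm(f"Fix this code: {code}\nFeedback: {feedback}")
    return code

def stub_llm(prompt):
    """Canned responses standing in for a real model (assumption)."""
    if prompt.startswith("Fix"):
        return "def add(a, b):\n    return a + b"
    if prompt.startswith("Write"):
        return "def add(a, b):\n    return a - b"  # deliberately buggy first draft
    return "(stub text)"

def run_tests(code):
    """Execute the candidate and check it against one sample test."""
    scope = {}
    exec(code, scope)
    ok = scope["add"](2, 3) == 5
    feedback = "" if ok else "add(2, 3) should be 5"
    return ok, feedback

final_code = run_pipeline("add two numbers", stub_llm, run_tests)
print(final_code)
```

In this toy run the first draft fails the sample test, the debugging stage receives the feedback, and the second draft passes, so the loop exits after two rounds.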

📊Results: MapCoder showcases remarkable code generation capabilities and achieves new state-of-the-art results on the HumanEval benchmark.

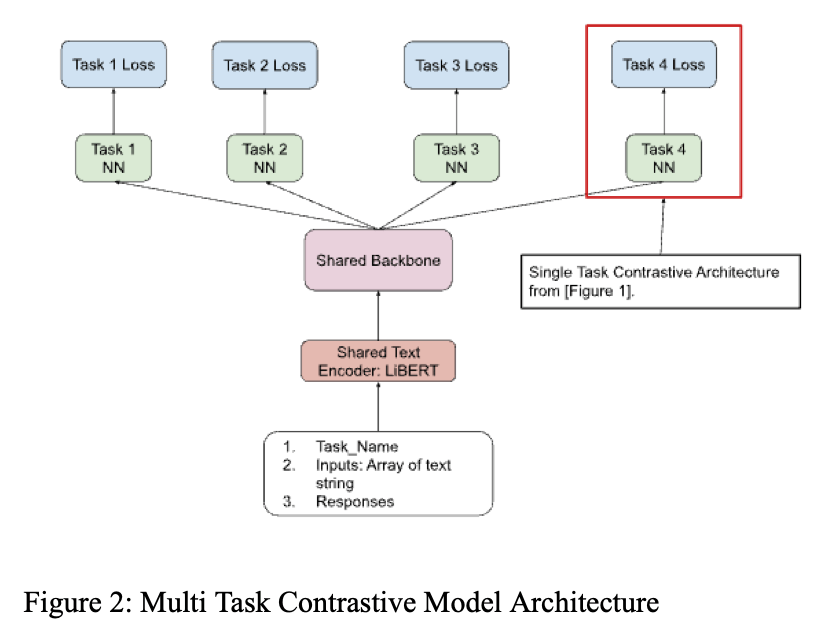

💡Why?: The LinkedIn team is trying to improve the semantic understanding capabilities of LinkedIn's core content recommendation models.

💻How?: The team leverages multi-task learning, specifically by fine-tuning a pre-trained, transformer-based LLM using multi-task contrastive learning with data from a diverse set of semantic labeling tasks. This method has shown promise in various domains and here results in positive transfer, leading to superior performance across all tasks compared to training independently on each. The specialized content embeddings produced by the model also outperform generalized embeddings offered by OpenAI on the LinkedIn dataset and tasks.
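For readers unfamiliar with contrastive objectives, the sketch below shows one common form, an InfoNCE-style loss, averaged over several tasks. The choice of InfoNCE, the temperature value, the task names, and the toy 2-D embeddings are all assumptions; the newsletter does not say which loss LinkedIn used.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def info_nce(anchor, positive, negatives, temperature=0.1):
    """InfoNCE-style loss: low when the anchor is closest to its positive."""
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, n) / temperature for n in negatives]
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_sum_exp - logits[0]

def multi_task_loss(batches):
    """Average the contrastive loss over tasks (one example per task here)."""
    return sum(info_nce(*b) for b in batches.values()) / len(batches)

# Hypothetical tasks, each with (anchor, positive, negatives) embeddings.
batches = {
    "topic_match": ([1.0, 0.0], [1.0, 0.0], [[0.0, 1.0]]),
    "intent_match": ([0.0, 1.0], [0.0, 1.0], [[1.0, 0.0]]),
}
aligned = multi_task_loss(batches)
misaligned = info_nce([1.0, 0.0], [0.0, 1.0], [[1.0, 0.0]])
print(aligned, misaligned)
```

Averaging one shared loss over several labeling tasks is what lets a single embedding model benefit from all of them at once, which is the positive transfer the summary refers to.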

📊Results: The research paper does not provide specific performance improvement numbers, but it mentions that the model outperforms the baseline on zero-shot learning and offers improved multilingual support, highlighting its potential for broader application.

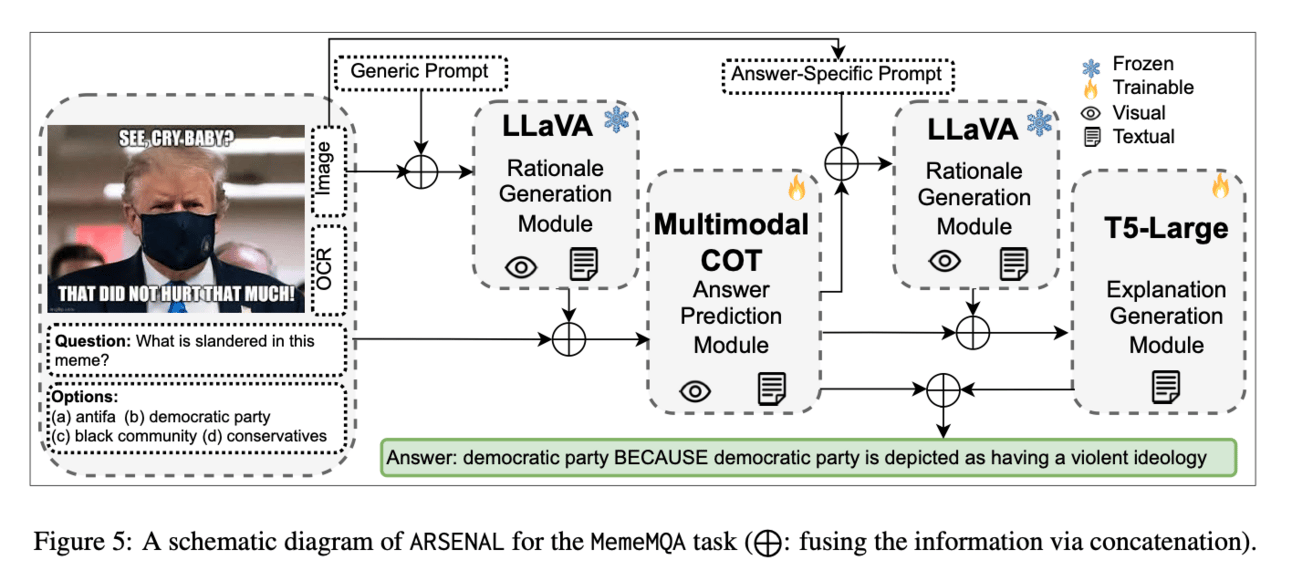

💡Why?: The authors see an urgent need to decode image-focused memes. The paper sets up a benchmark and proposes a framework that answers specific questions about a meme in order to understand its true context.

💻How?: The research paper proposes a multimodal question-answering framework called MemeMQA, which aims to accurately answer structured questions about memes while providing coherent explanations. It works by leveraging the reasoning capabilities of LLMs (large language models) and using a two-stage framework called ARSENAL.

📊Results: The research paper reports a significant improvement over competitive baselines, with an 18% increase in answer prediction accuracy and better text generation capabilities.

🤖LLMs for robotics & VLLMs

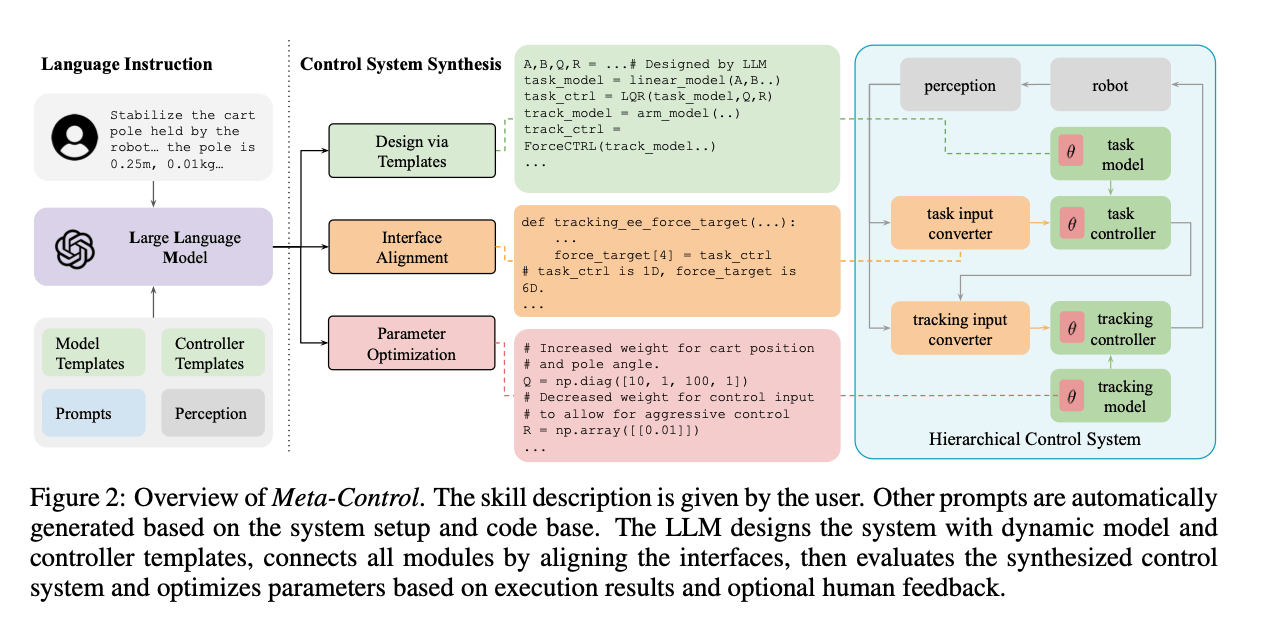

💡Why?: In real world manipulation tasks, diverse and conflicting requirements make it challenging to develop a universal robotic foundation model.

💻How?: The research paper proposes Meta-Control, an automatic control synthesis approach that utilizes a generic hierarchical control framework to address a wide range of heterogeneous tasks. It works by decomposing the state space into an abstract task space and a concrete tracking space, and then leveraging LLMs' common sense and control knowledge to design these spaces using pre-defined abstract templates. This approach allows for rigorous analysis, efficient parameter tuning, and reliable execution, as well as formal guarantees such as safety and stability.

📊Results: The research paper validates its method through real-world scenarios and simulations, and achieves performance improvements in terms of task customization and formal guarantees such as safety and stability.

** Videos and additional results can be found on the paper page.

Samples. Check more on their project page!

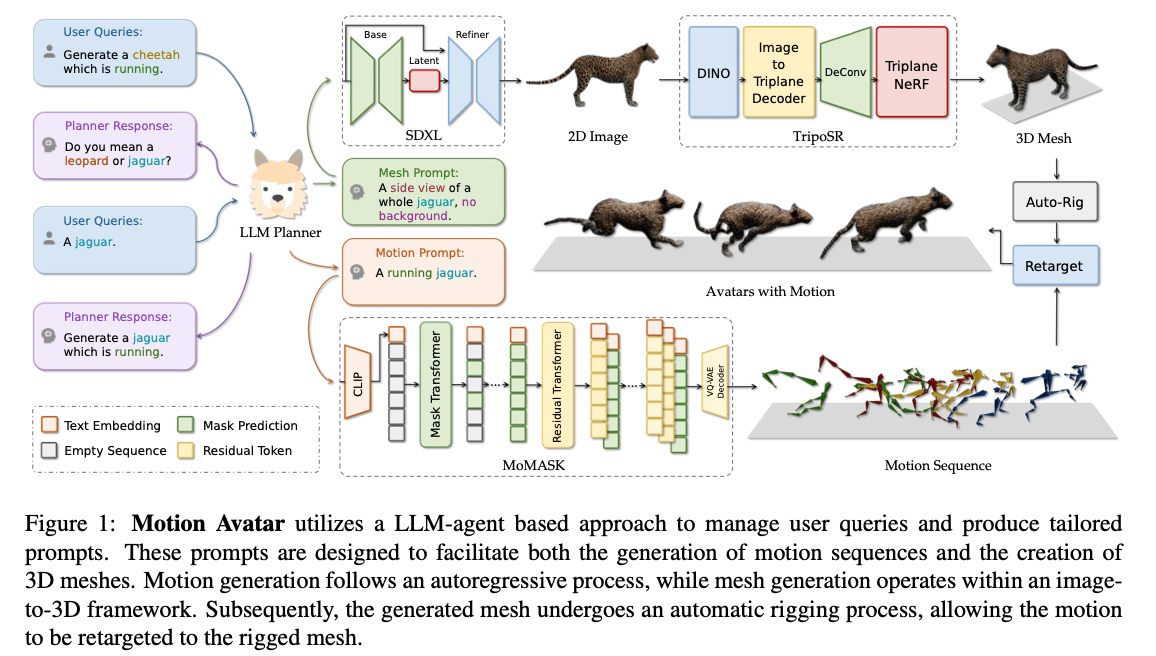

💡Why?: The research paper addresses the difficulty of integrating 3D avatar mesh and motion generation, and of extending these techniques to animals, where training data and methods are inadequate.

💻How?: The research paper proposes a novel agent-based approach called Motion Avatar, which utilizes text queries to automatically generate high-quality customizable human and animal avatars with motions. This is achieved through an LLM planner that coordinates both motion and avatar generation, transforming it into a customizable Q&A fashion. This allows for a more efficient and seamless process of generating dynamic 3D characters.

📊Results: The research paper achieved significant progress in dynamic 3D character generation and presented a valuable resource for the community in the form of an animal motion dataset named Zoo-300K and its building pipeline ZooGen. These contributions greatly advance the field of avatar and motion generation, bridging the gaps and providing a framework for further development.