Core research improving LLM performance

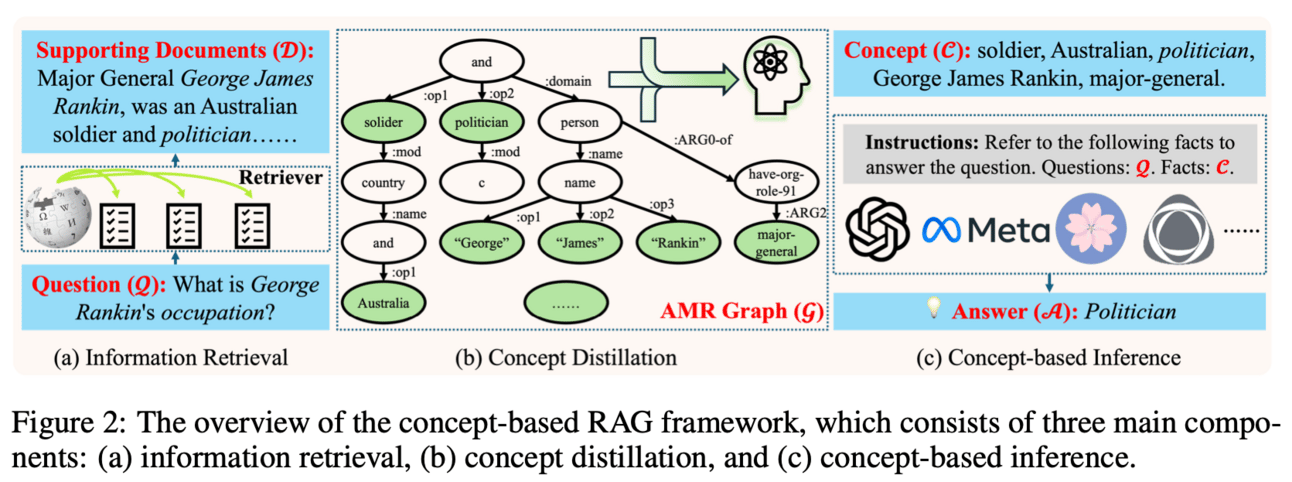

🤔 Why?: The research paper addresses over-reliance on potentially flawed parametric knowledge in LLMs, which can lead to hallucinations and inaccuracies on long-tail, domain-specific queries. This limitation can degrade the information acquisition process.

💻 How?: To solve this problem, the paper proposes a concept-based Retrieval Augmented Generation (RAG) framework built around an Abstract Meaning Representation (AMR)-based concept distillation algorithm. The algorithm compresses cluttered raw retrieved documents into a compact set of crucial concepts, distilled from the informative nodes of the AMR graph using reliable linguistic features. These concepts explicitly constrain the LLM to focus only on vital information during inference, avoiding noisy and irrelevant content.
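To make "distilling concepts from AMR nodes" concrete, here is a toy sketch. It assumes an external AMR parser has already produced the graph (hand-written below as hypothetical triples), and the filtering heuristic (dropping PropBank sense suffixes and a small, made-up set of generic concepts) is an illustrative assumption, not the paper's algorithm. The prompt format in `build_prompt` is likewise hypothetical.

```python
import re
from typing import Dict, List, Tuple

# (source variable, relation, target variable or quoted literal), as an AMR parser might emit.
Triple = Tuple[str, str, str]

# Illustrative assumption: structural or generic AMR concepts carry little retrieval signal.
UNINFORMATIVE = {"and", "or", "name", "thing", "person", "multi-sentence"}

def distill_concepts(concepts: Dict[str, str], triples: List[Triple]) -> List[str]:
    """Return deduplicated informative concepts in order of first mention."""
    kept: List[str] = []

    def add(term: str) -> None:
        if term not in kept:
            kept.append(term)

    for src, rel, tgt in triples:
        for node in (src, tgt):
            if node in concepts:
                # Drop the PropBank sense suffix, e.g. "win-01" -> "win".
                label = re.sub(r"-\d+$", "", concepts[node])
                if label not in UNINFORMATIVE:
                    add(label)
            elif rel.startswith(":op"):
                # Literal name parts such as "Marie" hang off :opN edges.
                add(node.strip('"'))
    return kept

def build_prompt(question: str, concepts: List[str]) -> str:
    """Hypothetical prompt format: distilled concepts replace the raw documents."""
    return f"Key concepts: {', '.join(concepts)}\nQuestion: {question}\nAnswer:"

# Hand-written AMR for "Marie Curie won the Nobel Prize."
concepts = {"w": "win-01", "p": "person", "n": "name", "a": "award", "n2": "name"}
triples = [
    ("w", ":ARG0", "p"), ("p", ":name", "n"),
    ("n", ":op1", '"Marie"'), ("n", ":op2", '"Curie"'),
    ("w", ":ARG1", "a"), ("a", ":name", "n2"),
    ("n2", ":op1", '"Nobel"'), ("n2", ":op2", '"Prize"'),
]
print(build_prompt("Who won the Nobel Prize?", distill_concepts(concepts, triples)))
```

The point of the design is that the LLM sees a handful of concept tokens instead of whole retrieved passages, so irrelevant sentences never reach the context window.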

Memorization in LLMs can lead to privacy and copyright issues.

The paper proposes treating each textual sequence to be forgotten differently, based on its level of memorization within the LLM. This is done through two new unlearning methods built on Gradient Ascent and Task Arithmetic. These methods aim to properly handle the side effects of memorized data while maintaining the model's utility.

**Please read the paper for details on these two methods; they are not covered here because of space constraints.**
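For orientation only, the generic forms of the two base techniques, gradient ascent and task arithmetic, can be sketched on a toy parameter vector. This is not the paper's memorization-aware method; the weights, the single forget example, and all function names are hypothetical.

```python
from typing import List, Tuple

Vector = List[float]

def task_vector(finetuned: Vector, base: Vector) -> Vector:
    """Task vector: fine-tuned weights minus base weights."""
    return [f - b for f, b in zip(finetuned, base)]

def negate_task(base: Vector, tau: Vector, alpha: float = 1.0) -> Vector:
    """Task-arithmetic unlearning: move the model against the forget-set task vector."""
    return [b - alpha * t for b, t in zip(base, tau)]

def squared_loss_and_grad(w: Vector, x: Vector, y: float) -> Tuple[float, Vector]:
    """Loss (w.x - y)^2 on one forget example and its gradient with respect to w."""
    err = sum(wi * xi for wi, xi in zip(w, x)) - y
    return err * err, [2 * err * xi for xi in x]

def gradient_ascent_step(w: Vector, grad: Vector, lr: float) -> Vector:
    """Gradient *ascent*: step along +grad to increase the loss on the forget data."""
    return [wi + lr * gi for wi, gi in zip(w, grad)]

# Toy parameters (hypothetical numbers).
base = [0.5, -1.0]
finetuned_on_forget = [0.8, -0.6]  # base model further fine-tuned on the forget set
unlearned = negate_task(base, task_vector(finetuned_on_forget, base))

x, y = [1.0, 1.0], 0.0
loss_before, grad = squared_loss_and_grad(base, x, y)
loss_after, _ = squared_loss_and_grad(gradient_ascent_step(base, grad, lr=0.1), x, y)
```

Both techniques push the model away from the forget data; the paper's contribution is deciding how hard to push for each sequence based on how strongly it is memorized.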

Learning from Students: Applying t-Distributions to Explore Accurate and Efficient Formats for LLMs 🔥🔥

🤔 Why?: LLMs struggle to meet strict latency and power budgets because of their large computational requirements.

💻 How?: The paper proposes converting models to a new, theoretically optimal format called Student Float (SF4). The format is derived from an analysis of the weight and activation distributions of 30 LLM networks and consistently improves model accuracy over traditional formats such as Normal Float (NF4). The paper also proposes two variants of supernormal support that raise model accuracy in the E2M1 format by optimizing the format's support range based on the t-distribution of LLM weights and activations.
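A rough sketch of the idea, assuming SF4 is built the way NF4-style lookup tables usually are (from distribution quantiles), but with Student's t in place of the normal. The degrees of freedom `nu=5`, the `offset=0.97`, and the 8-positive / 7-negative / exact-zero layout are illustrative assumptions, not the paper's exact construction. The t quantiles are computed with stdlib-only numerics (Simpson integration plus bisection) to keep the block self-contained.

```python
import math
from typing import List, Tuple

def t_pdf(x: float, nu: float) -> float:
    """Density of Student's t-distribution with nu degrees of freedom."""
    c = math.gamma((nu + 1) / 2) / (math.sqrt(nu * math.pi) * math.gamma(nu / 2))
    return c * (1 + x * x / nu) ** (-(nu + 1) / 2)

def t_cdf(x: float, nu: float, steps: int = 400) -> float:
    """CDF via symmetry and Simpson's rule on [0, |x|] (steps must be even)."""
    b = abs(x)
    h = b / steps
    s = t_pdf(0.0, nu) + t_pdf(b, nu)
    for i in range(1, steps):
        s += (4 if i % 2 else 2) * t_pdf(i * h, nu)
    area = s * h / 3
    return 0.5 + area if x >= 0 else 0.5 - area

def t_ppf(p: float, nu: float, iters: int = 50) -> float:
    """Quantile function by bisection on the monotone CDF."""
    lo, hi = -50.0, 50.0
    for _ in range(iters):
        mid = (lo + hi) / 2
        if t_cdf(mid, nu) < p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

def student_float_codebook(nu: float = 5.0, offset: float = 0.97) -> List[float]:
    """16-level table from t quantiles: 8 positive, 7 negative, exact zero, scaled to [-1, 1]."""
    def probs(n: int) -> List[float]:
        # n evenly spaced probabilities from `offset` down to 0.5, dropping 0.5 itself.
        return [offset - i * (offset - 0.5) / (n - 1) for i in range(n - 1)]

    values = [t_ppf(p, nu) for p in probs(9)] + [-t_ppf(p, nu) for p in probs(8)] + [0.0]
    scale = max(abs(v) for v in values)
    return sorted(v / scale for v in values)

def quantize(weights: List[float], codebook: List[float]) -> Tuple[List[int], float]:
    """Absmax-scale a block, then map each weight to its nearest 4-bit code index."""
    absmax = max(abs(w) for w in weights) or 1.0
    codes = [min(range(len(codebook)), key=lambda i: abs(w / absmax - codebook[i])) for w in weights]
    return codes, absmax

def dequantize(codes: List[int], absmax: float, codebook: List[float]) -> List[float]:
    return [codebook[i] * absmax for i in codes]

codebook = student_float_codebook()
codes, absmax = quantize([0.0, 0.3, -1.1, 2.0], codebook)
restored = dequantize(codes, absmax, codebook)
```

Swapping the distribution is the only change relative to a normal-quantile table: a heavier-tailed t places the 16 levels differently, which is the property the paper exploits.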

📊 Results:

The research paper achieves a 0.76% increase in average accuracy on LLaMA2-7B across tasks using the SF4 format. Additionally, the proposed supernormal support variants for E2M1 also improve the accuracy of Phi-2 by up to 2.19% with only 1.22% area overhead. These improvements enable more LLM-based applications to be run at four bits, further reducing computational requirements and improving efficiency.

🤔 Why?: The research paper addresses outdated or erroneous knowledge in LLMs and the need to edit models continuously without retraining.

💻 How?: The research paper proposes RECIPE (RetriEval-augmented ContInuous Prompt lEarning). RECIPE converts knowledge statements into short, informative prompts that are added to the LLM's input query embedding to refine its responses, while a Knowledge Sentinel (KS) determines whether the retrieved knowledge is relevant. The retriever and the prompt encoder are trained jointly to achieve the editing properties of reliability, generality, and locality. The method aims to boost both editing efficacy and inference efficiency in lifelong learning.
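A toy sketch of the retrieval side, under stated assumptions: each edit is stored as a hand-made key embedding plus a list of prompt vectors, the sentinel is a fixed vector, and an edit is used only if its similarity to the query beats the query's similarity to the sentinel. In RECIPE these pieces are learned jointly; the gating rule, the class `PromptMemory`, and the prefixing step are hypothetical readings of the description above.

```python
import math
from typing import List, Optional, Tuple

Vec = List[float]

def cosine(a: Vec, b: Vec) -> float:
    denom = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return sum(x * y for x, y in zip(a, b)) / denom if denom else 0.0

class PromptMemory:
    """Hypothetical store of (retrieval key, continuous prompt) pairs, one per knowledge edit."""

    def __init__(self, sentinel: Vec):
        self.sentinel = sentinel  # stands in for the learned Knowledge Sentinel embedding
        self.entries: List[Tuple[Vec, List[Vec]]] = []

    def add_edit(self, key: Vec, prompt: List[Vec]) -> None:
        self.entries.append((key, prompt))

    def retrieve(self, query: Vec) -> Optional[List[Vec]]:
        if not self.entries:
            return None
        key, prompt = max(self.entries, key=lambda entry: cosine(query, entry[0]))
        # Assumed gating rule: use the best edit only if it beats the sentinel's similarity.
        return prompt if cosine(query, key) > cosine(query, self.sentinel) else None

def build_input(memory: PromptMemory, query_repr: Vec, token_embeddings: List[Vec]) -> List[Vec]:
    """Prefix the retrieved prompt vectors (if any) to the query's token embeddings."""
    prompt = memory.retrieve(query_repr)
    return (prompt or []) + token_embeddings

memory = PromptMemory(sentinel=[0.0, 0.0, 1.0])
memory.add_edit(key=[1.0, 0.0, 0.0], prompt=[[0.1, 0.1, 0.1], [0.2, 0.2, 0.2]])
tokens = [[0.5, 0.5, 0.5]]
edited = build_input(memory, [0.9, 0.1, 0.1], tokens)     # related query: edit prompt is prefixed
untouched = build_input(memory, [0.0, 0.2, 0.9], tokens)  # unrelated query: sentinel wins
```

Comparing against a sentinel rather than a fixed cutoff gives a query-dependent threshold, which is what protects locality: unrelated queries pass through unchanged.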

🤔 Why?: LLMs fine-tuned on small datasets are prone to overconfidence and poor calibration.

💻 How?: To solve this problem, the research paper proposes combining Low-Rank Adaptation (LoRA) with Stochastic Weight Averaging-Gaussian (SWAG). This enables approximate Bayesian inference in LLMs, improving generalization and calibration. LoRA restricts fine-tuning to a small number of low-rank parameters, which makes small datasets easier to handle. SWAG, on the other hand, fits a Gaussian over the weights visited during training, from which model weights can be sampled efficiently to improve the model's uncertainty estimates.
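A minimal sketch of SWAG-diagonal (the simplest SWAG variant; full SWAG also keeps a low-rank covariance term) applied to a flat vector standing in for LoRA parameters. The snapshots, the `predict` function, and the sample count are hypothetical; a real setup would collect snapshots from the LoRA weights during fine-tuning.

```python
import math
import random
from typing import Callable, List

Vec = List[float]

class DiagonalSWAG:
    """Running first and second moments of parameter snapshots (the SWAG-diagonal variant)."""

    def __init__(self, dim: int):
        self.n = 0
        self.mean = [0.0] * dim
        self.sq_mean = [0.0] * dim

    def collect(self, params: Vec) -> None:
        """Fold one SGD iterate (e.g. the flattened LoRA weights) into the running moments."""
        self.n += 1
        for i, p in enumerate(params):
            self.mean[i] += (p - self.mean[i]) / self.n
            self.sq_mean[i] += (p * p - self.sq_mean[i]) / self.n

    def sample(self, rng: random.Random) -> Vec:
        """Draw weights from N(mean, diag(sq_mean - mean^2))."""
        out = []
        for m, s in zip(self.mean, self.sq_mean):
            var = max(s - m * m, 1e-12)  # clamp: rounding can make the variance slightly negative
            out.append(m + math.sqrt(var) * rng.gauss(0.0, 1.0))
        return out

def bayesian_average(swag: DiagonalSWAG, predict: Callable[[Vec], float], k: int, seed: int = 0) -> float:
    """Bayesian model averaging: mean prediction over k sampled weight vectors."""
    rng = random.Random(seed)
    return sum(predict(swag.sample(rng)) for _ in range(k)) / k

swag = DiagonalSWAG(dim=2)
for snapshot in ([1.0, 2.0], [3.0, 2.0]):  # hypothetical LoRA weights from two SGD steps
    swag.collect(snapshot)

def predict(params: Vec) -> float:
    # Hypothetical model output: a probability from a sigmoid of the summed parameters.
    return 1.0 / (1.0 + math.exp(-sum(params) / 10.0))

p_avg = bayesian_average(swag, predict, k=20)
```

Because only the LoRA parameters are tracked, the memory cost of the moments scales with the adapter size rather than the full model.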

🤔 Why?: LLMs are prone to logical and numerical errors when solving complex mathematical reasoning tasks.

💻 How?: The paper proposes an approach that leverages the Monte Carlo Tree Search (MCTS) framework to automatically generate both process supervision and evaluation signals. Generating this training data requires only the math questions and their final answers, not step-by-step solutions. The researchers then train a step-level value model to improve the LLM's inference process in mathematical domains.
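The key idea, that final answers alone can score intermediate steps, can be illustrated with a simplified Monte Carlo rollout estimate: from a partial solution, sample several completions and use the share that reach the known answer as that step's value. This is not full MCTS (which adds selection, for example with UCT, plus expansion and backpropagation); `toy_sampler` is a hypothetical stand-in for an LLM.

```python
import random
from typing import Callable, List, Tuple

# Hypothetical LLM stand-in: given the solution steps so far, sample a final answer.
Sampler = Callable[[List[str], random.Random], str]

def estimate_step_value(prefix: List[str], final_answer: str, sample_completion: Sampler,
                        n_rollouts: int = 32, seed: int = 0) -> float:
    """Monte Carlo value of a partial solution: share of rollouts reaching the known answer."""
    rng = random.Random(seed)
    hits = sum(sample_completion(prefix, rng) == final_answer for _ in range(n_rollouts))
    return hits / n_rollouts

def label_steps(steps: List[str], final_answer: str, sample_completion: Sampler,
                n_rollouts: int = 32) -> List[Tuple[str, float]]:
    """(step, value) pairs that could serve as targets for a step-level value model."""
    return [(steps[i], estimate_step_value(steps[: i + 1], final_answer, sample_completion, n_rollouts))
            for i in range(len(steps))]

# Toy problem 3 * (2 + 4) = 18; the sampler finishes correctly far more often after valid steps.
VALID_STEPS = {"2+4=6", "3*6=18"}

def toy_sampler(prefix: List[str], rng: random.Random) -> str:
    p_correct = 0.9 if all(step in VALID_STEPS for step in prefix) else 0.1
    return "18" if rng.random() < p_correct else "wrong"

good = estimate_step_value(["2+4=6"], "18", toy_sampler)
bad = estimate_step_value(["2+4=7"], "18", toy_sampler)
labels = label_steps(["2+4=6", "3*6=18"], "18", toy_sampler)
```

Only the reference answer `"18"` is needed; no human-written reasoning steps appear anywhere in the labeling loop.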

🤔 Why?: LLMs are constrained by their high computational cost.

💻 How?: The paper proposes a novel approach for creating accurate, sparse foundational versions of performant language models. It combines the SparseGPT one-shot pruning method with sparse pretraining on a subset of a pretraining dataset mixed with a Python subset. The paper also uses Neural Magic's DeepSparse and nm-vllm engines for inference acceleration.
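SparseGPT itself uses approximate second-order information to choose which weights to prune and how to adjust the remaining ones. As a much simpler stand-in that only shows what an unstructured sparsity target means, here is one-shot magnitude pruning plus a masked update that keeps pruned weights at zero during further training (an assumed reading of "sparse pretraining"). All names and numbers are hypothetical.

```python
from typing import List

Matrix = List[List[float]]
Mask = List[List[bool]]

def magnitude_mask(w: Matrix, sparsity: float) -> Mask:
    """Keep-mask that prunes the `sparsity` fraction of smallest-magnitude weights."""
    flat = sorted((abs(v), r, c) for r, row in enumerate(w) for c, v in enumerate(row))
    n_prune = int(round(sparsity * len(flat)))
    pruned = {(r, c) for _, r, c in flat[:n_prune]}
    return [[(r, c) not in pruned for c in range(len(row))] for r, row in enumerate(w)]

def apply_mask(w: Matrix, mask: Mask) -> Matrix:
    return [[v if keep else 0.0 for v, keep in zip(row, mrow)] for row, mrow in zip(w, mask)]

def sparse_sgd_step(w: Matrix, grad: Matrix, mask: Mask, lr: float) -> Matrix:
    """Update only surviving weights so the sparsity pattern is preserved while training."""
    return [[(v - lr * g) if keep else 0.0 for v, g, keep in zip(row, grow, mrow)]
            for row, grow, mrow in zip(w, grad, mask)]

w = [[0.9, -0.1, 0.4, -0.05], [0.2, -0.7, 0.01, 0.3]]
mask = magnitude_mask(w, sparsity=0.5)
sparse = apply_mask(w, mask)
trained = sparse_sgd_step(sparse, [[1.0] * 4, [1.0] * 4], mask, lr=0.1)
```

The zeros are what sparse inference engines exploit: they can skip multiplications by pruned weights entirely.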

📊 Results:

The paper reports a total speedup of up to 8.6x on CPUs for sparse-quantized language models. These results hold across diverse and challenging NLP tasks, indicating that the approach generalizes. Overall, this work paves the way for creating smaller and faster language models without sacrificing accuracy.

🤔 Why?: LLM training is hindered by high computational costs and limited hardware memory.

💻 How?: The paper proposes Collage, which uses a multi-component float representation in low precision and accounts for numerical errors at critical points in the training process. This allows low-precision representations to be used effectively without sacrificing numerical accuracy or stability.
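Collage's own algorithms are not reproduced here. The building block behind multi-component floats is an error-free transformation such as Knuth's TwoSum, which represents a + b exactly as an unevaluated sum hi + lo. Python floats are 64-bit, so this sketch demonstrates the effect at float64 rather than at the low-precision formats Collage targets; the update size and count are arbitrary.

```python
from typing import Tuple

def two_sum(a: float, b: float) -> Tuple[float, float]:
    """Knuth's TwoSum: s = fl(a + b) and e is the rounding error, so a + b == s + e exactly."""
    s = a + b
    b_virtual = s - a
    a_virtual = s - b_virtual
    e = (a - a_virtual) + (b - b_virtual)
    return s, e

def add_to_pair(hi: float, lo: float, x: float) -> Tuple[float, float]:
    """Add x to the two-component value hi + lo and renormalize the pair."""
    s, e = two_sum(hi, x)
    return two_sum(s, e + lo)

# A "weight" of 1.0 receives 1000 tiny updates, each below half an ulp of 1.0.
naive = 1.0
hi, lo = 1.0, 0.0
for _ in range(1000):
    naive += 1e-17                       # rounded away every time
    hi, lo = add_to_pair(hi, lo, 1e-17)  # captured in the low component

total_update = (hi - 1.0) + lo           # approximately 1e-14
```

This is the failure mode that usually forces a 32-bit master copy of the weights: small optimizer updates vanish when added to large low-precision values. Keeping the rounding error in a second component preserves them.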

📊 Results: The research paper demonstrates that pre-training using Collage can eliminate the need for using 32-bit floating-point copies of the model, resulting in similar or even better training performance compared to the commonly used (16,32)-bit mixed-precision strategy.

LLM evaluations

🤔 Why?: The research paper addresses the lack of objective assessments of the energy efficiency of code that software developers generate with LLMs.

💻 How?: The paper conducts an empirical study comparing the energy efficiency of code generated by Code Llama, one of the most recent LLM tools, with that of human-written code. The experiment uses three human-written benchmarks in different programming languages and compares the energy efficiency of both implementations.

CRAFT: Extracting and Tuning Cultural Instructions from the Wild [not a benchmark, but an approach to model fine-tuning]

🖥️ Compute upgrade

🤔 Why?: The research paper addresses the lack of research on how wireless communications can support LLMs.

💻 How?: The paper proposes WDMoE, a wireless distributed LLM paradigm based on a Mixture of Experts (MoE) architecture. The LLM is deployed collaboratively across the edge server at the base station (BS) and mobile devices: the gating network and the neural network layer sit at the BS, while the expert networks are distributed across the devices. This arrangement leverages the parallelism of expert networks, and an expert selection policy that considers both model performance and end-to-end latency is designed to overcome the instability of wireless communications.
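The paper's actual selection policy is not reproduced here. As a hypothetical illustration of "considering both model performance and end-to-end latency", the rule below drops experts whose device exceeds a latency budget, ranks the rest by gating weight minus a latency penalty, and renormalizes the chosen weights. The device names, `latency_weight`, and `budget_ms` are all assumptions.

```python
from typing import Dict, List

def select_experts(gate: Dict[str, float], latency_ms: Dict[str, float], k: int = 2,
                   latency_weight: float = 0.01, budget_ms: float = 100.0) -> List[str]:
    """Hypothetical latency-aware top-k selection over experts hosted on mobile devices."""
    # Drop experts whose device cannot answer within the latency budget.
    feasible = [e for e in gate if latency_ms[e] <= budget_ms]
    # Trade gating weight (model performance) against expected end-to-end latency.
    ranked = sorted(feasible, key=lambda e: gate[e] - latency_weight * latency_ms[e], reverse=True)
    return ranked[:k]

def renormalize(gate: Dict[str, float], chosen: List[str]) -> Dict[str, float]:
    """Rescale the chosen experts' gating weights so they sum to 1 before combining outputs."""
    total = sum(gate[e] for e in chosen)
    return {e: gate[e] / total for e in chosen}

gate = {"phone_a": 0.40, "phone_b": 0.35, "tablet": 0.15, "laptop": 0.10}
latency_ms = {"phone_a": 120.0, "phone_b": 30.0, "tablet": 5.0, "laptop": 20.0}
chosen = select_experts(gate, latency_ms)
weights = renormalize(gate, chosen)
```

Here the highest-scoring expert by gate alone (`phone_a`) is skipped because its link is too slow, which is the kind of trade-off a wireless deployment has to make.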

📚Survey papers

🧯Let’s make LLMs safe!!

🤔 Why?: The research paper addresses the problem of malicious intent being hidden and undetected in user prompts. This poses a threat to content security measures and can lead to the generation of restricted content.

💻 How?: The research paper proposes a theoretical hypothesis and analytical approach to demonstrate and address the underlying maliciousness. They introduce a new black-box jailbreak attack methodology called IntentObfuscator, which exploits the identified flaw by obfuscating the true intentions behind user prompts. This method manipulates query complexity and ambiguity to evade malicious intent detection, thus forcing LLMs to generate restricted content. The research paper details two implementations under this framework - "Obscure Intention" and "Create Ambiguity" - to effectively bypass content security measures.

🤖Multi-agent learning

The research paper proposes an innovative framework called MARE, which leverages collaboration among LLMs in the entire requirements engineering (RE) process. MARE consists of five agents and nine actions, divided into four tasks: elicitation, modeling, verification, and specification. Each agent is responsible for specific tasks, and they can collaborate by uploading their generated intermediate requirements artifacts to a shared workspace. This allows them to obtain the necessary information and produce more accurate requirements models.

🌈 Creative ways to use LLMs!!

Exploring the Potential of the Large Language Models (LLMs) in Identifying Misleading News Headlines [Twitter is doing something similar with Community Notes; for now it is asking for our feedback (RLHF)!]

🤔 Why?: The research paper addresses low learning efficiency in reinforcement learning, particularly in complex environments where traditional methods may not be effective.

💻 How?: The research paper proposes LLM-guided Q-learning, which uses LLMs as heuristics to aid in learning the Q-function for reinforcement learning. This approach leverages the strengths of both Q-learning and LLMs without introducing performance bias. The LLM heuristic provides action-level guidance, and the architecture converts the impact of hallucinations into exploration costs. This enables more efficient sampling and improved performance on complex control tasks.
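A simplified sketch of the idea on a five-state chain, assuming the LLM's advice enters only as initial Q-values (the paper's framework is more involved). `helpful` and `hallucinated` are hypothetical stand-ins for LLM suggestions. Because standard TD updates still run, a wrong hint is eventually overwritten; how many episodes that takes depends on `eps` and the episode budget, which is the "exploration cost" of a hallucination.

```python
import random
from typing import Callable, Dict, List, Tuple

State = int
Action = int
N_STATES = 5        # chain of states 0..4; reaching state 4 ends the episode with reward 1
ACTIONS = (0, 1)    # 0 = move left, 1 = move right

def env_step(s: State, a: Action) -> Tuple[State, float, bool]:
    s2 = min(s + 1, N_STATES - 1) if a == 1 else max(s - 1, 0)
    done = s2 == N_STATES - 1
    return s2, (1.0 if done else 0.0), done

def q_learning(heuristic: Callable[[State, Action], float], episodes: int = 200,
               alpha: float = 0.5, gamma: float = 0.9, eps: float = 0.1,
               seed: int = 0) -> Dict[Tuple[State, Action], float]:
    rng = random.Random(seed)
    # The heuristic (stand-in for LLM advice) only seeds the Q-table; TD updates can overwrite it.
    q = {(s, a): heuristic(s, a) for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        s = 0
        for _ in range(100):  # cap on episode length
            if rng.random() < eps:
                a = rng.choice(ACTIONS)
            else:
                a = max(ACTIONS, key=lambda b: q[(s, b)])
            s2, r, done = env_step(s, a)
            target = r if done else r + gamma * max(q[(s2, b)] for b in ACTIONS)
            q[(s, a)] += alpha * (target - q[(s, a)])
            s = s2
            if done:
                break
    return q

def greedy_policy(q: Dict[Tuple[State, Action], float]) -> List[Action]:
    return [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]

def helpful(s: State, a: Action) -> float:
    return 0.5 if a == 1 else 0.0  # hypothetical LLM hint: "move right"

def hallucinated(s: State, a: Action) -> float:
    return 0.5 if a == 0 else 0.0  # hypothetical wrong hint: "move left"

policy = greedy_policy(q_learning(helpful))
q_misled = q_learning(hallucinated)
```

Seeding Q-values rather than overriding the learned policy is what keeps the final policy unbiased: the Bellman updates, not the heuristic, determine where the values converge.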

The research paper proposes a solution called "Snake Learning", which is a distributed learning framework designed to address the challenges faced by existing frameworks such as Federated Learning and Split Learning in dynamic network environments. It works by respecting the heterogeneity of computing capabilities and data distribution among network nodes in 6G networks, and sequentially training designated parts of the model on individual nodes. This layer-by-layer serpentine update mechanism significantly reduces the demands for storage, memory, and communication during the training phase, making it more efficient and adaptable for both Computer Vision (CV) and LLM tasks.
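A toy sketch of the sequential, layer-by-layer pattern, under heavy simplifying assumptions: the "model" is a product of scalar layers, each node holds its own small dataset, and node k updates only layer k before handing the model to the next node. Everything here (data, learning rate, round count, function names) is hypothetical and far simpler than the paper's framework.

```python
from typing import List, Tuple

Data = List[Tuple[float, float]]  # (input, target) pairs held locally by one node

def forward(w: List[float], x: float) -> float:
    """Toy 'deep' model made of scalar layers: y = w[n-1] * ... * w[0] * x."""
    out = x
    for wi in w:
        out *= wi
    return out

def mse(w: List[float], data: Data) -> float:
    return sum((forward(w, x) - y) ** 2 for x, y in data) / len(data)

def layer_grad(w: List[float], data: Data, k: int) -> float:
    """d(mse)/d(w[k]) computed on one node's local data."""
    g = 0.0
    for x, y in data:
        others = x
        for j, wj in enumerate(w):
            if j != k:
                others *= wj  # product of every layer except k, times the input
        g += 2 * (forward(w, x) - y) * others
    return g / len(data)

def snake_round(w: List[float], nodes: List[Data], lr: float) -> List[float]:
    """One sequential pass: node k updates only layer k, then hands the model on."""
    w = list(w)
    for k, data in enumerate(nodes):
        w[k] -= lr * layer_grad(w, data, k)
    return w

nodes = [[(1.0, 2.0), (2.0, 4.0)], [(3.0, 6.0)]]  # two nodes, two layers, target y = 2x
w = [1.0, 1.0]
all_data = [pair for data in nodes for pair in data]
start = mse(w, all_data)
for _ in range(50):
    w = snake_round(w, nodes, lr=0.01)
```

Each node computes gradients for a single layer only, which hints at why the approach lowers per-node memory and communication compared with training the full model everywhere.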

🤔 Why?: The research paper addresses the challenge of creating personalized conversational agents by utilizing LLMs to generate multi-session and multi-domain conversations that reflect real-world user preferences.

💻 How?: The paper proposes a method called LAPS, which stands for large-scale Personalized Sessions. It uses LLMs to guide a single human worker in generating personalized dialogues. The method is reported to be more efficient and effective than previous approaches that rely on expert-generated conversations in a wizard-of-oz setup. LAPS can collect large-scale, human-written conversations and extract user preferences, which can then be used to train conversational agents to provide personalized responses.

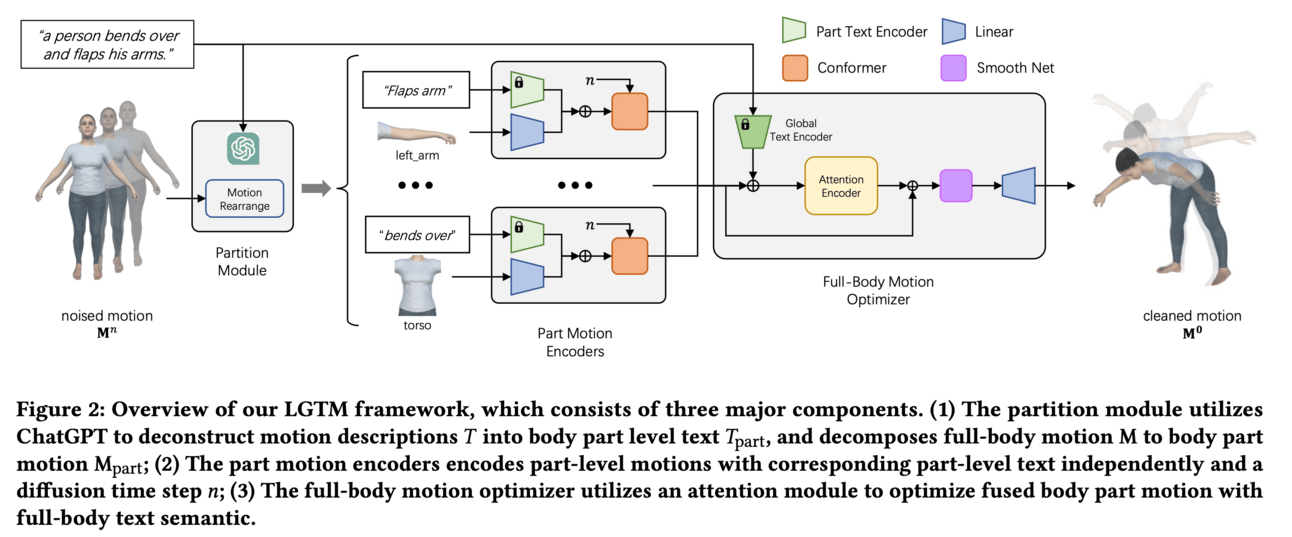

🔗GitHub: https://github.com/L-Sun/LGTM

🤔 Why?: The research paper addresses the challenge of accurately translating textual descriptions into semantically coherent human motion in computer animation. Traditional methods often struggle with semantic discrepancies, particularly in aligning specific motions to the correct body parts.

💻 How?: To solve this problem, the research paper proposes LGTM, a novel Local-to-Global pipeline for Text-to-Motion generation. It utilizes a diffusion-based architecture and employs a two-stage pipeline. In the first stage, LLMs are used to decompose global motion descriptions into part-specific narratives. These narratives are then processed by independent body-part motion encoders to ensure precise local semantic alignment. In the second stage, an attention-based full-body optimizer refines the motion generation results and guarantees overall coherence.

🤔 Why?: The research paper addresses the challenge of automatically performing APIzation, i.e., turning Stack Overflow code snippets into reusable APIs.

💻 How?: The paper proposes Code2API, which uses LLMs to generate well-formed APIs for given code snippets. It requires neither additional model training nor manually crafted rules, so it is easy to deploy on personal computers without relying on external tools. Code2API guides the LLM through well-designed prompts that combine chain-of-thought reasoning and few-shot in-context learning, so the model understands the task and solves it step by step, much as a developer would.
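A sketch of how such a prompt might be assembled: numbered step-by-step instructions (chain of thought) followed by a few-shot snippet/method pair and then the target snippet. The instruction wording, the Java example pair, and the function name `build_apization_prompt` are hypothetical; the paper's actual prompts are not reproduced here.

```python
from typing import List, Tuple

# Hypothetical few-shot pair: (raw Stack Overflow snippet, APIzed method).
FEW_SHOT: List[Tuple[str, str]] = [(
    "String s = \"a,b,c\";\nString[] parts = s.split(\",\");",
    "public static String[] splitByComma(String s) {\n    return s.split(\",\");\n}",
)]

# Hypothetical chain-of-thought steps mirroring how a developer might APIze a snippet.
STEPS = [
    "Identify the snippet's purpose and choose a descriptive method name.",
    "Decide which variables should become parameters and which value should be returned.",
    "Add any missing declarations so the method compiles on its own.",
    "Output only the final method.",
]

def build_apization_prompt(snippet: str) -> str:
    parts = ["You turn Stack Overflow code snippets into reusable, well-formed methods.",
             "Think step by step:"]
    parts += [f"{i}. {step}" for i, step in enumerate(STEPS, start=1)]
    for raw, api in FEW_SHOT:
        parts += ["", "Snippet:", raw, "Method:", api]
    parts += ["", "Snippet:", snippet, "Method:"]
    return "\n".join(parts)

prompt = build_apization_prompt("int total = a + b;\nSystem.out.println(total);")
```

Ending the prompt on "Method:" nudges the model to answer with just the method body, which keeps the output easy to extract without extra tooling.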

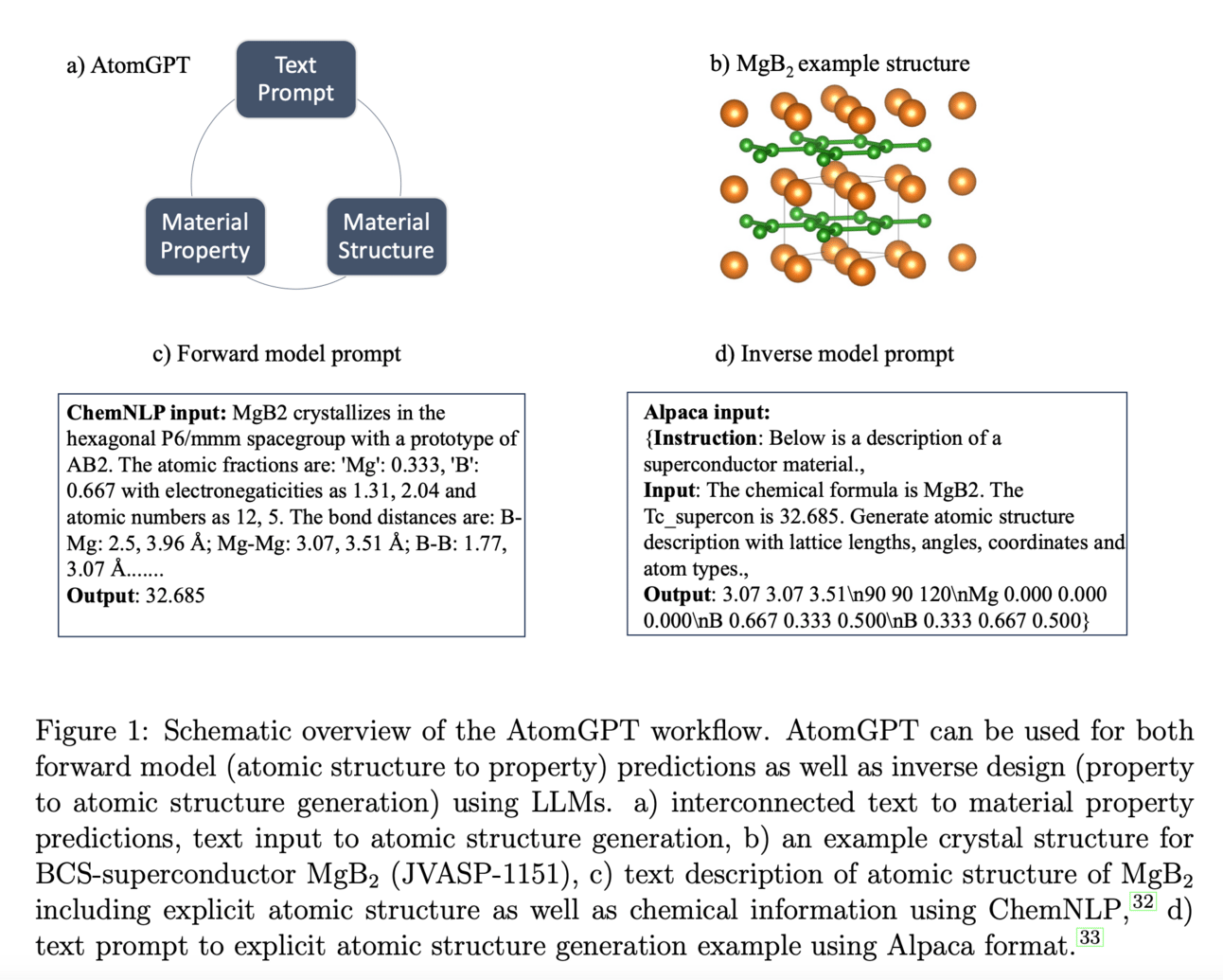

The research paper proposes a solution in the form of AtomGPT, a model specifically designed for materials design using transformer architectures. AtomGPT is capable of predicting both atomistic properties and generating atomic structures, leveraging a combination of chemical and structural text descriptions. This approach is efficient and comparable in accuracy to graph neural network models. The predictions are also validated through density functional theory calculations.

🤖LLMs go multi-modal! & robotics

🤔 Why?: Automatic speech recognition (ASR) performance degrades in challenging scenarios such as multi-accent speech.

💻 How?: The research paper proposes MMGER, a unified generative error correction (GER) model for ASR and accent recognition (AR) that leverages multi-modal correction and multi-granularity correction. It uses multi-task learning over dynamic 1-best hypotheses and accent embeddings, fine-grained frame-level correction by force-aligning acoustic features with character-level 1-best hypotheses, and coarse-grained utterance-level correction that incorporates regular 1-best hypotheses.

🔗Project page: https://janghyuncho.github.io/Cube-LLM

🤔 Why?: The research paper addresses the challenge of extending the capabilities of multimodal LLMs (MLLMs) to ground and reason about images in three-dimensional space.

💻 How?: The research paper proposes to solve this problem by first creating a large-scale pre-training dataset called LV3D, which combines multiple existing 2D and 3D recognition datasets under a common task formulation. They then introduce a new MLLM called Cube-LLM and pre-train it on LV3D. This MLLM shows strong 3D perception capability without the need for specific 3D architectural design or training objective. It also exhibits intriguing properties, such as being able to apply chain-of-thought prompting, follow complex and diverse instructions, and be visually prompted by specialists.