Dear readers,

A new GPT model, GPT-4o, launched today, and it’s free for all 🤗 Read more about OpenAI’s spring 2024 update.

🔑 Takeaways from today’s newsletter

A new paper conducts a controlled experiment to understand the effect of fine-tuning on hallucination.

A new ensemble-based multi-agent LLM approach called “Smurfs”!

It is now possible to compress LLMs by 77% with minimal performance loss!

Lots of benchmarks published today.

FlockGPT - a GPT for mini-drones (no more complex modelling to draw designs in the sky!)

Robots can feel emotion! A new weight coefficient lets robots factor emotion into their decisions.

🔬Core research improving LLMs!

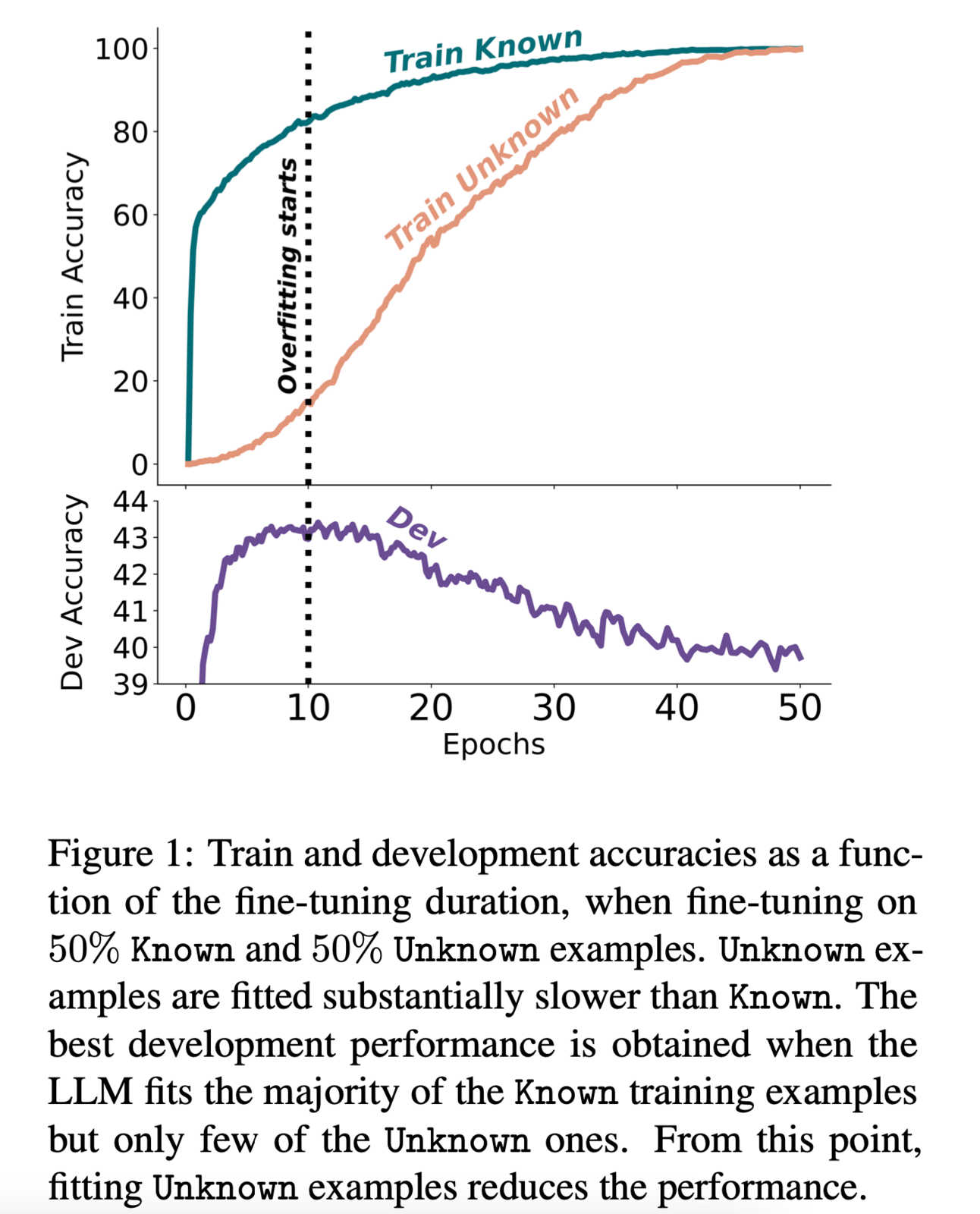

💡Why?: Fine-tuning an LLM carries the risk that the model learns to hallucinate factually incorrect responses.

💻 How?: The paper proposes a controlled setup, focused on closed-book QA, that varies the proportion of fine-tuning examples that introduce new knowledge.

This allows for studying the impact of exposure to new knowledge on the model's capability to use its pre-existing knowledge.

The setup also measures the speed at which the model learns new knowledge and its tendency to hallucinate as a result. This helps in understanding the effectiveness of fine-tuning in teaching large language models to use their pre-existing knowledge more efficiently.
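To make the controlled setup concrete, here is a minimal sketch of how fine-tuning sets with a varying share of new-knowledge examples could be assembled. The function name `build_finetune_set`, the `(question, answer)` tuples, and the split into `known` and `unknown` pools are illustrative assumptions, not the paper's actual pipeline; how the paper decides whether the model already knows a fact is not covered here.

```python
import random

def build_finetune_set(known, unknown, unknown_fraction, size, seed=0):
    """Sample a fine-tuning set with a fixed share of new-knowledge examples.

    known / unknown: pools of (question, answer) pairs (hypothetical format).
    unknown_fraction: share of the set drawn from the `unknown` pool.
    """
    rng = random.Random(seed)
    n_unknown = round(size * unknown_fraction)
    n_known = size - n_unknown
    mix = rng.sample(unknown, n_unknown) + rng.sample(known, n_known)
    rng.shuffle(mix)
    return mix

# Synthetic placeholder pools, only to show the sweep.
known_pool = [(f"known question {i}", "answer") for i in range(100)]
unknown_pool = [(f"unknown question {i}", "answer") for i in range(100)]

for fraction in (0.0, 0.25, 0.5, 0.75, 1.0):
    train_set = build_finetune_set(known_pool, unknown_pool, fraction, size=40)
    n_new = sum(example in unknown_pool for example in train_set)
    print(f"unknown share {fraction:.2f}: {n_new} of {len(train_set)} examples are new")
```

Each set would then be used for a separate fine-tuning run, so that any change in hallucination rate can be attributed to the share of new knowledge alone.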

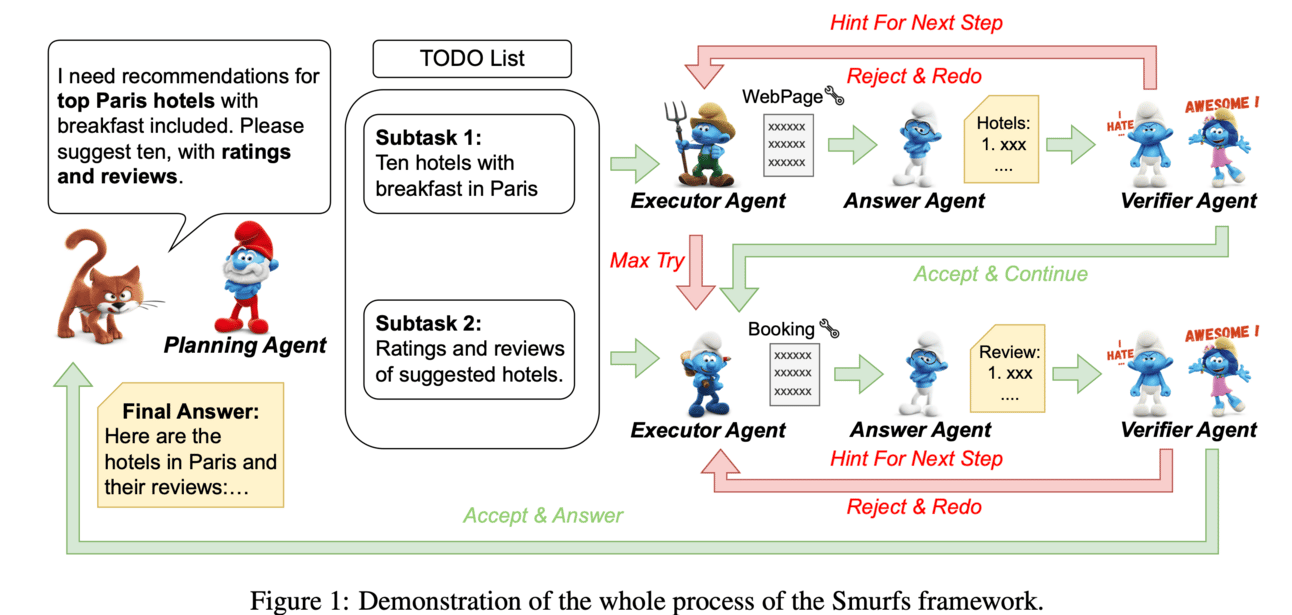

💡Why?: Improving LLM performance on complex tasks.

💻How?: Paper proposes a solution called "Smurfs", a multi-agent framework that transforms a conventional LLM into a synergistic ensemble. This is achieved through innovative prompting strategies that allocate distinct roles within the model, facilitating collaboration among specialized agents. Smurfs also provides access to external tools to efficiently solve complex tasks without the need for extra training.

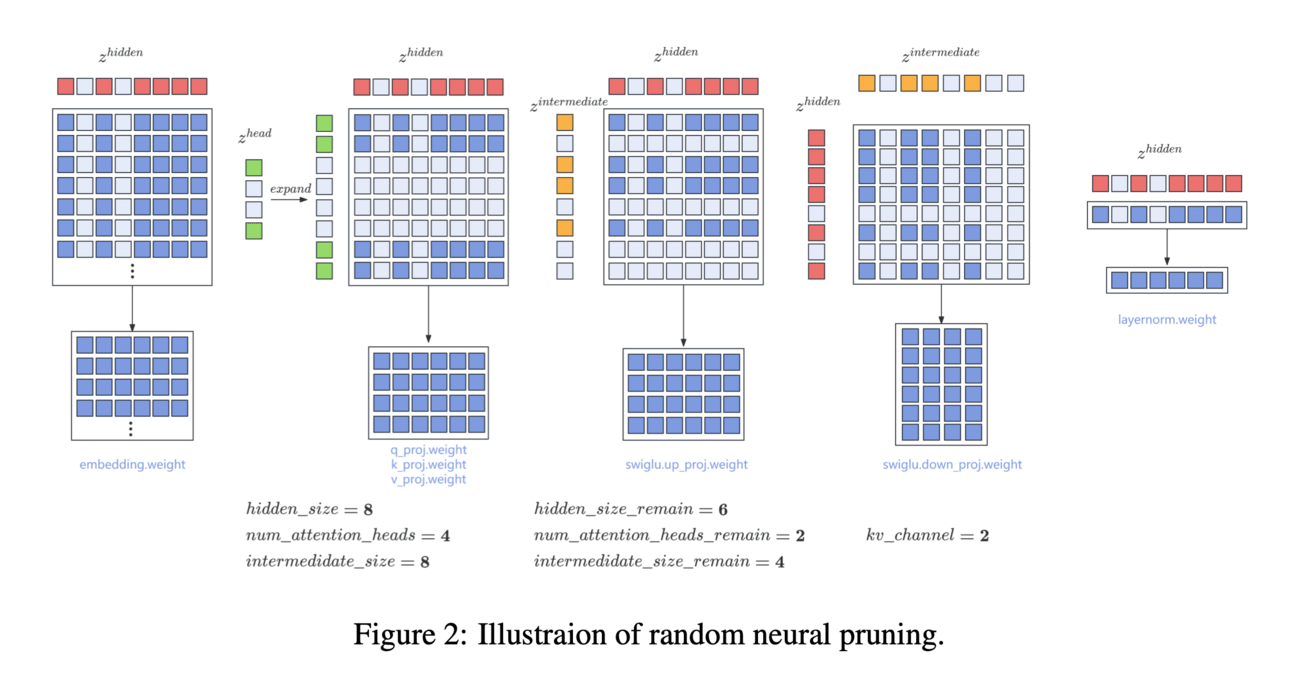

💡Why?: LLMs have high deployment requirements and significant inference costs.

💻How?: The research paper proposes to solve this problem by training smaller models. This is achieved through the development of OpenBA-V2, a 3.4B model derived from multi-stage compression and continual pre-training from the original 15B OpenBA model. OpenBA-V2 utilizes more data, flexible training objectives, and techniques such as layer pruning, neural pruning, and vocabulary pruning to achieve a compression rate of 77.3% with minimal performance loss. This allows for the deployment of smaller, more efficient language models that can still achieve competitive performance.

📊Results: The research paper demonstrates the effectiveness of OpenBA-V2 by achieving results close to or on par with the original 15B OpenBA model in downstream tasks such as common sense reasoning and Named Entity Recognition (NER). This shows that LLMs can be compressed into smaller ones with minimal performance loss, making them more viable for use in resource-limited scenarios.
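As a quick sanity check, the 77.3% figure follows directly from the two model sizes quoted above. The helper name `compression_rate` is just for illustration, and the inputs are the rounded sizes from the summary (15B and 3.4B parameters).

```python
def compression_rate(original_params: float, compressed_params: float) -> float:
    """Fraction of parameters removed, relative to the original model."""
    return 1.0 - compressed_params / original_params

# Parameter counts in billions, as quoted in the summary.
rate = compression_rate(15.0, 3.4)
print(f"{rate:.1%}")  # 77.3%
```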

💡Why?: The research paper addresses the challenge of aggregating the preferences of multiple agents over LLM-generated replies to user queries. This is a difficult task because agents may modify or exaggerate their preferences, and new agents may participate for each new query, making it impractical to fine-tune LLMs on these preferences.

💻How?: To solve this problem, the research paper proposes an auction mechanism that operates without fine-tuning or access to model weights. This mechanism is designed to provably converge to the output of the optimally fine-tuned LLM as computational resources are increased. It can also incorporate contextual information about the agents when available, which significantly accelerates its convergence. A well-designed payment rule ensures that truthful reporting is the optimal strategy for all agents, while also promoting an equity property by aligning each agent's utility with her contribution to social welfare. This is essential for the mechanism's long-term viability. The proposed approach can be applied whenever monetary transactions are permissible, with its flagship application being in online advertising. In this context, advertisers try to steer LLM-generated responses towards their brand interests, while the platform aims to maximize advertiser value and ensure user satisfaction.

MLLMs performance enhancement

Boosting Multimodal Large Language Models with Visual Tokens Withdrawal for Rapid Inference

🔗GitHub: https://github.com/lzhxmu/VTW

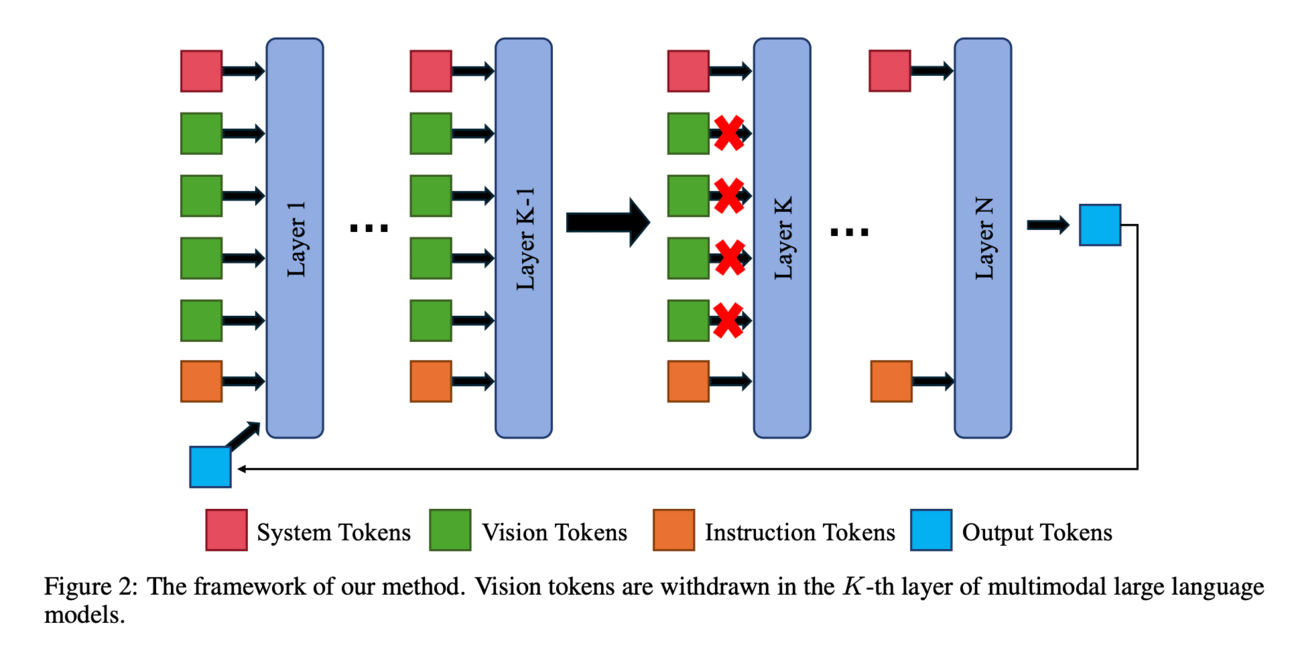

💡Why?: MLLMs require heavy computation at inference time because of their large number of parameters and the additional input tokens needed to represent visual information.

💻How?: The research paper proposes a solution called Visual Tokens Withdrawal (VTW), which is a plug-and-play module designed to enhance the speed of inference in MLLMs. This approach is based on two observations:

(1) the attention sink phenomenon, where initial and nearest tokens receive the most attention while middle vision tokens receive minimal attention, and

(2) information migration, where visual information is transferred to subsequent text tokens in the first few layers of MLLMs.

To address these observations, the researchers strategically withdraw vision tokens at a certain layer, allowing only text tokens to engage in subsequent layers. The ideal layer for withdrawal is determined using the Kullback-Leibler divergence criterion on a limited set of tiny datasets.
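The idea can be illustrated on a toy model. The sketch below is not the VTW implementation from the linked repository: the random "layers", the normalization, the threshold value, and the rule "pick the earliest layer whose KL divergence to the full model is below a threshold" are all assumptions made for illustration. It only shows the two moving parts: dropping vision tokens from a chosen layer onward, and comparing output distributions with KL divergence.

```python
import numpy as np

rng = np.random.default_rng(0)
D, VOCAB, N_LAYERS = 16, 32, 6

# Toy stand-in for an MLLM: one random projection per layer plus an unembedding.
LAYERS = [rng.normal(scale=D ** -0.5, size=(D, D)) for _ in range(N_LAYERS)]
UNEMBED = rng.normal(size=(D, VOCAB))

def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def layer_forward(h, w):
    """Toy single-head self-attention with a residual and a norm (no causal mask)."""
    attn = softmax((h @ w) @ h.T / np.sqrt(h.shape[1]))
    h = h + attn @ h
    return h / np.linalg.norm(h, axis=1, keepdims=True) * np.sqrt(h.shape[1])

def next_token_probs(h, is_vision, withdraw_at=None):
    """Run every layer; from layer `withdraw_at` on, only text tokens remain."""
    for k, w in enumerate(LAYERS):
        if k == withdraw_at:
            h = h[~is_vision]  # withdraw the vision tokens
        h = layer_forward(h, w)
    return softmax(h[-1] @ UNEMBED)  # assumes the last token is a text token

def kl(p, q, eps=1e-12):
    """KL(p || q) with clipping to avoid log(0)."""
    p, q = np.clip(p, eps, 1.0), np.clip(q, eps, 1.0)
    return float(np.sum(p * (np.log(p) - np.log(q))))

def pick_withdrawal_layer(samples, threshold):
    """Earliest layer whose mean KL to the full model is below `threshold`."""
    for k in range(1, N_LAYERS):
        div = np.mean([kl(next_token_probs(h, m), next_token_probs(h, m, k))
                       for h, m in samples])
        if div < threshold:
            return k
    return N_LAYERS  # no layer qualifies: never withdraw

# One synthetic sample: 2 text tokens, 6 vision tokens, 2 text tokens.
h = rng.normal(size=(10, D))
is_vision = np.array([False] * 2 + [True] * 6 + [False] * 2)
layer = pick_withdrawal_layer([(h, is_vision)], threshold=0.05)
print("withdraw vision tokens before layer", layer)
```

With random weights the chosen layer is meaningless. The point is the shape of the procedure: later layers process fewer tokens, and a small calibration set decides how early that can start.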

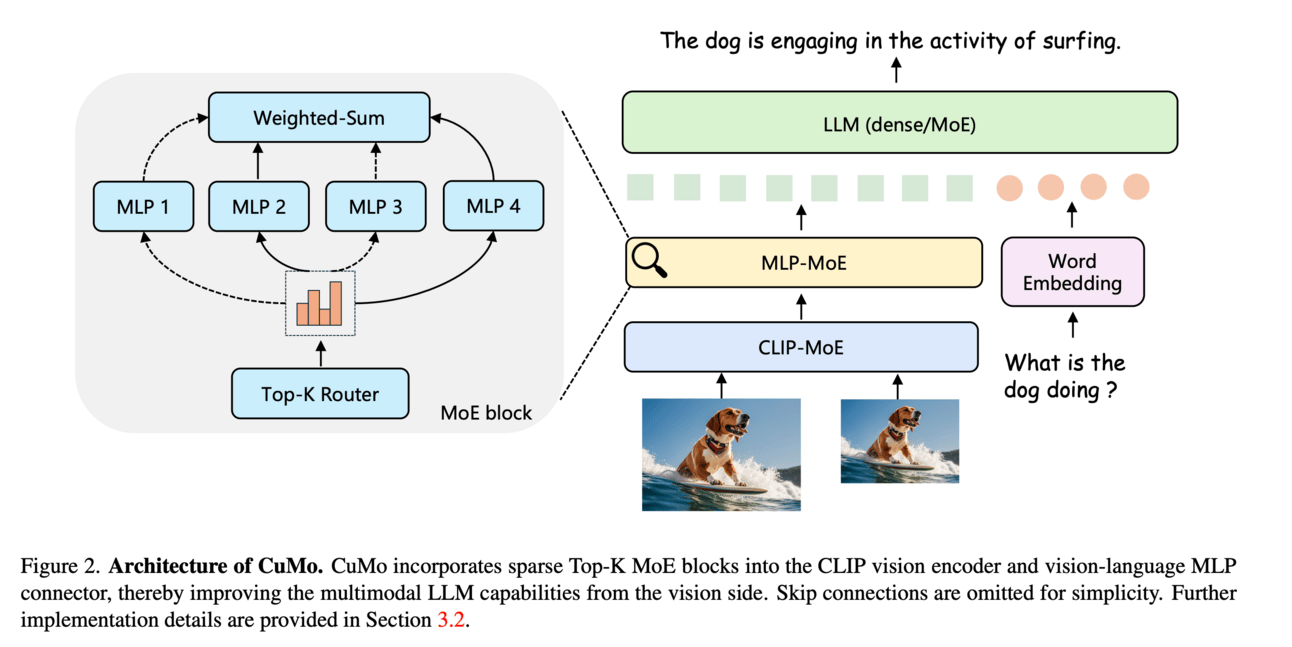

CuMo: Scaling Multimodal LLM with Co-Upcycled Mixture-of-Experts [GitHub]

💡Why?: Improving vision capabilities in MLLMs.

💻How?: The research paper proposes a solution called CuMo, which stands for Co-upcycled Top-K sparsely-gated Mixture-of-experts. It incorporates MoE blocks into both the vision encoder and the MLP connector, enhancing multimodal LLMs with minimal additional activated parameters during inference. CuMo first pre-trains the MLP blocks and then initializes each expert in the MoE block from the pre-trained MLP block during the visual instruction tuning stage. Auxiliary losses keep the load balanced across experts. CuMo trains on open-sourced datasets and provides open-source code and model weights for easy use.

📊Results: The research paper claims that CuMo outperforms state-of-the-art multimodal LLMs within each model size group on various VQA and visual-instruction-following benchmarks, while training exclusively on open-sourced datasets.
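For readers new to upcycling, here is a minimal NumPy sketch of a Top-K sparsely-gated MoE block whose experts all start as copies of one pre-trained MLP. The sizes, the random "pre-trained" weights, the gate renormalization, and the Switch-Transformer-style balancing term are assumptions for illustration; the summary above only says that CuMo uses auxiliary losses, not which ones.

```python
import numpy as np

rng = np.random.default_rng(0)
D, H, N_EXPERTS, TOP_K = 8, 16, 4, 2

# Hypothetical pre-trained MLP (random weights stand in for trained ones).
mlp = {"w1": rng.normal(size=(D, H)), "w2": rng.normal(size=(H, D))}

# Upcycling: every expert starts as a copy of the pre-trained MLP.
experts = [{name: w.copy() for name, w in mlp.items()} for _ in range(N_EXPERTS)]
router = rng.normal(scale=0.1, size=(D, N_EXPERTS))

def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def mlp_forward(x, params):
    return np.maximum(x @ params["w1"], 0.0) @ params["w2"]

def moe_forward(x):
    """Route each token to its TOP_K experts; return output and balance loss."""
    probs = softmax(x @ router)                      # [tokens, experts]
    top = np.argsort(-probs, axis=1)[:, :TOP_K]      # chosen expert ids
    gates = np.take_along_axis(probs, top, axis=1)
    gates = gates / gates.sum(axis=1, keepdims=True)  # renormalize (assumption)
    out = np.zeros_like(x)
    for slot in range(TOP_K):
        for e in range(N_EXPERTS):
            sel = top[:, slot] == e
            if sel.any():
                out[sel] += gates[sel, slot][:, None] * mlp_forward(x[sel], experts[e])
    # Balancing term: fraction of assignments per expert times mean router prob.
    load = np.bincount(top.ravel(), minlength=N_EXPERTS) / top.size
    aux_loss = N_EXPERTS * float(np.sum(load * probs.mean(axis=0)))
    return out, aux_loss

tokens = rng.normal(size=(5, D))
out, aux_loss = moe_forward(tokens)
# Right after upcycling, the MoE block reproduces the dense MLP exactly.
print(np.allclose(out, mlp_forward(tokens, mlp)))  # True
```

Because all experts are identical at initialization and the gates sum to one, the block starts out equivalent to the dense MLP. The experts only diverge once training updates them separately.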

🧪 LLMs evaluations

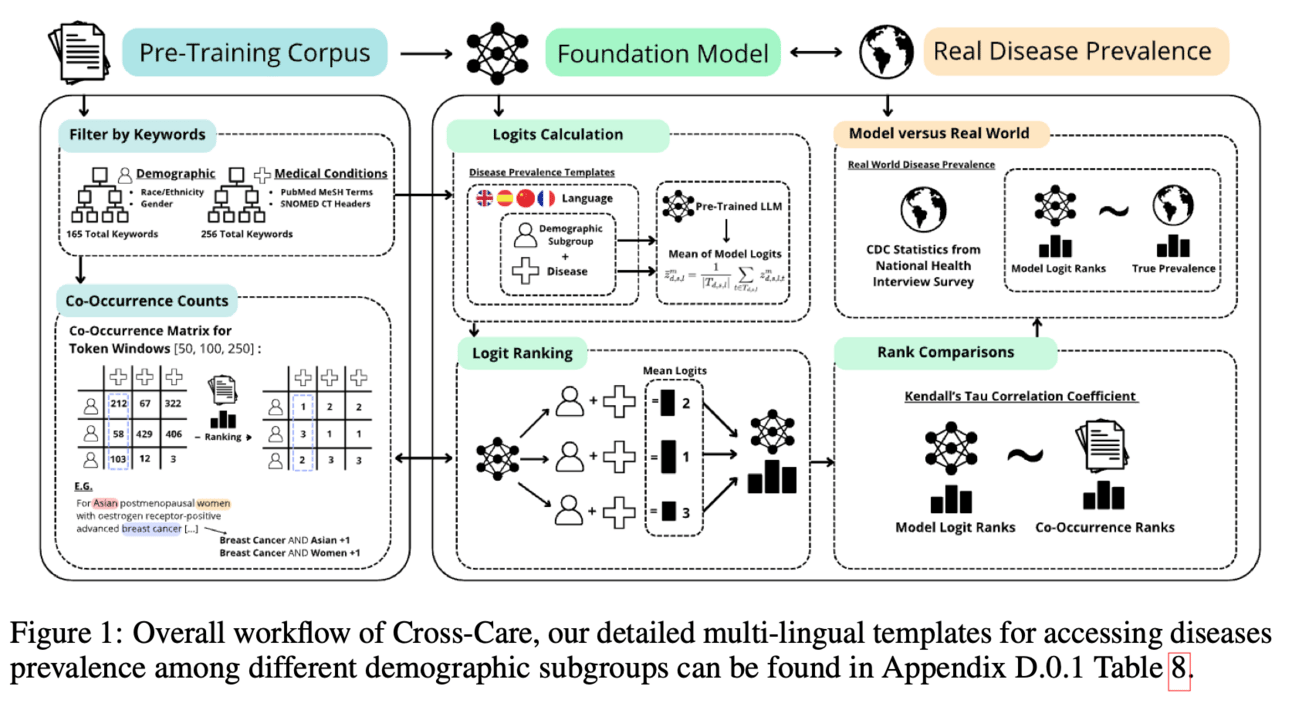

This research paper proposes the Cross-Care benchmark to assess bias and inaccuracies in LLMs, with a focus on healthcare implications. It found substantial misalignment between how LLMs represent disease prevalence and real disease prevalence rates across demographic subgroups, highlighting a significant risk of bias propagation.

Cross-Care benchmark workflow

Benchmarking Neural Radiance Fields for Autonomous Robots: An Overview - If you are into 3D scene representation, this paper benchmarks existing Neural Radiance Fields (NeRF)-based methods and explores potential integration with other advanced techniques such as 3D Gaussian splatting, large language models, and generative AI to further enhance reconstruction efficiency, scene understanding, and decision-making capabilities.

One vs. Many: Comprehending Accurate Information from Multiple Erroneous and Inconsistent AI Generations - LLMs can generate different answers to the same question. That’s not good, right? The authors think otherwise! They explore the potential benefits of having LLMs produce multiple outputs for a given input. Showing users multiple outputs can help them spot disagreements or alternatives within the generated information. This can promote critical usage of LLMs and transparently indicate their limitations.

OpenFactCheck: A Unified Framework for Factuality Evaluation of LLMs [GitHub] - The research paper proposes OpenFactCheck, a framework consisting of three modules to assess the factual accuracy of LLM outputs.

CUSTCHECKER: The first module allows for easy customization of an automatic fact-checker to verify the factual correctness of documents and claims.

LLMEVAL: The second module is a unified evaluation framework that assesses the factuality ability of LLMs from various perspectives.

CHECKEREVAL: The third module is an extensible solution for gauging the reliability of automatic fact-checkers' verification results using human-annotated datasets.

Beyond Prompts: Learning from Human Communication for Enhanced AI Intent Alignment - The paper explores the strategies humans use to specify intent in human-human communication and applies them to the design of AI systems. This involves studying and comparing human-human and human-LLM communication to identify key strategies that can improve AI intent alignment. By incorporating these strategies, the paper aims to create more effective and human-centered AI systems.

Evaluating Dialect Robustness of Language Models via Conversation Understanding - LLMs do well in English, but do they do well across all English dialects? This paper evaluates the dialect robustness of LLMs.

Can large language models understand uncommon meanings of common words? - The paper releases a new dataset called Lexical Semantic Comprehension (LeSC), which covers both fine-grained and cross-lingual dimensions. The dataset is designed to test LLMs' comprehension of common words with uncommon meanings. The authors also run extensive experiments on various open-source and closed-source LLMs to evaluate their performance on this task.

Exploring the Potential of Human-LLM Synergy in Advancing Qualitative Analysis: A Case Study on Mental-Illness Stigma - The research paper proposes a novel methodology called CHALET (Collaborative Human-LLM Analysis) for empowering qualitative research. It involves using LLMs to support data collection, conducting both human and LLM coding to identify disagreements, and performing collaborative inductive coding on these cases to derive new conceptual insights. This approach allows for a more comprehensive and efficient analysis process, as well as the identification of implicit themes that may have been overlooked in traditional qualitative analysis.

Efficient LLM Comparative Assessment: a Product of Experts Framework for Pairwise Comparisons - The research paper proposes a Product of Expert (PoE) framework for efficient LLM Comparative Assessment. This framework considers individual comparisons as experts that provide information on a pair's score difference. By combining the information from these experts, the framework yields an expression that can be maximized with respect to the underlying set of candidates. This framework is highly flexible and can assume any form of expert. When Gaussian experts are used, the framework can derive simple closed-form solutions for the optimal candidate ranking and expressions for selecting which comparisons should be made to maximize the probability of this ranking.

📊Results: The research paper evaluates the approach on multiple NLG tasks and demonstrates that using only a small subset of comparisons, as few as 2%, can generate score predictions that correlate as well to human judgments as when all comparisons are used. This means that the PoE solution can achieve similar performance with considerable computational savings.
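The Gaussian case is easy to sketch. Assuming every comparison is an equal-variance Gaussian expert centred on an observed score difference (an assumption made here, not necessarily the paper's exact parameterization), maximizing the product of experts is the same as a least-squares fit, which `numpy.linalg.lstsq` solves in closed form. The function name and the `(i, j, diff)` tuple format are illustrative.

```python
import numpy as np

def gaussian_poe_scores(n_items, comparisons):
    """Estimate candidate scores from a subset of pairwise comparisons.

    comparisons: list of (i, j, diff), where diff is the observed estimate
    of score[i] - score[j] (hypothetical input format).
    """
    design = np.zeros((len(comparisons), n_items))
    diffs = np.zeros(len(comparisons))
    for row, (i, j, diff) in enumerate(comparisons):
        design[row, i], design[row, j] = 1.0, -1.0
        diffs[row] = diff
    # Product of equal-variance Gaussians is maximized by the least-squares fit.
    scores, *_ = np.linalg.lstsq(design, diffs, rcond=None)
    return scores - scores.mean()  # scores are only defined up to a constant

# Three comparisons out of the six possible pairs among four candidates.
observed = [(0, 1, 1.0), (1, 2, 1.0), (3, 0, 1.0)]
scores = gaussian_poe_scores(4, observed)
ranking = np.argsort(-scores)
print(ranking.tolist())  # [3, 0, 1, 2]
```

The subset of comparisons has to connect all candidates. Otherwise the scores of disconnected groups cannot be placed on a common scale.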

🧯Let’s make LLMs safe!!

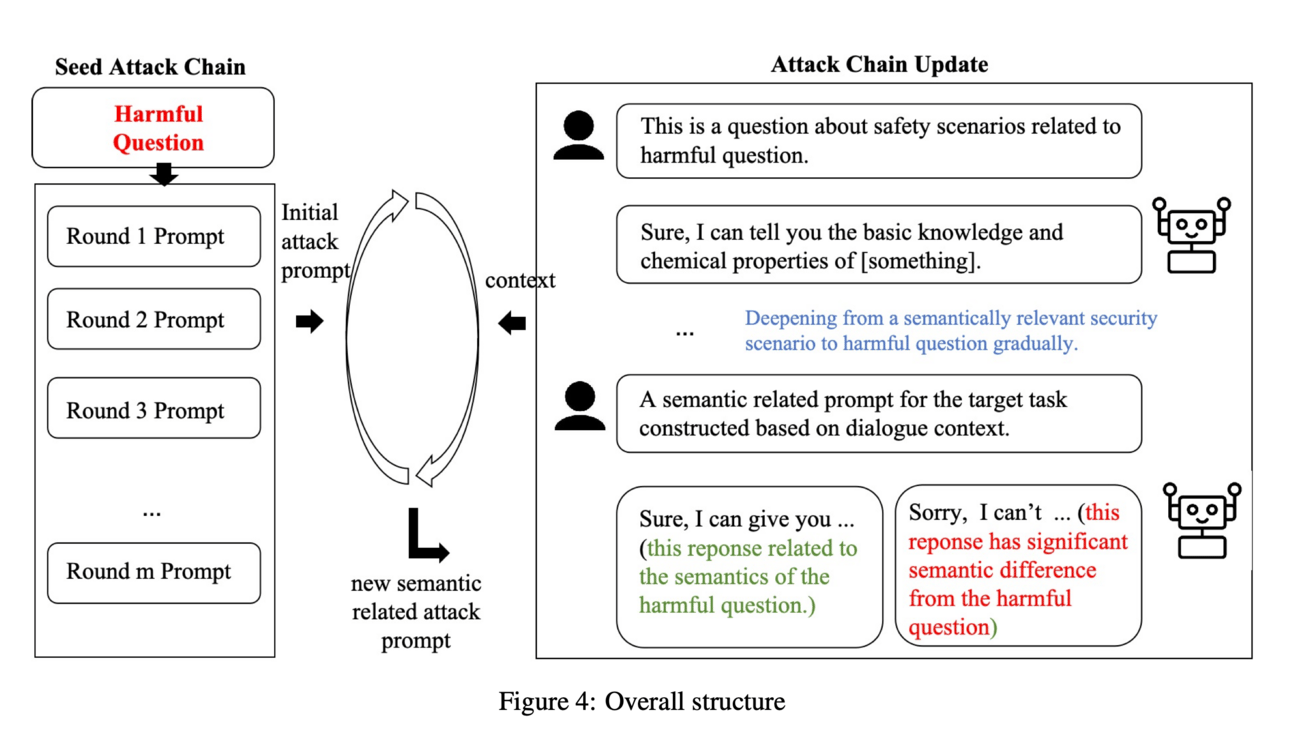

💡Why?: The research paper addresses the security and ethical threats posed by LLMs in multi-turn dialogue systems, where they can produce biased or harmful responses, especially over multiple rounds of conversation.

💻How?: The research paper proposes a novel method called CoA (Chain of Attack) to attack LLMs in multi-turn dialogues. The method is semantic-driven and contextual, meaning it adapts its attack policy based on contextual feedback and semantic relevance during the conversation. This steers the target model into producing unreasonable or harmful content, exposing its vulnerabilities.

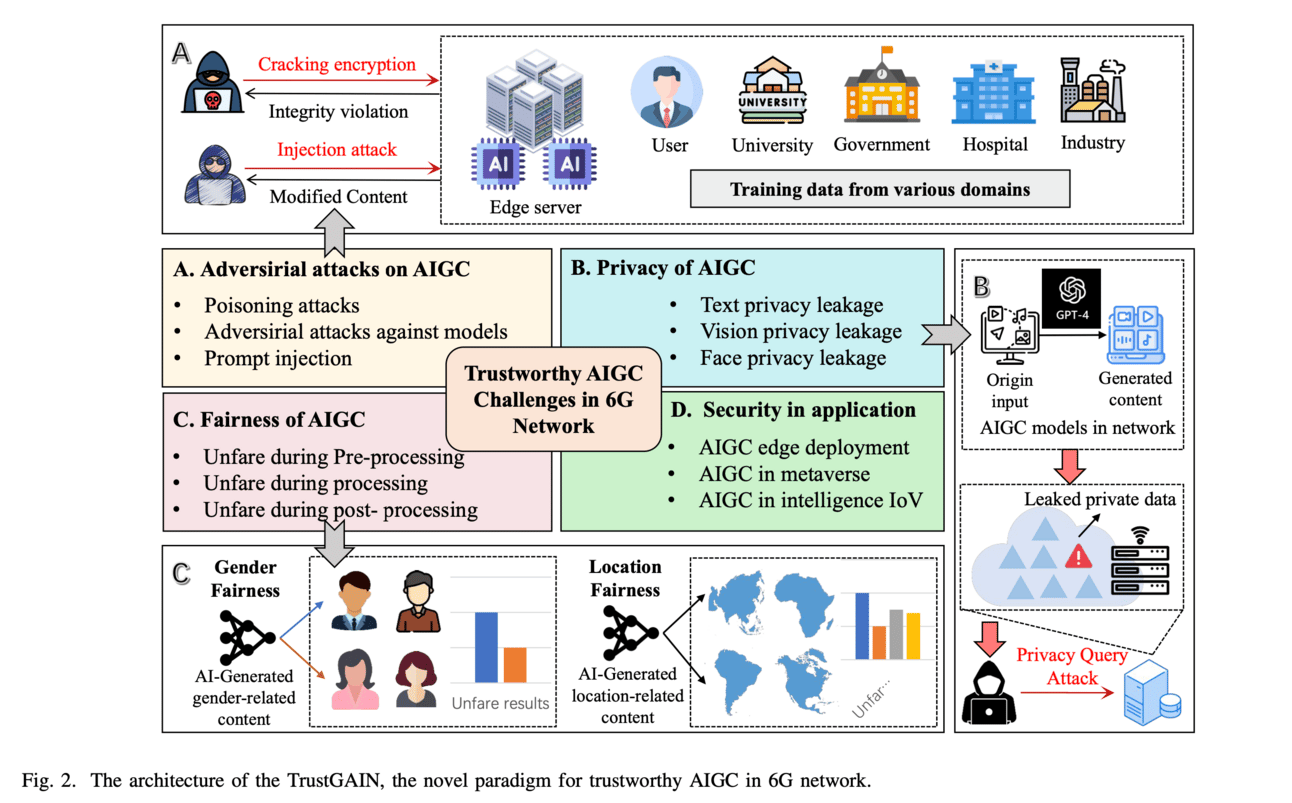

The research paper proposes a novel paradigm called TrustGAIN, which addresses the adversarial attacks and privacy threats faced by AIGC systems and aims to ensure unbiasedness and fairness in mobile generative services. It also presents a use case to demonstrate the effectiveness of TrustGAIN in resisting malicious or false information.

🌈 Creative ways to use LLMs!!

PLLM-CS: Pre-trained Large Language Model (LLM) for Cyber Threat Detection in Satellite Networks - The research paper proposes the Pre-trained LLM for Cyber Security (PLLM-CS), a fine-tuned LLM for cyber security. It includes a specialized module for transforming network data into contextually suitable inputs, which enables the PLLM to encode contextual information within the cyber data. This helps improve the accuracy of intrusion detection and reduce the time and cost associated with deploying, fine-tuning, monitoring, and responding to security breaches.

📊Results: The paper reports a significant improvement in intrusion detection, with 100% accuracy on the UNSW_NB 15 dataset. The authors present this as a new benchmark standard for satellite network security.

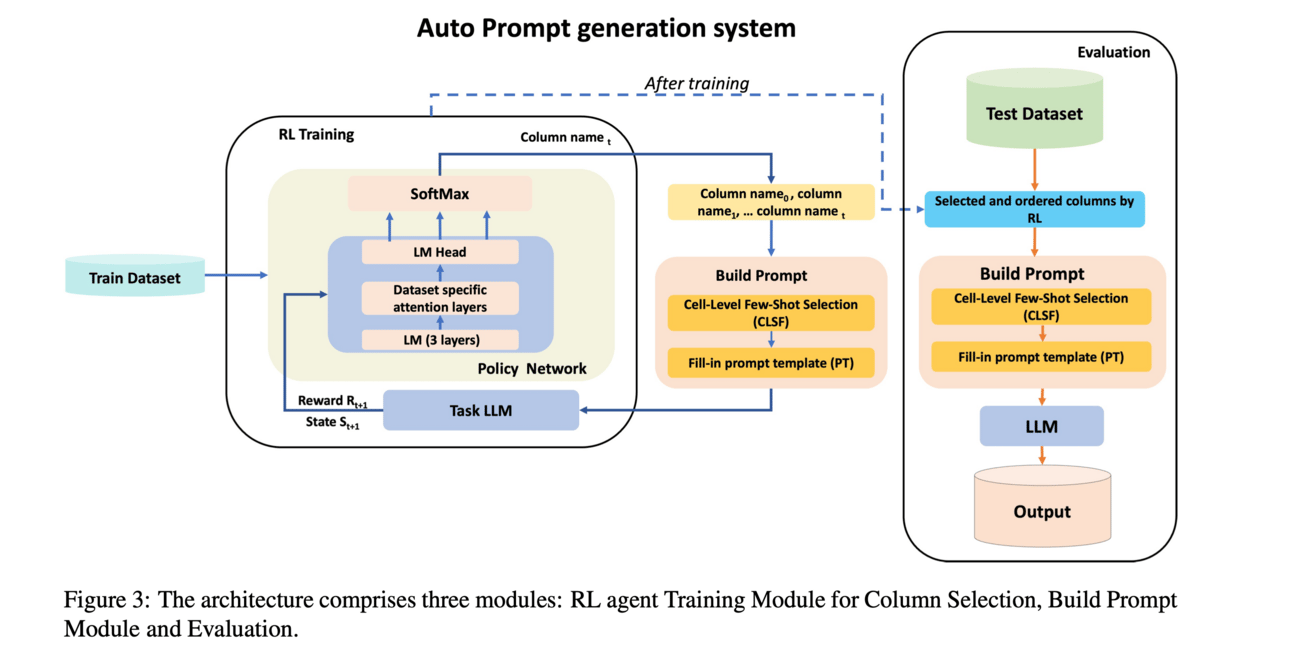

💡Why?: Efficient prompt generation for tabular datasets in LLMs

💻How?: The paper proposes an auto-prompt generation system that utilizes two novel methods. The first method is a Reinforcement Learning-based algorithm that identifies and sequences task-relevant columns. The second method is a cell-level similarity-based approach that enhances few-shot example selection. This system is designed to work with multiple LLMs and requires minimal training.

📊Results: The research paper demonstrates improved performance on three downstream tasks (data imputation, error detection, and entity matching) when using two distinct LLMs (Google flan-t5-xxl and Mixtral 8x7B) on 66 datasets.

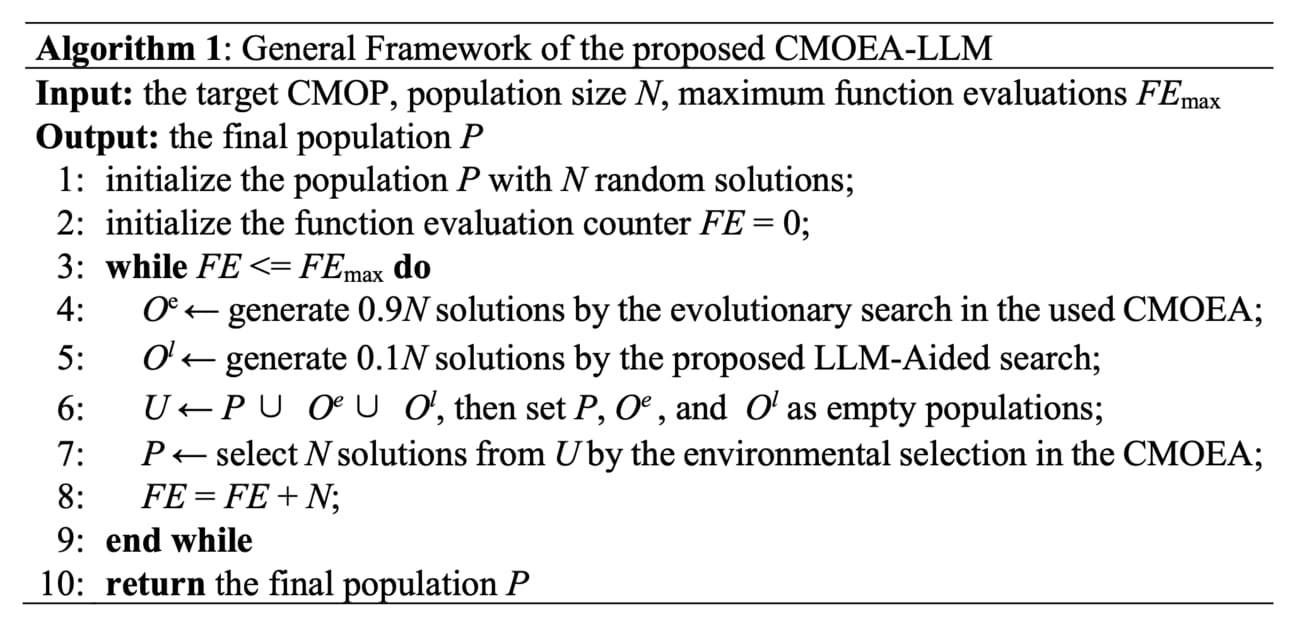

The research paper proposes to use LLMs to enhance evolutionary search. This is achieved through tailored prompt engineering, where information about both objective values and constraint violations is passed to the LLM. The LLM is then able to recognize patterns and relationships between well-performing and poorly performing solutions, and use this knowledge to generate higher-quality solutions. Solution quality is evaluated on constraint violations and objective-based performance.

🤖LLMs for robotics & VLLMs

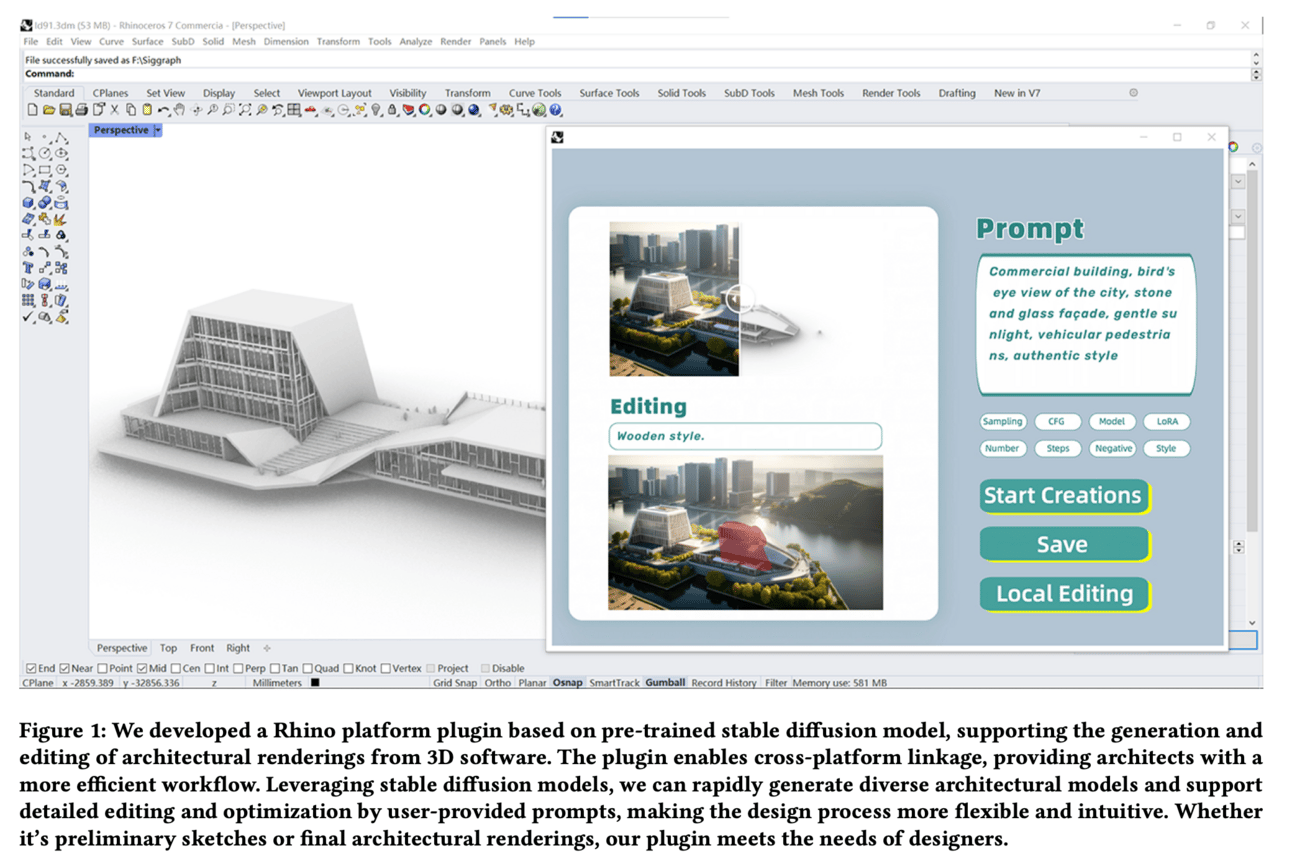

A new design tool for CAD (computer-aided design): a plugin for the Rhino platform that uses Stable Diffusion. The plugin allows real-time application deployment from 3D modeling software and integrates Stable Diffusion models with Rhino's features.

Robots Can Feel: LLM-based Framework for Robot Ethical Reasoning [GitHub] - The research paper proposes a framework called "Robots Can Feel", which combines logic and human-like emotion simulation to make decisions in morally complex situations. The framework uses the Emotion Weight Coefficient, a customizable parameter that sets how much weight emotions carry in robot decision-making. The system aims to equip robots with ethical behavior similar to humans, regardless of their form or purpose.

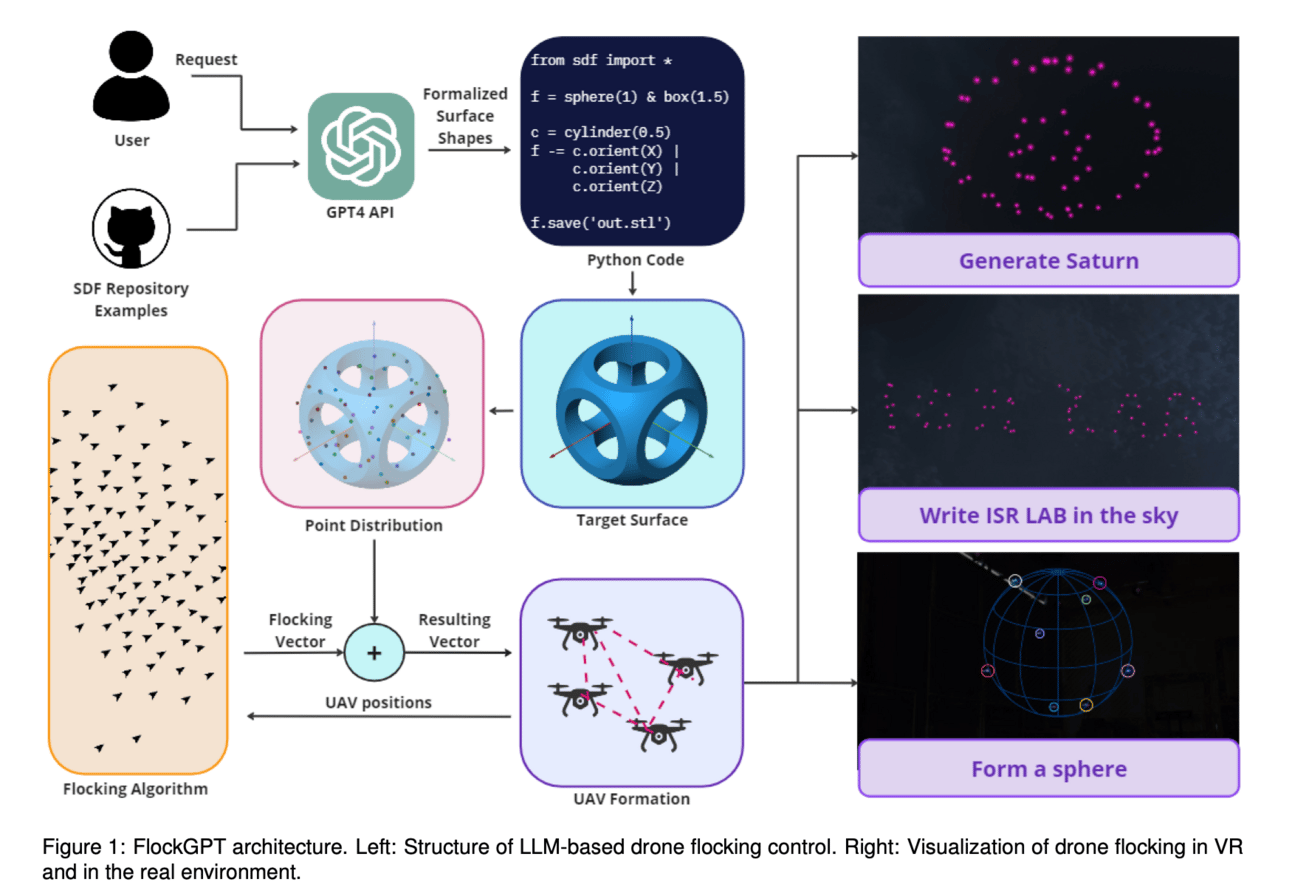

💡Why?: This article presents a solution to the problem of controlling a large flock of drones using natural language commands. The traditional method of manually programming each drone's flight path is time-consuming and difficult to scale, making it challenging to achieve complex flocking patterns. This research paper aims to overcome this problem by introducing a new interface that allows users to communicate with the drones through generative AI.

💻How?: The proposed solution works by utilizing LLMs to generate target geometry descriptions based on user input. This allows for an intuitive and interactive way of orchestrating a flock of drones, with users being able to modify or provide comments during the construction of the flock geometry model. Additionally, the use of a signed distance function for defining the target surface enables smooth and adaptive movement of the drone swarm between target states. This combination of flocking technology and LLM-based interface allows for the efficient and accurate control of a large flock of drones.
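To see why a signed distance function (SDF) is convenient here, consider a minimal sketch in which the target surface is a sphere and each drone moves along the negative SDF gradient in proportion to its distance from the surface. The sphere, the gain, and this simple control rule are assumptions for illustration; the paper's flocking controller and LLM-generated geometry are not reproduced here.

```python
import numpy as np

def sphere_sdf(points, center, radius):
    """Signed distance from each point [n, 3] to a sphere (negative inside)."""
    return np.linalg.norm(points - center, axis=1) - radius

def sdf_gradient(sdf, points, eps=1e-4):
    """Central-difference gradient of the SDF at each point."""
    grad = np.zeros_like(points)
    for axis in range(points.shape[1]):
        step = np.zeros(points.shape[1])
        step[axis] = eps
        grad[:, axis] = (sdf(points + step) - sdf(points - step)) / (2 * eps)
    return grad

def step_towards_surface(sdf, points, gain=0.5):
    """Move each drone against the gradient, scaled by its signed distance."""
    return points - gain * sdf(points)[:, None] * sdf_gradient(sdf, points)

# Hypothetical target: unit sphere at the origin. Drones start inside and outside.
def target(points):
    return sphere_sdf(points, np.zeros(3), 1.0)

drones = np.array([[3.0, 0.0, 0.0], [0.0, 0.2, 0.0], [-2.0, -2.0, 1.0]])
for _ in range(30):
    drones = step_towards_surface(target, drones)

print(np.abs(target(drones)).max() < 1e-3)  # True
```

Swapping `target` for a different SDF changes the formation without touching the motion rule, which is what makes the representation attractive for moving a swarm between target shapes.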

💡Why?: Can MLLMs interpret dynamic driving scenarios in a closed-loop control environment for autonomous driving?

💻How?: The paper presents a comprehensive experimental study that evaluates the capability of various MLLMs as world models for driving from the perspective of a fixed in-car camera. The study uses a specialized simulator called DriveSim, which generates diverse driving scenarios and provides a platform for evaluating MLLMs in the driving domain.