🔬Core research improving LLMs!

💡Why?: Current methods train LLMs on fixed-length token sequences built by concatenating documents and chunking them. This leads to cross-document attention within a sequence, which is neither an ideal learning signal nor computationally efficient. Training on long sequences is also difficult because of the quadratic cost of attention.

💻How?: Paper proposes a technique called "dataset decomposition" which breaks down a dataset into multiple buckets, each containing sequences of the same size extracted from a unique document. During training, variable sequence length and batch size are used, with samples being taken from all buckets simultaneously. This approach incurs a penalty proportional to the actual document lengths at each step, resulting in significant time savings. Compared to the baseline approach of concatenating and chunking, which incurs a fixed attention cost at every step, the proposed method is more efficient in terms of both time and computational resources.
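
A minimal sketch of the bucketing idea, assuming each document is split greedily into power-of-two chunks (the split rule, `max_exp`, and the fixed tokens-per-batch budget are assumptions for illustration, not details taken from the summary above). Every chunk comes from a single document, so no sequence ever attends across document boundaries.

```python
from collections import defaultdict


def decompose(doc_tokens, max_exp=13):
    """Split one document into chunks whose lengths are powers of two.

    Greedy split (an assumption for this sketch): repeatedly take the
    largest power-of-two chunk that still fits, capped at 2**max_exp.
    """
    chunks = []
    start = 0
    remaining = len(doc_tokens)
    while remaining > 0:
        exp = min(remaining.bit_length() - 1, max_exp)
        size = 1 << exp
        chunks.append(doc_tokens[start:start + size])
        start += size
        remaining -= size
    return chunks


def build_buckets(docs, max_exp=13):
    """Group chunks by length; each bucket holds same-length sequences."""
    buckets = defaultdict(list)
    for doc in docs:
        for chunk in decompose(doc, max_exp):
            buckets[len(chunk)].append(chunk)
    return buckets


def batch_size_for(seq_len, tokens_per_batch):
    """Keep tokens per step roughly constant: short sequences get larger batches."""
    return max(1, tokens_per_batch // seq_len)


# Toy "documents" of 13, 6, and 4 tokens.
docs = [list(range(13)), list(range(6)), list(range(4))]
buckets = build_buckets(docs)
bucket_sizes = {length: len(chunks) for length, chunks in sorted(buckets.items())}
print(bucket_sizes)
print(batch_size_for(8, tokens_per_batch=64))
```

Sampling a bucket per step and pairing it with `batch_size_for` is one way to get the variable sequence length and batch size described above.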

📊Results: Experiments on a web-scale corpus show that the approach significantly improves performance on standard language evaluations and long-context benchmarks.

💡Why?: The quadratic complexity of self-attention, together with the length-extrapolation issues caused by pretraining exclusively on short inputs, makes long-context modeling difficult for LLMs.

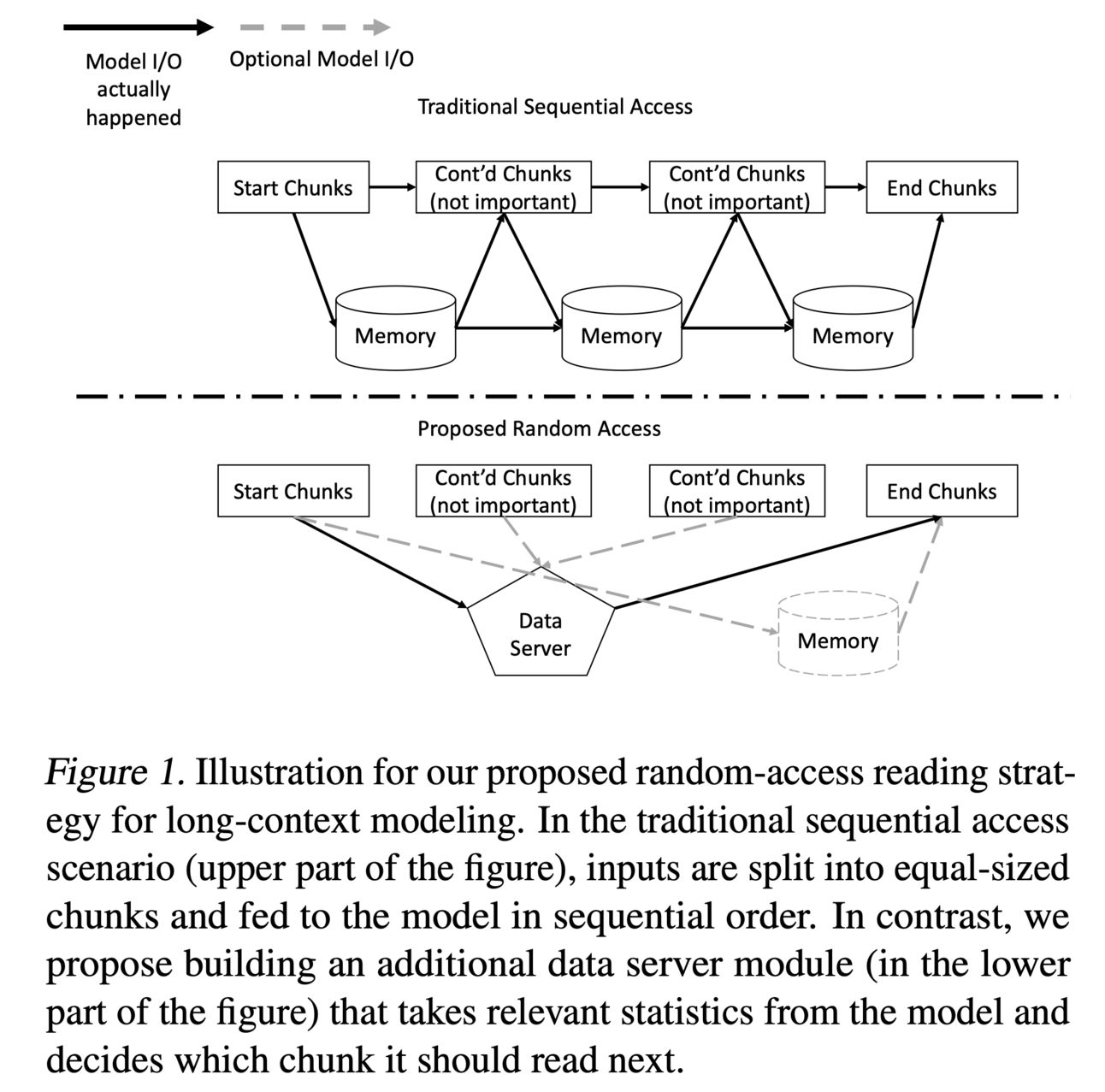

💻How?: Paper proposes a novel reading strategy called "random access". This strategy is inspired by human reading behaviours and existing empirical observations. It allows transformers to efficiently process long documents without examining every token. This is achieved by training the model to omit hundreds of less pertinent tokens and only focus on relevant information. This reduces the computational complexity and improves the model's ability to handle long-context modeling.

📊Results: Experiments across the pretraining, fine-tuning, and inference phases validate the method. The paper reports significant improvements in long-context modeling over existing approaches such as text chunking, the kernel approach, and structured attention, with faster processing and better accuracy in goal-oriented reading of long documents.

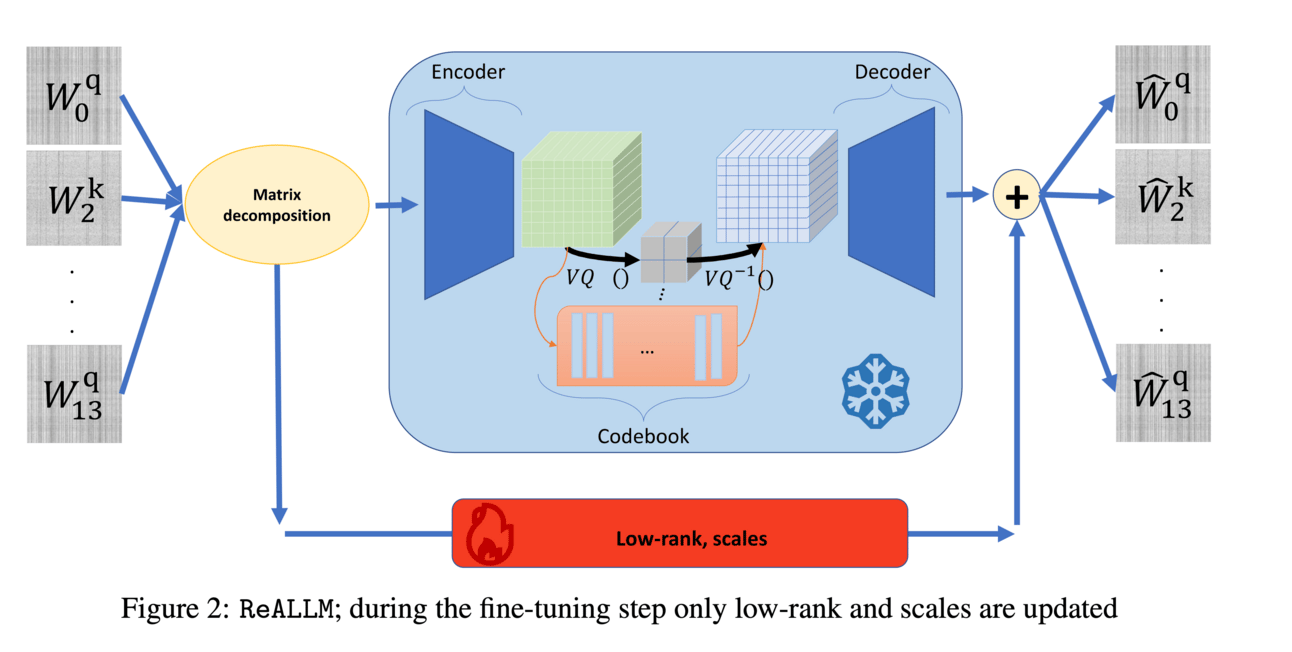

💡Why?: Compression and memory-efficient adaptation of pre-trained language models.

💻How?: The research paper proposes a novel approach called ReALLM, which decomposes pre-trained matrices into a high-precision low-rank component and a vector-quantized latent representation using an autoencoder. During fine-tuning, only the low-rank components are updated. This approach adapts the shape of the encoder to each matrix, resulting in a small embedding on a few bits and a neural decoder model with its weights on a few bits. The decompression of a matrix only requires one embedding and a single forward pass with the decoder, making it memory-efficient.

📊Results: The research paper reports that ReALLM achieves state-of-the-art performance on language generation tasks (C4 and WikiText-2) for a budget of 3 bits without any training. With a budget of 2 bits, ReALLM achieves even better results after fine-tuning on a small calibration dataset.

💡Why?: Improving long-form responses generated by LLMs.

💻How?: The research paper proposes a technique called Atomic Self-Consistency (ASC) which aims to improve the recall of relevant information in LLM responses. This is achieved by using multiple stochastic samples from an LLM and merging relevant subparts from these samples to create a more accurate and comprehensive response. Unlike previous approaches, which only select the best single generation, ASC combines authentic subparts from multiple samples to create a superior composite answer.
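
A rough sketch of the merging step, under simplifying assumptions: sentences stand in for atomic subparts, exact matching after normalization stands in for clustering, and "appears in at least `min_support` samples" stands in for the consistency check. All function names and the sample texts are hypothetical, and this is not the paper's actual pipeline.

```python
import re
from collections import OrderedDict


def atomic_parts(response):
    """Split a response into sentence-level parts (a stand-in for atomic facts)."""
    parts = re.split(r"(?<=[.!?])\s+", response.strip())
    return [p.strip() for p in parts if p.strip()]


def normalize(part):
    """Lowercase and drop punctuation so identical statements match."""
    return re.sub(r"[^a-z0-9 ]", "", part.lower()).strip()


def merge_samples(samples, min_support=2):
    """Keep parts that appear in at least `min_support` different samples."""
    support = OrderedDict()  # normalized part -> [first-seen text, sample ids]
    for i, sample in enumerate(samples):
        for part in atomic_parts(sample):
            key = normalize(part)
            if key not in support:
                support[key] = [part, set()]
            support[key][1].add(i)
    kept = [text for text, ids in support.values() if len(ids) >= min_support]
    return " ".join(kept)


samples = [
    "Paris is the capital of France. It hosted the 1900 Olympics.",
    "Paris is the capital of France. It lies on the Seine.",
    "It lies on the Seine. Paris is the capital of Spain.",
]
merged = merge_samples(samples)
print(merged)
```

No single sample contains both surviving statements, which is the point of merging subparts instead of picking one best generation.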

📊Results: Through extensive experiments and ablations, the research paper shows that ASC outperforms previous approaches, such as Universal Self-Consistency (USC), on multiple factoid and open-ended QA datasets. ASC also demonstrates untapped potential for further enhancing long-form generations by merging multiple samples.

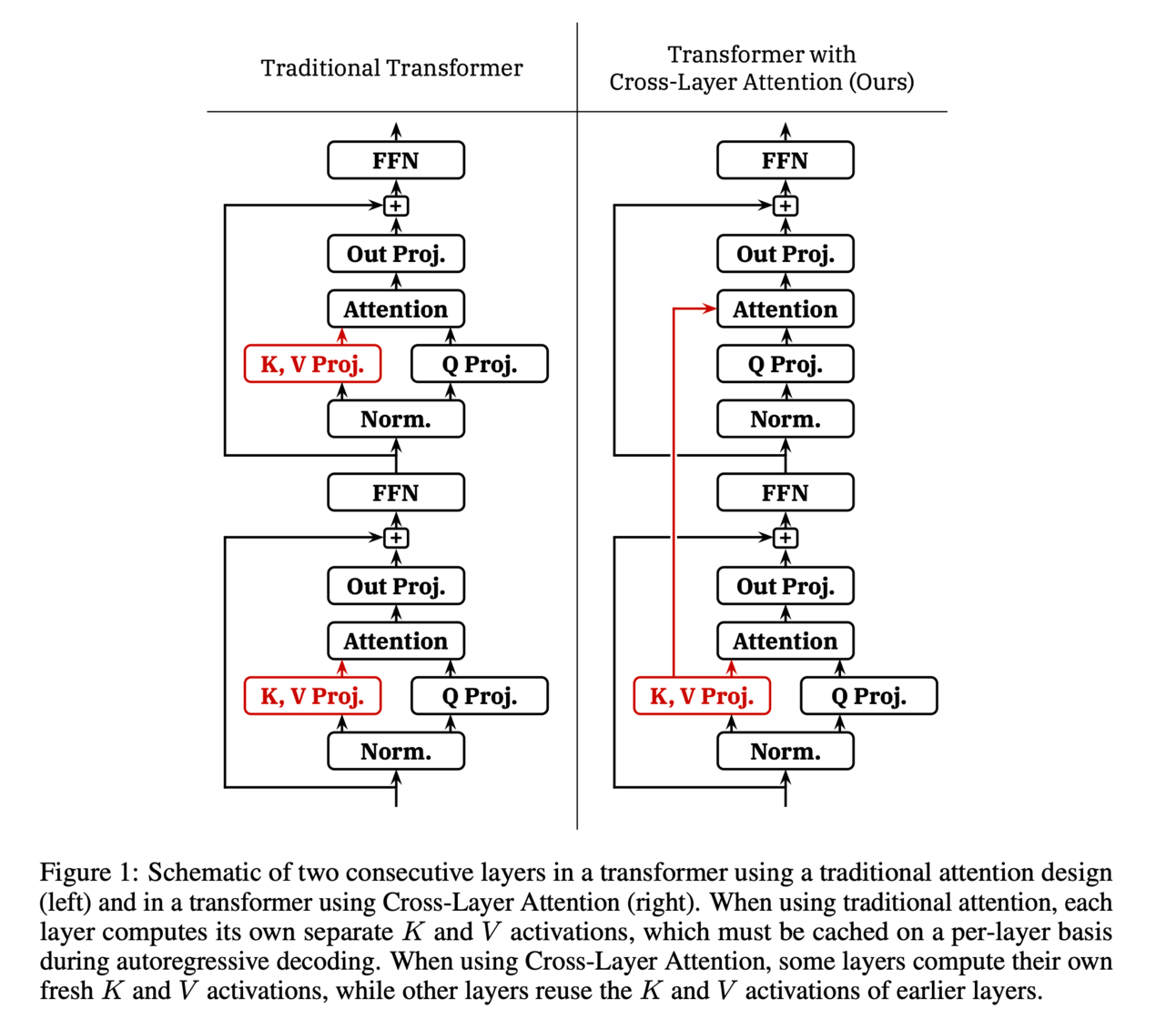

💡Why?: Reduce the memory footprint of key-value (KV) caching in autoregressive LLMs, which can become prohibitive at long sequence lengths and large batch sizes.

💻How?: Paper introduces a new attention design called Cross-Layer Attention (CLA), which builds on Multi-Query Attention (MQA) and its generalization, Grouped-Query Attention (GQA). MQA and GQA shrink the KV cache by letting multiple query heads share a single key/value head. CLA goes further by sharing key and value heads between adjacent layers, which cuts the KV cache size by another 2x while maintaining nearly the same accuracy as unmodified MQA.
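
The memory arithmetic can be sketched as follows. The model shape (24 layers, 16 query heads, head dimension 128), the fp16 storage, and the `layers_per_kv` parameter name are illustrative assumptions, not figures from the paper.

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, batch_size,
                   bytes_per_value=2, layers_per_kv=1):
    """Size of the KV cache in bytes.

    layers_per_kv: how many adjacent layers share one set of keys/values
    (1 means every layer stores its own, 2 means pairs of layers share).
    """
    kv_layers = -(-n_layers // layers_per_kv)  # ceiling division
    # Factor 2 accounts for storing both keys and values.
    return (2 * kv_layers * n_kv_heads * head_dim
            * seq_len * batch_size * bytes_per_value)


shape = dict(n_layers=24, head_dim=128, seq_len=4096, batch_size=8)

mha = kv_cache_bytes(n_kv_heads=16, **shape)                    # one KV head per query head
mqa = kv_cache_bytes(n_kv_heads=1, **shape)                     # all query heads share one KV head
mqa_cla2 = kv_cache_bytes(n_kv_heads=1, layers_per_kv=2, **shape)  # plus sharing across layer pairs

for name, size in [("MHA", mha), ("MQA", mqa), ("MQA + CLA2", mqa_cla2)]:
    print(f"{name}: {size / 2**20:.0f} MiB")
```

Sharing across pairs of adjacent layers halves the cache on top of whatever MQA or GQA already saves, because the two savings act on different factors of the product.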

📊Results: The research paper demonstrates that CLA provides a Pareto improvement over traditional MQA in terms of memory/accuracy tradeoffs, allowing for longer sequence lengths and larger batch sizes in inference. Experiments training 1B- and 3B-parameter models from scratch show the effectiveness of CLA in achieving this performance improvement.

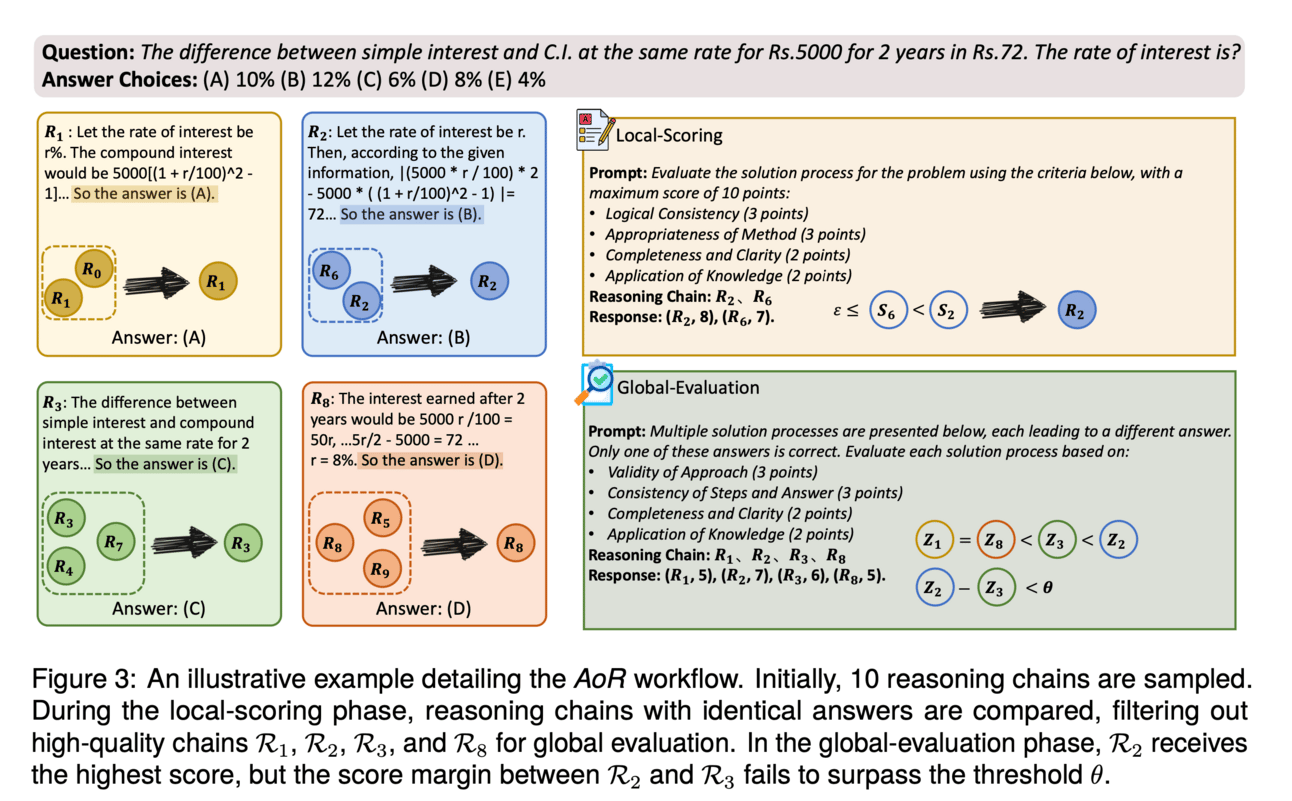

💡Why?: Limited reasoning capabilities of LLMs in complex reasoning tasks, particularly in scenarios where the correct answers are in the minority.

💻How?: Paper proposes AoR (Aggregation of Reasoning), a hierarchical reasoning aggregation framework. It selects answers based on an evaluation of the reasoning chains themselves and uses dynamic sampling to adjust the number of reasoning chains to the complexity of the task. Because the choice depends on the quality of the reasoning rather than on how often an answer appears, AoR can still pick the correct answer when it is in the minority.
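
A toy comparison of frequency-based voting and evaluation-based selection. The per-chain scores are hypothetical stand-ins for whatever evaluator rates the reasoning (in practice an LLM), and the two-step "best chains per answer, then compare answers" logic is a simplified assumption rather than the paper's exact procedure.

```python
from collections import Counter, defaultdict


def majority_vote(chains):
    """Baseline: pick the most frequent final answer."""
    return Counter(answer for answer, _ in chains).most_common(1)[0][0]


def aggregate_by_reasoning(chains, top_k=1):
    """Pick the answer whose best-rated reasoning chains score highest.

    chains: list of (answer, score) pairs, where score rates the reasoning.
    """
    groups = defaultdict(list)
    for answer, score in chains:
        groups[answer].append(score)

    def group_score(scores):
        best = sorted(scores, reverse=True)[:top_k]  # local step: best chains per answer
        return sum(best) / len(best)

    # Global step: compare answers by the quality of their representatives.
    return max(groups, key=lambda answer: group_score(groups[answer]))


# Three weakly reasoned chains agree on "42"; one well reasoned chain says "17".
chains = [("42", 0.35), ("42", 0.40), ("42", 0.30), ("17", 0.90)]
print(majority_vote(chains))
print(aggregate_by_reasoning(chains))
```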

📊Results: The research paper shows that AoR outperforms other prominent ensemble methods in a series of complex reasoning tasks. Further analysis reveals that AoR not only adapts to various LLMs but also achieves a superior performance ceiling compared to current methods. This improvement in performance demonstrates the effectiveness of AoR in addressing the limited reasoning capabilities of LLMs.

💡Why?: Establish a mathematical foundation for the Adam optimizer and improve its performance in various domains, including LLM, ASR, and VQ-VAE.

💻How?: Paper elucidates the connection between the Adam optimizer and natural gradient descent through Riemannian and information geometry. It rigorously analyzes the diagonal empirical Fisher information matrix (FIM) in Adam and suggests using log probability functions as the loss, based on discrete distributions. It also uncovers flaws in the original Adam algorithm and suggests corrections such as enhanced momentum calculations, adjusted bias corrections, adaptive epsilon, and gradient clipping. Additionally, it refines the weight decay term based on this theoretical framework.
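
For reference, the textbook Adam update can be written as below, with gradient $g_t$, step size $\alpha$, and decay rates $\beta_1, \beta_2$. This is the standard form only; the paper's corrected variant is not reproduced here.

$$
\begin{aligned}
m_t &= \beta_1 m_{t-1} + (1-\beta_1)\, g_t \\
v_t &= \beta_2 v_{t-1} + (1-\beta_2)\, g_t \odot g_t \\
\hat{m}_t &= \frac{m_t}{1-\beta_1^t}, \qquad \hat{v}_t = \frac{v_t}{1-\beta_2^t} \\
\theta_{t+1} &= \theta_t - \alpha\, \frac{\hat{m}_t}{\sqrt{\hat{v}_t} + \epsilon}
\end{aligned}
$$

When the loss is a negative log-likelihood, $v_t$ is a running average of squared gradients, which estimates the diagonal of the empirical Fisher matrix $\mathbb{E}[g g^\top]$. A natural gradient step would precondition the gradient with $F^{-1}$, whereas Adam preconditions with the inverse square root of its diagonal estimate.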

📊Results: The modified optimizer achieves superior performance across LLM, ASR, and VQ-VAE tasks, including state-of-the-art results in ASR.

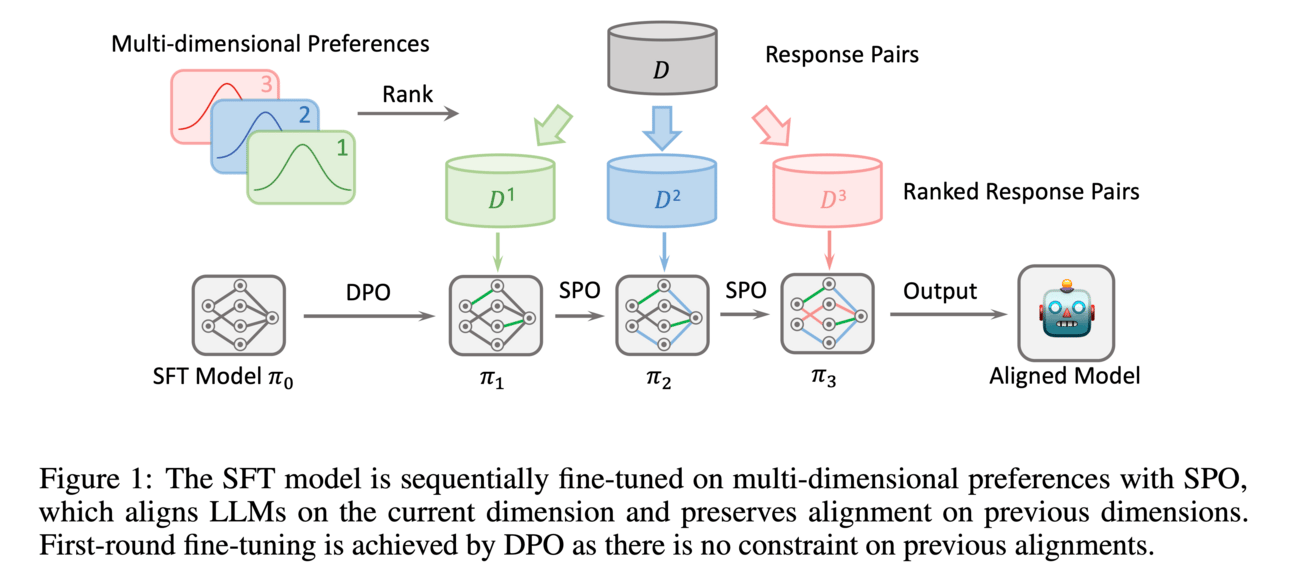

💡Why?: Aligning LLMs with human preference.

💻How?: Paper proposes Sequential Preference Optimization (SPO) method, a fine-tuning process that sequentially aligns LLMs with multiple dimensions of human preferences. This is achieved by optimizing the models without the need for explicit reward modeling, which can be complex and difficult to manage. SPO uses a closed-form optimal policy and loss function to achieve this alignment. This means that the LLMs are directly optimized to align with nuanced human preferences, rather than using a predefined reward model.

📊Results: Empirical results on LLMs of different sizes and multiple evaluation datasets show that SPO successfully aligns LLMs across multiple dimensions of human preferences and significantly outperforms the baseline methods.

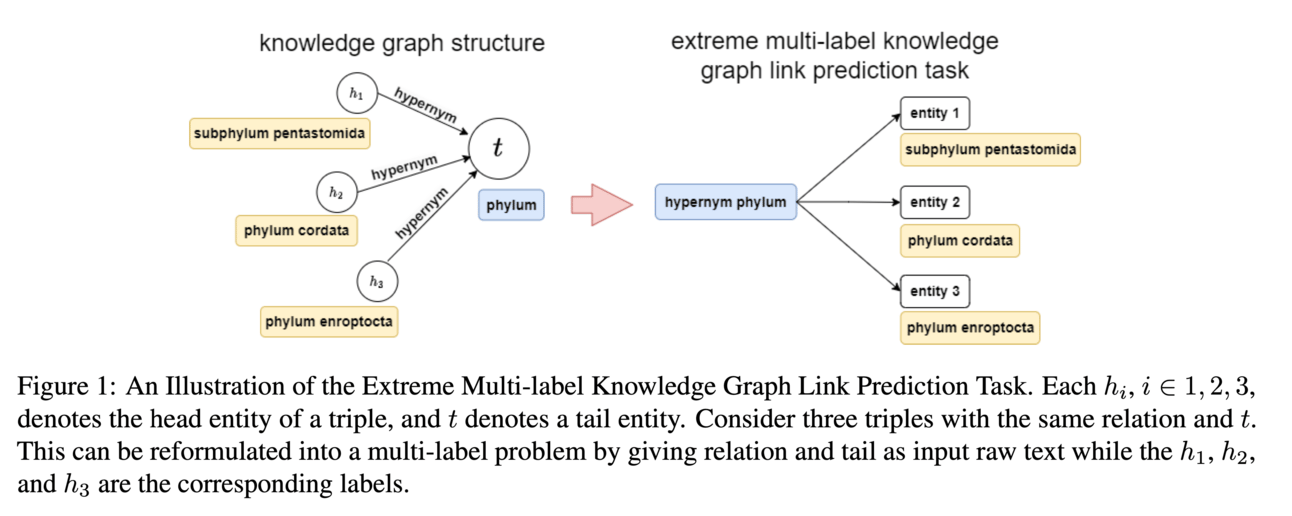

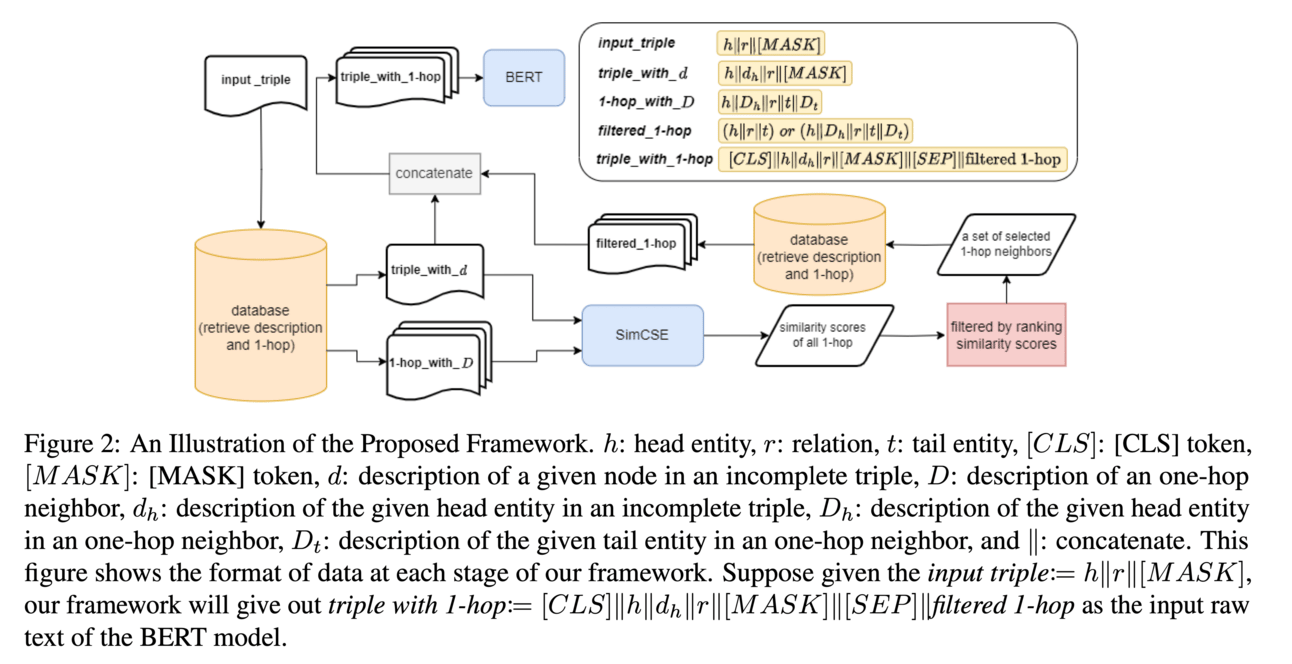

Retrieval-Augmented Language Model for Extreme Multi-Label Knowledge Graph Link Prediction - project page

💡Why?: Reducing hallucination in LLMs.

💻How?: Paper proposes a new task called the extreme multi-label KG link prediction task. This task enables a model to perform extrapolation with multiple responses using structured real-world knowledge. The proposed framework, called Retrieval-Augmented Language Model, consists of a retriever that identifies relevant one-hop neighbours in a knowledge graph, and a language model that is augmented with textual data. The retriever considers entity, relation, and textual data together to identify relevant information from a large one-hop neighborhood in the knowledge graph. This framework is able to extrapolate based on a given knowledge graph with a small parameter size.

📊Results: The paper does not report a headline performance figure, but its experiments show that KGs with different characteristics require different augmenting strategies, and that augmenting the language model's input with textual data significantly improves task performance. This suggests that the proposed framework is effective in addressing the problem of extrapolation in LLMs.

💡Why?: Significant memory overhead in key-value caching for language models, which limits their practicality in deployment.

💻How?: The research paper proposes a novel data-free low-bit quantization technique called DecoQuant, based on tensor decomposition methods, to effectively compress key-value cache. This method adjusts the outlier distribution of the original matrix by performing tensor decomposition, which helps to migrate the quantization difficulties from the matrix to decomposed local tensors. The key idea is to apply low-bit quantization to large tensors, which tend to have a narrower value range, while maintaining high-precision representation for small tensors. This is achieved by using an efficient dequantization kernel tailored specifically for DecoQuant.
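
To see why a narrower value range matters for low-bit quantization, here is a small illustration using plain min-max uniform quantization. This is a generic scheme chosen for the demo, not DecoQuant's tensor decomposition, and the toy values are made up.

```python
def quantize_dequantize(values, bits=4):
    """Uniform min-max quantization followed by dequantization."""
    lo, hi = min(values), max(values)
    levels = (1 << bits) - 1
    scale = (hi - lo) / levels if hi > lo else 1.0
    codes = [round((v - lo) / scale) for v in values]  # integers in [0, levels]
    return [lo + c * scale for c in codes]


def mean_abs_error(values, bits=4):
    """Average reconstruction error after a quantize/dequantize round trip."""
    restored = quantize_dequantize(values, bits)
    return sum(abs(a - b) for a, b in zip(values, restored)) / len(values)


narrow = [i / 100 for i in range(-50, 51)]  # values spread over [-0.5, 0.5]
with_outlier = narrow + [20.0]              # same values plus one large outlier

err_narrow = mean_abs_error(narrow)
err_outlier = mean_abs_error(with_outlier)
print(f"narrow range: {err_narrow:.4f}, with outlier: {err_outlier:.4f}")
```

A single outlier stretches the quantization grid so that most values collapse onto one or two levels, which is the difficulty that moving outliers into small, high-precision tensors is meant to avoid.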

📊Results: Through extensive experiments, DecoQuant demonstrates remarkable efficiency gains, showcasing up to a 75% reduction in memory footprint while maintaining comparable generation quality. This shows that DecoQuant is an effective solution for compressing key-value cache and improving the performance of language models.

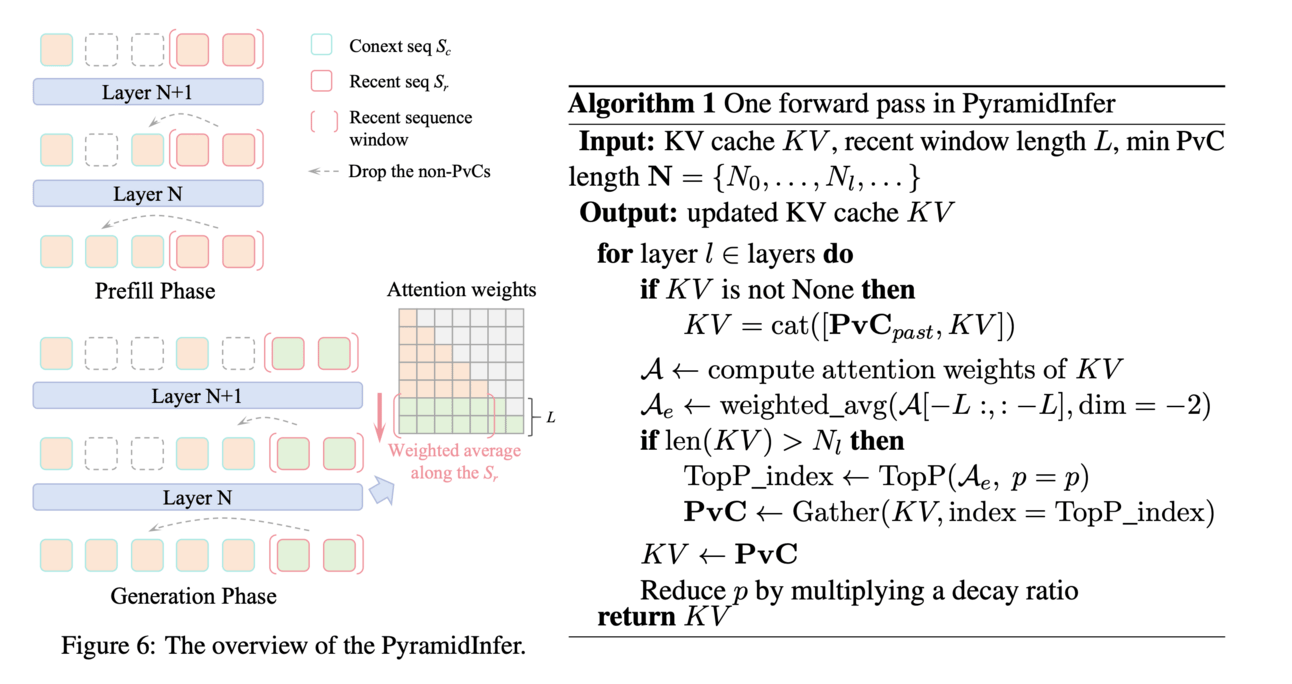

💡Why?: Optimize GPU usage during inference time

💻How?: The research paper proposes a method called PyramidInfer to compress the KV cache (computed keys and values) in the GPU memory by layer-wise retaining crucial context. This is done by identifying the crucial keys and values that influence future generations, which decrease layer by layer, and extracting them through consistency in attention weights. This method helps to reduce memory consumption in pre-computation and improves overall throughput without sacrificing performance.
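
A simplified sketch of layer-wise retention: score each context position by the average attention it receives from recent query tokens, keep the top fraction, and shrink that fraction in deeper layers. The linear schedule, the averaging rule, and the function names are assumptions for illustration and are not taken from the paper.

```python
def select_pivotal(attn_rows, keep_ratio):
    """Return indices of context positions to keep in the KV cache.

    attn_rows: attention weights from a few recent query tokens,
               one row per query, one column per context position.
    """
    n_ctx = len(attn_rows[0])
    avg = [sum(row[j] for row in attn_rows) / len(attn_rows) for j in range(n_ctx)]
    k = max(1, int(n_ctx * keep_ratio))
    top = sorted(range(n_ctx), key=lambda j: avg[j], reverse=True)[:k]
    return sorted(top)  # preserve original token order


def layer_keep_ratios(n_layers, first=1.0, last=0.25):
    """Linearly decreasing retention: deeper layers keep fewer keys/values."""
    if n_layers == 1:
        return [first]
    step = (first - last) / (n_layers - 1)
    return [first - i * step for i in range(n_layers)]


attn = [
    [0.1, 0.5, 0.1, 0.3],
    [0.2, 0.4, 0.1, 0.3],
]
print(select_pivotal(attn, keep_ratio=0.5))
print(layer_keep_ratios(4))
```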

📊Results: The research paper reports that PyramidInfer achieves 2.2x higher throughput than Accelerate while reducing the GPU memory used by the KV cache by over 54%.

💡Why?: LLMs struggle to process inputs of arbitrary length while retaining some degree of memory. This becomes a problem when the input length exceeds the LLM's pre-trained text length, resulting in a decline in text generation capabilities.

💻How?: To solve this problem, the research paper proposes a new model called Streaming Infinite Retentive LLM (SirLLM). SirLLM utilizes the Token Entropy metric and a memory decay mechanism to filter key phrases, allowing LLMs to have long-lasting and flexible memory during infinite-length dialogues without the need for fine-tuning. It works by continuously updating and filtering the key phrases in the input, allowing the model to retain important information and improve its memory capabilities.
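
A minimal sketch of entropy-based filtering with decay, assuming token entropy is the negative log-probability the model assigned to each token, and that older scores are multiplied by a decay factor on every update. The class name, capacity, decay value, and probabilities are all hypothetical.

```python
import math


def token_entropy(prob):
    """Surprisal of a token: rare (low-probability) tokens score higher."""
    return -math.log(prob)


class EntropyMemory:
    """Keep the highest-scoring tokens; older scores fade with each update."""

    def __init__(self, capacity, decay=0.9):
        self.capacity = capacity
        self.decay = decay
        self.entries = []  # list of [token, score], in arrival order

    def update(self, tokens_with_probs):
        # Memory decay: everything already stored becomes less important.
        for entry in self.entries:
            entry[1] *= self.decay
        for token, prob in tokens_with_probs:
            self.entries.append([token, token_entropy(prob)])
        # Keep the top `capacity` entries by score, preserving arrival order.
        ranked = sorted(range(len(self.entries)),
                        key=lambda i: self.entries[i][1], reverse=True)
        keep = sorted(ranked[:self.capacity])
        self.entries = [self.entries[i] for i in keep]

    def tokens(self):
        return [token for token, _ in self.entries]


memory = EntropyMemory(capacity=2, decay=0.5)
memory.update([("the", 0.9), ("Zanzibar", 0.01), ("is", 0.8)])
first_round = memory.tokens()
memory.update([("warm", 0.05)])
second_round = memory.tokens()
print(first_round, second_round)
```

Informative tokens survive across turns, while common ones are dropped once something more surprising arrives.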

📊Results: Experimental results show that SirLLM delivers stable and significant improvements in LLM performance across different tasks and models, supporting its ability to maintain long-term memory during conversations.

📚Survey papers

Generative AI and Large Language Models for Cyber Security: All Insights You Need - Explores the application of LLMs in hardware design security, intrusion detection, and malware detection.

🧯Let’s make LLMs safe!!

💡Why?: Limiting toxicity in LLM responses.

💻How?: Paper proposes an innovative training algorithm called adversarial DPO (ADPO) that builds upon the direct preference optimization (DPO) algorithm. ADPO trains the model to assign higher probabilities to preferred responses and lower probabilities to unsafe responses, which are generated using a toxic control token. This helps the model to become more resilient against harmful conversations while minimizing performance degradation. ADPO also offers a more stable training procedure compared to traditional DPO.
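
For context, the standard DPO loss for a single preference pair looks like the sketch below. Treating an unsafe, toxic-control-token response as the rejected sample is an assumption about how the adversarial variant plugs into this objective; ADPO's exact loss is not given in this summary, and the log-probabilities are made-up numbers.

```python
import math


def dpo_loss(policy_logp_chosen, policy_logp_rejected,
             ref_logp_chosen, ref_logp_rejected, beta=0.1):
    """Standard DPO loss for one (chosen, rejected) pair.

    Inputs are sequence log-probabilities under the trained policy and
    under a frozen reference model.
    """
    margin = beta * ((policy_logp_chosen - ref_logp_chosen)
                     - (policy_logp_rejected - ref_logp_rejected))
    # -log(sigmoid(margin)) written as log(1 + exp(-margin)).
    return math.log1p(math.exp(-margin))


# Policy identical to the reference: the loss is log(2).
neutral = dpo_loss(-12.0, -15.0, -12.0, -15.0)

# Policy raises the preferred response and lowers the unsafe one: loss drops.
improved = dpo_loss(-10.0, -20.0, -12.0, -15.0)

print(f"{neutral:.4f} {improved:.4f}")
```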

💻How?: The paper proposes a novel approach called Iterative Refinement Induced Self-Jailbreak (IRIS) that leverages the reflective capabilities of LLMs for jailbreaking with only black-box access. The method simplifies the jailbreaking process by using a single LLM as both the attacker and the target. It first iteratively refines adversarial prompts through self-explanation, so that even well-aligned LLMs end up following the adversarial instructions. It then rates and enhances the output to increase its harmfulness.

📊Results: The paper reports impressive results, with IRIS achieving jailbreak success rates of 98% on GPT-4 and 92% on GPT-4 Turbo in under 7 queries. This outperforms previous approaches in terms of automatic, black-box, and interpretable jailbreaking, while also requiring fewer queries. This establishes IRIS as a new standard for interpretable jailbreaking methods.