🌈 Creative ways to use LLMs!!

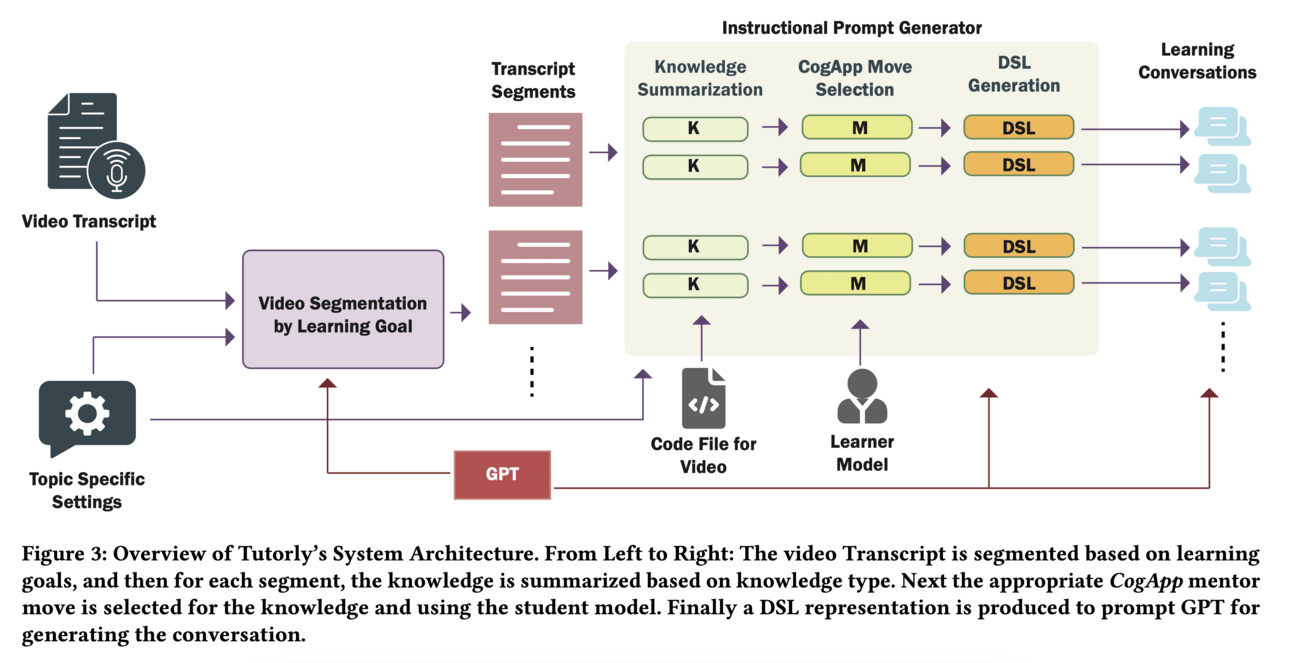

The paper presents a JupyterLab plugin called “Tutorly” that transforms programming videos into one-on-one tutoring experiences using the cognitive apprenticeship framework. The plugin lets learners set personalized learning goals, engage in learning-by-doing with a conversational mentor agent, and receive guidance and feedback based on a student model. The mentor agent uses an LLM and learner modeling to steer the student's moves and provide personalized support and monitoring.

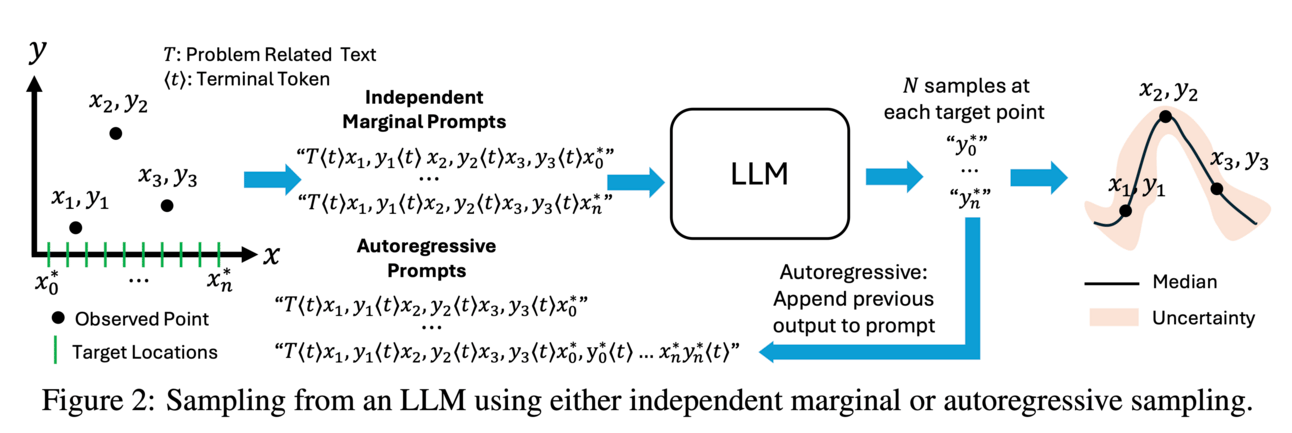

💻How?: The research paper builds a regression model that processes numerical data and makes probabilistic predictions conditioned on natural language text describing a user's prior knowledge. It does this by leveraging the latent problem-relevant knowledge encoded in LLMs and by giving users an interface for supplying their expert insights in natural language. The model, called LLM Processes, generates coherent predictive distributions over multiple quantities, making it suitable for tasks such as forecasting, regression, optimization, and image modeling.
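To make the idea concrete, here is a minimal Python sketch of how a text prior and numerical observations might be serialized into a single prompt, and how sampled continuations could be summarized into a predictive distribution. The prompt layout and the function names `build_regression_prompt` and `summarize_samples` are assumptions for illustration, not the paper's exact format, and the LLM call itself is left out (the completions are hard-coded stand-ins).

```python
from statistics import mean, stdev


def build_regression_prompt(prior_text, observations, x_query, decimals=2):
    """Serialize a text prior and (x, y) pairs into one prompt string.

    The layout is an illustrative assumption, not the paper's exact format.
    """
    lines = [prior_text.strip()]
    for x, y in observations:
        lines.append(f"{x:.{decimals}f}, {y:.{decimals}f}")
    # End with the query x so the model continues with a y value.
    lines.append(f"{x_query:.{decimals}f},")
    return "\n".join(lines)


def summarize_samples(completions):
    """Turn sampled text continuations into an empirical predictive summary."""
    values = []
    for text in completions:
        try:
            values.append(float(text.strip()))
        except ValueError:
            continue  # skip continuations that are not a number
    if len(values) < 2:
        raise ValueError("need at least two numeric samples")
    return {"n": len(values), "mean": mean(values), "std": stdev(values)}


prompt = build_regression_prompt(
    "Daily temperature in degrees Celsius; the week is getting warmer.",
    [(1, 12.5), (2, 13.1)],
    x_query=3,
)
# Stand-ins for several sampled LLM continuations of `prompt`.
fake_completions = [" 13.6", " 13.9", "n/a", " 14.2"]
summary = summarize_samples(fake_completions)
print(prompt)
print(summary)
```

Sampling many continuations and summarizing them is one simple way to get a distribution rather than a single point estimate; the paper's own procedure may differ.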

📊Results: The research paper does not report specific performance figures, but it demonstrates that incorporating text into numerical predictions improves predictive performance and yields quantitative structure that reflects qualitative descriptions. This allows for the exploration of a rich and grounded hypothesis space that is implicitly encoded in LLMs.

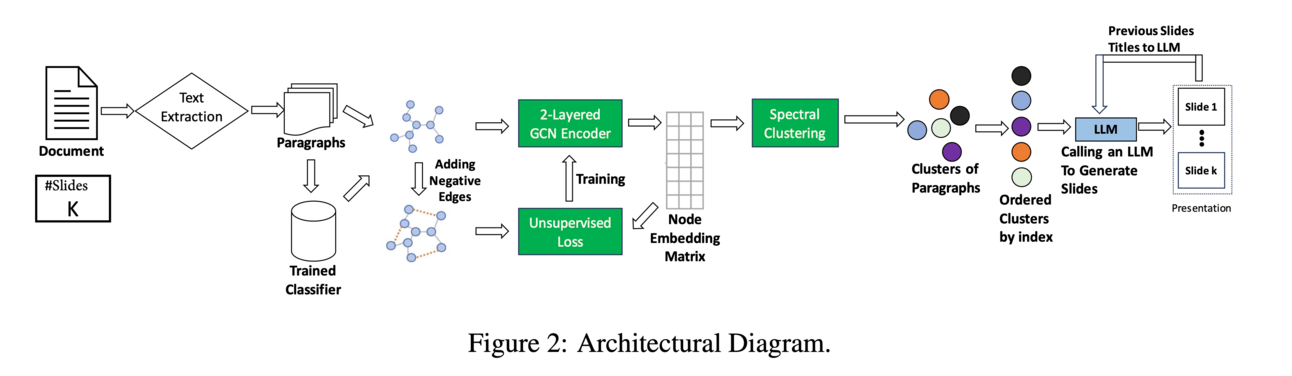

💡Why?: Automatically generates presentations from a long document.

💻How?: The proposed solution combines a graph neural network with an LLM. The LLM generates the presentation content, while the graph neural network maps that content to the corresponding slides. This approach allows for a non-linear narrative in the presentation, with content from different parts of the document being attributed to each slide.

The paper presents DrHouse, a novel LLM-based virtual doctor system. DrHouse uses sensor data from smart devices to enhance accuracy and reliability in the diagnosis process. It also continuously updates its medical databases and introduces a new diagnostic algorithm that evaluates potential diseases and their likelihood concurrently. Through multi-turn interactions, DrHouse determines the next steps and progressively refines its diagnoses.

📊Results: The research paper reports an 18.8% increase in diagnosis accuracy over existing baselines, based on evaluations on three public datasets and self-collected datasets. Additionally, a user study with 32 participants showed that 75% of medical experts and 91.7% of patients were willing to use DrHouse.

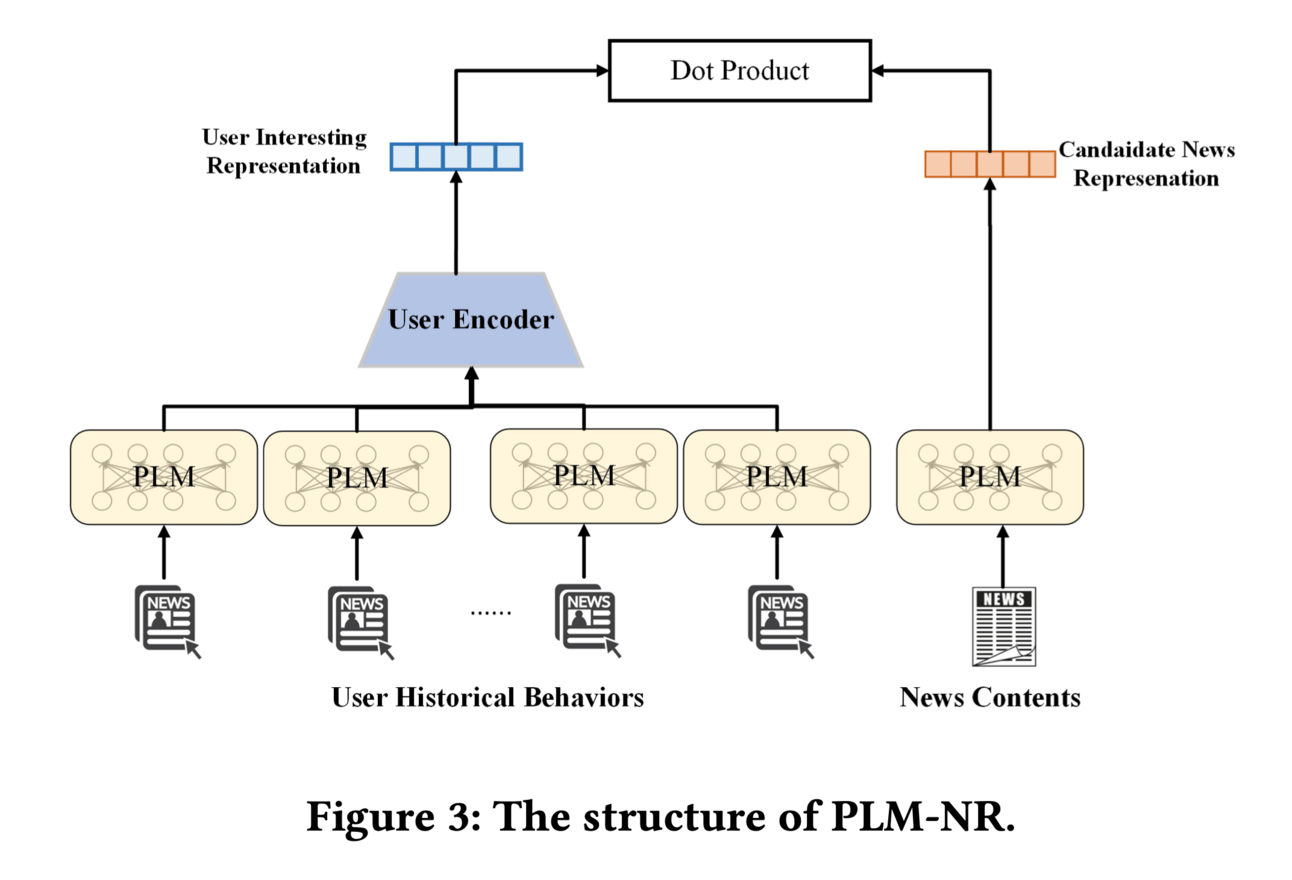

Time Matters: Enhancing Pre-trained News Recommendation Models with Robust User Dwell Time Injection

The research paper proposes two novel strategies to improve user preference modeling in news recommendation: Dwell time Weight (DweW) and Dwell time Aware (DweA). DweW refines user clicks by analyzing dwell time data, which provides valuable insights into user behavior. This information is then integrated with the initial behavioral inputs to construct a more robust user preference. DweA, on the other hand, makes the model aware of dwell time information, allowing it to adjust attention values in user modeling autonomously. This sharpens the model's ability to identify user preferences accurately.
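A rough Python sketch of the DweW idea, under stated assumptions: clicks are weighted by how long the user stayed on each article, and the user vector is the weighted average of the clicked-news embeddings. The log scaling and the helper names `dwell_weights` and `user_vector` are hypothetical choices for this example; this summary does not give the paper's actual weighting formula.

```python
import math


def dwell_weights(dwell_seconds):
    """Map dwell times to click weights that sum to 1.

    Log scaling damps very long dwell times; this choice is an assumption.
    """
    if not dwell_seconds:
        return []
    scaled = [math.log1p(max(t, 0.0)) for t in dwell_seconds]
    total = sum(scaled)
    if total == 0:
        # No dwell signal at all: fall back to uniform click weights.
        return [1.0 / len(scaled)] * len(scaled)
    return [s / total for s in scaled]


def user_vector(click_embeddings, dwell_seconds):
    """Dwell-weighted average of clicked-news embeddings (non-empty input)."""
    weights = dwell_weights(dwell_seconds)
    dim = len(click_embeddings[0])
    return [
        sum(w * emb[i] for w, emb in zip(weights, click_embeddings))
        for i in range(dim)
    ]


# Three clicked articles with toy 2-d embeddings and dwell times in seconds.
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
dwell = [5, 60, 2]
print(dwell_weights(dwell))
print(user_vector(embeddings, dwell))
```

A DweA-style variant would instead feed the dwell signal into the attention computation itself; that part is not sketched here.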

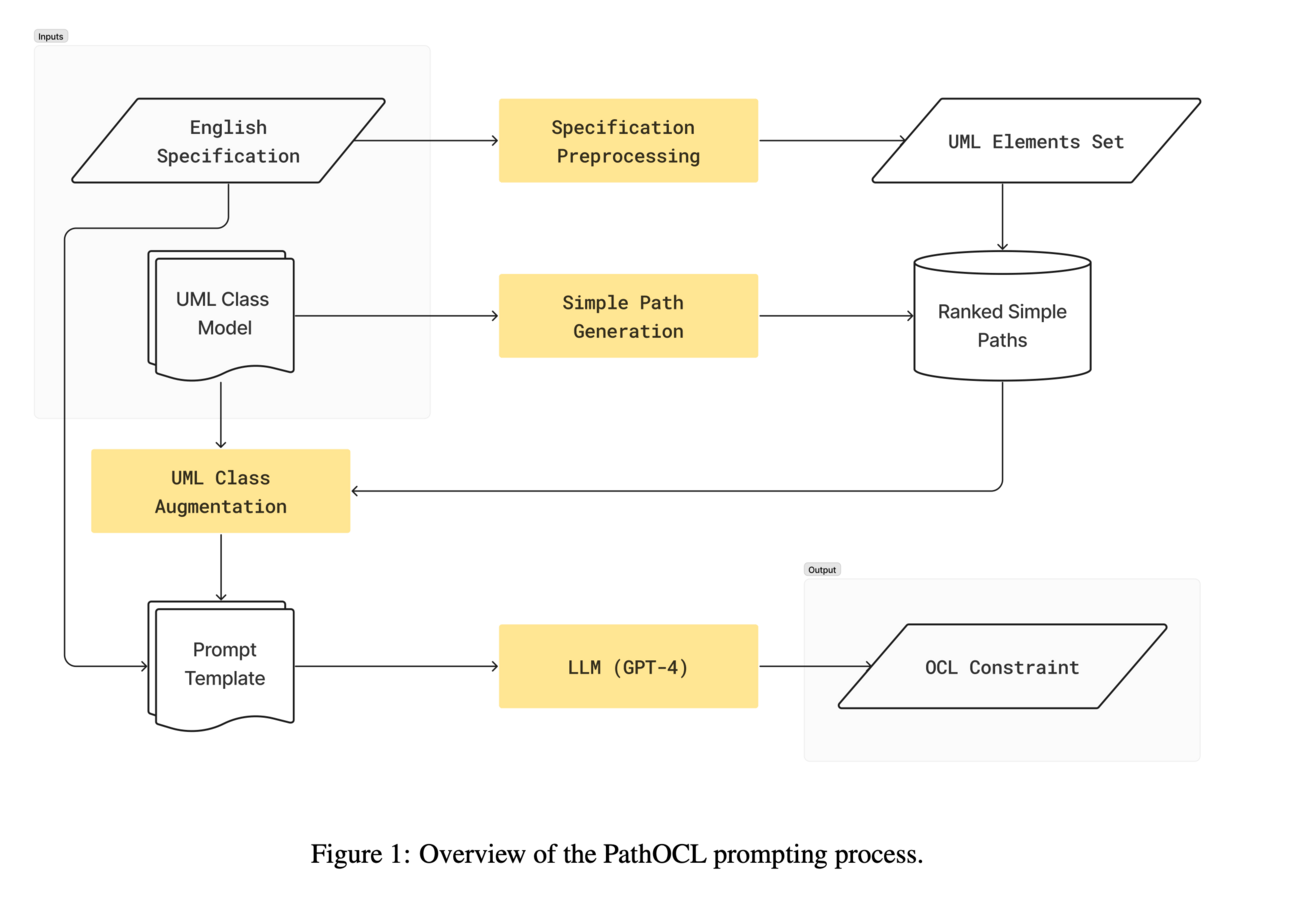

💡Why?: Generating Object Constraint Language (OCL) specifications from large Unified Modeling Language (UML) class models.

💻How?: The research paper proposes PathOCL, a novel path-based prompt augmentation technique. It works by chunking the UML class model, selecting the subset of classes relevant to the English specification, and adding that subset to the prompt. This addresses the token limits of large language models (LLMs) and their difficulty in handling large UML class models.
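The selection step can be pictured with a small Python sketch. It is a simplified stand-in, not PathOCL's actual algorithm: it treats the class model as an undirected graph of associations, finds the classes named in the specification by plain substring matching, and keeps only the classes on shortest paths between them. All names here (`mentioned_classes`, `shortest_path`, `build_ocl_prompt`) and the prompt wording are assumptions.

```python
from collections import deque


def mentioned_classes(spec, class_names):
    """Return class names that appear (case-insensitively) in the specification.

    Substring matching is crude and can over-match; it keeps the sketch short.
    """
    text = spec.lower()
    return [name for name in class_names if name.lower() in text]


def shortest_path(associations, start, goal):
    """Breadth-first search over undirected UML associations."""
    graph = {}
    for a, b in associations:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for nxt in sorted(graph.get(path[-1], ())):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return []  # no connection between the two classes


def build_ocl_prompt(spec, associations, class_names):
    """Add only the classes on paths between mentioned classes to the prompt."""
    hits = mentioned_classes(spec, class_names)
    selected = set(hits)
    for i, a in enumerate(hits):
        for b in hits[i + 1:]:
            selected.update(shortest_path(associations, a, b))
    context = ", ".join(sorted(selected))
    return f"UML classes: {context}\nSpecification: {spec}\nOCL constraint:"


classes = ["Customer", "Order", "Product", "Supplier", "Address"]
links = [
    ("Customer", "Order"),
    ("Order", "Product"),
    ("Product", "Supplier"),
    ("Customer", "Address"),
]
print(build_ocl_prompt("Every customer must buy at least one product.", links, classes))
```

Here `Supplier` and `Address` never reach the prompt, which is the point of the technique: the prompt grows with the relevant path, not with the whole model.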

📊Results: The research paper's findings show that PathOCL outperforms the traditional method of augmenting the complete UML class model (UML-Augmentation). It generates a higher number of valid and correct OCL constraints using the GPT-4 model. Moreover, it significantly decreases the average prompt size when scaling the size of UML class models.

🤖LLMs for robotics & VLLMs

🔗Project page: https://smartflow-4c5a0a.webflow.io/

The research paper proposes SmartFlow, an AI-based robotic process automation (RPA) system that uses pre-trained large language models and deep-learning-based image understanding. This allows SmartFlow to adapt to new scenarios and changes in the user interface without human intervention. The system uses computer vision and natural language processing to convert visible elements on the graphical user interface (GUI) into a textual representation. The language models then use this representation to generate a sequence of actions, which a scripting engine executes to complete a given task.
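The pipeline described above (screen to text, text to actions, actions to a scripting engine) might look roughly like this in Python. This is a sketch under assumptions: the `GuiElement` schema, the one-line-per-element text format, and the `CLICK`/`TYPE` action grammar are all made up for illustration and are not taken from SmartFlow; the vision step and the LLM call are replaced by hard-coded values.

```python
from dataclasses import dataclass


@dataclass
class GuiElement:
    element_id: int
    kind: str   # e.g. "textbox", "button"
    label: str  # visible text recognized on or near the element


def describe_screen(elements):
    """Render detected GUI elements as one line of text each."""
    return "\n".join(f"[{e.element_id}] {e.kind}: {e.label}" for e in elements)


def parse_actions(llm_output):
    """Parse lines such as 'TYPE 1 Alice' or 'CLICK 2' into action tuples.

    The action grammar is a hypothetical example for this sketch.
    Lines that do not match it are ignored.
    """
    actions = []
    for line in llm_output.splitlines():
        parts = line.strip().split(maxsplit=2)
        if len(parts) >= 2 and parts[0] in {"CLICK", "TYPE"} and parts[1].isdigit():
            text = parts[2] if len(parts) == 3 else ""
            actions.append((parts[0], int(parts[1]), text))
    return actions


# Stand-in for the output of the vision step.
screen = [GuiElement(1, "textbox", "Name"), GuiElement(2, "button", "Submit")]
print(describe_screen(screen))

# Stand-in for the language model's reply to a prompt containing the screen text.
reply = "TYPE 1 Alice Smith\nCLICK 2\nsome stray commentary"
print(parse_actions(reply))
```

Referring to elements by id rather than by pixel position is one way such a system could stay robust when a layout changes, since the ids are reassigned each time the screen is re-read.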

📊Results: The research paper does not explicitly mention any performance improvements achieved. However, the evaluations conducted on a dataset of generic enterprise applications with diverse layouts demonstrate the robustness of SmartFlow across different layouts and applications. This suggests that SmartFlow can improve the performance of RPA systems by automating a wide range of business processes, such as form filling, customer service, invoice processing, and back-office operations.

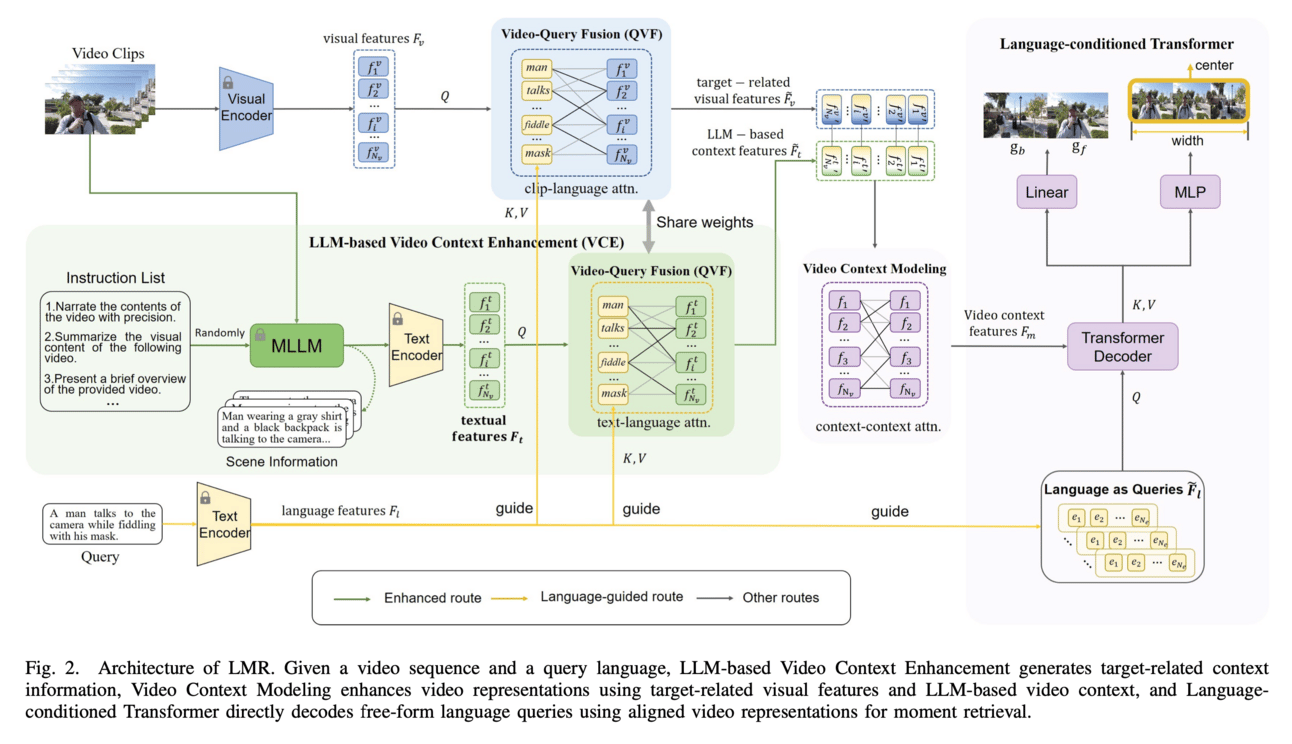

💡Why?: Current methods for Video Moment Retrieval struggle to accurately align complex situations involving specific environmental details, character descriptions, and action narratives.

💻How?: The research paper proposes an LLM-guided Moment Retrieval (LMR) approach, which uses the extensive knowledge of LLMs to improve video context representation and cross-modal alignment. It does this through a context enhancement technique in which LLMs generate crucial target-related context semantics that are integrated with visual features to produce discriminative video representations. A language-conditioned transformer is also designed to decode free-form language queries using the aligned video representations for moment retrieval.