🤔 Problem?:

The research paper addresses the problem of task-agnostic prompt compression for better generalizability and efficiency in natural language processing.

💻 Proposed solution:

The paper proposes a data distillation procedure that extracts knowledge from an LLM (large language model) to compress prompts without losing crucial information. It introduces an extractive text compression dataset and formulates prompt compression as a token classification problem, in which each token is labeled as either preserved or discarded. The model uses a Transformer encoder to capture essential information from the full bidirectional context and explicitly learns the compression objective.
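To make the token-classification framing concrete, here is a minimal sketch that assumes a trained classifier has already produced a per-token "preserve" probability. The function name `compress_prompt`, the top-k selection rule and the example probabilities are illustrative assumptions, not the paper's code.

```python
import math
from typing import List, Sequence

def compress_prompt(tokens: Sequence[str], keep_probs: Sequence[float], ratio: float) -> List[str]:
    """Extractively compress a prompt by keeping its highest-scoring tokens.

    tokens:     the prompt, already tokenized
    keep_probs: per-token probability of the "preserve" label, assumed to come
                from a bidirectional token classifier (hypothetical input)
    ratio:      target compression ratio, e.g. 2.0 keeps about half the tokens
    """
    if len(tokens) != len(keep_probs):
        raise ValueError("tokens and keep_probs must have the same length")
    if ratio < 1:
        raise ValueError("ratio must be >= 1")
    if not tokens:
        return []
    k = max(1, math.ceil(len(tokens) / ratio))
    # Rank positions by preserve probability, highest first.
    ranked = sorted(range(len(tokens)), key=lambda i: keep_probs[i], reverse=True)
    # Re-sort the kept positions so the output keeps the original word order.
    kept = sorted(ranked[:k])
    return [tokens[i] for i in kept]

tokens = ["Please", "summarize", "the", "following", "quarterly", "report", "now"]
probs = [0.10, 0.90, 0.20, 0.30, 0.80, 0.95, 0.05]
print(compress_prompt(tokens, probs, ratio=2.0))  # ['summarize', 'following', 'quarterly', 'report']
```

Keeping the selected tokens in their original order is what makes the compression extractive: the output is a subsequence of the input, so no new text is generated.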

📊 Results:

The team achieved significant performance gains over strong baselines and demonstrated robust generalization across different LLMs. The model is also 3x-6x faster than existing methods and speeds up end-to-end latency by 1.6x-2.9x at compression ratios of 2x-5x.

🔗Code/data/weights: https://yay-robot.github.io/

🤔 Problem?:

The research paper addresses the problem of achieving high success rates in long-horizon robotic tasks, particularly for complex and dexterous skills. Despite the use of hierarchical policies that combine language and low-level control, failures are still likely to occur due to the length of the task.

💻 Proposed solution:

It proposes a framework that allows robots to continuously improve their long-horizon task performance through intuitive and natural feedback from humans. This is achieved by incorporating human feedback in the form of language corrections into high-level policies that index into expressive low-level language-conditioned skills. The framework enables robots to rapidly adapt to real-time language feedback and incorporate it into an iterative training scheme, improving both low-level execution and high-level decision-making purely from verbal feedback.
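The control flow described above can be sketched as a toy two-level agent. `HierarchicalAgent`, its fields and the string-based observations are hypothetical stand-ins: in the real system the high-level policy and low-level skills are learned networks, and the logged corrections feed an iterative training loop that this sketch does not implement.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

@dataclass
class HierarchicalAgent:
    """Toy two-level policy; every name here is illustrative."""
    high_level: Callable[[str], str]        # observation -> language instruction
    low_level: Callable[[str, str], str]    # (observation, instruction) -> action
    corrections: List[Tuple[str, str]] = field(default_factory=list)

    def step(self, observation: str, human_correction: Optional[str] = None) -> str:
        instruction = self.high_level(observation)
        if human_correction is not None:
            # Real-time adaptation: the verbal correction replaces the high-level choice.
            instruction = human_correction
            # Log (observation, correction) so the high-level policy can be retrained later.
            self.corrections.append((observation, human_correction))
        return self.low_level(observation, instruction)

agent = HierarchicalAgent(
    high_level=lambda obs: "pick up the bag",
    low_level=lambda obs, instr: f"execute skill: {instr}",
)
print(agent.step("bag on table"))                        # uses the policy's own instruction
print(agent.step("gripper too far right", "move left"))  # the human correction wins
print(agent.corrections)
```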

📊Results:

The framework achieved significant performance improvements on long-horizon, dexterous manipulation tasks without requiring additional teleoperation, as demonstrated in real-hardware experiments.

🤔 Problem?:

This research paper addresses the issue of knowledge cutoff dates for released Large Language Models (LLMs). These models are often used for applications that require up-to-date information, but it is unclear if all resources in the training data share the same cutoff date and if the model's knowledge aligns with these dates.

💻 Proposed solution:

The research paper proposes defining an "effective cutoff," which can differ from the cutoff reported by the LLM's designers and is determined separately for each sub-resource and topic. To estimate it, the paper suggests a simple approach: probing the model across different versions of the data.
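As a rough illustration of probing across versions, the sketch below assumes the model has already been scored (for example, by perplexity) on several dated versions of each sub-resource, and takes the best-fitting version as that resource's effective cutoff. The function names, the choice of perplexity as the signal and the probe numbers are all hypothetical.

```python
from datetime import date
from typing import Dict, Mapping

def effective_cutoff(perplexity_by_version: Mapping[date, float]) -> date:
    """Return the version date the model fits best (lowest perplexity).

    perplexity_by_version maps each probed version's date to the model's
    perplexity on that version (hypothetical probe results).
    """
    if not perplexity_by_version:
        raise ValueError("need at least one probed version")
    return min(perplexity_by_version, key=lambda d: perplexity_by_version[d])

def effective_cutoffs(probes: Mapping[str, Mapping[date, float]]) -> Dict[str, date]:
    """Estimate a separate effective cutoff for every sub-resource or topic."""
    return {resource: effective_cutoff(scores) for resource, scores in probes.items()}

# Illustrative numbers only: two sub-resources can end up with different cutoffs.
probes = {
    "wikipedia/science": {date(2022, 1, 1): 12.4, date(2022, 7, 1): 10.1, date(2023, 1, 1): 11.8},
    "wikipedia/sports": {date(2022, 1, 1): 9.7, date(2022, 7, 1): 11.0, date(2023, 1, 1): 12.3},
}
print(effective_cutoffs(probes))
```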


🤔 Problem?:

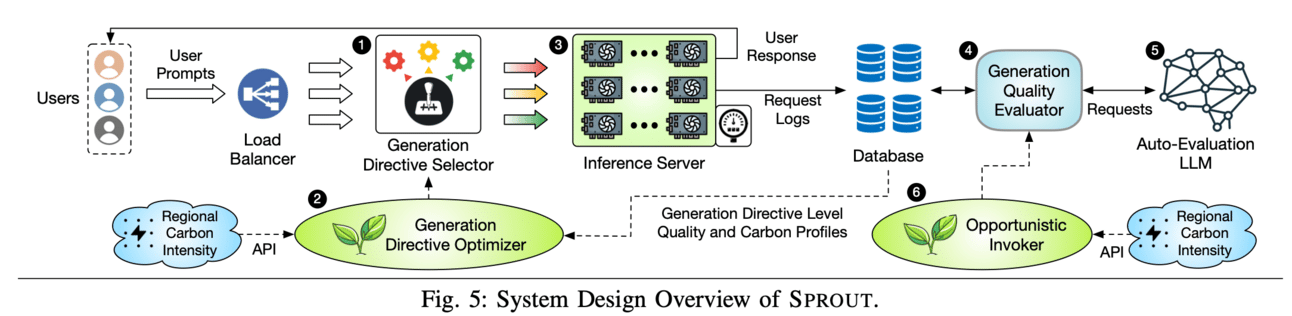

The research paper addresses the issue of carbon emissions from the cloud and high-performance computing infrastructure used for Generative Artificial Intelligence (GenAI) across various industries.

💻 Proposed solution:

The paper proposes a framework called Sprout, which aims to reduce the carbon footprint of generative Large Language Model (LLM) inference services. It does so by using "generation directives" to guide the autoregressive generation process, optimizing for carbon efficiency. This involves strategically assigning generation directives to user prompts using a directive optimizer and evaluating the quality of the generated outcomes using an offline quality evaluator.
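One simple way to picture a directive optimizer is a per-request trade-off between an offline quality score and estimated emissions. The sketch below is a hypothetical scoring rule, not Sprout's actual optimizer: `Directive`, the linear `quality - carbon_weight * grams` objective and all numbers are assumptions. It shows the intended behavior that a more carbon-intensive grid pushes the choice toward shorter generations.

```python
from dataclasses import dataclass
from typing import Sequence

@dataclass(frozen=True)
class Directive:
    name: str
    expected_tokens: float  # mean output length under this directive (assumed, measured offline)
    quality: float          # offline evaluator score in [0, 1] (assumed)

def choose_directive(directives: Sequence[Directive], grid_g_per_kwh: float,
                     kwh_per_token: float, carbon_weight: float) -> Directive:
    """Pick the directive that maximizes quality minus weighted estimated emissions."""
    def score(d: Directive) -> float:
        grams_co2 = d.expected_tokens * kwh_per_token * grid_g_per_kwh
        return d.quality - carbon_weight * grams_co2
    return max(directives, key=score)

directives = [
    Directive("none", expected_tokens=400, quality=0.90),
    Directive("brief", expected_tokens=150, quality=0.85),
    Directive("one_sentence", expected_tokens=40, quality=0.60),
]
for grid in (50, 800):  # hypothetical clean vs. carbon-heavy grid, in gCO2/kWh
    best = choose_directive(directives, grid, kwh_per_token=1e-5, carbon_weight=0.05)
    print(grid, best.name)
```

Scaling the carbon term by grid intensity is what lets the same prompt receive a more concise directive when electricity is dirtier.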

📊 Results:

In real-world evaluations using the Llama2 LLM and global electricity grid data, Sprout reduced carbon emissions by over 40%. This highlights the potential of aligning AI technology with sustainable practices and mitigating the environmental impact of the rapidly expanding field of generative artificial intelligence.

🤔Problem?:

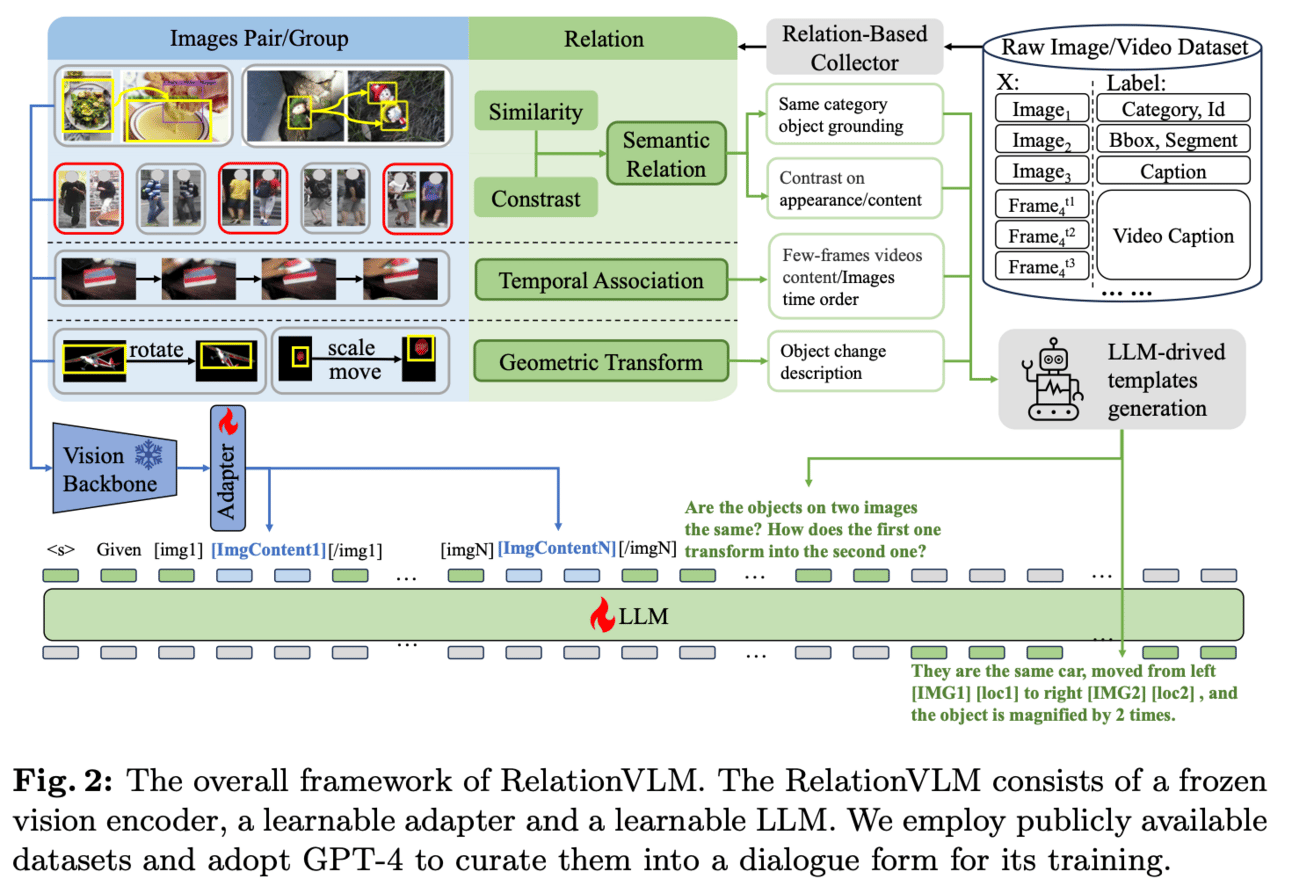

The research paper addresses the problem of developing Large Vision-Language Models (LVLMs) that can accurately understand visual relations in addition to text. This is more challenging than the task of Large Language Models (LLMs), which focus only on understanding text.

💻Proposed solution:

The research paper proposes a solution called RelationVLM, which is a large vision-language model that is capable of comprehending various levels and types of relations across multiple images or within a video. This is achieved through a multi-stage relation-aware training scheme and a series of data configuration strategies that enable RelationVLM to understand semantic relations, temporal associations, and geometric transforms.

📊Results:

The research paper shows that RelationVLM has a strong capability in understanding visual relations and performs impressively when reasoning from few-shot examples. This improvement in performance showcases the effectiveness of RelationVLM in supporting a wider range of downstream applications towards artificial general intelligence.

🔗Code/data/weights: https://iceedit.github.io/

🤔Problem?:

The research paper addresses the problem of limited flexibility in current methods of in-game 3D character customization. These methods only allow for a single-round generation, making it difficult for players to make detailed and fine-grained modifications to their characters.

💻Proposed solution:

The research paper proposes an Interactive Character Editing framework (ICE) which allows for a multi-round dialogue-based refinement process. This framework utilizes large language models (LLMs) to parse multi-round dialogues into clear editing instruction prompts. To make modifications at a fine-grained level, the Semantic-guided Low-dimension Parameter Solver (SLPS) is used. This solver first identifies the relevant character control parameters and then optimizes them in a low-dimension space to avoid unrealistic results.
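The two-step idea behind SLPS (first select the relevant parameters, then optimize only those within valid ranges) can be sketched as follows. Everything here is an assumption for illustration: relevance is decided by naive keyword matching against hypothetical semantic tags, and the optimizer is plain coordinate-wise finite-difference gradient descent with clamping, standing in for whatever solver the paper actually uses.

```python
from typing import Callable, Dict, List, Tuple

Params = Dict[str, float]

def select_relevant(param_tags: Dict[str, str], instruction: str) -> List[str]:
    """Hypothetical relevance step: keep parameters whose tag word appears in the instruction."""
    words = set(instruction.lower().split())
    return [name for name, tag in param_tags.items() if tag in words]

def optimize_subset(params: Params, bounds: Dict[str, Tuple[float, float]],
                    relevant: List[str], loss: Callable[[Params], float],
                    lr: float = 0.1, steps: int = 200, eps: float = 1e-4) -> Params:
    """Coordinate-wise finite-difference descent over the relevant parameters only.

    Irrelevant parameters are never touched, and every update is clamped to the
    parameter's valid range to avoid unrealistic values.
    """
    x = dict(params)
    for _ in range(steps):
        for name in relevant:
            current = x[name]
            x[name] = current + eps
            up = loss(x)
            x[name] = current - eps
            down = loss(x)
            grad = (up - down) / (2 * eps)  # central-difference gradient estimate
            lo, hi = bounds[name]
            x[name] = min(hi, max(lo, current - lr * grad))
    return x

tags = {"eye_size": "eyes", "nose_length": "nose"}
start = {"eye_size": 0.5, "nose_length": 0.5}
bounds = {"eye_size": (0.0, 1.0), "nose_length": (0.0, 1.0)}
relevant = select_relevant(tags, "Make the eyes bigger")
# Toy objective: the nose term would move nose_length, but it is not selected.
result = optimize_subset(start, bounds, relevant,
                         loss=lambda p: (p["eye_size"] - 0.9) ** 2 + p["nose_length"] ** 2)
print(relevant, result)
```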

📊Results:

The research paper demonstrates the effectiveness of ICE for in-game character creation and its superior editing performance compared with existing methods. The proposed framework makes character customization more user-friendly and iterative, resulting in more realistic and satisfying characters for players.

🤔Problem?:

The research paper addresses the problem of resource requirements associated with Large-scale Language Models (LLMs), and the need for techniques to compress and accelerate neural networks in order to make them more cost-effective.

💻Proposed solution:

The research paper proposes AffineQuant, a Post-Training Quantization (PTQ) method for LLMs that optimizes equivalent affine transformations of the weights. This widens the scope of optimization and reduces quantization errors. The paper also introduces a gradual mask optimization method that keeps the transformation matrix strictly diagonally dominant, which by the Levy-Desplanques theorem guarantees that it is invertible. The method yields significant performance improvements for LLMs across different datasets.
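The equivalence this approach relies on is that for any invertible matrix A, XW = (XA^{-1})(AW), so A can be tuned to make AW easier to quantize without changing the full-precision output. The pure-Python sketch below checks that identity, applies a hypothetical gradual mask that scales the off-diagonal entries of A by a factor alpha, and tests strict diagonal dominance (the Levy-Desplanques condition for invertibility). The helper names, the 4-bit round-to-nearest quantizer and the example matrices are illustrative assumptions; the shift/bias part of the affine transform and the actual optimization of A are omitted.

```python
from typing import List

Matrix = List[List[float]]

def matmul(A: Matrix, B: Matrix) -> Matrix:
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def inverse(A: Matrix) -> Matrix:
    """Gauss-Jordan inversion with partial pivoting."""
    n = len(A)
    M = [[float(v) for v in row] + [float(i == j) for j in range(n)] for i, row in enumerate(A)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        if abs(M[p][c]) < 1e-12:
            raise ValueError("matrix is singular")
        M[c], M[p] = M[p], M[c]
        pivot = M[c][c]
        M[c] = [v / pivot for v in M[c]]
        for r in range(n):
            if r != c:
                f = M[r][c]
                M[r] = [vr - f * vc for vr, vc in zip(M[r], M[c])]
    return [row[n:] for row in M]

def strictly_diagonally_dominant(A: Matrix) -> bool:
    """Levy-Desplanques: |a_ii| > sum_{j != i} |a_ij| for every row implies A is invertible."""
    return all(abs(row[i]) > sum(abs(v) for j, v in enumerate(row) if j != i)
               for i, row in enumerate(A))

def gradual_mask(A: Matrix, alpha: float) -> Matrix:
    """Keep the diagonal and scale off-diagonal entries by alpha (hypothetical schedule value in [0, 1])."""
    return [[v if i == j else alpha * v for j, v in enumerate(row)] for i, row in enumerate(A)]

def fake_quantize(M: Matrix, bits: int = 4) -> Matrix:
    """Symmetric per-tensor round-to-nearest quantization followed by dequantization."""
    qmax = 2 ** (bits - 1) - 1
    scale = max(abs(v) for row in M for v in row) / qmax or 1.0
    return [[max(-qmax - 1, min(qmax, round(v / scale))) * scale for v in row] for row in M]

X = [[1.0, 2.0]]                # activations (illustrative)
W = [[0.5, -1.0], [2.0, 0.25]]  # weights (illustrative)
A = [[1.0, 1.5], [0.3, 1.0]]    # stand-in for a learned transform

A_masked = gradual_mask(A, alpha=0.5)
print(strictly_diagonally_dominant(A), strictly_diagonally_dominant(A_masked))
X_t = matmul(X, inverse(A_masked))   # transformed activations
W_t = matmul(A_masked, W)            # transformed weights, the tensor that gets quantized
print(matmul(X, W), matmul(X_t, W_t))  # equal up to float rounding
print(matmul(X_t, fake_quantize(W_t)))  # output when the transformed weights are 4-bit
```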

📊Results:

AffineQuant achieved a C4 perplexity of 15.76 on the LLaMA2-7B model with W4A4 quantization without overhead, 2.26 lower than OmniQuant's 18.02. On zero-shot tasks with 4/4-bit quantization of LLaMA-30B, it reached an average accuracy of 58.61, 1.98 higher than OmniQuant's 56.63, setting a new state of the art for PTQ in LLMs.