
Highlights

InfiniteHiP prunes tokens like scissors, extending context to 3M tokens

LongRoPE stretches context to 2M+ tokens with fine-tuning

DarwinLM uses evolution to prune LLMs, keeping performance high with structured pruning and training

New paper draws a line between context length and model size

Get $50 in free credit to source human data for your project. No credit card required!

Don’t have time to read the entire newsletter? Listen to this fun and engaging podcast that covers these research papers in detail.

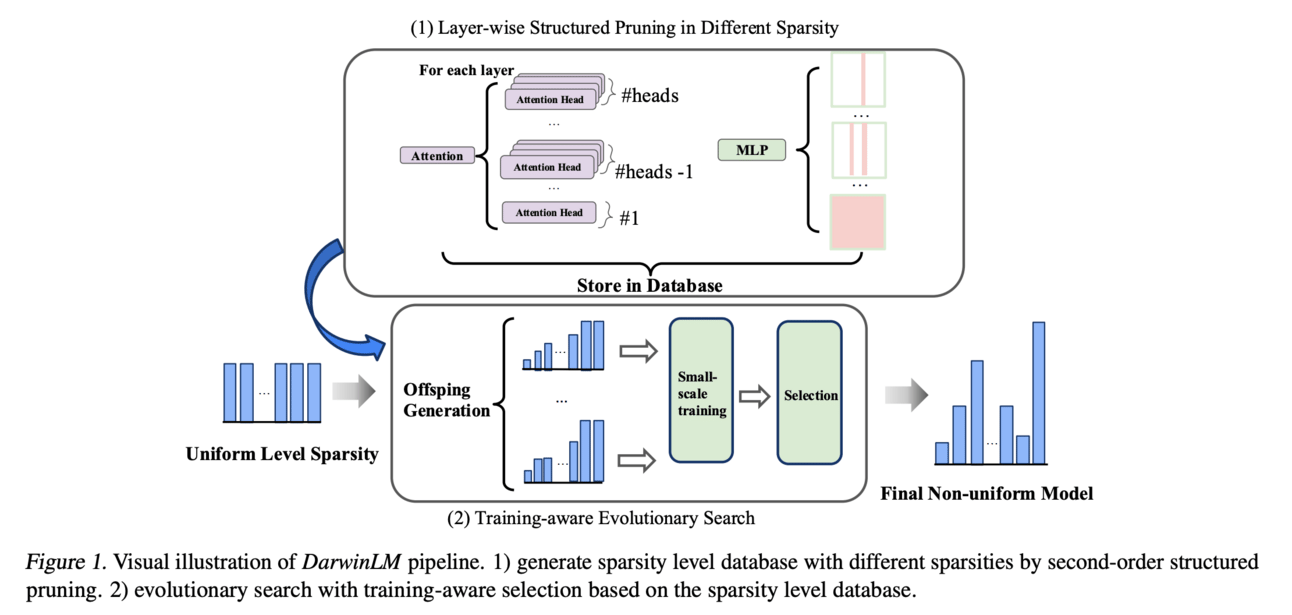

DarwinLM: Evolutionary Structured Pruning of Large Language Models

Note: This is not the official implementation of the paper. We implemented this paper to improve its adoption. We want to create a community where we can all come together and implement papers! We will soon share a link to a Discord channel where we can all implement papers that don't yet have an implementation!

This research paper proposes an effective model compression technique based on pruning, one of the most popular approaches to model compression. In LLMs, pruning refers to removing less important or redundant connections (parameters) from the model. By strategically removing these parameters, the model becomes smaller and more efficient, requiring less computation and memory.

Pruning can be unstructured or structured. Unstructured pruning removes individual weights wherever they occur, leaving an irregular pattern of zeros (sparsity) in the model's weight matrices. While this can reduce model size, it often doesn't translate into speed improvements on standard hardware, because the matrices keep their original shape and dense hardware still processes the zeros. Structured pruning, on the other hand, removes entire structures within the network, such as neurons, channels, or even layers. This yields a smaller dense model that runs efficiently on conventional hardware, providing end-to-end speedups without requiring specialized hardware or software.
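To make the difference concrete, here is a minimal sketch in plain Python that prunes the same small weight matrix both ways. Selecting weights by magnitude and rows by L2 norm, and the function names `unstructured_prune` and `structured_prune_rows`, are illustrative assumptions, not DarwinLM's actual criteria.

```python
from typing import List

Matrix = List[List[float]]

def unstructured_prune(w: Matrix, sparsity: float) -> Matrix:
    """Zero out the smallest-magnitude individual weights, wherever they are.
    The matrix keeps its original shape; it just contains more zeros."""
    flat = sorted(abs(x) for row in w for x in row)
    k = int(len(flat) * sparsity)  # number of weights to remove
    if k == 0:
        return [row[:] for row in w]
    threshold = flat[k - 1]
    # Note: ties at the threshold can remove slightly more than k weights.
    return [[0.0 if abs(x) <= threshold else x for x in row] for row in w]

def structured_prune_rows(w: Matrix, keep: int) -> Matrix:
    """Drop whole rows (e.g. output neurons) with the smallest L2 norm.
    The result is a genuinely smaller dense matrix."""
    norms = [sum(x * x for x in row) for row in w]
    ranked = sorted(range(len(w)), key=lambda i: norms[i], reverse=True)
    kept = sorted(ranked[:keep])  # preserve the original row order
    return [w[i][:] for i in kept]

w = [[0.9, -0.1, 0.4], [0.05, 0.02, -0.03], [-0.7, 0.6, 0.2], [0.1, -0.2, 0.05]]
print(unstructured_prune(w, 0.5))  # still 4 x 3, half the entries zeroed
print(structured_prune_rows(w, 2))  # 2 x 3, two whole rows removed
```

Both calls remove about half of the parameters, but only the structured result is smaller in shape, which is why it speeds up ordinary dense matrix multiplication.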

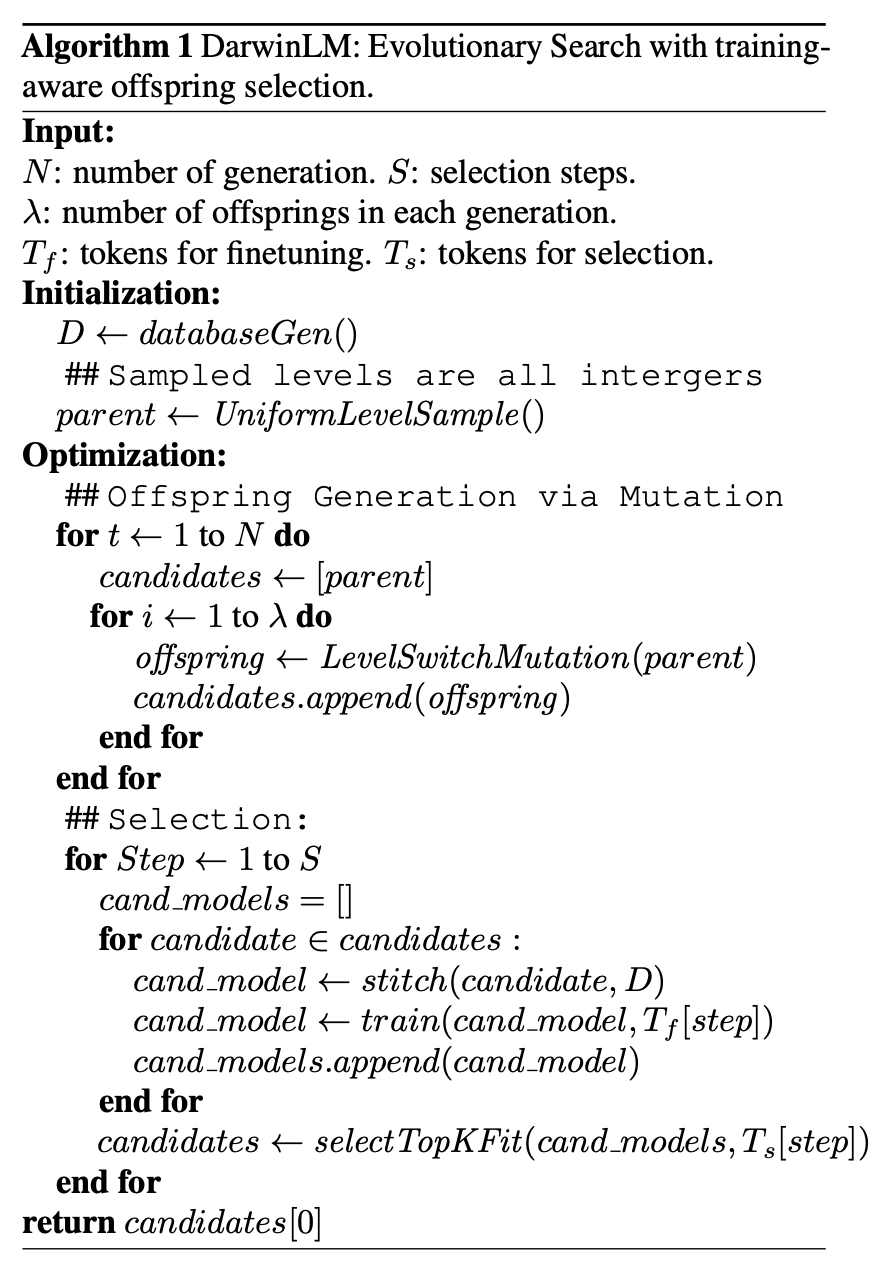

However, not all parts of an LLM are equally important. Some components are more sensitive to pruning than others. This research paper introduces a novel method for training-aware, non-uniform structured pruning. The core idea is to combine an evolutionary search process with a lightweight training procedure to identify the optimal substructure of the LLM. The name "DarwinLM" itself is inspired by the concept of natural selection. The method works by generating multiple "offspring" models from a parent model, each with slightly different pruning configurations (mutations). These offspring models are then evaluated based on their performance on a given task. The best-performing offspring are selected to "survive" and become the parents for the next generation. This process is repeated over several generations, gradually refining the pruning configuration and identifying the most efficient and effective substructure.
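The generate, evaluate, select loop described above can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the paper's algorithm: each candidate is a hypothetical list of discrete per-layer sparsity levels, a mutation moves one level from one layer to another so the overall compression budget stays fixed, and `fitness` is any callable where lower is better (in practice it would be something like a loss measured on calibration data).

```python
import random
from typing import Callable, List

Config = List[int]  # hypothetical: one discrete sparsity level per layer

def mutate(parent: Config, max_level: int, rng: random.Random) -> Config:
    """Move one sparsity step from one layer to another, so the total
    compression budget (the sum of levels) is unchanged."""
    child = parent[:]
    can_go_up = [i for i, lvl in enumerate(child) if lvl < max_level]
    can_go_down = [i for i, lvl in enumerate(child) if lvl > 0]
    i, j = rng.choice(can_go_up), rng.choice(can_go_down)
    if i != j:
        child[i] += 1  # prune layer i more
        child[j] -= 1  # prune layer j less
    return child

def evolve(parent: Config, fitness: Callable[[Config], float], max_level: int,
           generations: int = 50, offspring: int = 8, seed: int = 0) -> Config:
    """Simple (1 + lambda) evolution; lower fitness is better."""
    rng = random.Random(seed)
    best, best_score = parent, fitness(parent)
    for _ in range(generations):
        children = [mutate(best, max_level, rng) for _ in range(offspring)]
        score, child = min(((fitness(c), c) for c in children), key=lambda t: t[0])
        if score <= best_score:  # the fittest offspring survives as the next parent
            best, best_score = child, score
    return best

# Toy fitness (hypothetical): layer 0 is very sensitive to pruning, layer 3 barely.
sensitivity = [4.0, 1.0, 1.0, 0.25]

def toy_loss(cfg: Config) -> float:
    return sum(s * lvl * lvl for s, lvl in zip(sensitivity, cfg))

print(evolve([2, 2, 2, 2], toy_loss, max_level=4))
```

Starting from uniform pruning, the search should shift sparsity away from the sensitive layer toward the insensitive one while the total budget stays the same, which is the intuition behind non-uniform pruning.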

A key innovation in DarwinLM is its training-aware approach. Recognizing that the performance of a pruned model depends not only on its initial structure but also on how it's subsequently trained, DarwinLM incorporates a lightweight, multi-step training process within each generation. This allows the method to assess the effect of post-compression training and eliminate poorly performing models early on. The training process progressively increases the number of tokens used, providing a more comprehensive evaluation of the model's ability to learn and generalize.
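The multi-step, training-aware selection can be pictured as a tournament in which candidates are briefly trained on a growing token budget and only the best half survives each round. The sketch below assumes a hypothetical `train_and_eval(candidate, tokens)` callable that returns a loss after light training; the halving schedule and the toy candidates are illustrative, not the paper's exact settings.

```python
from typing import Callable, List, Sequence, Tuple

Candidate = Tuple[float, float]

def training_aware_select(candidates: List[Candidate],
                          train_and_eval: Callable[[Candidate, int], float],
                          token_budgets: Sequence[int],
                          keep_fraction: float = 0.5) -> Candidate:
    """Briefly train every surviving candidate on a growing number of tokens,
    then keep only the best-scoring ones (lower loss is better)."""
    survivors = list(candidates)
    for tokens in token_budgets:
        ranked = sorted(survivors, key=lambda c: train_and_eval(c, tokens))
        n_keep = max(1, int(len(ranked) * keep_fraction))
        survivors = ranked[:n_keep]
    return survivors[0]

# Hypothetical candidates: (loss before training, loss drop per 1k training tokens).
candidates = [(2.0, 0.0), (2.5, 0.2), (3.0, 0.0), (2.6, 0.1)]

def toy_train_and_eval(c: Candidate, tokens: int) -> float:
    return c[0] - c[1] * tokens / 1000

print(training_aware_select(candidates, toy_train_and_eval, [1000, 4000, 16000]))
```

In this toy run the candidate that looks best before any training, (2.0, 0.0), is eliminated in the second round because it does not improve with training, which is exactly the failure that a purely training-free search would miss.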

Results:

DarwinLM achieves a remarkable 2x reduction in model size with only a 3% performance loss across various tasks. Its effectiveness is consistent across model sizes, from smaller models (350M parameters) to larger ones (7B parameters). In other words, it works well across the range of model sizes tested.

Sponsored: Special offer for you: claim $50 in free credits!

Accelerate your AI projects with Prolific. Claim $50 in free credits and get quality human data in minutes from 200,000+ taskers. No setup cost, no subscription, no delay. Get started, top up your account to claim your free credit, and test Prolific for yourself now.

Use code: LLM-RESEARCH-50

Link: https://eu1.hubs.ly/H0gYdYX0

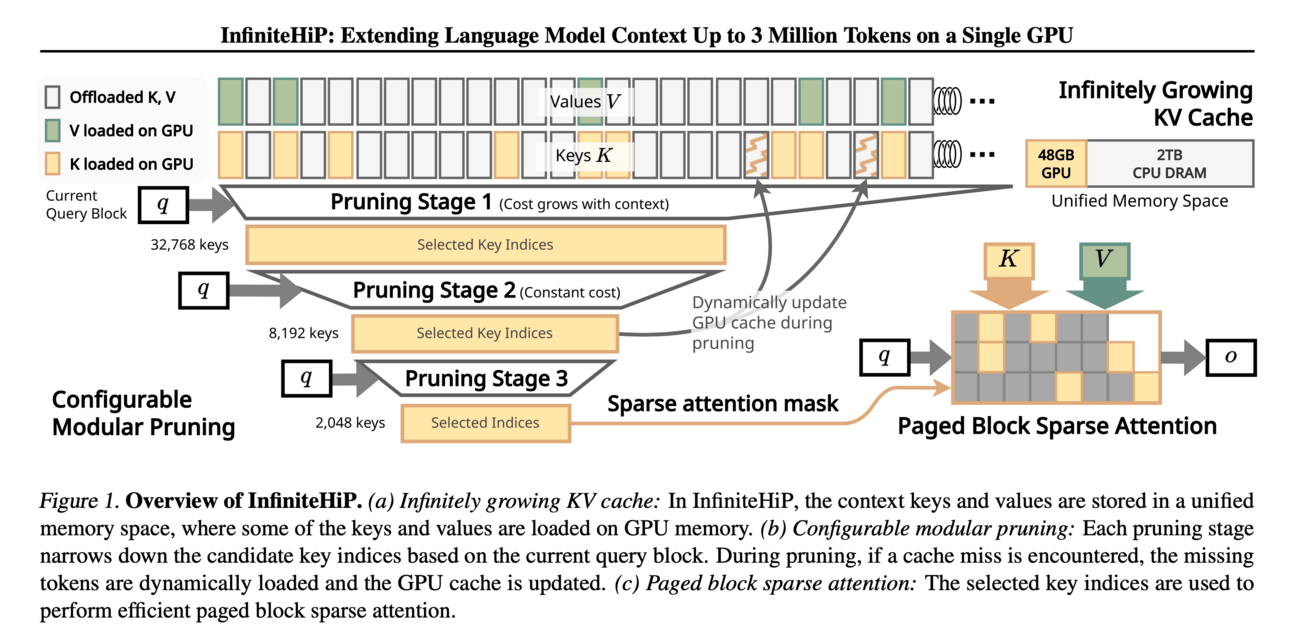

InfiniteHiP: Extending Language Model Context Up to 3 Million Tokens on a Single GPU

The context window (or context length) is the amount of text, measured in tokens, that a model can consider when processing information. Think of the context window as the model's short-term memory. A larger context window allows the model to better understand complex relationships, resolve ambiguities, and maintain coherence over longer passages. However, increasing the context window of an LLM presents significant technical challenges. One of the main bottlenecks is computational cost: in standard self-attention every token attends to every other token, so computation grows quadratically with context length, demanding more powerful hardware and more energy. Another challenge is memory: LLMs store keys and values for every token in the context (the key-value cache), and this cache grows linearly with context length until it exceeds the capacity of available hardware. This research paper tackles both problems.
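A quick back-of-the-envelope calculation shows why memory becomes the wall. The sketch below assumes a roughly Llama-3-8B-like shape (32 layers, 8 key-value heads, head dimension 128, 16-bit values); these numbers are assumptions for illustration and are not taken from the paper.

```python
def kv_cache_bytes(tokens: int, layers: int, kv_heads: int,
                   head_dim: int, bytes_per_value: int = 2) -> int:
    """Memory for keys and values across all layers (the factor 2 is K and V)."""
    return 2 * layers * kv_heads * head_dim * bytes_per_value * tokens

# Assumed model shape: 32 layers, 8 KV heads (grouped-query attention), head_dim 128, fp16.
LAYERS, KV_HEADS, HEAD_DIM = 32, 8, 128

per_token = kv_cache_bytes(1, LAYERS, KV_HEADS, HEAD_DIM)
print(per_token)  # 131072 bytes, i.e. 128 KiB per token

for n in (8_192, 1_000_000, 3_000_000):
    gib = kv_cache_bytes(n, LAYERS, KV_HEADS, HEAD_DIM) / 2**30
    print(f"{n:>9,} tokens -> {gib:7.1f} GiB of KV cache")
```

Under these assumptions the cache needs about 122 GiB at 1 million tokens and about 366 GiB at 3 million tokens, several times the memory of a single 48 GB GPU, before counting the model weights.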

How does it work?

This paper introduces a novel framework called InfiniteHiP, which stands for Infinite Hierarchical Pruning. This framework applies several innovative techniques to extend the context length that can be processed by an LLM to an impressive 3 million tokens, all while using a single GPU.

The first key component of InfiniteHiP is hierarchical token pruning. In simple terms, this technique removes less important tokens from the attention computation, reducing the computational load without significantly impacting output quality. It works coarse to fine: it first scores large chunks of tokens, keeps the most relevant chunks, then splits those into smaller chunks and repeats, so that the most relevant tokens are retained for processing.
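The coarse-to-fine idea can be sketched in plain Python. This is only an illustration of multi-level top-k chunk selection under stated assumptions: scoring each chunk by its middle key is a simplification chosen here, and InfiniteHiP's real GPU kernels choose representatives and operate on blocks differently.

```python
from typing import List, Sequence, Tuple

Chunk = Tuple[int, int]  # half-open index range [start, end)

def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))

def chunk_score(query: Sequence[float], keys: List[Sequence[float]], c: Chunk) -> float:
    # Assumption: score a chunk by its middle key only, not by every key in it.
    mid = (c[0] + c[1] - 1) // 2
    return dot(query, keys[mid])

def hierarchical_select(query: Sequence[float], keys: List[Sequence[float]],
                        k: int, chunk: int) -> List[int]:
    """Coarse-to-fine selection of up to k key indices for sparse attention."""
    chunks = [(s, min(s + chunk, len(keys))) for s in range(0, len(keys), chunk)]
    while True:
        # Keep only the k most promising chunks at this level.
        chunks = sorted(chunks, key=lambda c: chunk_score(query, keys, c),
                        reverse=True)[:k]
        if all(e - s == 1 for s, e in chunks):
            return sorted(s for s, _ in chunks)
        # Split every surviving chunk in half and go one level finer.
        finer: List[Chunk] = []
        for s, e in chunks:
            if e - s == 1:
                finer.append((s, e))
            else:
                m = (s + e) // 2
                finer += [(s, m), (m, e)]
        chunks = finer

# Toy 1-D keys whose attention score peaks at position 10.
keys = [[-float((i - 10) ** 2)] for i in range(16)]
print(hierarchical_select([1.0], keys, k=2, chunk=4))
```

After the first level, at most 2k chunks are scored per level, so the work depends on k and the number of levels rather than on scoring every key. The trade-off is that a relevant token can be missed if its chunk's representative scores poorly.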

Next, the framework adaptively adjusts Rotary Position Embeddings (RoPE). Positional embeddings are a crucial component of many LLMs because they tell the model the order of tokens in a sequence. Traditional (absolute) positional embeddings assign a fixed vector to each position, and these fixed embeddings struggle to generalize to sequences longer than those seen in training. RoPE takes a different approach: it rotates each pair of dimensions in the query and key vectors by an angle proportional to the token's position, so the attention score between two tokens depends on their relative distance rather than their absolute positions. This makes RoPE easier to adapt to longer sequences. By modifying RoPE based on observed attention patterns, InfiniteHiP allows the model to effectively handle much longer sequences than it was originally trained on.
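For readers who want to see the rotation itself, here is a minimal sketch of standard RoPE (the common formulation with base 10000), not InfiniteHiP's adjusted variant. It checks the key property: the query-key score depends only on the distance between positions.

```python
import math
from typing import List, Sequence

def rope(x: Sequence[float], pos: int, base: float = 10000.0) -> List[float]:
    """Rotate each pair (x[2j], x[2j+1]) by the angle pos * base**(-2j/d)."""
    d = len(x)
    assert d % 2 == 0, "RoPE needs an even dimension"
    out: List[float] = []
    for i in range(0, d, 2):  # i = 2j, so base**(-i/d) == base**(-2j/d)
        theta = pos * base ** (-i / d)
        c, s = math.cos(theta), math.sin(theta)
        out += [x[i] * c - x[i + 1] * s, x[i] * s + x[i + 1] * c]
    return out

def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(u * v for u, v in zip(a, b))

q = [0.3, -1.2, 0.8, 0.5]
k = [1.0, 0.4, -0.6, 0.9]

# Same distance (3) at very different absolute positions gives the same score.
s1 = dot(rope(q, 5), rope(k, 2))
s2 = dot(rope(q, 105), rope(k, 102))
print(abs(s1 - s2) < 1e-9)  # True
```

Because rotations preserve vector length, RoPE changes only the angle between queries and keys, which is what lets methods like InfiniteHiP and LongRoPE manipulate positions by adjusting those angles.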

A main contribution of InfiniteHiP is its approach to memory management. LLMs typically store intermediate computations (known as the key-value cache) in GPU memory, which is fast but limited in capacity. InfiniteHiP instead offloads the bulk of this cache to the host's main memory (RAM), which is much larger but slower, and keeps only the parts currently needed on the GPU. This offloading allows extremely long contexts to be processed without running out of GPU memory. The framework is implemented in SGLang, an LLM serving framework, which coordinates token pruning, memory management, and computation efficiently.
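The offloading idea can be illustrated with a toy two-tier cache. This is a hypothetical sketch, not InfiniteHiP's or SGLang's code: every KV block lives in a large "host" tier, and a small "GPU" tier holds recently used blocks with least-recently-used (LRU) eviction. The eviction policy and the class name are assumptions made for illustration.

```python
from collections import OrderedDict
from typing import Dict, List

class OffloadedKVCache:
    """Toy two-tier KV cache: all blocks live in host memory; at most
    `gpu_capacity` recently used blocks are mirrored in a fast 'GPU' tier."""

    def __init__(self, gpu_capacity: int):
        self.gpu_capacity = gpu_capacity
        self.host: Dict[int, List[float]] = {}  # large, slow tier
        self.gpu = OrderedDict()  # small, fast tier: block_id -> KV block, in LRU order
        self.hits = 0
        self.misses = 0

    def put(self, block_id: int, kv: List[float]) -> None:
        self.host[block_id] = kv

    def get(self, block_id: int) -> List[float]:
        if block_id in self.gpu:
            self.gpu.move_to_end(block_id)  # mark as most recently used
            self.hits += 1
        else:
            self.misses += 1  # in a real system: a host-to-GPU copy
            self.gpu[block_id] = self.host[block_id]
            if len(self.gpu) > self.gpu_capacity:
                self.gpu.popitem(last=False)  # evict the least recently used block
        return self.gpu[block_id]

cache = OffloadedKVCache(gpu_capacity=2)
for b in range(4):
    cache.put(b, [float(b)])
for b in [0, 1, 0, 2, 1]:  # block ids requested by (pruned) attention
    cache.get(b)
print(cache.hits, cache.misses, list(cache.gpu))
```

The design works well when token pruning makes attention touch only a small, slowly changing set of blocks: most requests then hit the fast tier, and only misses pay for the slower transfer from host memory.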

Results:

The results of this research are impressive. InfiniteHiP achieves a speedup of 18.95 times in processing attention (a key component of LLMs) for sequences of 1 million tokens compared to baseline methods. It can handle sequences three times longer than previous approaches without losing important information. Remarkably, these improvements are achieved without needing to retrain the model or change its fundamental architecture.

In practical terms, this means that a single GPU with 48GB of memory (specifically, an NVIDIA L40S) can now process up to 3 million tokens at once. This is a massive leap forward, enabling applications that were previously impractical or impossible.

Explaining Context Length Scaling and Bounds for Language Models

Ever wondered how context length affects the performance of language models? This research paper offers some answers!

To understand the significance of this question, it's important to know that context in language models refers to the amount of preceding text the model considers when predicting the next word or performing a task. Traditionally, most models were limited to relatively short contexts, often just a few hundred words. However, recent advancements have pushed this boundary, with some models capable of considering thousands or even millions of words as context.

This increase in context length has led to some interesting and sometimes conflicting observations. Some studies have shown that longer contexts can improve model performance, leading to more accurate predictions and better understanding of complex topics. This is often described in terms of "scaling laws", suggesting that performance improves as context length increases. On the other hand, other research has found that long, irrelevant contexts can actually degrade performance, confusing the model rather than helping it. So which one is true?

This research paper provides a theoretical framework to explain how context length impacts language modeling. It introduces the concept of "Intrinsic Space" as a key to understanding context length effects. Intrinsic Space refers to a lower-dimensional representation of the data that captures the essential features relevant to the language modeling task. Think of it as distilling the most important information from the vast sea of language data. The paper suggests that the impact of context length can be explained by how well the model can learn and represent these intrinsic features. As context length increases, the model has more information to work with, potentially allowing it to better capture these essential features. However, there's a point of diminishing returns, beyond which additional context doesn't provide meaningful new information.

One of the key findings from this research is that there's an optimal context length for any given size of training dataset. This means that simply increasing context length indefinitely isn't always beneficial. Instead, the optimal context length grows with the size of the training data. The paper also establishes theoretical bounds for context length scaling under certain conditions. These bounds provide guidance on how context length should be increased as models and training datasets grow larger.
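To build intuition for why an optimal context length exists and why it grows with data, here is a toy numerical illustration. The loss formula is entirely hypothetical (it is not the paper's bound): one term falls as context grows (less uncertainty about the next token), and another rises with context for a fixed amount of training data `D` (a longer context is harder to learn from limited data).

```python
import math

def toy_lm_loss(context_len: int, D: float) -> float:
    # Hypothetical illustration only, not the paper's formula:
    # 2/sqrt(l) shrinks with more context; l/D grows with context for fixed data D.
    return 1.0 + 2.0 / math.sqrt(context_len) + context_len / D

def optimal_context(D: float, max_len: int = 50_000) -> int:
    """Brute-force the context length that minimizes the toy loss."""
    return min(range(1, max_len + 1), key=lambda l: toy_lm_loss(l, D))

for D in (1e4, 1e6):
    print(f"D = {D:.0e}: optimal context length = {optimal_context(D)}")
```

For this made-up form the minimum sits near D^(2/3), so the optimal context length moves from about 464 to about 10,000 when the data grows 100x. Only the qualitative behaviour (a finite optimum that grows with data) mirrors the paper's finding.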

LongRoPE: Extending LLM Context Window Beyond 2 Million Tokens

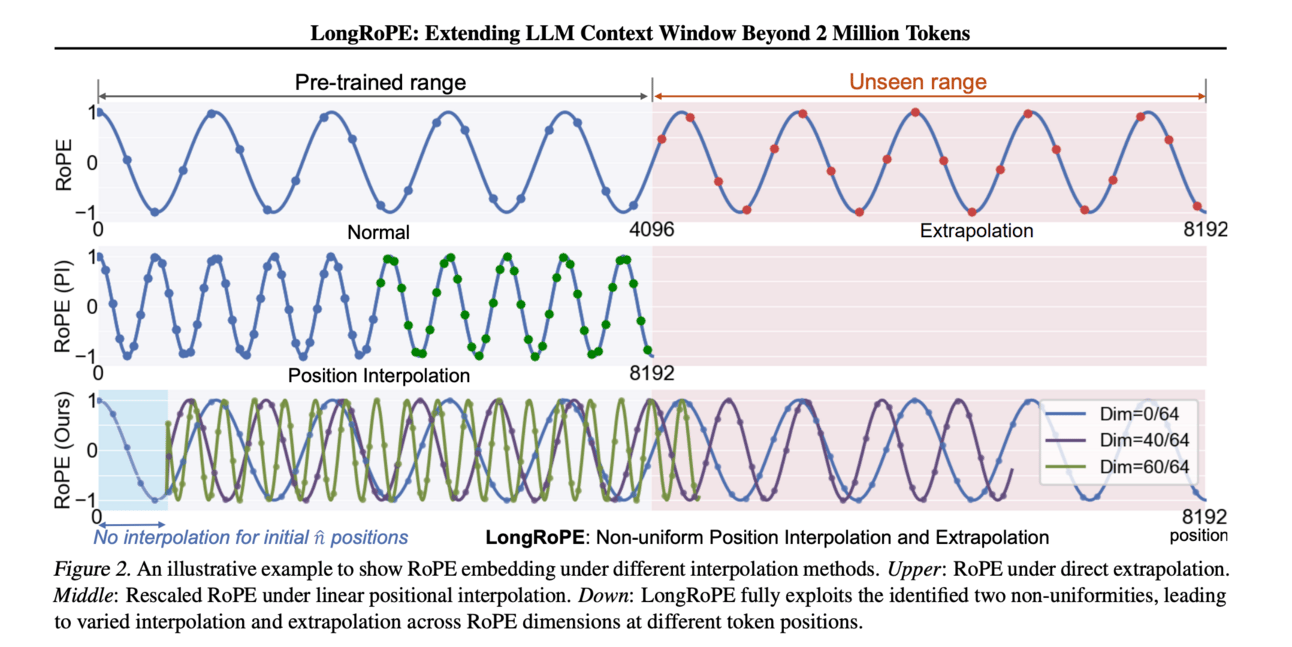

This research paper introduces a novel technique called "LongRoPE" that allows LLMs to effectively handle extremely long context windows – in this case, extending beyond 2 million tokens – while maintaining strong performance and reasonable computational costs.

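LongRoPE modifies the RoPE mechanism (explained in the InfiniteHiP section above) so that a model can handle sequences far longer than those it was trained on. Instead of stretching every position uniformly, it rescales the rotation angles non-uniformly: each RoPE dimension gets its own interpolation factor, found with an efficient search, and the first few token positions are left unscaled. The researchers observed that high-frequency dimensions need little interpolation while low-frequency ones need more. This rescaling does not reduce computation; it keeps the rotation angles at long positions close to the range the model saw during training, which lets the model process much longer sequences without sacrificing performance. The extension is done progressively: the model is first fine-tuned at a 256k context, and a second interpolation then extends it to 2048k tokens.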
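The sketch below illustrates non-uniform positional interpolation: each RoPE dimension pair gets its own scale factor, and positions below `n_hat` are left untouched. In LongRoPE these factors are found by search; the values used here (and the 4,096-token training length) are made-up assumptions for illustration.

```python
from typing import List, Sequence

def rope_angles(pos: int, dim: int, scales: Sequence[float],
                n_hat: int = 0, base: float = 10000.0) -> List[float]:
    """Rotation angle for each RoPE dimension pair at position `pos`.

    scales[j] is the interpolation factor for pair j (1.0 = unchanged);
    positions below n_hat keep their original angles (no interpolation).
    """
    angles = []
    for j in range(dim // 2):
        theta = base ** (-2 * j / dim)  # pair 0 = highest frequency
        factor = 1.0 if pos < n_hat else scales[j]
        angles.append(pos * theta / factor)
    return angles

TRAIN_LEN = 4096                  # assumed original training length
scales = [1.0, 1.5, 3.0, 4.0]     # hypothetical: more interpolation for low frequencies
no_scaling = [1.0] * 4

far = 4 * TRAIN_LEN - 1           # a position 4x beyond training
print(rope_angles(far, 8, no_scaling)[3])   # lowest-frequency angle, unscaled
print(rope_angles(far, 8, scales)[3])       # same angle after interpolation
print(rope_angles(TRAIN_LEN, 8, no_scaling)[3])  # angle at the training length
```

Without scaling, the lowest-frequency angle at the far position is about four times larger than anything seen in training; with a factor of 4 it falls back to roughly the trained range, while the high-frequency pair (factor 1.0) is left as is.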

In simpler terms, LongRoPE is like a zoom lens for the model's attention. When focusing on a small area (short context), the lens provides a detailed view. But when zooming out to see a larger area (long context), some of the fine details become less important, and the lens adjusts to provide a broader, more efficient view.

The important thing about LongRoPE is its simplicity and ease of integration. It can be applied to existing LLMs with minimal modifications.

Results:

Models extended with LongRoPE maintained low perplexity across evaluation lengths ranging from 4k to 2048k tokens. Lower perplexity means the model assigns higher probability to the text that actually follows, so its predictions are more accurate. On tasks requiring long contexts, such as passkey retrieval, LongRoPE achieved over 90% accuracy. Most importantly, models using LongRoPE preserved their original performance on shorter context windows while significantly reducing perplexity on longer contexts.
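Perplexity is simply the exponential of the average negative log-likelihood per token. This minimal sketch assumes you already have the log-probability the model assigned to each correct next token (natural log).

```python
import math
from typing import Sequence

def perplexity(token_logprobs: Sequence[float]) -> float:
    """exp of the average negative log-likelihood per token."""
    nll = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(nll)

# A model that always gives the correct token probability 1/4 is as
# "perplexed" as a uniform guess among 4 options.
print(perplexity([math.log(0.25)] * 10))  # approximately 4.0

# A more confident (and correct) model has lower perplexity.
print(perplexity([math.log(0.9)] * 10))
```

Intuitively, a perplexity of N means the model is, on average, as uncertain as if it were choosing uniformly among N tokens.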