What’s in it today?

SCONE: who needs a bigger vocabulary when you can just contextualize the heck out of your n-grams?

DAAs: Making LLMs agree with humans, one preference at a time (and sometimes only needing 5% of the data to do it!)

CT-KL: Ignoring the KL penalty and focusing on critical tokens to boost LLMs, because sometimes you just need to be a rebel

LIMO: Less is More for Reasoning - Proving you don't need a mountain of data, just a tiny, well-curated molehill!

Don’t have time to read the entire newsletter? No problem! Listen to this fun and engaging podcast covering these research papers in detail.

Scaling Embedding Layers in Language Models

Embedding layers in language models map discrete tokens to continuous vector representations. Scaling embedding layers enhances model performance, but simply increasing the vocabulary size has limitations. Input and output embedding layers are tightly coupled, so a larger vocabulary also raises the computational cost of the output layer's logits calculation. The benefits of scaling the vocabulary also diminish because of the proliferation of low-frequency "tail tokens", which are rarely updated during training and therefore end up with lower-quality embeddings.
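To make the output-layer cost concrete, here is a rough back-of-the-envelope sketch. It assumes the logits are computed as a single dense matrix-vector product, which costs about 2 × hidden size × vocabulary size FLOPs per token. The hidden size and vocabulary sizes are illustrative values, not numbers from the paper.

```python
def output_logit_flops(hidden_dim: int, vocab_size: int) -> int:
    """Approximate FLOPs to compute one token's logits.

    A (hidden_dim x vocab_size) matrix-vector product needs roughly one
    multiply and one add per weight, i.e. 2 * hidden_dim * vocab_size.
    """
    return 2 * hidden_dim * vocab_size


hidden_dim = 2048  # illustrative value, not taken from the paper
for vocab_size in (32_000, 256_000, 2_000_000):
    flops = output_logit_flops(hidden_dim, vocab_size)
    print(f"vocab={vocab_size:>9,}  output-layer FLOPs per token ~ {flops:,}")
```

The cost grows linearly with vocabulary size, whereas an input embedding lookup reads a single row per token no matter how large the table is. That asymmetry is why decoupling the two layers is attractive.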

To address these issues, the paper introduces SCONE (Scalable, Contextualized, Offloaded, N-gram Embedding), a novel approach designed to disentangle the input and output embeddings, enabling effective input embedding scaling with minimal additional inference cost.

Proposed approach

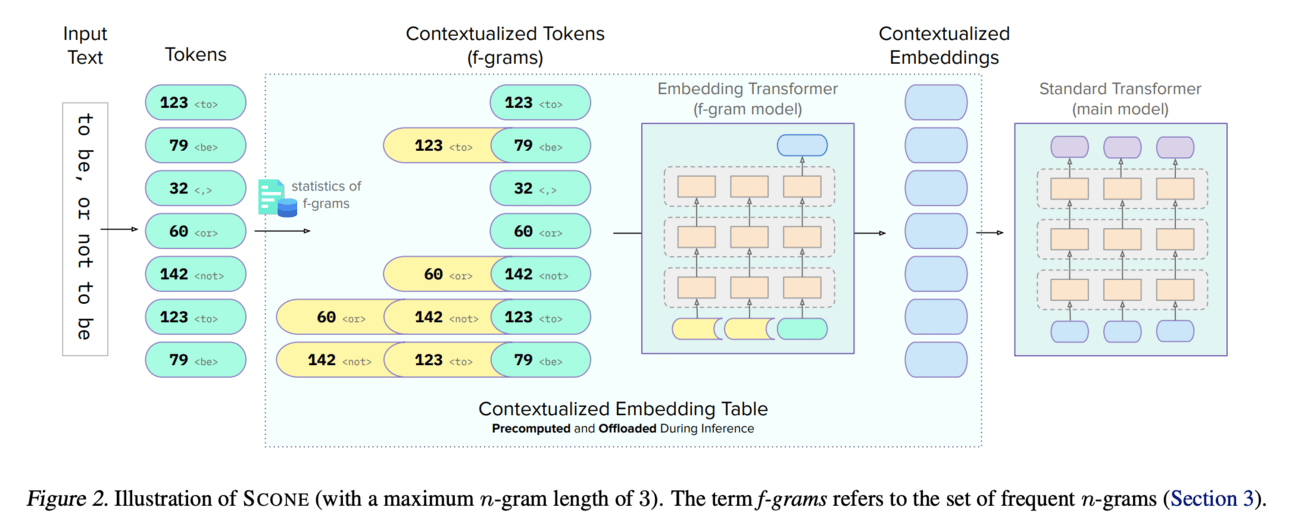

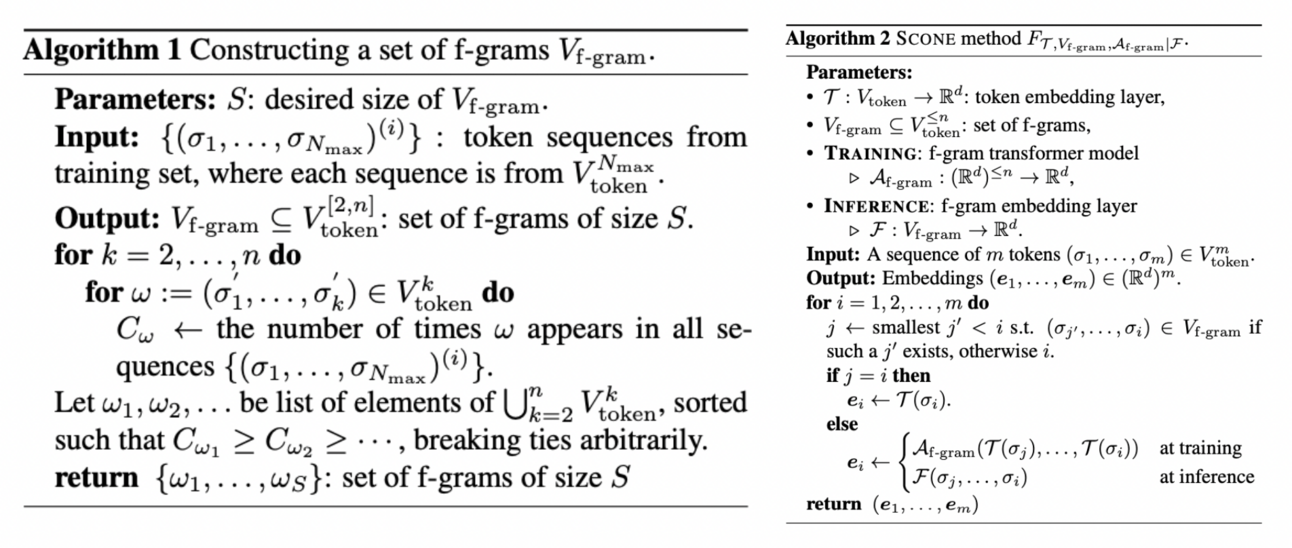

The core of SCONE lies in augmenting the existing token vocabulary with contextualized variants derived from frequent n-grams (f-grams). Instead of directly increasing the vocabulary size, SCONE uses a set of frequently occurring n-grams to provide contextualized representations for each input token. These contextualized tokens are used only for input embedding computation, allowing for a massive augmented input embedding table without increasing the output layer's computational burden. The embeddings for these contextualized tokens are generated by a separate embedding transformer model, referred to as the f-gram model, which is jointly trained with the main language model. This allows for rich contextualized representations without the sparsity issues associated with simply increasing the vocabulary.
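The lookup idea can be illustrated with a small sketch. It assumes one plausible reading of the description above: for each position, take the longest n-gram ending at that token that appears in the f-gram set and use its embedding, otherwise fall back to the ordinary token embedding. The function names, the dictionary-based tables, and the toy values are hypothetical and only for illustration.

```python
from typing import Dict, List, Optional, Sequence, Tuple

Gram = Tuple[int, ...]


def longest_fgram_suffix(
    tokens: Sequence[int], position: int, fgrams: Dict[Gram, List[float]], max_n: int
) -> Optional[Gram]:
    """Return the longest n-gram (2 <= n <= max_n) ending at `position`
    that is present in `fgrams`, or None if there is no match."""
    for n in range(min(max_n, position + 1), 1, -1):
        candidate = tuple(tokens[position - n + 1 : position + 1])
        if candidate in fgrams:
            return candidate
    return None


def contextualized_inputs(
    tokens: Sequence[int],
    token_emb: Dict[int, List[float]],
    fgram_emb: Dict[Gram, List[float]],
    max_n: int,
) -> List[List[float]]:
    """Map each token to an f-gram embedding when one matches,
    otherwise to its plain token embedding."""
    outputs = []
    for i, tok in enumerate(tokens):
        match = longest_fgram_suffix(tokens, i, fgram_emb, max_n)
        outputs.append(fgram_emb[match] if match is not None else token_emb[tok])
    return outputs


# Toy tables (hypothetical values).
token_emb = {1: [0.1], 2: [0.2], 3: [0.3]}
fgram_emb = {(1, 2): [1.2], (1, 2, 3): [12.3]}
print(contextualized_inputs([1, 2, 3, 2], token_emb, fgram_emb, max_n=3))
```

Only the input side changes here: the output vocabulary, and therefore the logits computation, stays the same size.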

The paper proposes an efficient method, analogous to continuing a Byte Pair Encoding (BPE) tokenizer's training, to identify frequent n-grams (f-grams) in the training corpus. This process involves scanning the corpus to count the occurrences of n-grams of length up to a specified maximum (n). To reduce memory usage, a minimum frequency threshold is applied during the counting process.

The SCONE method maps a sequence of tokens to a sequence of embedding vectors. During training, the method uses an f-gram transformer model (A_f-gram) to generate embeddings for contextualized tokens. At inference time, a precomputed f-gram embedding layer (F) maps the f-grams to embedding vectors, allowing efficient retrieval of contextualized embeddings. The embeddings generated by the SCONE method are then passed to a standard transformer model (A_main), referred to as the main model, followed by a prediction head (D). This combination enables next-word prediction with SCONE. The f-gram model and the main model are trained jointly, allowing the f-gram model to learn contextualized representations that are aligned with the main model's objective.
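The f-gram counting step described above can be sketched as follows. This is a simplified illustration, not the paper's procedure: it counts every n-gram of length 2 to `max_n` in memory and applies the frequency threshold afterwards, whereas the paper applies the threshold during counting to save memory. The parameter names `min_count` and `top_k` are assumptions.

```python
from collections import Counter
from typing import Iterable, List, Sequence, Tuple

Gram = Tuple[int, ...]


def count_fgrams(
    corpus: Iterable[Sequence[int]], max_n: int, min_count: int, top_k: int
) -> List[Tuple[Gram, int]]:
    """Count n-grams of length 2..max_n and keep the most frequent ones."""
    counts: Counter = Counter()
    for tokens in corpus:
        for n in range(2, max_n + 1):
            for start in range(len(tokens) - n + 1):
                counts[tuple(tokens[start : start + n])] += 1

    # Drop rare n-grams, then rank by frequency (ties broken by the n-gram itself).
    frequent = [(gram, c) for gram, c in counts.items() if c >= min_count]
    frequent.sort(key=lambda item: (-item[1], item[0]))
    return frequent[:top_k]


toy_corpus = [[1, 2, 3], [1, 2, 3], [1, 2, 4]]  # token ids, illustrative only
print(count_fgrams(toy_corpus, max_n=3, min_count=2, top_k=10))
```

The keys of the resulting list would play the role of the f-gram set whose embeddings are precomputed for inference.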

Results

The paper shows that scaling both the number of cached f-gram embeddings and the size of the f-gram model allows SCONE to outperform a 1.9B parameter baseline model across diverse corpora. For example, with 10M f-grams and a 1.8B f-gram model, a 1.3B main model matches the perplexity of the 1.9B baseline. With 1B f-grams and a 1.8B f-gram model, a 1B main model surpasses the 1.9B baseline. Also, SCONE enables improved performance while using only half the inference-time FLOPS of the baseline model.

The Differences Between Direct Alignment Algorithms are a Blur

Aligning Large Language Models (LLMs) with human values is a challenging task. Methods such as Supervised Fine-Tuning (SFT), Reward Modeling (RM), and Reinforcement Learning from Human Feedback (RLHF) are generally used for it. Recently, Direct Alignment Algorithms (DAAs) have gained traction. They directly optimize the policy based on human preferences, without an explicit reward modeling step. However, the paper notes that the landscape of DAAs is confusing: the algorithms differ in their theoretical underpinnings (pairwise vs. pointwise objectives), implementation details (e.g., using a reference policy or an odds ratio), and whether they require a separate SFT phase (one-stage vs. two-stage). The paper aims to clarify the relationships between these algorithms, determine the importance of the SFT stage, and identify the key factors influencing their alignment quality.

Methodology

The core of the paper's methodology is a systematic empirical comparison of different Direct Alignment Algorithms. The paper investigates the role of the SFT stage, the influence of a tempering factor (β), and the impact of pairwise vs. pointwise preference optimization. To achieve this, the paper focuses primarily on two single-stage DAA methods, ORPO (Odds Ratio Preference Optimization) and ASFT (Aligned Supervised Fine-Tuning), and modifies them in several ways.

Introducing an Explicit SFT Stage: The paper explores whether adding a separate SFT phase before applying ORPO and ASFT improves their performance. This involves training a model using standard supervised fine-tuning on a dataset of instructions and responses, and then using ORPO or ASFT to align the model with human preferences.

Adding a Tempering Factor (β): The paper introduces a scaling parameter β into the ORPO and ASFT loss functions. This parameter, inspired by similar parameters used in other DAAs like DPO, controls the strength of the preference optimization. By varying the value of β, the paper investigates its impact on the alignment quality of ORPO and ASFT.

Theoretical Analysis and Equivalence Proofs: The paper presents theoretical analyses demonstrating relationships between different DAA loss functions. These analyses include theorems proving the equivalence of ASFT to a binary cross-entropy loss, establishing an upper bound of ASFT on ORPO, and demonstrating collinearity of gradients under certain conditions. These theoretical results are used to support and interpret the empirical findings.

Controlled Experiments: The paper conducts a series of carefully controlled experiments to evaluate the performance of different DAA configurations. These experiments involve training and evaluating models on benchmark datasets, using metrics designed to assess alignment quality.

Varying SFT Data Size: The paper examines how the final alignment quality depends on the amount of data used in the SFT stage. This is done to determine whether it's necessary to use the full dataset for SFT, or if a smaller subset is sufficient.
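To illustrate the tempering factor from the list above, here is a minimal sketch of an odds-ratio preference term with β placed inside the sigmoid, in the same spirit as DPO. It is an assumption about how such a term could look, not the paper's exact formulation. The inputs are taken to be length-normalized sequence log-probabilities (so they are negative), and the function names are hypothetical.

```python
import math


def log_odds(logp: float) -> float:
    """log(p / (1 - p)) for p = exp(logp), with logp < 0."""
    return logp - math.log1p(-math.exp(logp))


def tempered_odds_ratio_loss(
    logp_chosen: float, logp_rejected: float, beta: float = 1.0
) -> float:
    """-log(sigmoid(beta * (log-odds of chosen - log-odds of rejected)))."""
    margin = log_odds(logp_chosen) - log_odds(logp_rejected)
    z = -beta * margin
    # Numerically stable softplus(z) = log(1 + exp(z)).
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


for beta in (0.5, 1.0, 2.0):
    loss = tempered_odds_ratio_loss(-0.5, -1.5, beta)
    print(f"beta={beta}: loss={loss:.4f}")
```

With a fixed positive margin, a larger β drives the loss toward zero faster, while a smaller β keeps the preference signal softer. This is the sense in which β controls the strength of the preference optimization.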

Results

The paper shows that incorporating an explicit SFT stage significantly improves the performance of both ORPO and ASFT, and that introducing a tempering factor (β) further enhances their alignment quality. The authors found that tuning β was essential for achieving good performance.

Ignore the KL Penalty! Boosting Exploration on Critical Tokens to Enhance RL Fine-Tuning

The paper presents a novel approach to improving the exploration capabilities of LLMs during reinforcement learning (RL) fine-tuning. The core challenge in RL fine-tuning is balancing exploration and stability. Traditional methods often use a Kullback-Leibler (KL) penalty to prevent the model from deviating too far from its pre-trained behavior, ensuring stability but potentially limiting exploration.

The paper introduces the Critical Token KL (CT-KL) method, which dynamically adjusts the KL penalty during training based on critical tokens identified as crucial decision points. These critical tokens are determined using attention patterns and gradient information, indicating where targeted exploration can significantly impact performance. By reducing or eliminating the KL penalty for these tokens, CT-KL encourages more extensive exploration at these key junctures while maintaining stability elsewhere.

This selective approach contrasts with traditional methods that apply uniform penalties across all tokens or eliminate penalties entirely, which can lead to instability or inefficient learning. The CT-KL method modifies the standard transformer architecture by adjusting its training objective without altering its base structure.

Implementation Details

This method integrates CT-KL into existing RL frameworks for LLMs. The first step is identifying critical tokens within input sequences using attention weights and gradients. After that, the training objective is modified to selectively reduce or remove KL penalties for these identified critical tokens. Models are then trained with this modified objective on various tasks requiring complex reasoning and problem-solving skills.
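The selective penalty described above can be sketched in a few lines. This is a minimal illustration under stated assumptions: the critical-token mask is taken as given (how it is computed is not shown), distributions are plain Python lists over a tiny vocabulary, and the names `kl_coef` and `critical_scale` are hypothetical.

```python
import math
from typing import List, Sequence


def token_kl(p: Sequence[float], q: Sequence[float]) -> float:
    """KL(p || q) for two discrete distributions over the same vocabulary.
    Assumes q has no zero entries where p is positive."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def selective_kl_penalty(
    policy_dists: List[Sequence[float]],
    ref_dists: List[Sequence[float]],
    critical_mask: List[bool],
    kl_coef: float = 0.1,
    critical_scale: float = 0.0,
) -> float:
    """Sum per-token KL terms, scaling the penalty down on critical tokens.

    critical_scale=0.0 removes the penalty on critical tokens entirely;
    critical_scale=1.0 recovers the usual uniform KL penalty.
    """
    total = 0.0
    for p, q, is_critical in zip(policy_dists, ref_dists, critical_mask):
        weight = critical_scale if is_critical else 1.0
        total += weight * token_kl(p, q)
    return kl_coef * total


policy = [[0.5, 0.5], [0.9, 0.1]]
reference = [[0.5, 0.5], [0.5, 0.5]]
print(selective_kl_penalty(policy, reference, [False, False]))  # uniform penalty
print(selective_kl_penalty(policy, reference, [False, True]))   # second token exempt
```

In an RL fine-tuning loop, a term like this would typically be subtracted from the reward or added to the loss, so the policy is free to move on the flagged tokens while staying close to the reference model elsewhere.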

Results

The paper demonstrates significant performance improvements when using CT-KL compared to standard approaches. On mathematical reasoning tasks, models trained with CT-KL showed an improvement of up to 8.2% over those trained with traditional uniform penalties.

LIMO: Less is More for Reasoning

This paper challenges the conventional wisdom that complex reasoning in LLMs requires extensive training data. It tests the hypothesis that if a model has sufficient pre-trained knowledge and is presented with effective "cognitive templates" demonstrating problem-solving processes, it can achieve strong reasoning capabilities with minimal fine-tuning data.

Methodology

The authors carefully curated a small training dataset to act as "cognitive templates" for reasoning. The key is not the quantity of the data, but its quality and how well it demonstrates the reasoning process. The paper gives limited detail on how these training samples were orchestrated, but the reported results suggest the curation was effective.

The general idea for the methodology is:

Leveraging Pre-trained Knowledge: The paper builds upon modern foundation models that have acquired significant mathematical knowledge during pre-training. This pre-trained knowledge serves as the foundation for reasoning.

Minimal Exemplars as Cognitive Templates: The core approach involves creating a small set of training examples that demonstrate how to utilize the pre-trained knowledge to solve complex reasoning problems.

Emphasis on Inference-Time Computation: The approach is designed to encourage extended deliberation and systematic application of pre-trained knowledge during inference. This highlights the importance of having sufficient computational resources at inference time.
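As a loose illustration of the "quality over quantity" idea above, the sketch below filters a candidate pool down to a small budget of high-scoring examples. Everything specific here is an assumption: the `quality` score, the threshold, and the budget are hypothetical placeholders, and the paper's actual curation criteria are not reproduced.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Example:
    question: str
    solution: str   # a worked, step-by-step reasoning chain
    quality: float  # hypothetical score in [0, 1], e.g. from human or model review


def curate(pool: List[Example], budget: int, min_quality: float) -> List[Example]:
    """Keep at most `budget` examples, preferring the highest-quality ones."""
    kept = [ex for ex in pool if ex.quality >= min_quality]
    kept.sort(key=lambda ex: ex.quality, reverse=True)
    return kept[:budget]


pool = [
    Example("q1", "short answer only", 0.2),
    Example("q2", "detailed derivation", 0.9),
    Example("q3", "partial reasoning", 0.6),
    Example("q4", "detailed derivation with checks", 0.95),
]
selected = curate(pool, budget=2, min_quality=0.5)
print([ex.question for ex in selected])
```

The selected examples would then be used for ordinary supervised fine-tuning, with the small set acting as templates for how to reason rather than as a source of new knowledge.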

Results

The paper reports impressive results on mathematical reasoning benchmarks:

AIME Benchmark: LIMO achieves 57.1% accuracy on the AIME benchmark, a significant improvement over previous SFT-based models which achieved only 6.5% accuracy. This demonstrates the ability to achieve strong performance on a challenging reasoning task.

MATH Benchmark: LIMO achieves 94.8% accuracy on the MATH benchmark, also a substantial improvement over previous models (59.2%).

Out-of-Distribution Generalization: Most remarkably, LIMO demonstrates exceptional out-of-distribution generalization, achieving a 40.5% absolute improvement across 10 diverse benchmarks, outperforming models trained on 100x more data. This result challenges the common belief that SFT inherently leads to memorization rather than generalization.