Google I/O summarized

First OpenAI released GPT-4o, and now it's Google's turn to unveil mind-blowing AI capabilities. Here's a quick recap:

Veo: Google DeepMind's most capable video generation model, which generates 1080p videos of around 1 minute in a range of cinematic and visual styles

Project Astra: Google's new project focused on building the AI assistant of the future. Powered by Gemini, it handles audio, text, video, and images shared in real time, much like OpenAI's GPT-4o

Gemini 1.5 Pro context length doubled to 2M tokens!

Imagen 3: A new image generation model

PaliGemma: Google's first open-source vision-language model, available now

Illuminate: You pick a research paper and Google will create a podcast explaining the paper!

🔑 Key takeaways from today's newsletter

Hermes 2 Θ: a new model by Nous Research that combines the best of Hermes 2 Pro and Llama 3 Instruct, surpassing Llama 3 on almost all benchmarks! (model available on Hugging Face)

Sliding-window KV cache quantization can help a 7B model process context lengths of up to 1M tokens on a single 80GB GPU.

Pruning LLMs into smaller domain-specific models while keeping the weights that matter for general capabilities

Reducing hallucinations with the Self-Refinement-Enhanced Knowledge Graph Retrieval (Re-KGR) method

Using low-rank decomposition to reduce model size by 9% with only modest accuracy loss and no retraining

LLMs can handle data manipulation tasks in data lakes!

🔬Core research improving LLMs!

Peking University, Infinigence AI, Shanghai AI Laboratory

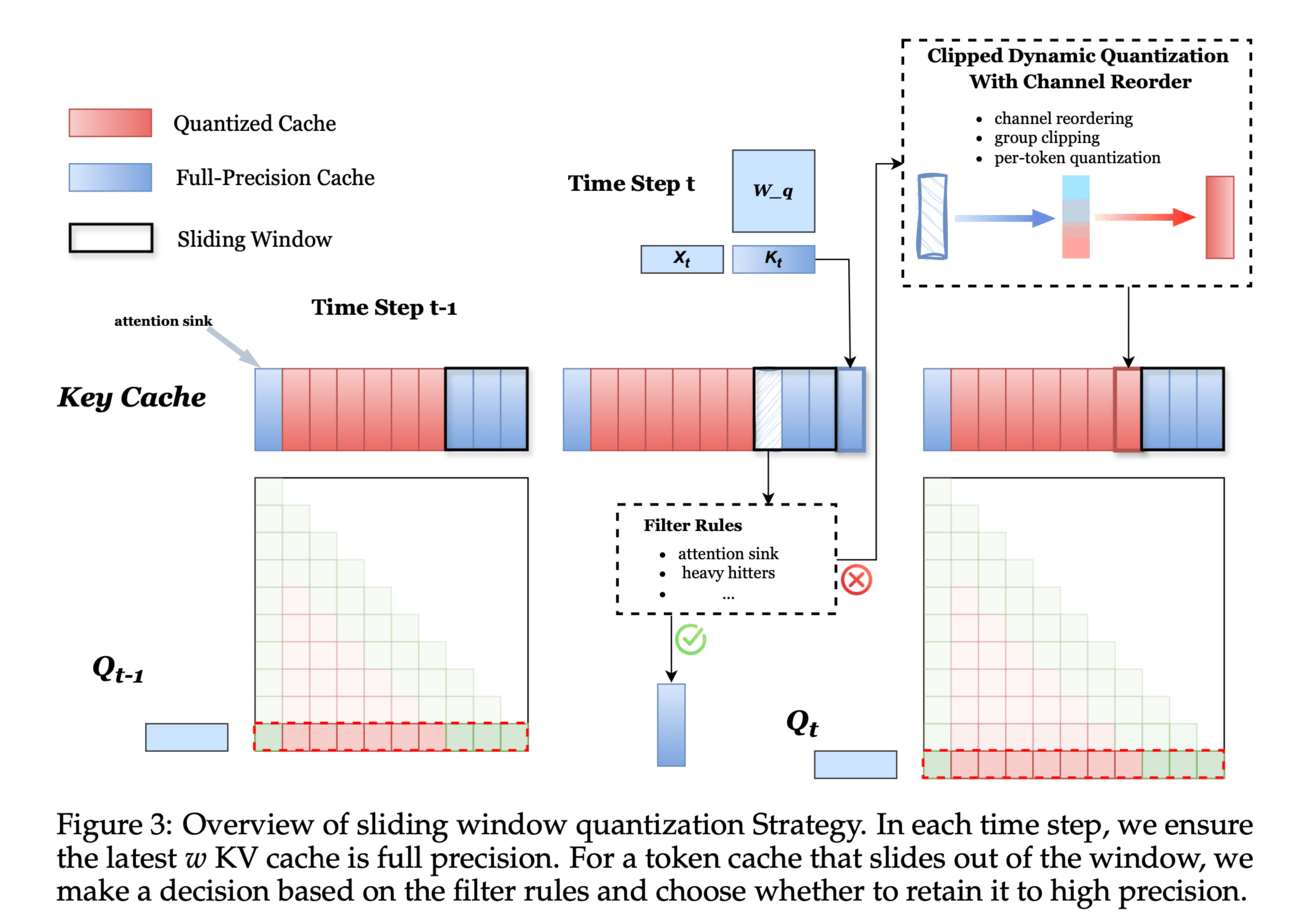

SKVQ: Sliding-window Key and Value Cache Quantization for Large Language Models 🔥

💡Why?: The key-value (KV) cache consumes substantial memory in LLMs and becomes a deployment bottleneck as the context length increases. This limits the ability of LLMs to handle long token sequences, and with it complex tasks such as understanding books or generating lengthy novels.

💻How?: The research paper proposes SKVQ (sliding-window KV cache quantization) to address the challenge of quantizing the KV cache to extremely low bit widths. SKVQ rearranges the channels of the KV cache so that channels within the same quantization group are more similar, and then applies clipped dynamic quantization at the group level. In addition, SKVQ keeps the tokens in the most recent window of the KV cache at high precision, preserving the accuracy of a small but important portion of the cache.
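To make the three ingredients concrete, here is a minimal pure-Python sketch, not the authors' code. Several simplifications are assumptions: channels are reordered by sorting on their value range (a stand-in for the paper's grouping strategy), clipping shrinks each group's range around its midpoint by a hypothetical `clip_ratio`, and the function names (`fake_quantize`, `channel_order`, `skvq_like`) are made up for illustration.

```python
from typing import List

Matrix = List[List[float]]  # KV cache slice: [num_tokens][num_channels]


def fake_quantize(group: List[float], bits: int, clip_ratio: float) -> List[float]:
    """Quantize one group to `bits` bits and return the dequantized values.

    clip_ratio < 1 shrinks the group's range around its midpoint (an assumed,
    simplified clipping rule), so outliers saturate to the edge levels.
    """
    lo, hi = min(group), max(group)
    mid, half = (lo + hi) / 2, (hi - lo) / 2 * clip_ratio
    if half <= 0:
        return list(group)  # constant group: nothing to quantize
    lo, hi = mid - half, mid + half
    levels = 2 ** bits - 1
    step = (hi - lo) / levels
    out = []
    for v in group:
        q = min(levels, max(0, round((v - lo) / step)))  # integer code in [0, levels]
        out.append(lo + q * step)
    return out


def channel_order(cache: Matrix) -> List[int]:
    """Sort channel indices by value range so channels with similar ranges share a group."""
    def channel_range(c: int) -> float:
        col = [row[c] for row in cache]
        return max(col) - min(col)
    return sorted(range(len(cache[0])), key=channel_range)


def skvq_like(cache: Matrix, bits: int = 2, group_size: int = 4,
              clip_ratio: float = 0.95, window: int = 2) -> Matrix:
    order = channel_order(cache)
    n = len(cache)
    result = []
    for t, row in enumerate(cache):
        if t >= n - window:
            result.append(list(row))  # sliding window: most recent tokens stay full precision
            continue
        permuted = [row[c] for c in order]
        deq: List[float] = []
        for g in range(0, len(permuted), group_size):
            deq.extend(fake_quantize(permuted[g:g + group_size], bits, clip_ratio))
        restored = [0.0] * len(row)
        for pos, c in enumerate(order):
            restored[c] = deq[pos]  # undo the channel permutation
        result.append(restored)
    return result
```

With `bits=2`, each group of older tokens collapses to at most four distinct values, while the last `window` rows come back untouched.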

📊Results: The research paper shows that SKVQ achieves high compression ratios while maintaining accuracy, outperforming previous quantization approaches. It can quantize the KV cache to 2-bit keys and 1.5-bit values with minimal loss of accuracy. As a result, a 7B model can potentially process context lengths of up to 1M tokens on an 80GB GPU, with up to 7 times faster decoding.
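A back-of-the-envelope calculation shows why the 1M-token figure is plausible. It assumes a Llama-2-7B-like shape (32 layers, hidden size 4096) and reads "1M" as 2^20 tokens. These are our assumptions, not numbers from the paper. It also ignores quantization metadata (scales, zero points), the full-precision window, and activations.

```python
# Assumed Llama-2-7B-like shape (not taken from the paper).
NUM_LAYERS = 32
HIDDEN_SIZE = 4096          # 32 heads x 128 head dim
CONTEXT_TOKENS = 2 ** 20    # "1M" tokens
GIB = 2 ** 30


def kv_cache_gib(tokens: int, key_bits: float, value_bits: float) -> float:
    """KV cache size in GiB: one key and one value vector per layer per token."""
    bits_per_token = NUM_LAYERS * HIDDEN_SIZE * (key_bits + value_bits)
    return tokens * bits_per_token / 8 / GIB


fp16_gib = kv_cache_gib(CONTEXT_TOKENS, 16, 16)
skvq_gib = kv_cache_gib(CONTEXT_TOKENS, 2, 1.5)
weights_gib = 7e9 * 2 / GIB  # 7B parameters stored in fp16

print(f"fp16 KV cache: {fp16_gib:.1f} GiB")                # 512.0 GiB
print(f"2/1.5-bit KV cache: {skvq_gib:.1f} GiB")           # 56.0 GiB
print(f"weights + KV cache: {weights_gib + skvq_gib:.1f} GiB")  # 69.0 GiB
```

In fp16 the cache alone would need about 512 GiB. At 2-bit keys and 1.5-bit values it drops to about 56 GiB, and together with the fp16 weights that fits under 80 GB.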

NEC labs, The Pennsylvania State University

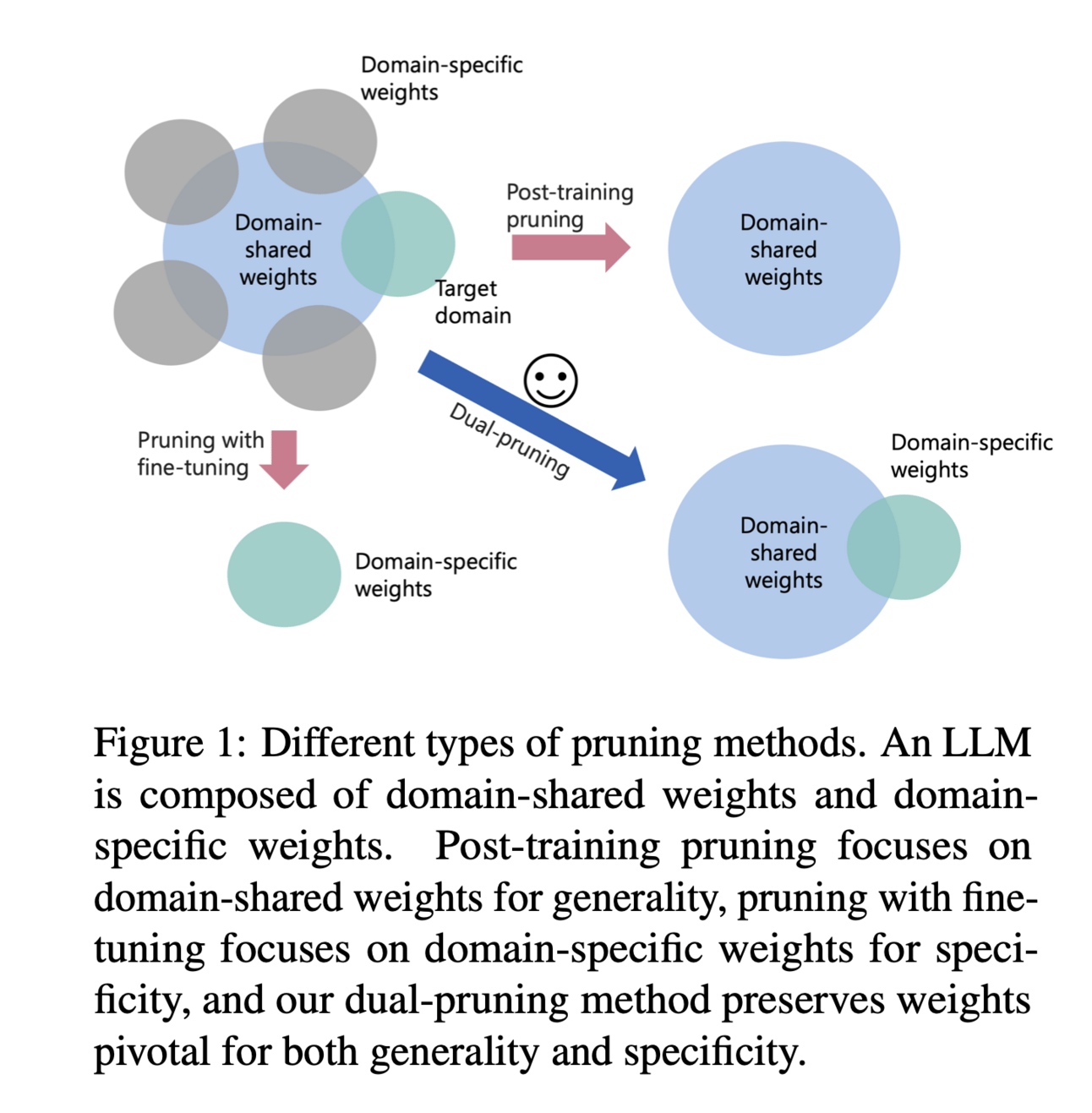

Pruning as a Domain-specific LLM Extractor 🔥

💡Why?: LLMs are expensive to deploy in practical applications because of their large size.

💻How?: The research paper proposes D-Pruner, an unstructured dual-pruning methodology that extracts a compressed, domain-specific LLM. It identifies the weights that are pivotal both for general capabilities, such as linguistic ability and multi-task solving, and for domain-specific knowledge, and prunes the rest. This happens in two steps. First, general weight importance is assessed on an open-domain calibration dataset. Second, that importance is used to refine the training loss so the model preserves its generality while fitting a specific domain.
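The core idea of scoring weights against two calibration sets and pruning the least important ones can be sketched as below. This is a simplified stand-in, not D-Pruner itself. It uses a first-order Taylor importance score `|w * dL/dw|` and blends the general and domain scores with a hypothetical mixing weight `alpha`, whereas the paper folds general importance into a refined training loss. The gradients here are made-up inputs.

```python
from typing import List


def taylor_importance(weights: List[float], grads: List[float]) -> List[float]:
    """First-order estimate of how much the loss changes if each weight is zeroed: |w * dL/dw|."""
    return [abs(w * g) for w, g in zip(weights, grads)]


def dual_prune(weights: List[float], general_grads: List[float], domain_grads: List[float],
               sparsity: float = 0.5, alpha: float = 0.5) -> List[float]:
    """Zero out the `sparsity` fraction of weights with the lowest blended importance.

    general_grads: gradients on an open-domain calibration set (general capability)
    domain_grads:  gradients on a domain-specific calibration set (domain knowledge)
    alpha:         hypothetical mixing weight between the two importance scores
    """
    general = taylor_importance(weights, general_grads)
    domain = taylor_importance(weights, domain_grads)
    score = [alpha * g + (1 - alpha) * d for g, d in zip(general, domain)]
    num_pruned = int(len(weights) * sparsity)
    pruned = set(sorted(range(len(weights)), key=lambda i: score[i])[:num_pruned])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


# Weight 3 is tiny and barely matters in general, but it matters for the domain, so it survives.
print(dual_prune([0.5, -1.0, 2.0, 0.1], [1, 1, 1, 1], [0, 0, 0, 10]))
# [0.0, 0.0, 2.0, 0.1]
```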

📊Results: The paper shows that even at 50% sparsity, the pruned models achieve almost the same ROUGE scores.

Huawei, Imperial College London

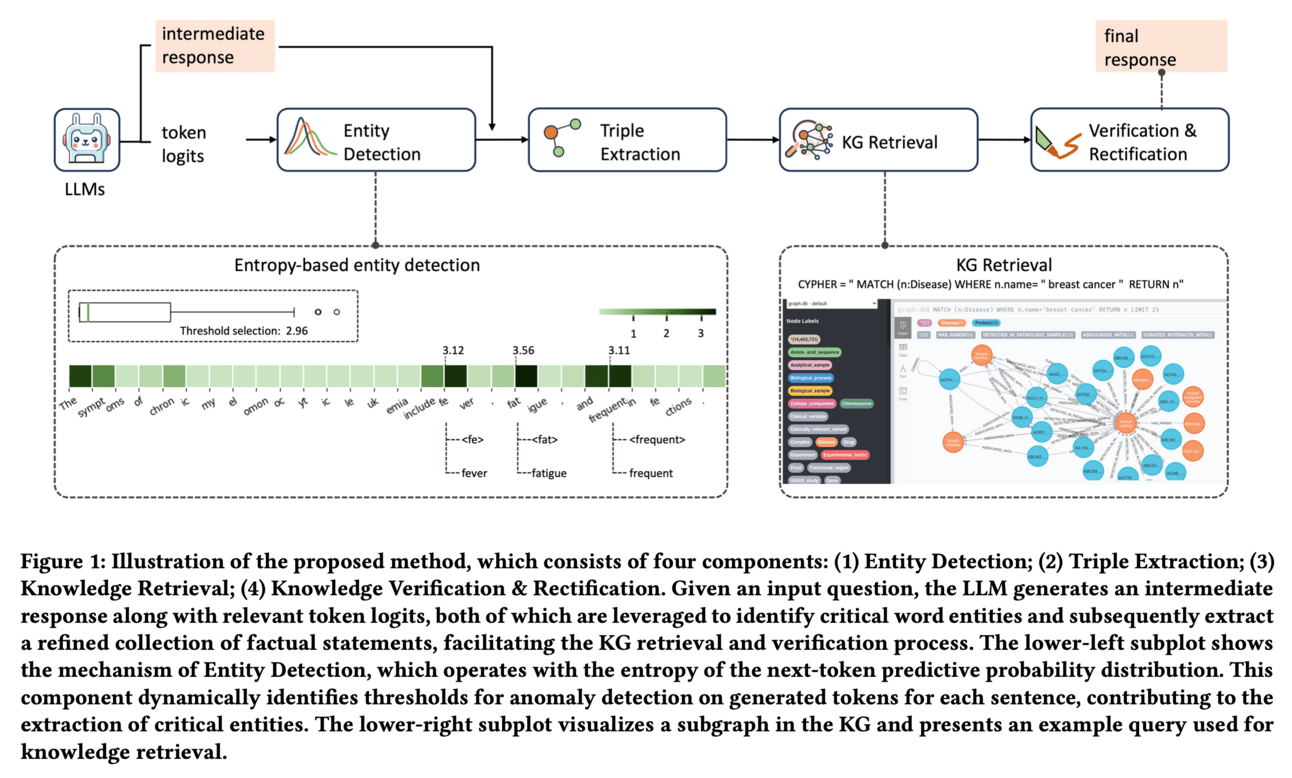

Mitigating Hallucinations in Large Language Models via Self-Refinement-Enhanced Knowledge Retrieval 🔥

💡Why?: LLMs hallucinate, which is especially risky in the healthcare field.

💻How?: The research paper proposes Self-Refinement-Enhanced Knowledge Graph Retrieval (Re-KGR) to improve the factuality of LLM responses in the medical field with less retrieval effort. It uses next-token predictive probability distributions across different model layers to identify tokens with a high potential for hallucination. The method then retrieves knowledge triples relevant to those tokens, which reduces the need for multiple rounds of verification. Finally, a post-processing stage corrects inaccurate content using the retrieved knowledge, improving the truthfulness of the generated responses.
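The first step, flagging tokens at risk of hallucination from their predictive distributions, can be approximated with a simple heuristic. A token is flagged when the final layer is uncertain (high entropy) or when an earlier layer disagrees strongly with the final layer (high Jensen-Shannon divergence). This is a hypothetical heuristic in the spirit of Re-KGR, not the paper's exact criterion. The thresholds and the function name `flag_risky_tokens` are assumptions, and retrieval and correction are left out.

```python
import math
from typing import List


def entropy(p: List[float]) -> float:
    return -sum(x * math.log(x) for x in p if x > 0)


def js_divergence(p: List[float], q: List[float]) -> float:
    """Symmetric divergence between two distributions over the same vocabulary."""
    m = [(a + b) / 2 for a, b in zip(p, q)]

    def kl(a: List[float], b: List[float]) -> float:
        return sum(x * math.log(x / y) for x, y in zip(a, b) if x > 0)

    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


def flag_risky_tokens(tokens: List[str], final_dists: List[List[float]],
                      early_dists: List[List[float]],
                      entropy_thr: float = 0.8, jsd_thr: float = 0.2) -> List[str]:
    """Return tokens whose final-layer distribution is uncertain or disagrees with an early layer.

    Thresholds are hypothetical; flagged tokens would then drive knowledge-triple retrieval.
    """
    flagged = []
    for tok, final, early in zip(tokens, final_dists, early_dists):
        if entropy(final) > entropy_thr or js_divergence(early, final) > jsd_thr:
            flagged.append(tok)
    return flagged
```

Only the flagged tokens would trigger retrieval, which is where the savings in retrieval effort come from.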

Nvidia, University of California

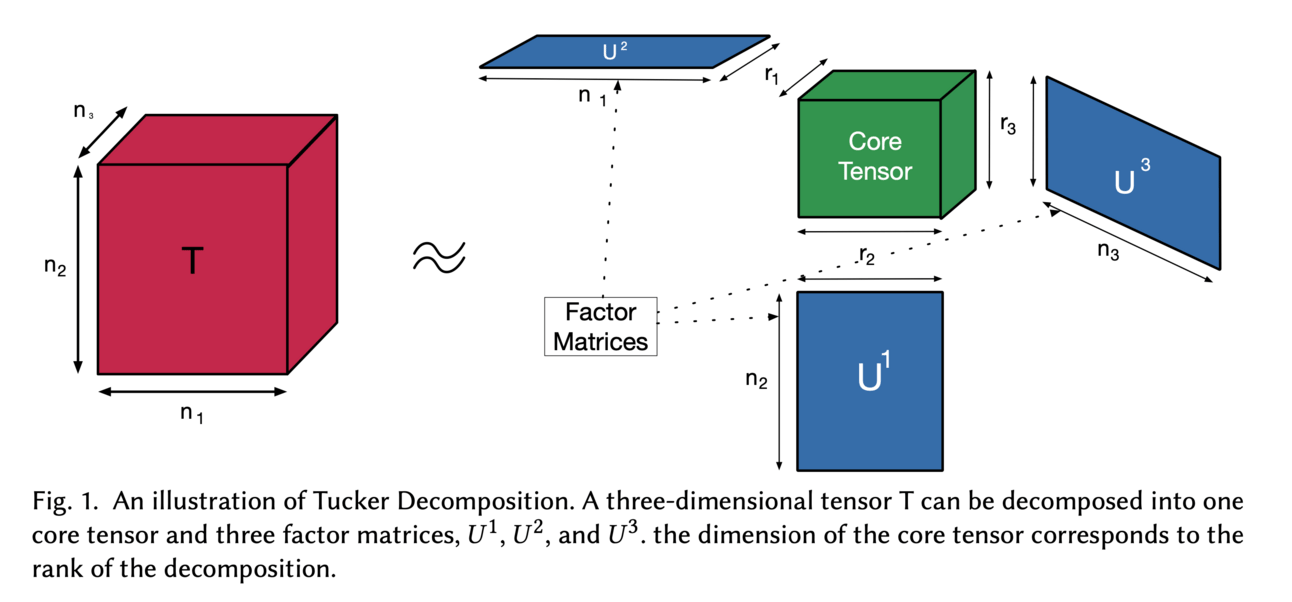

Characterizing the Accuracy - Efficiency Trade-off of Low-rank Decomposition in Language Models 🔥

💡Why?: LLMs are growing bigger with each release! This paper explores low-rank decomposition as a way to reduce the memory footprint and memory traffic of LLMs.

💻How?: The research paper uses low-rank decomposition, specifically Tucker decomposition, to reduce the size of LLMs while maintaining their accuracy. Instead of storing each weight tensor in full, the method factorizes it into a small core tensor and factor matrices that keep only the dominant low-rank components. This cuts the number of parameters and thus the memory footprint. The result is a smaller, more efficient model that can still handle a wide range of tasks.
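For a 2-D weight matrix, a Tucker decomposition reduces to a truncated SVD-style factorization `W ≈ U @ V`. The sketch below uses that matrix-only simplification instead of a full tensor Tucker decomposition. It computes each rank-1 component with power iteration plus deflation, in pure Python, and the function names are hypothetical. It shows where the savings come from: an m x n matrix needs `m*n` parameters, while a rank-r factorization needs `r*(m+n)`.

```python
import math
import random
from typing import List

Mat = List[List[float]]


def matvec(A: Mat, x: List[float]) -> List[float]:
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def low_rank_factors(A: Mat, rank: int, iters: int = 100, seed: int = 0):
    """Factor A (m x n) into U (m x rank) and V (rank x n) so that U @ V approximates A.

    Each component comes from power iteration on R^T R (top right singular vector of
    the residual R). That component is then subtracted from R (deflation).
    """
    rng = random.Random(seed)
    residual = [row[:] for row in A]
    us, vs = [], []
    for _ in range(rank):
        residual_t = [list(col) for col in zip(*residual)]
        v = [rng.gauss(0.0, 1.0) for _ in range(len(A[0]))]
        for _ in range(iters):
            v = matvec(residual_t, matvec(residual, v))  # v <- R^T R v
            norm = math.sqrt(sum(x * x for x in v))
            if norm == 0.0:
                break
            v = [x / norm for x in v]
        u = matvec(residual, v)  # u = sigma * left singular vector
        us.append(u)
        vs.append(v)
        residual = [[r - ui * vj for r, vj in zip(row, v)] for row, ui in zip(residual, u)]
    U = [[u[i] for u in us] for i in range(len(A))]
    return U, vs


def reconstruct(U: Mat, V: Mat) -> Mat:
    return [[sum(U[i][k] * V[k][j] for k in range(len(V))) for j in range(len(V[0]))]
            for i in range(len(U))]


def param_counts(m: int, n: int, rank: int):
    """Parameters in a dense m x n matrix vs. its rank-r factorization."""
    return m * n, rank * (m + n)


print(param_counts(4096, 4096, 512))  # (16777216, 4194304)
```

Discarding the smaller singular components is what trades accuracy for size. This toy does not reproduce the paper's 9% figure, which depends on which layers are decomposed and at what rank.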

📊Results: The research paper shows that model size can be reduced by 9% with modest accuracy drops of 4 to 10 percentage points, depending on the difficulty of the benchmark, without any retraining to recover accuracy after decomposition.

** Please read https://linear-transformers.com/ before reading this research paper. It assumes you are familiar with the concept of a "linear transformer".

💡Why?: Pre-training linear transformers from scratch requires significant data and compute investments, so the paper looks for a cost-effective alternative.

💻How?: The research paper proposes "Scalable UPtraining for Recurrent Attention" (SUPRA), which uptrains existing large pre-trained transformers into Recurrent Neural Networks (RNNs) on a modest compute budget. It relies on a linearization technique that builds on the strong pre-training data and performance of existing transformer LLMs while requiring only 5% of the training cost.
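Why does linearizing attention turn a transformer into an RNN? Once softmax is replaced by a kernel feature map, causal attention can be computed with a fixed-size running state instead of attending over all past tokens. The sketch below uses the classic linear-transformer formulation with an `elu(x) + 1` feature map. This is a generic, swapped-in formulation, not SUPRA's exact one. It checks that the parallel (transformer-style) and recurrent (RNN-style) forms give the same outputs.

```python
import math
from typing import List

Mat = List[List[float]]


def phi(x: List[float]) -> List[float]:
    """elu(x) + 1 feature map: keeps features positive so attention weights stay positive."""
    return [xi + 1 if xi > 0 else math.exp(xi) for xi in x]


def dot(a: List[float], b: List[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def causal_linear_attention_parallel(Q: Mat, K: Mat, V: Mat) -> Mat:
    """Transformer view: each position attends over all earlier positions."""
    out = []
    for t in range(len(Q)):
        fq = phi(Q[t])
        weights = [dot(fq, phi(K[s])) for s in range(t + 1)]
        total = sum(weights)
        out.append([sum(w * V[s][j] for s, w in enumerate(weights)) / total
                    for j in range(len(V[0]))])
    return out


def causal_linear_attention_recurrent(Q: Mat, K: Mat, V: Mat) -> Mat:
    """RNN view: a fixed-size state (S, z) is updated once per token."""
    d_k, d_v = len(K[0]), len(V[0])
    S = [[0.0] * d_v for _ in range(d_k)]  # running sum of phi(k) v^T
    z = [0.0] * d_k                        # running sum of phi(k), used for normalization
    out = []
    for q, k, v in zip(Q, K, V):
        fk = phi(k)
        for i in range(d_k):
            z[i] += fk[i]
            for j in range(d_v):
                S[i][j] += fk[i] * v[j]
        fq = phi(q)
        denom = dot(fq, z)
        out.append([sum(fq[i] * S[i][j] for i in range(d_k)) / denom for j in range(d_v)])
    return out
```

The recurrent form's memory does not grow with sequence length, which is the appeal of converting a pre-trained transformer instead of training an RNN from scratch.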

Improbable AI Lab, MIT, MIT-IBM Watson AI Labs

Value Augmented Sampling for Language Model Alignment and Personalization

💡Why?: Adapting LLMs to different human preferences, teaching them new skills, and unlearning harmful behaviour.

💻How?: The research paper proposes a new framework called Value Augmented Sampling (VAS) to solve this problem. VAS is a reinforcement learning method that maximizes different reward functions using data sampled from only the initial, frozen LLM. This means that the policy and value function do not need to be co-trained, making the optimization stable and efficient. VAS also allows for the composition of multiple rewards, giving more control over the personalized alignment of the LLM.
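At decoding time, VAS steers a frozen model. As we understand it, it adds a scaled value estimate to the base model's logits for a set of top-k candidate tokens. Composing rewards amounts to a weighted sum of several value functions. The sketch below assumes that rule. The value estimates are plain hypothetical inputs standing in for trained value networks, and `beta`, `top_k`, and the function names are illustrative.

```python
import math
from typing import Dict, List


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def vas_next_token_probs(base_logits: List[float], value_estimates: List[List[float]],
                         weights: List[float], beta: float = 1.0,
                         top_k: int = 3) -> Dict[int, float]:
    """Reweight the frozen model's top-k tokens by a weighted sum of value estimates.

    base_logits:     logits from the frozen LLM, indexed by token id
    value_estimates: one list per reward (hypothetical value functions), indexed by token id
    weights:         how much each reward contributes (reward composition)
    """
    candidates = sorted(range(len(base_logits)), key=lambda i: base_logits[i], reverse=True)[:top_k]
    adjusted = []
    for tok in candidates:
        value = sum(w * v[tok] for w, v in zip(weights, value_estimates))
        adjusted.append(base_logits[tok] + beta * value)  # frozen logits are never updated
    return dict(zip(candidates, softmax(adjusted)))
```

Only the value functions are trained and the base model is just queried, so the policy and value function never need to be optimized together.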

📊Results: The research paper shows that VAS outperforms established baselines such as PPO and DPO on standard benchmarks and achieves results comparable to Best-of-128 at a lower inference cost, so it aligns LLMs effectively while being more computationally efficient. Furthermore, unlike existing RL methods, VAS does not require access to the weights of the pre-trained LLM. It can therefore also adapt models whose weights are not publicly available and that are only accessible through APIs, like ChatGPT!

National Taiwan University

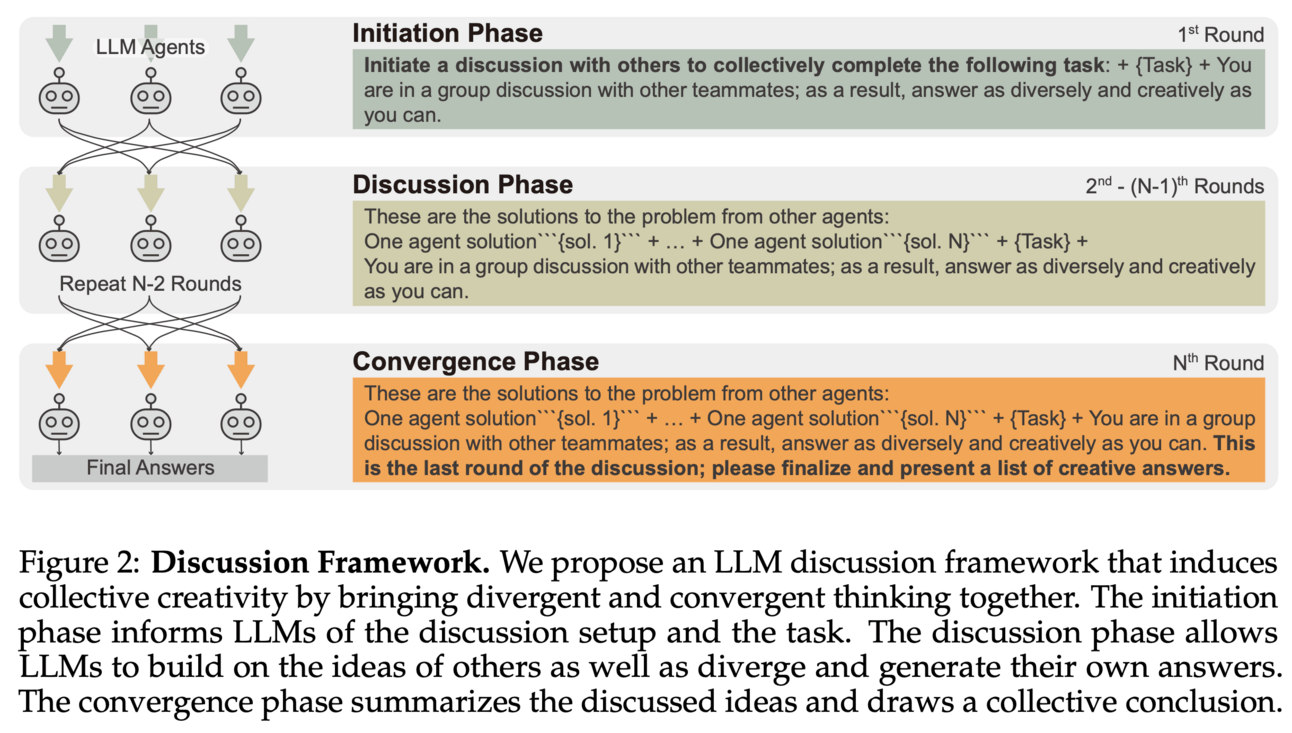

LLM Discussion: Enhancing the Creativity of Large Language Models via Discussion Framework and Role-Play

💡Why?: The paper addresses the issue of limited creativity in LLMs when generating responses to open-ended questions.

💻How?: The paper proposes a three-phase discussion framework called LLM Discussion, which imitates the human process of inducing collective creativity through engaging discussions with diverse participants. This framework encourages vigorous and diverging idea exchanges, as well as ensures convergence to creative answers. It also incorporates a role-playing technique by assigning distinct roles to LLMs to combat the homogeneity of individual models. This approach allows for a more diverse and creative output from LLMs.
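The three-phase flow with role-play can be sketched as a simple orchestration loop. The phase names follow the description above (diverge through idea exchange, then converge), but the prompt wording, the number of discussion rounds, and the single summarizing call at the end are our assumptions. `llm` is any hypothetical callable that maps a prompt string to a completion string.

```python
from typing import Callable, List

LLM = Callable[[str], str]  # hypothetical: prompt in, completion out


def llm_discussion(question: str, roles: List[str], llm: LLM, rounds: int = 2) -> str:
    transcript: List[str] = []
    # Phase 1 (initiation): each role-played participant proposes ideas independently.
    for role in roles:
        reply = llm(f"Role: {role}. Propose creative answers to: {question}")
        transcript.append(f"{role}: {reply}")
    # Phase 2 (discussion): participants read the transcript and build on or challenge ideas.
    for _ in range(rounds):
        for role in roles:
            context = "\n".join(transcript)
            reply = llm(f"Role: {role}. Discussion so far:\n{context}\n"
                        "Build on or challenge these ideas with new ones.")
            transcript.append(f"{role}: {reply}")
    # Phase 3 (convergence): one final call condenses the discussion into answers.
    context = "\n".join(transcript)
    return llm(f"Discussion:\n{context}\nSummarize the most creative final answers to: {question}")
```

Distinct roles (for example, an artist and an engineer) are what counter the homogeneity of asking one model the same question several times.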

📊Results: The paper evaluates the efficacy of the proposed framework through various creativity tests and shows that it outperforms both single-LLM approaches and existing multi-LLM frameworks on creativity metrics. This improvement is demonstrated through both LLM-based evaluation and a human study.

Enjoying this newsletter? 😊

Please forward it to a friend or colleague. It’ll help keep content free.

Please share: https://llm.beehiiv.com/subscribe

🧪 LLM evaluations

This research paper evaluates how well LLMs hold up against various attacks, to assess whether they can be used in critical infrastructure such as smart grids. The paper, Risks of Practicing Large Language Models in Smart Grid: Threat Modeling and Validation, systematically evaluates the vulnerabilities of LLMs and identifies two major types of attacks relevant to smart grid applications, along with corresponding threat models that help explain these attacks. To validate these findings, the authors use popular LLMs and real smart grid data to show that attackers can inject bad data into, and retrieve domain knowledge from, LLMs used in smart grid scenarios.

This research paper highlights how incomplete the information in LLM model cards and data cards often is. The paper, Automatic Generation of Model and Data Cards: A Step Towards Responsible AI, introduces CardBench, a dataset aggregated from over 4.8k model cards and 1.4k data cards, and develops CardGen, a generation pipeline built around a two-step retrieval process. The approach aims to improve the completeness, objectivity, and faithfulness of generated model and data cards, promoting responsible AI documentation practices and better accountability and traceability.
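A two-step retrieval typically works coarse-to-fine: first pick the most relevant source documents, then the most relevant passages inside them. Those passages ground the text generated for each card field. The sketch below is a generic illustration under that assumption, not CardGen's actual pipeline. The word-overlap score is a toy relevance measure, and the document names and contents are hypothetical.

```python
from typing import Dict, List


def overlap(query: str, text: str) -> int:
    """Number of distinct query words that also appear in the text (toy relevance score)."""
    return len(set(query.lower().split()) & set(text.lower().split()))


def two_step_retrieve(question: str, documents: Dict[str, List[str]],
                      top_docs: int = 1, top_passages: int = 2) -> List[str]:
    """Step 1: rank whole documents. Step 2: rank passages inside the top documents."""
    ranked_docs = sorted(documents,
                         key=lambda name: overlap(question, " ".join(documents[name])),
                         reverse=True)
    candidates = [p for name in ranked_docs[:top_docs] for p in documents[name]]
    return sorted(candidates, key=lambda p: overlap(question, p), reverse=True)[:top_passages]


docs = {  # hypothetical sources for one model card
    "paper": ["we train on wikipedia and books", "the model has 7b parameters"],
    "readme": ["install with pip", "license is mit"],
}
print(two_step_retrieve("what license is used", docs, top_docs=1, top_passages=1))
```

Narrowing to a few documents first keeps the second, finer-grained ranking cheap and focused.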

This research paper measures how well LLMs capture structured semantics, that is, whether they can understand and represent language in a structured way. This matters for their interpretability and potential biases. The paper, Potential and Limitations of LLMs in Capturing Structured Semantics: A Case Study on SRL, uses Semantic Role Labeling (SRL) as a fundamental task for evaluating this ability. Through prompting, which gives LLMs specific instructions or examples to guide their output, the authors build a few-shot SRL parser called PromptSRL that lets LLMs map natural language to explicit semantic structures. This offers a more interpretable window into the inner workings of LLMs and their limitations.

LLMs struggle with tasks that require extensive knowledge, which highlights the need to supplement them with non-parametric knowledge. The paper Prompting Large Language Models with Knowledge Graphs for Question Answering Involving Long-tail Facts analyzes the effects of different types of non-parametric knowledge, such as textual passages and knowledge graphs (KGs). It also creates LTGen, a benchmark built with a fully automatic pipeline whose questions require knowledge of long-tail facts. LLMs are then evaluated on this benchmark in different knowledge settings. The results suggest that prompting LLMs with KG triples can be more effective than passage-based prompting with a state-of-the-art retriever, and that prompting with both KG triples and documents can reduce hallucinations in the generated content.
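Prompting with KG triples usually means retrieving the (subject, relation, object) facts connected to the question's entity and placing them in the prompt as context. The minimal sketch below assumes exact-match entity lookup and an illustrative prompt template, neither taken from the paper. The entities are fictional, chosen to mimic long-tail facts a model is unlikely to have memorized.

```python
from typing import List, Tuple

Triple = Tuple[str, str, str]  # (subject, relation, object)


def retrieve_triples(entity: str, kg: List[Triple]) -> List[Triple]:
    """Toy retrieval: every triple whose subject or object is the question's entity."""
    return [t for t in kg if entity in (t[0], t[2])]


def kg_prompt(question: str, entity: str, kg: List[Triple]) -> str:
    facts = "\n".join(f"({s}, {r}, {o})" for s, r, o in retrieve_triples(entity, kg))
    return f"Use these facts if relevant:\n{facts}\n\nQuestion: {question}\nAnswer:"


kg = [  # fictional long-tail facts
    ("Zorblat River", "flows through", "Quenland"),
    ("Quenland", "capital", "Vesk"),
    ("Mount Arlo", "height", "2,100 m"),
]
print(kg_prompt("Which country does the Zorblat River flow through?", "Zorblat River", kg))
```

Triples are compact and precise, which may be part of why they can beat longer retrieved passages for long-tail facts.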

This research paper evaluates the zero-shot ability of Vision Language Models (VLMs) to perform Visual Network Analysis (VNA) tasks on small-scale graphs. Multimodal LLMs Struggle with Basic Visual Network Analysis: a VNA Benchmark tests two VLMs, GPT-4 and LLaVA, on 5 VNA tasks related to three foundational network science concepts. The tasks are designed to be easy for a human who understands the underlying graph-theoretic concepts, and all of them can be solved by counting the appropriate elements in the graphs. The models must identify nodes of maximal degree, determine whether signed triads are balanced or unbalanced, and count components. This evaluation sheds light on the strengths and weaknesses of VLMs on VNA tasks.
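The three concepts are simple to compute exactly, which is what makes the VLMs' struggles notable. The sketch below computes ground-truth answers that model outputs could be checked against. The task framing is our simplification, and the benchmark's exact definitions may differ. A signed triangle counts as balanced when the product of its edge signs is positive, that is, when it has an even number of negative edges.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple

Edge = Tuple[str, str]


def max_degree_nodes(edges: List[Edge]) -> Set[str]:
    degree: Dict[str, int] = defaultdict(int)
    for a, b in edges:
        degree[a] += 1
        degree[b] += 1
    top = max(degree.values())
    return {n for n, d in degree.items() if d == top}


def triad_is_balanced(signs: Tuple[int, int, int]) -> bool:
    """Balanced when the product of the three edge signs (+1/-1) is positive."""
    return signs[0] * signs[1] * signs[2] > 0


def count_components(nodes: Iterable[str], edges: List[Edge]) -> int:
    adj: Dict[str, Set[str]] = defaultdict(set)
    for a, b in edges:
        adj[a].add(b)
        adj[b].add(a)
    seen: Set[str] = set()
    components = 0
    for start in nodes:
        if start in seen:
            continue
        components += 1  # new component found; flood-fill it with an iterative DFS
        stack = [start]
        while stack:
            node = stack.pop()
            if node in seen:
                continue
            seen.add(node)
            stack.extend(adj[node] - seen)
    return components
```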

This research paper tackles the Abstraction and Reasoning Corpus (ARC), a benchmark that no LLM can currently solve! ARC demands strong generalization and reasoning capabilities, which are known weaknesses of LLMs. The paper, Program Synthesis using Inductive Logic Programming for the Abstraction and Reasoning Corpus, proposes a program synthesis system that uses Inductive Logic Programming (ILP), a branch of symbolic AI, to solve ARC. The system relies on a manually defined Domain Specific Language (DSL) covering a small set of object-centric abstractions relevant to ARC. This DSL serves as the background knowledge from which ILP creates logic programs that give the system its reasoning capabilities. With ILP, the system generalizes to unseen tasks by creating logic programs from just a few examples. These logic programs generate the objects present in the output grid and combine them into a complete program that transforms an input grid into an output grid.

📚Survey papers

A Survey on RAG Meets LLMs: Towards Retrieval-Augmented Large Language Models - covers recent advances in RAG and innovative RAG applications across different industries.

🌈 Creative ways to use LLMs!!

Polytechnic University of Bari, Italy

XAI4LLM. Let Machine Learning Models and LLMs Collaborate for Enhanced In-Context Learning in Healthcare

💡Why?: The paper explores integrating LLMs into healthcare diagnostics.

💻How?: The paper proposes a novel method for zero-shot/few-shot in-context learning (ICL) by integrating medical domain knowledge using a multi-layered structured prompt. This method involves exploring two different communication styles between the user and LLMs: the Numerical Conversational (NC) style and the Natural Language Single-Turn (NL-ST) style. The research also systematically evaluates the diagnostic accuracy and risk factors, such as gender bias and false negative rates, using a dataset of 920 patient records in various few-shot scenarios.
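The two communication styles differ in how the same patient record reaches the model. The numerical conversational style sends raw feature values over several chat turns. The natural-language single-turn style rewrites them as one narrative prompt. The sketch below illustrates this contrast. The field names, message layout, and question wording are hypothetical, and the paper's multi-layered prompts contain more structure (for example, domain knowledge) than shown here.

```python
from typing import Dict, List

Record = Dict[str, float]


def numerical_conversational(record: Record) -> List[Dict[str, str]]:
    """NC style (assumed layout): one numeric feature per user turn, then the question."""
    messages = [{"role": "system",
                 "content": "You are a diagnostic assistant. Reply 'ok' until asked for a diagnosis."}]
    for feature, value in record.items():
        messages.append({"role": "user", "content": f"{feature}: {value}"})
        messages.append({"role": "assistant", "content": "ok"})
    messages.append({"role": "user", "content": "Is the diagnosis positive? Answer yes or no."})
    return messages


def natural_language_single_turn(record: Record) -> str:
    """NL-ST style (assumed wording): the same features as a single narrative prompt."""
    facts = ", ".join(f"{feature.replace('_', ' ')} of {value}" for feature, value in record.items())
    return f"A patient presents with {facts}. Is the diagnosis positive? Answer yes or no."


patient = {"age": 54, "resting_bp": 140, "cholesterol": 239}  # hypothetical record
print(natural_language_single_turn(patient))
```

Keeping the underlying record fixed and changing only the style is what allows accuracy and bias metrics to be compared between the two styles.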

Alibaba Group, University of Science and Technology of China

UniDM: A Unified Framework for Data Manipulation with Large Language Models

💡Why?: Designing effective data manipulation methods for data lakes using LLMs.

💻How?: The research paper proposes UniDM, a unified framework that uses LLMs to handle data manipulation tasks. It formalizes different data manipulation tasks into a unified form and abstracts three general steps for solving each task. These include automatic context retrieval, which lets LLMs retrieve relevant data from data lakes, and effective prompt design, which guides LLMs toward high-quality results.
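The sketch below illustrates the retrieve, serialize, and prompt pattern on one task, filling in a missing value (data imputation). The three-step split, the row-similarity heuristic, and all function names are assumptions for illustration, not UniDM's actual implementation. The small "lake" is hypothetical.

```python
from typing import Dict, List

Row = Dict[str, str]


def retrieve_context(lake: List[Row], target: Row, key: str, k: int = 2) -> List[Row]:
    """Step 1 (assumed): rows that know `key` and share the most attribute values with the target."""
    def shared(row: Row) -> int:
        return sum(1 for col, val in target.items() if col != key and row.get(col) == val)
    return sorted((r for r in lake if key in r), key=shared, reverse=True)[:k]


def serialize(row: Row) -> str:
    """Step 2 (assumed): turn a structured row into text the LLM can read."""
    return "; ".join(f"{col} is {val}" for col, val in row.items())


def imputation_prompt(lake: List[Row], target: Row, key: str) -> str:
    """Step 3 (assumed): phrase the task, here filling a missing value, as a prompt."""
    context = "\n".join(serialize(r) for r in retrieve_context(lake, target, key))
    return f"Context:\n{context}\n\nRecord: {serialize(target)}\nWhat is the {key} of this record?"


lake = [  # hypothetical data lake table
    {"city": "Paris", "country": "France"},
    {"city": "Lyon", "country": "France"},
    {"city": "Rome", "country": "Italy"},
]
print(imputation_prompt(lake, {"city": "Paris"}, "country"))
```

Other tasks, such as data cleaning or entity matching, would reuse the same three steps with a different final instruction, which is the point of a unified form.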