Dear subscriber,

I have migrated my newsletter from Substack to Beehiiv 🎉 and imported my subscriber list here. From now on, you will get the newsletter in an improved UI!

Here is the list of research papers published today on large language models.

I am working on improving the look and depth of the newsletter. Time to get excited! 🤗

🔗 Code/data/weights: https://github.com/thunlp/LLaVA-UHD

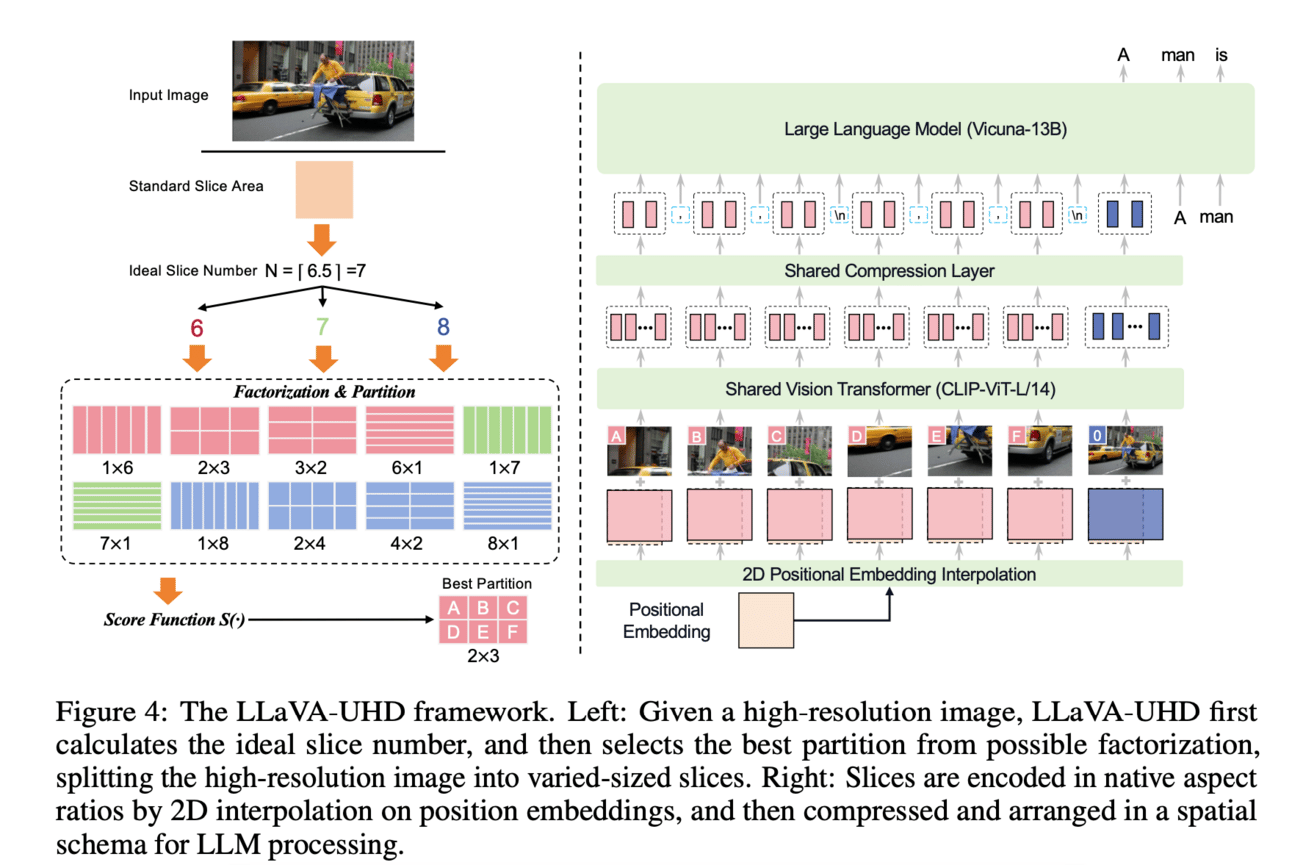

🤔 Problem?:

The research paper addresses the limitations of current large multimodal models (LMMs) in processing images, particularly in terms of fixed sizes, limited resolutions, and lack of adaptivity and efficiency.

💻 Proposed solution:

The research paper proposes a new LMM called LLaVA-UHD, which is designed to efficiently perceive images of any aspect ratio and high resolution. It includes three key components: an image modularization strategy, a compression module, and a spatial schema. The image modularization strategy divides images into smaller variable-sized slices, which are then compressed and organized for processing by LLMs. This allows for more efficient and extensible encoding of images.
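
To make the slicing idea concrete, here is a rough sketch of how an image of any aspect ratio could be cut into a grid of near-square slices. This is not the paper's algorithm: the function names, the `slice_side=336` and `max_slices=6` values, and the scoring rule are all assumptions made for illustration.

```python
import math

def choose_grid(width, height, slice_side=336, max_slices=6):
    """Pick a (cols, rows) grid whose slices stay close to square.

    slice_side and max_slices are illustrative assumptions, not values
    taken from the summary above.
    """
    # Number of slices needed if each one covered slice_side x slice_side pixels.
    ideal = max(1, min(max_slices, math.ceil(width * height / slice_side ** 2)))
    candidates = sorted({max(1, ideal - 1), ideal, min(max_slices, ideal + 1)})
    best, best_score = (1, 1), float("inf")
    for n in candidates:
        for cols in range(1, n + 1):
            if n % cols:
                continue  # only grids that use exactly n slices
            rows = n // cols
            # Distance of the slice aspect ratio from a square (0 is perfect).
            slice_ratio = (width / cols) / (height / rows)
            score = abs(math.log(slice_ratio))
            if score < best_score:
                best, best_score = (cols, rows), score
    return best

def slice_boxes(width, height, cols, rows):
    """Return (left, top, right, bottom) pixel boxes that tile the image."""
    xs = [round(i * width / cols) for i in range(cols + 1)]
    ys = [round(j * height / rows) for j in range(rows + 1)]
    return [(xs[i], ys[j], xs[i + 1], ys[j + 1])
            for j in range(rows) for i in range(cols)]

# A wide 1344x336 panorama ends up as four square slices in one row.
cols, rows = choose_grid(1344, 336)
boxes = slice_boxes(1344, 336, cols, rows)
```

Each box would then be encoded separately, compressed, and arranged according to its grid position before being handed to the LLM.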

📊 Results:

The research paper reports significant performance improvements of LLaVA-UHD over established LMMs on 9 benchmarks. For example, the model built on LLaVA-1.5 supports images with 6 times larger resolution while using only 94% of the inference computation, and achieves an accuracy improvement of 6.4 on the TextVQA benchmark. Additionally, LLaVA-UHD can be efficiently trained in academic settings, taking only 23 hours on 8 A100 GPUs, compared to 26 hours for LLaVA-1.5.

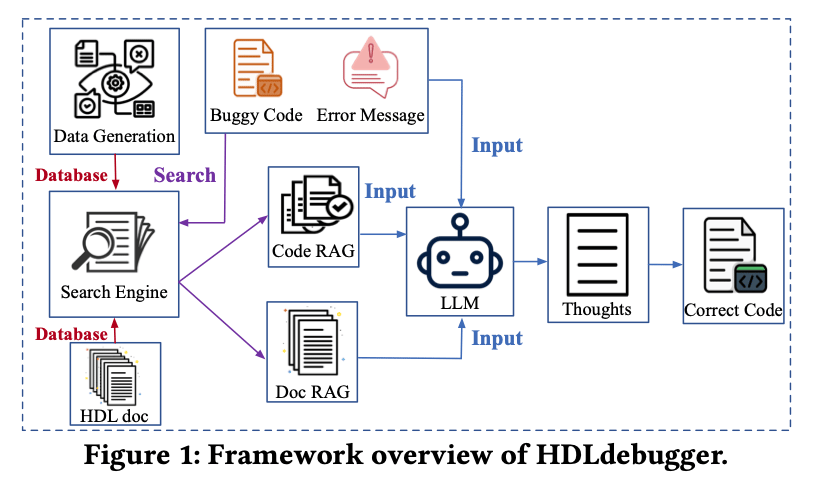

🤔 Problem?:

The research paper addresses the difficulty of debugging Hardware Description Language (HDL) code: complex syntax and limited online resources make it a difficult and time-consuming task even for experienced hardware engineers.

💻 Proposed solution:

The research paper proposes an LLM-assisted HDL debugging framework, called HDLdebugger, to automate and streamline the HDL debugging process. It consists of three components: HDL debugging data generation through reverse engineering, a search engine for retrieval-augmented generation, and a retrieval-augmented LLM fine-tuning approach. This framework utilizes the capabilities of Large Language Models (LLMs) to generate, complete, and debug the HDL code, making it easier for hardware engineers to identify and fix errors in their code.
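
The retrieval step can be pictured with a toy example. The sketch below ranks past error reports by word overlap and pastes the best match into a prompt. The corpus entries, function names, and the Jaccard scoring are all invented for illustration; the summary above does not say how the actual search engine ranks results.

```python
import re

def tokenize(text):
    """Lowercase the text and split it into identifier-like tokens."""
    return set(re.findall(r"[a-z_][a-z0-9_]*", text.lower()))

def jaccard(a, b):
    """Overlap between two token sets, from 0.0 to 1.0."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def retrieve(query, corpus, k=1):
    """Return the k corpus entries whose error text best matches the query."""
    q = tokenize(query)
    ranked = sorted(corpus, key=lambda e: jaccard(q, tokenize(e["error"])),
                    reverse=True)
    return ranked[:k]

def build_prompt(buggy_code, error, hits):
    """Combine retrieved fixes with the new bug report into one prompt."""
    context = "\n".join(f"- {h['error']}: {h['fix']}" for h in hits)
    return (f"Similar past errors:\n{context}\n\n"
            f"Error: {error}\nCode:\n{buggy_code}\nFixed code:")

# Hypothetical knowledge base of earlier errors and their fixes.
corpus = [
    {"error": "signal q is not declared",
     "fix": "Declare q as reg or wire before use."},
    {"error": "syntax error near endmodule missing semicolon",
     "fix": "Add the missing semicolon on the preceding statement."},
    {"error": "width mismatch in assignment to bus",
     "fix": "Match the bit widths on both sides of the assignment."},
]

error = "syntax error: missing semicolon before endmodule"
hits = retrieve(error, corpus)
prompt = build_prompt("assign y = a & b\nendmodule", error, hits)
```

In a real system the prompt would go to the fine-tuned LLM; here it only shows how retrieved context and the new error sit side by side.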

📊 Results:

The authors ran comprehensive experiments on an HDL code dataset sourced from Huawei and compared HDLdebugger with 13 cutting-edge LLM baselines. HDLdebugger outperformed all of the baselines in HDL code debugging.

🤔 Problem?:

The research paper addresses the problem of seamlessly translating natural language descriptions into executable code in the ever-evolving landscape of machine learning. This is a formidable challenge as it requires bridging the gap between task descriptions and functional code.

💻 Proposed solution:

The research paper proposes a solution in the form of Linguacodus, an innovative framework that utilizes a dynamic pipeline to iteratively transform natural language task descriptions into code through high-level data-shaping instructions. At its core, Linguacodus uses a fine-tuned large language model (LLM) to evaluate diverse solutions for various problems and select the most fitting one for a given task. This allows for automated code generation with minimal human interaction. The paper details the fine-tuning process and sheds light on how natural language descriptions can be translated into functional code.

📊 Results:

The paper showcases the effectiveness of Linguacodus through extensive experiments on a vast machine learning code dataset originating from Kaggle. It highlights the potential applications of Linguacodus across diverse domains and its impact on applied machine learning in various scientific fields. However, it does not provide a specific performance improvement, as the effectiveness of Linguacodus will vary depending on the specific task and dataset.

🤔 Problem?:

The research paper addresses the challenging problem of video understanding, specifically in capturing long-term temporal relations in lengthy videos.

💻 Proposed solution:

The paper proposes a multimodal agent called VideoAgent, which combines the strengths of both large language models and vision-language models with a unified memory mechanism. This allows the agent to construct a structured memory to store temporal event descriptions and object-centric tracking states of the video, and then use tools like video segment localization and object memory querying, along with other visual foundation models, to interactively solve a given task query. The agent also utilizes the zero-shot tool-use ability of LLMs, allowing it to perform well on previously unseen tasks.
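
One way to picture the structured memory is as two small tables: one for what happened when, and one for where each object was seen. The sketch below is a guess at that shape, not the paper's implementation. The class names, fields, and the word-overlap matching are assumptions, and the captions are made up.

```python
from dataclasses import dataclass, field

@dataclass
class Segment:
    start: float   # seconds
    end: float     # seconds
    caption: str   # event description, e.g. produced by a captioning model

@dataclass
class VideoMemory:
    segments: list = field(default_factory=list)
    # object name -> indices of the segments in which it was tracked
    objects: dict = field(default_factory=dict)

    def add_segment(self, start, end, caption, seen_objects=()):
        """Store one event description and the objects tracked in it."""
        self.segments.append(Segment(start, end, caption))
        idx = len(self.segments) - 1
        for name in seen_objects:
            self.objects.setdefault(name, []).append(idx)
        return idx

    def localize(self, query):
        """Toy segment localization: the caption sharing most words with the query."""
        words = set(query.lower().split())
        return max(self.segments,
                   key=lambda s: len(words & set(s.caption.lower().split())))

    def query_object(self, name):
        """Return the time spans in which the named object was tracked."""
        return [(self.segments[i].start, self.segments[i].end)
                for i in self.objects.get(name, [])]

memory = VideoMemory()
memory.add_segment(0, 10, "a person opens the fridge", ["person", "fridge"])
memory.add_segment(10, 20, "the person pours milk into a cup", ["person", "milk", "cup"])
memory.add_segment(20, 30, "the cup is placed on the table", ["cup", "table"])

hit = memory.localize("when does the person pour milk")
cup_spans = memory.query_object("cup")
```

An LLM acting as the agent would call `localize` and `query_object` as tools, then reason over the small results instead of the full video.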

📊 Results:

The research paper reports impressive performance improvements on several long-horizon video understanding benchmarks, with an average increase of 6.6% on NExT-QA and 26.0% on EgoSchema over baselines. This closes the gap between open-source models and private counterparts, including Gemini 1.5 Pro.

🤔 Problem?:

The research paper addresses the issue of traditional model development and automated approaches being limited by layers of abstraction, which can hinder creativity and insight in the model evolution process.

💻 Proposed solution:

The research paper proposes a solution called "Guided Evolution" (GE), which utilizes Large Language Models (LLMs) to directly modify code. This approach allows for a more intelligent and supervised evolutionary process, where the LLMs guide mutations and crossovers. Additionally, the paper introduces the "Evolution of Thought" (EoT) technique, which enables LLMs to reflect on and learn from previous mutations, creating a self-sustaining feedback loop. The process maintains genetic diversity by relying on LLMs' ability to generate diverse responses from prompts and by modulating model temperature. The result is an accelerated evolution process that injects expert-like creativity and insight.
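
The loop is easier to see in code. The sketch below is a heavily simplified stand-in: genomes are plain numbers instead of model source code, `propose_mutation` is a hypothetical placeholder for the LLM call, the fitness function is a toy, and crossover is left out for brevity. Only the overall shape (mutate, score, remember what worked, select) follows the description above.

```python
import random

def fitness(genome):
    """Toy stand-in for validation accuracy; it peaks at genome == 3.0."""
    return -(genome - 3.0) ** 2

def propose_mutation(genome, notes, temperature, rng):
    """Placeholder for the LLM call that would rewrite model code.

    notes lists past changes that improved fitness, a crude imitation
    of the feedback loop described above.
    """
    if notes:
        direction = 1.0 if notes[-1] > 0 else -1.0  # follow the latest success
    else:
        direction = rng.choice([-1.0, 1.0])
    # A higher temperature means larger, more varied steps.
    return genome + direction * abs(rng.gauss(0.0, temperature))

def evolve(generations=30, pop_size=6, temperature=0.5, seed=0):
    rng = random.Random(seed)
    population = [rng.uniform(-5.0, 0.0) for _ in range(pop_size)]
    notes = []
    for _ in range(generations):
        children = []
        for parent in population:
            child = propose_mutation(parent, notes, temperature, rng)
            if fitness(child) > fitness(parent):
                notes.append(child - parent)  # remember what worked
            children.append(child)
        # Elitist selection: keep the fittest of parents and children.
        population = sorted(population + children, key=fitness,
                            reverse=True)[:pop_size]
    return max(population, key=fitness)

best = evolve()
```

Because selection keeps the best individuals from both parents and children, the top fitness never decreases from one generation to the next.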

📊 Results:

The research paper demonstrates the efficacy of GE by applying it to the ExquisiteNetV2 model, improving its accuracy from 92.52% to 93.34%, a gain of 0.82 percentage points.

🤔 Problem?:

The research paper addresses the problem of enhancing the translation capabilities of large language models (LLMs) in the context of machine translation (MT) tasks.

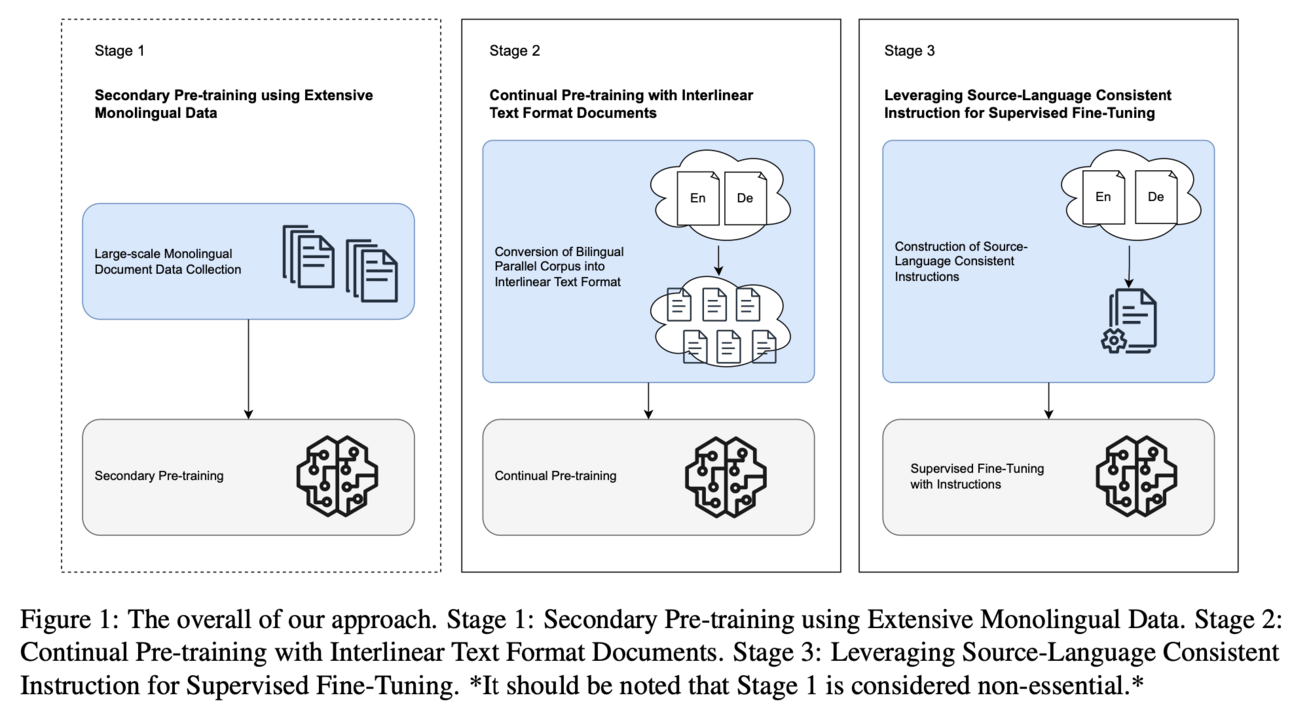

💻 Proposed solution:

The research paper proposes a novel paradigm consisting of three stages: Secondary Pre-training using Extensive Monolingual Data, Continual Pre-training with Interlinear Text Format Documents, and Leveraging Source-Language Consistent Instruction for Supervised Fine-Tuning. This approach focuses on augmenting LLMs' cross-lingual alignment abilities during pre-training rather than relying solely on extensive bilingual data during supervised fine-tuning. This is achieved by using smaller sets of high-quality bilingual data and setting instructions consistent with the source language during the fine-tuning process. In addition, the paper highlights the efficiency of the method, particularly in Stage 2, which requires less than 1B training data.

📊 Results:

The approach achieves a significant performance improvement over previous work and outperforms models such as NLLB-54B and GPT3.5-text-davinci-003, despite a much smaller parameter count of only 7B or 13B.

🤔 Problem?:

The research paper addresses the challenge of creating cost-effective methods for constructing multilingual and multimodal datasets for large language models (LLMs).

💻 Proposed solution:

The paper proposes two solutions to this problem: (1) vocabulary expansion and pretraining of multilingual LLMs for specific languages, and (2) using GPT4-V for automatic and elaborate construction of multimodal datasets. These methods aim to reduce the expenses and challenges associated with creating multilingual and multimodal training data by utilizing existing resources and technology.

📊 Results:

The research paper demonstrates the effectiveness of these methods by constructing a 91K English-Korean-Chinese multilingual, multimodal training dataset and developing a bilingual multimodal model that outperforms existing approaches in both Korean and English. This showcases a significant performance improvement in creating and utilizing multilingual and multimodal data for LLMs.

Connect with the fellow researcher community on Twitter to discuss these papers.

🤔 Problem?:

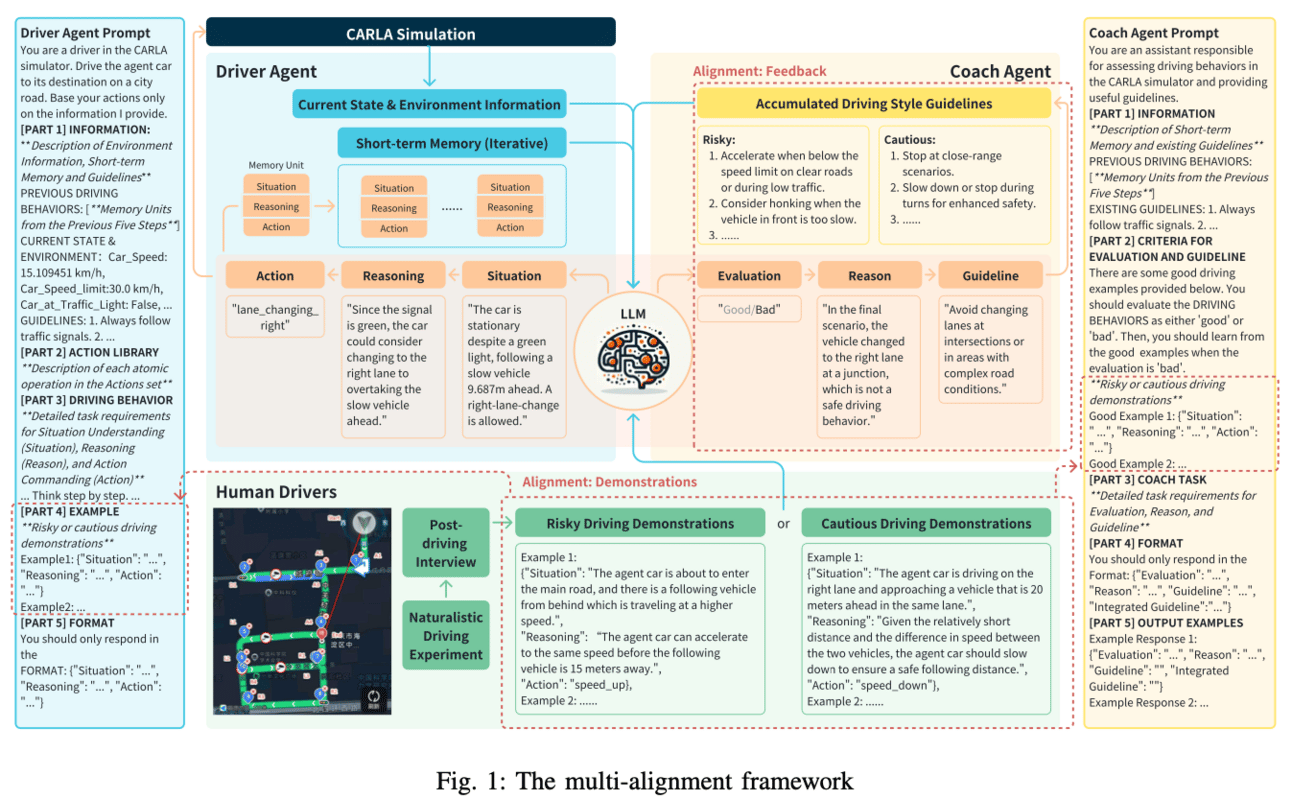

The research paper addresses the problem of aligning driver agents with human driving styles in the field of autonomous driving. Despite the potential of LLM-powered driver agents in showcasing human-like reasoning and decision-making abilities, there is a scarcity of high-quality natural language data from human driving behaviors, making it difficult to align the behaviors of driver agents with those of humans.

💻 Proposed solution:

The research paper proposes a multi-alignment framework to address this problem. This framework is designed to align driver agents with human driving styles through demonstrations and feedback. To achieve this, the researchers conducted naturalistic driving experiments and post-driving interviews to construct a high-quality natural language dataset of human driver behaviors. This dataset is then used to train driver agents and align their behaviors with human driving styles. The effectiveness of this framework is validated through simulation experiments in the CARLA urban traffic simulator and further corroborated by human evaluations.

📊 Results:

The research paper does not mention any specific performance improvements achieved through its proposed framework. However, by offering valuable insights into designing driving agents with diverse driving styles, the research paper contributes to the advancement of autonomous driving technology. The framework and dataset provided by the researchers can serve as a valuable resource for future research in this field.

🤔 Problem?:

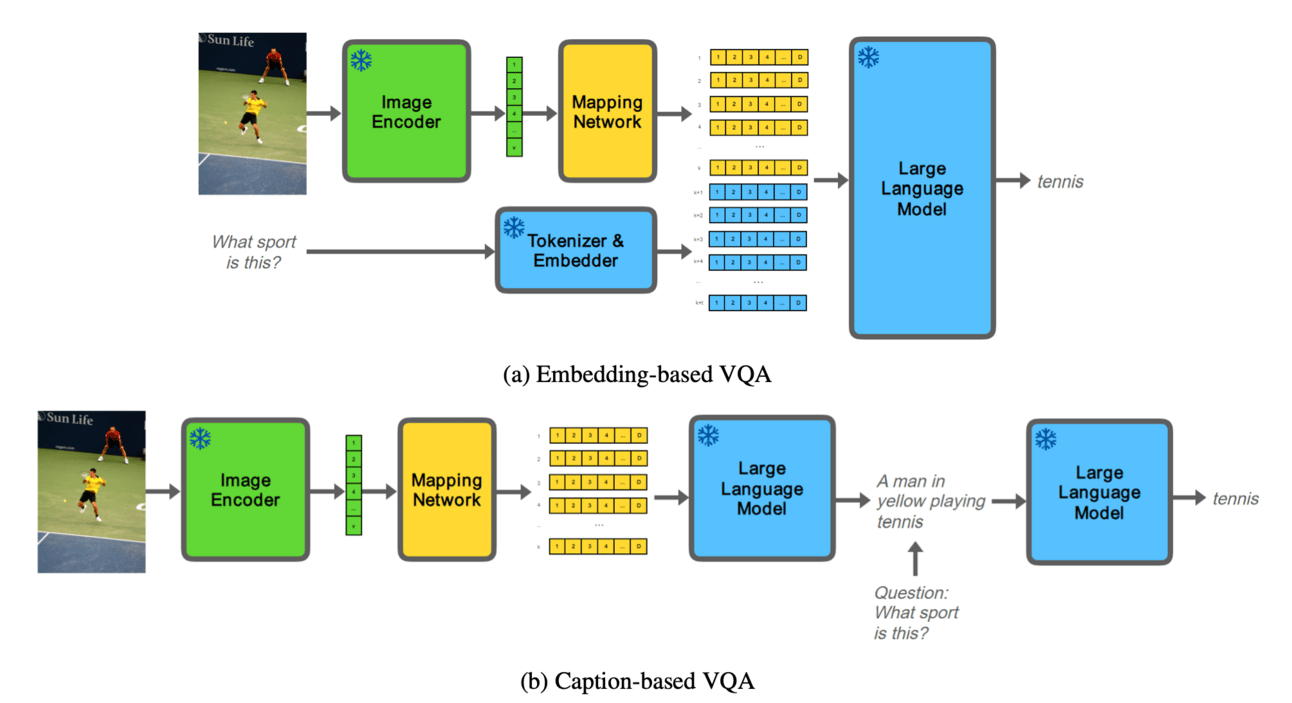

The research paper addresses the problem of inputting images into large language models (LLMs) for few-shot visual question answering (VQA). It specifically focuses on comparing two approaches for inputting images into LLMs, namely captioning images into natural language and mapping image feature embeddings directly into the LLM domain.

💻 Proposed solution:

The research paper proposes to solve the problem by conducting a controlled and focused experiment that compares the two aforementioned approaches for few-shot VQA with LLMs. This experiment involves using Flan-T5 XL, a 3B parameter LLM, and testing the performance of both approaches in the zero-shot and few-shot regimes. The experiment also takes into account how the in-context examples are selected, as this can affect the performance of each approach.
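
For the captioning route, the setup boils down to how the prompt is assembled and which in-context examples go into it. Here is a small sketch with two selection strategies, random and question-similarity. The prompt template, function names, and example data are all assumptions; the summary above does not specify the paper's exact format or selection methods.

```python
import random

def caption_prompt(examples, query):
    """Format captioned examples plus the query as one text prompt."""
    blocks = [f"Image: {ex['caption']}\nQ: {ex['question']}\nA: {ex['answer']}"
              for ex in examples]
    blocks.append(f"Image: {query['caption']}\nQ: {query['question']}\nA:")
    return "\n\n".join(blocks)

def select_random(pool, query, k, rng):
    """Baseline: pick k in-context examples at random."""
    return rng.sample(pool, k)

def select_similar(pool, query, k):
    """Pick the k examples whose question shares the most words with the query."""
    q = set(query["question"].lower().split())
    return sorted(pool,
                  key=lambda ex: len(q & set(ex["question"].lower().split())),
                  reverse=True)[:k]

# Made-up examples, with captions standing in for the images.
pool = [
    {"caption": "a red bus on a street",
     "question": "what color is the bus", "answer": "red"},
    {"caption": "two dogs on a beach",
     "question": "how many dogs are there", "answer": "two"},
    {"caption": "a man holding an umbrella",
     "question": "what is the man holding", "answer": "umbrella"},
]
query = {"caption": "a green car parked outside",
         "question": "what color is the car"}

zero_shot = caption_prompt([], query)
few_shot = caption_prompt(select_similar(pool, query, k=1), query)
random_shot = caption_prompt(select_random(pool, query, 1, random.Random(0)), query)
```

The embedding route would replace the `Image: ...` text with projected image features, which cannot be shown in a text-only sketch like this one.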

📊 Results:

The research paper does not explicitly mention any performance improvements achieved. However, it does highlight that in the zero-shot regime, using textual image captions is better, while in the few-shot regimes, the method of selecting in-context examples determines which approach is more effective. This suggests that there is no clear winner between the two approaches, and their performance may vary depending on the specific scenario.