LLM performance improvement

🤔Problem?:

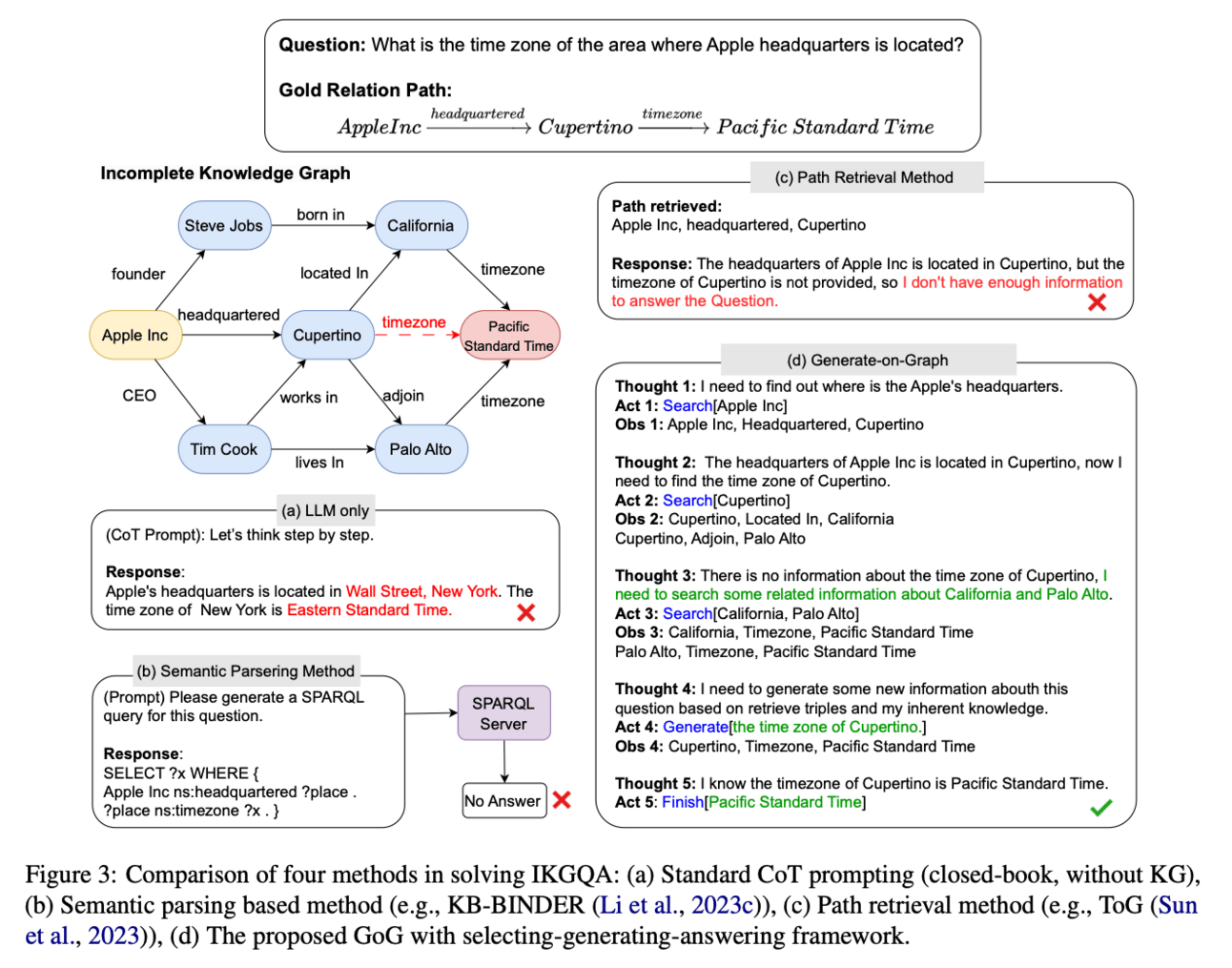

The research paper addresses the insufficient knowledge and the tendency to hallucinate of Large Language Models (LLMs), which many studies try to mitigate by integrating LLMs with Knowledge Graphs (KGs). Such methods are usually evaluated on conventional Knowledge Graph Question Answering (KGQA) with complete KGs, where the given KG covers all the factual triples involved in each question. The harder case, which this paper targets, is when the given KG does not cover all of those triples.

💻Proposed solution:

The research paper proposes studying LLMs for QA under an Incomplete Knowledge Graph (IKGQA), where the given KG does not include all the required knowledge. To handle IKGQA, the paper introduces a training-free method called Generate-on-Graph (GoG), which generates new factual triples while exploring the KG. It uses a selecting-generating-answering framework that treats the LLM both as an agent that explores the KG and as a KG that generates new facts from the explored subgraph and its own inherent knowledge.
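To make the selecting-generating-answering loop more concrete, here is a minimal sketch. It is not the paper's implementation: the function `generate_on_graph`, the three callables `select`, `generate` and `answer` (which stand in for LLM calls), and the toy KG are all hypothetical names chosen for illustration.

```python
from typing import Callable, List, Optional, Tuple

Triple = Tuple[str, str, str]  # (head, relation, tail)


def generate_on_graph(
    question: str,
    topic_entity: str,
    kg: List[Triple],
    select: Callable[[str, List[Triple]], List[Triple]],
    generate: Callable[[str, List[Triple]], List[Triple]],
    answer: Callable[[str, List[Triple]], Optional[str]],
    max_steps: int = 3,
) -> Optional[str]:
    """Toy select/generate/answer loop over an incomplete KG (illustrative only)."""
    subgraph: List[Triple] = []
    entity = topic_entity
    for _ in range(max_steps):
        # Selecting: the LLM-as-agent keeps the relevant triples around the entity.
        neighbours = [t for t in kg if t[0] == entity]
        subgraph.extend(select(question, neighbours))
        result = answer(question, subgraph)
        if result is not None:
            return result
        # Generating: the LLM-as-KG proposes triples the KG is missing.
        subgraph.extend(generate(question, subgraph))
        result = answer(question, subgraph)
        if result is not None:
            return result
        # Continue exploring from the tail of the most recent triple.
        if subgraph:
            entity = subgraph[-1][2]
    return None


# Hypothetical stand-ins for the three LLM calls.
def select_all(question: str, candidates: List[Triple]) -> List[Triple]:
    return candidates


def generate_time_zone(question: str, subgraph: List[Triple]) -> List[Triple]:
    if any(tail == "Cupertino" for _, _, tail in subgraph):
        return [("Cupertino", "time_zone", "Pacific Time")]
    return []


def answer_time_zone(question: str, subgraph: List[Triple]) -> Optional[str]:
    for _, relation, tail in subgraph:
        if relation == "time_zone":
            return tail
    return None


# The KG knows where the headquarters is, but not its time zone.
incomplete_kg = [("Apple Inc.", "headquartered_in", "Cupertino")]
gog_result = generate_on_graph(
    "Which time zone is Apple's headquarters in?",
    "Apple Inc.",
    incomplete_kg,
    select_all,
    generate_time_zone,
    answer_time_zone,
)
print(gog_result)
```

In this toy run the KG alone cannot answer the question, so the missing triple comes from the generating step. In a real system each stub would be an LLM prompt.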

🤔Problem?:

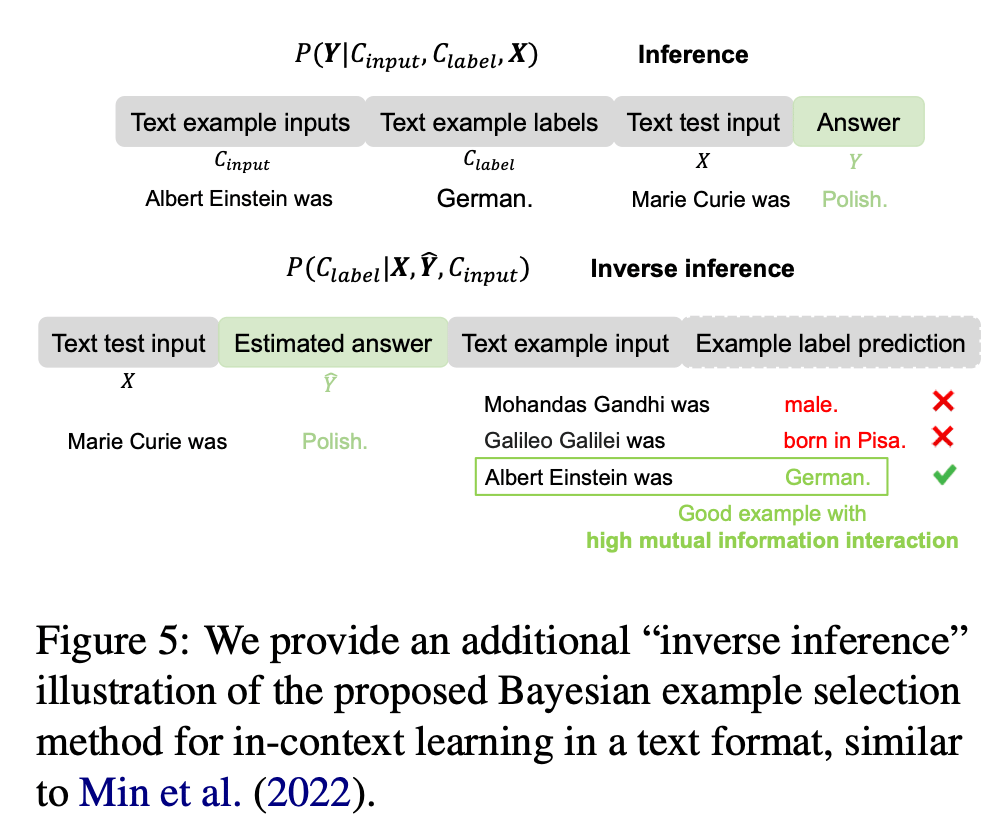

The research paper addresses the problem of selecting high-quality in-context examples for in-context learning in large language models.

💻Proposed solution:

The research paper proposes a novel Bayesian in-Context example Selection method (ByCS) for in-context learning. This method focuses on the inverse inference conditioned on test input, selecting in-context examples based on their inverse inference results. By considering the likelihood of accurate inverse inference as an indicator of accurate posterior inference, the ByCS method aims to improve the performance of in-context learning by selecting the best in-context examples.
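The selection idea can be sketched roughly as follows, assuming each candidate is scored by how well its label is recovered when the test input is used as context. The names `select_examples`, `inverse_predict` and `similarity` are hypothetical, and the toy stubs below replace what would be model calls and a text-similarity metric in practice.

```python
from typing import Callable, List, Tuple

Example = Tuple[str, str]  # (input, label)


def select_examples(
    test_input: str,
    candidates: List[Example],
    inverse_predict: Callable[[str, str], str],
    similarity: Callable[[str, str], float],
    k: int,
) -> List[Example]:
    """Rank candidates by inverse-inference accuracy and keep the top k."""
    scored = []
    for x, y in candidates:
        # Inverse inference: predict the candidate's label with the test input as context.
        y_hat = inverse_predict(test_input, x)
        scored.append((similarity(y_hat, y), (x, y)))
    # Sort on the score only; Python's sort is stable, so ties keep their original order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [example for _, example in scored[:k]]


# Hypothetical stubs standing in for a model call and a similarity metric.
def toy_inverse_predict(test_input: str, candidate_input: str) -> str:
    shared = set(test_input.split()) & set(candidate_input.split())
    return "positive" if shared else "unknown"


def exact_match(prediction: str, reference: str) -> float:
    return 1.0 if prediction == reference else 0.0


pool = [
    ("great film", "positive"),
    ("awful plot", "negative"),
    ("great acting", "positive"),
]
chosen = select_examples("great movie", pool, toy_inverse_predict, exact_match, k=2)
print(chosen)
```

The design choice to note is that ranking depends on the test input, so a different test input can yield a different set of in-context examples from the same pool.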

Pattern-Aware Chain-of-Thought Prompting in Large Language Models

The research paper proposes a solution called Pattern-Aware CoT, which is a prompting method that considers the diversity of demonstration patterns. It works by incorporating patterns such as step length and reasoning process within intermediate steps, effectively mitigating any bias induced by demonstrations. This enables better generalization to diverse scenarios and improves reasoning performance.

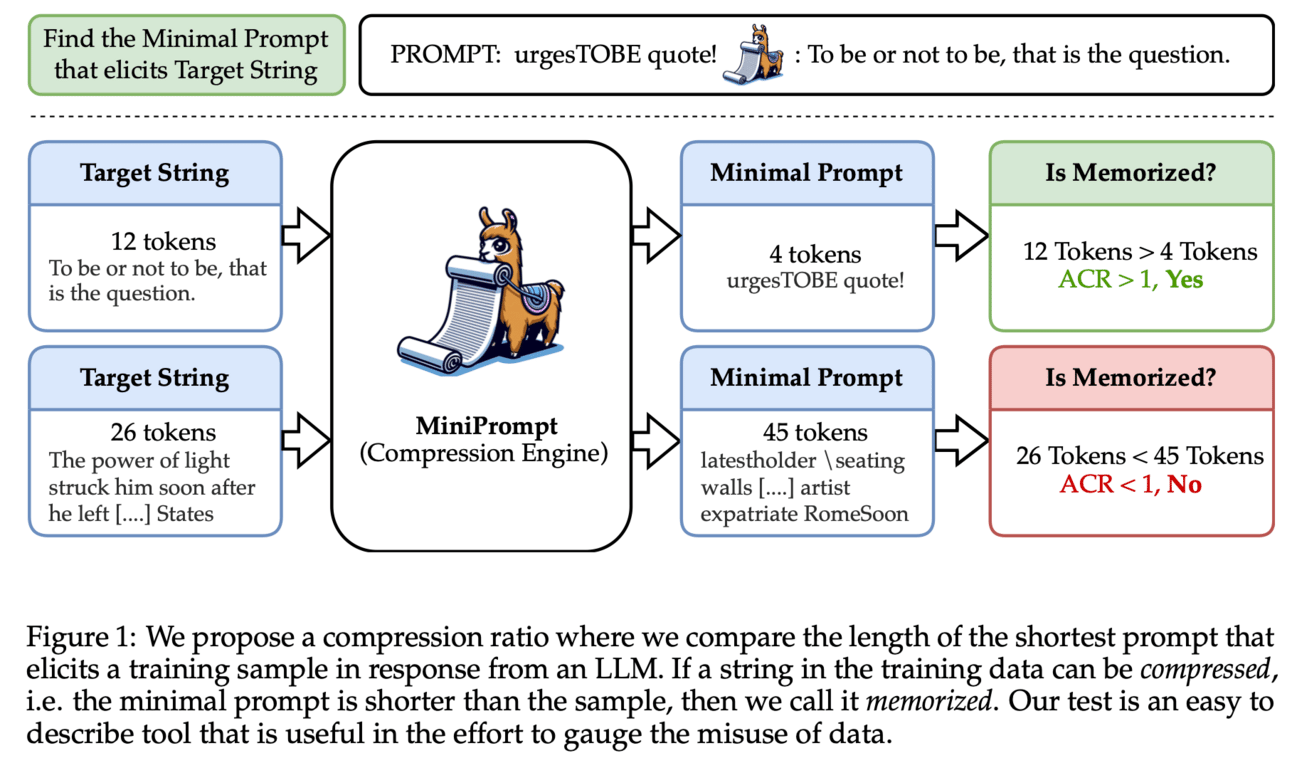

🤔Problem?: With the increasing use of LLMs, there are concerns about whether these models "memorize" their training data or integrate information from multiple sources, much as humans learn and synthesize information.

💻Proposed solution:

The research paper proposes the Adversarial Compression Ratio (ACR) as a metric for measuring memorization in LLMs. This metric considers a given string from the training data as memorized if it can be elicited by a prompt shorter than the string itself. In other words, the string can be "compressed" by the model by using fewer tokens. The ACR overcomes the limitations of existing notions of memorization by offering an adversarial view, especially for monitoring unlearning and compliance. It also allows for flexibility in measuring memorization for any string with reasonably low compute. The ACR serves as a valuable and practical tool for determining when model owners may be violating terms around data usage, providing a potential legal tool and a critical lens for addressing such scenarios.
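As a small illustration of the definition above, the ratio can be computed from token counts once a shortest eliciting prompt is known. This sketch assumes that prompt has already been found by some search procedure, which is outside its scope. The function names and the placeholder token ids are hypothetical.

```python
from typing import Sequence


def adversarial_compression_ratio(
    target_tokens: Sequence[int], shortest_prompt_tokens: Sequence[int]
) -> float:
    """Ratio of target length to the length of the shortest prompt that elicits it."""
    if len(shortest_prompt_tokens) == 0:
        raise ValueError("the eliciting prompt must contain at least one token")
    return len(target_tokens) / len(shortest_prompt_tokens)


def is_memorized(target_tokens: Sequence[int], shortest_prompt_tokens: Sequence[int]) -> bool:
    """A string counts as memorized when the prompt is shorter than the string."""
    return adversarial_compression_ratio(target_tokens, shortest_prompt_tokens) > 1.0


# Placeholder token ids: an 8-token target elicited by a 2-token prompt.
target = [101, 102, 103, 104, 105, 106, 107, 108]
prompt = [7, 9]
acr = adversarial_compression_ratio(target, prompt)
print(acr, is_memorized(target, prompt))
```

A ratio above 1 means the model "compresses" the string; a prompt as long as or longer than the target gives a ratio of at most 1 and does not count as memorization under this definition.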

Generative agents for code improvement and beyond 🕵️

XFT: Unlocking the Power of Code Instruction Tuning by Simply Merging Upcycled Mixture-of-Experts [GitHub: https://github.com/ise-uiuc/xft]

The paper proposes XFT, a training scheme that combines two techniques, upcycling Mixture-of-Experts (MoE) and model merging, to raise the performance limit of instruction-tuned code LLMs. First, XFT introduces a shared expert mechanism with a novel routing weight normalization strategy into sparse upcycling, which significantly boosts instruction tuning. Then, after fine-tuning the upcycled MoE model, XFT uses a learnable model merging mechanism to compile the model back to a dense model, achieving upcycled MoE-level performance with only dense-model compute.
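The merging step can be pictured as collapsing several expert weight matrices into one dense matrix through mixing coefficients. The sketch below is an assumption-laden illustration, not the paper's exact formulation: it uses a softmax over fixed logits where XFT would learn the coefficients, and `merge_experts` is a hypothetical name.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax so the mixing coefficients sum to 1."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def merge_experts(expert_weights: List[Matrix], mixing_logits: List[float]) -> Matrix:
    """Weighted average of same-shaped expert matrices (assumed merge rule)."""
    if len(expert_weights) != len(mixing_logits):
        raise ValueError("need exactly one mixing logit per expert")
    coeffs = softmax(mixing_logits)
    rows, cols = len(expert_weights[0]), len(expert_weights[0][0])
    return [
        [sum(c * w[r][j] for c, w in zip(coeffs, expert_weights)) for j in range(cols)]
        for r in range(rows)
    ]


# Two toy 2x2 "experts"; equal logits give each a coefficient of 0.5.
expert_a = [[1.0, 2.0], [3.0, 4.0]]
expert_b = [[3.0, 4.0], [5.0, 6.0]]
dense = merge_experts([expert_a, expert_b], [0.0, 0.0])
print(dense)
```

The merged matrix has the same shape as a single expert, which is why inference afterwards costs the same as the original dense model.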

🤔Problem?:

The research paper addresses the problem of interactive learning for language agents. Specifically, it focuses on improving the alignment between the agent's output and the user's preferences, as well as reducing the cost of user edits over time.

💻Proposed solution:

The research paper proposes a learning framework called PRELUDE, which infers the user's latent preference based on historic edit data and uses it to guide future response generation. This avoids the costly and challenging process of fine-tuning the agent for each individual user. Additionally, the paper introduces a new algorithm called CIPHER, which leverages a large language model to infer the user's preference for a given context and uses this information to form an aggregate preference for response generation. This approach takes into account the complexity and variability of user preferences, making it more effective at learning and adapting to different contexts.
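One way to picture the aggregate-preference step is as retrieval over past contexts followed by a merge. The sketch below makes several assumptions: word-overlap (Jaccard) similarity stands in for whatever context representation is actually used, the merge is a simple de-duplicated join rather than an LLM call, and all function names and history entries are hypothetical.

```python
from typing import List, Tuple

HistoryItem = Tuple[str, str]  # (context, inferred preference)


def jaccard(a: str, b: str) -> float:
    """Word-overlap similarity, used here as a stand-in for embedding similarity."""
    words_a, words_b = set(a.lower().split()), set(b.lower().split())
    union = words_a | words_b
    return len(words_a & words_b) / len(union) if union else 0.0


def retrieve_preferences(context: str, history: List[HistoryItem], k: int = 2) -> List[str]:
    """Return the preferences inferred for the k most similar past contexts."""
    ranked = sorted(history, key=lambda item: jaccard(context, item[0]), reverse=True)
    return [preference for _, preference in ranked[:k]]


def aggregate(preferences: List[str]) -> str:
    """Join distinct preferences in order; an LLM would do this step in practice."""
    distinct: List[str] = []
    for preference in preferences:
        if preference not in distinct:
            distinct.append(preference)
    return "; ".join(distinct)


history = [
    ("summarize this news article", "short bullet points"),
    ("summarize this research paper", "formal tone, structured"),
    ("write an email to a friend", "casual tone"),
]
guidance = aggregate(retrieve_preferences("summarize this paper on LLMs", history, k=2))
print(guidance)
```

The resulting string would be placed in the prompt for the next response, so no per-user fine-tuning is needed.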

Papers with database/benchmarks

📚Want to learn more, Survey paper

🧯Let’s make LLMs safe!! (LLMs security related papers)

🌈 Creative ways to use LLMs!! (Applications based papers)

This research paper addresses the issue of unsatisfactory performance and high cost associated with learning-based transpilers for automated code translation tasks.

The research paper tackles the regressive side effects of training LLMs to mimic student misconceptions for personalized education by introducing a "hallucination token" technique. This token is added at the beginning of each student response during training and instructs the model to switch between mimicking student misconceptions and providing factually accurate responses. This helps strike a balance between personalized education and factual accuracy.
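A rough sketch of the data-formatting side of this idea is shown below. The literal token string `[hal]`, the function name, and the sample dialogue are assumptions made for illustration; the source does not specify them.

```python
from typing import Dict, List

HALLUCINATION_TOKEN = "[hal]"  # hypothetical marker string, not taken from the paper


def format_training_turns(turns: List[Dict[str, str]]) -> List[str]:
    """Prefix student responses with the marker; leave other turns unchanged."""
    formatted = []
    for turn in turns:
        text = turn["text"]
        if turn["role"] == "student":
            # The marker signals that the following text may mimic a misconception.
            text = f"{HALLUCINATION_TOKEN} {text}"
        formatted.append(f"{turn['role']}: {text}")
    return formatted


dialogue = [
    {"role": "tutor", "text": "What is 1/2 + 1/3?"},
    {"role": "student", "text": "2/5, because you add tops and bottoms."},
    {"role": "tutor", "text": "Not quite. Use a common denominator: 5/6."},
]
lines = format_training_turns(dialogue)
print("\n".join(lines))
```

At inference time the presence or absence of the marker would then steer the model between the two modes described above.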

Re-Thinking Inverse Graphics With Large Language Models [Paper page: https://ig-llm.is.tue.mpg.de/]

The research paper proposes the Inverse-Graphics Large Language Model (IG-LLM), an inverse-graphics framework centered around an LLM. The LLM autoregressively decodes a visual embedding into a structured, compositional 3D-scene representation. The framework incorporates a frozen pre-trained visual encoder and a continuous numeric head for end-to-end training. This approach leverages the broad world knowledge encoded in LLMs to solve inverse-graphics problems through next-token prediction, without the use of image-space supervision.

🤔Problem?:

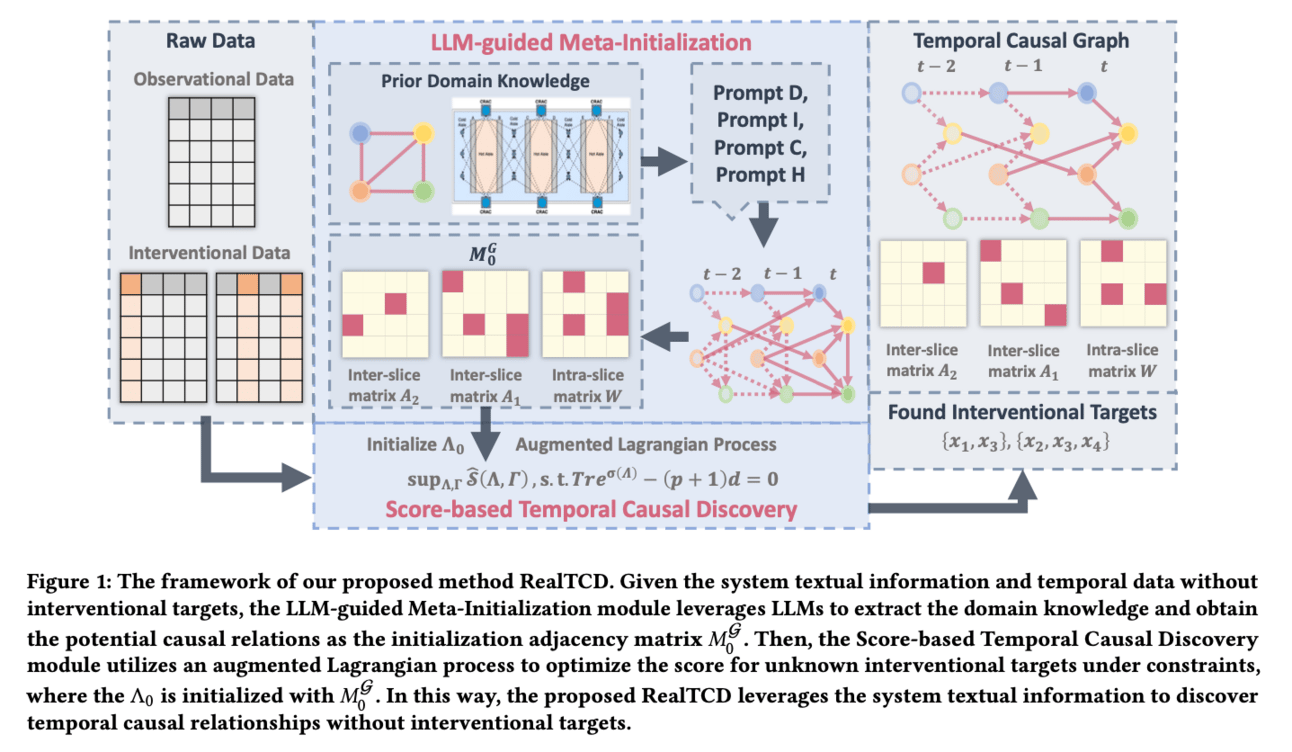

The research paper addresses the problem of temporal causal discovery in industrial scenarios, where existing methods fail to effectively identify causal relationships due to a heavy reliance on intervention targets and a lack of consideration for textual information.

💻Proposed solution:

The research paper proposes the RealTCD framework which leverages domain knowledge to discover temporal causal relationships without relying on interventional targets. It achieves this through a score-based temporal causal discovery method, which strategically masks and regularizes the data to identify causal relations. Additionally, the framework utilizes LLMs to integrate textual information and extract meta-knowledge, further improving the quality of discovery.

📊Results:

The research paper conducts extensive experiments on simulation and real-world datasets to demonstrate the superiority of the RealTCD framework over existing baselines in discovering temporal causal structures. The results show a significant performance improvement in discovering causal relationships without the need for interventional targets, highlighting the effectiveness of the proposed framework.

🤖LLMs for robotics