🔑 Takeaways from today’s newsletter

LongLLaVA scales up multimodal LLMs to process nearly 1,000 images efficiently - boosting AI's visual prowess without overloading your GPU.

The Single-Turn Crescendo Attack (STCA) reveals LLM vulnerabilities in just one interaction - underlining the need for stronger AI safeguards.

SmileyLlama adapts large language models for directed chemical space exploration - bringing a fresh twist to molecular discovery.

Mamba serves as a motion encoder for robotic imitation learning - helping robots mimic human movements more effectively.

LLMs hypothesize missing causal variables - acting as digital detectives to fill gaps in scientific understanding.

Core research improving LLMs!

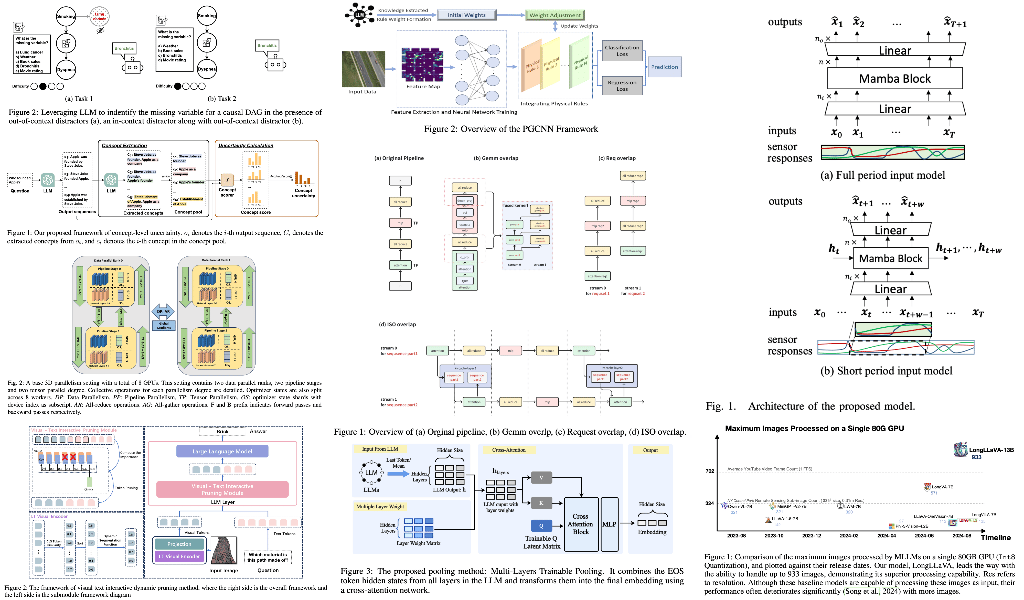

Why?: This paper addresses the limitations of existing uncertainty estimation methods that focus only on sequence-level uncertainty and fail to separately assess the uncertainty of each component in a sequence.

How?: The proposed CLUE framework transforms LLM-generated sequences into concept-level representations, allowing for individual concept uncertainty estimation. This is achieved by breaking down sequences into individual concepts and mathematically measuring uncertainty for each concept separately. The approach allows researchers to conduct more detailed uncertainty assessments, providing a granular understanding of model outputs.
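A minimal sketch of the concept-level idea, assuming concepts have already been extracted from several sampled outputs for the same prompt (extraction is not shown). Exact string matching stands in for the semantic matching a real system would need, and the score used here (the fraction of samples that omit a concept) is a simplified proxy, not CLUE's actual uncertainty measure.

```python
from collections import Counter


def concept_uncertainty(sampled_concepts: list[set[str]]) -> dict[str, float]:
    """Score each concept by the fraction of sampled outputs that do NOT contain it.

    0.0 means every sample agrees on the concept; values near 1.0 mean it rarely recurs.
    """
    n = len(sampled_concepts)
    # Each sample is a set, so a concept is counted at most once per sample.
    counts = Counter(c for concepts in sampled_concepts for c in concepts)
    return {concept: 1.0 - k / n for concept, k in counts.items()}


# Hypothetical concepts extracted from four sampled answers to the same question.
samples = [
    {"Paris is the capital", "population is about 2M"},
    {"Paris is the capital", "population is about 10M"},
    {"Paris is the capital", "population is about 2M"},
    {"Paris is the capital"},
]

for concept, u in sorted(concept_uncertainty(samples).items(), key=lambda kv: kv[1]):
    print(f"{u:.2f}  {concept}")
```

Unlike a single sequence-level score, this separates the stable claim (the capital) from the shaky one (the population figure).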

Why?: Current long-context LLMs often lack citations in their responses, creating verification challenges and diminishing trustworthiness due to potential hallucinations.

How?: The research introduces LongBench-Cite for evaluating LLM citation performance. A novel CoF pipeline was developed to generate QA instances with precise citations. This pipeline was used to create LongCite-45k, a dataset to train LongCite-8B and LongCite-9B models for generating accurate responses with sentence-level citations.

Results: Models trained using LongCite-45k achieved state-of-the-art citation quality, outperforming proprietary models like GPT-4o.

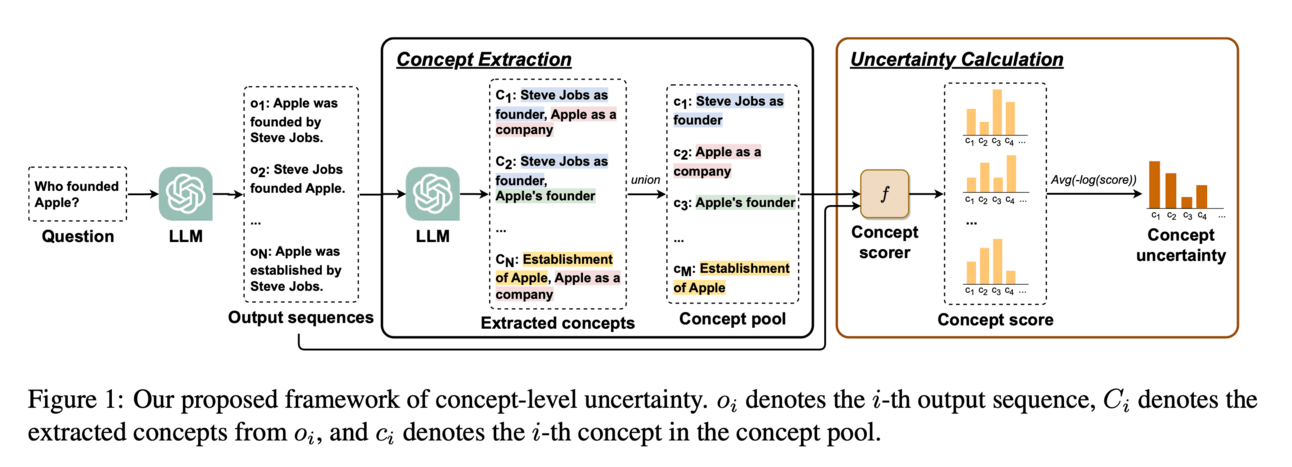

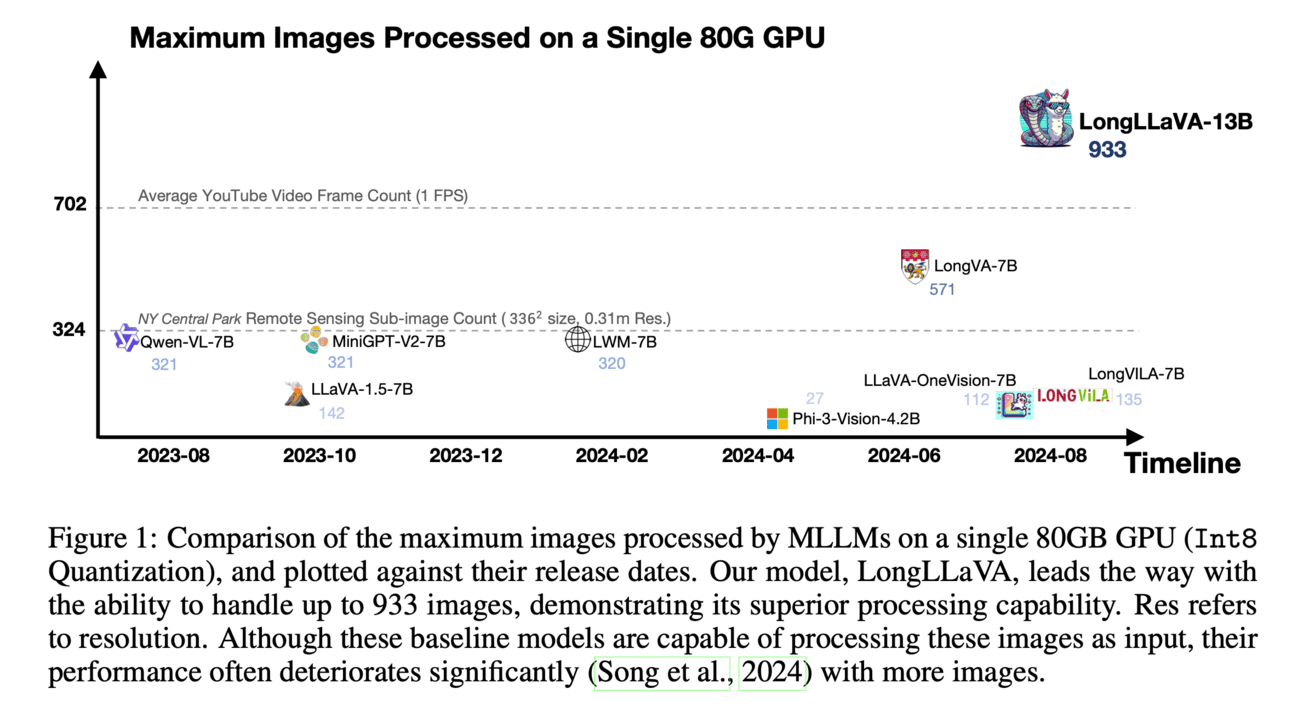

Why?: The research expands the long-context capabilities of multimodal LLMs.

How?: The research developed a hybrid model architecture combining Mamba and Transformer blocks. It incorporated a data construction approach capturing temporal and spatial dependencies across multiple images and used a progressive training strategy. This combination aimed to balance efficiency and effectiveness, enabling the model to process nearly a thousand images efficiently with high throughput and low memory usage.

Results: LongLLaVA achieved competitive results across various benchmarks while maintaining high throughput and low memory consumption. It could process nearly a thousand images on a single A100 80GB GPU, indicating its efficiency and potential applicability.

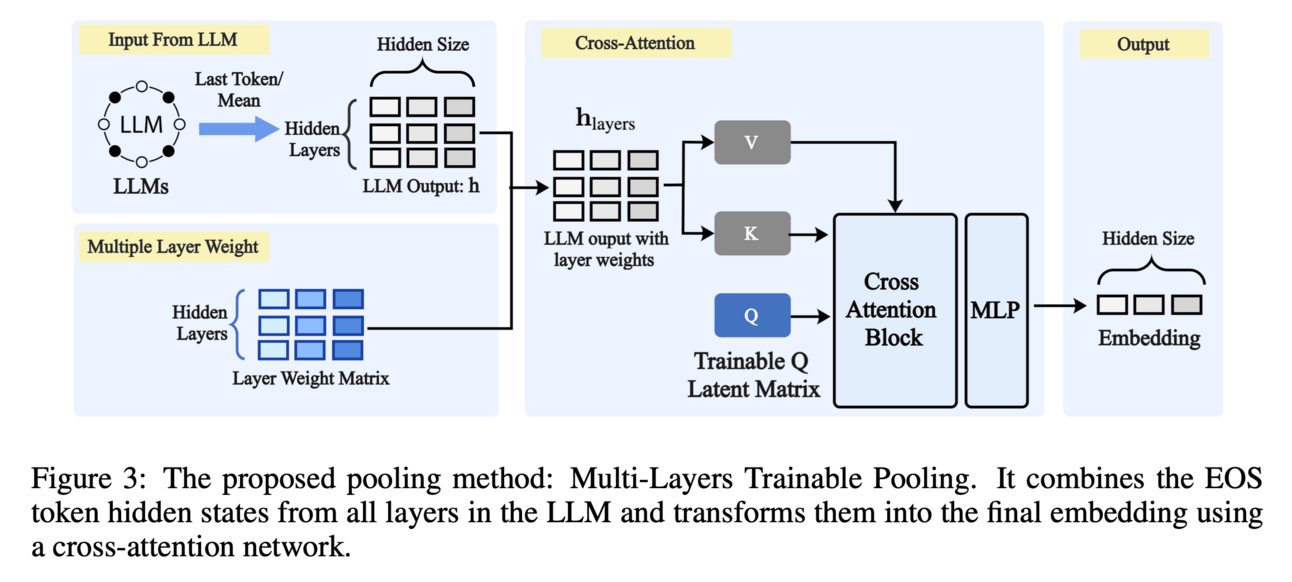

Why?: This paper optimizes LLM-based embedding models by identifying effective pooling and attention mechanisms, potentially improving performance on tasks like text similarity and information retrieval.

How?: The study ran a large-scale experiment with LLM-based embedding models that were trained on the same dataset from the same base model, differing only in their pooling and attention strategies. The researchers compared various designs, including bidirectional attention, EOS-last token pooling, and more. They also introduced a new Multi-Layers Trainable Pooling strategy, which transforms the outputs of all hidden layers with a cross-attention network. The experiment was designed to test for statistically significant performance differences across diverse tasks.

Results: The findings indicate no universal optimal design: bidirectional attention and trainable pooling excel in text similarity and retrieval, whereas simpler designs suffice for clustering and classification. The proposed Multi-Layers Trainable Pooling showed statistically superior performance in specific tasks.
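A minimal sketch of the pooling strategies being compared, in plain Python over toy vectors. `mean_pool` and `last_token_pool` follow their usual definitions (right padding assumed). `layer_attention_pool` is a heavily simplified, hypothetical stand-in for Multi-Layers Trainable Pooling: a single query vector attending over one vector per hidden layer, with no learned projections or multi-head cross-attention.

```python
import math

Vector = list[float]


def mean_pool(hidden: list[Vector], mask: list[int]) -> Vector:
    """Average the last-layer hidden states of non-padding tokens (mask == 1)."""
    kept = [h for h, m in zip(hidden, mask) if m]
    return [sum(h[d] for h in kept) / len(kept) for d in range(len(hidden[0]))]


def last_token_pool(hidden: list[Vector], mask: list[int]) -> Vector:
    """Take the hidden state of the last non-padding token (e.g. an appended EOS)."""
    last = max(i for i, m in enumerate(mask) if m)
    return list(hidden[last])


def layer_attention_pool(layer_vectors: list[Vector], query: Vector) -> Vector:
    """Mix one pooled vector per layer using softmax attention from a query vector.

    In a trained model the query (and any projections) would be learned; here it is fixed.
    """
    scale = math.sqrt(len(query))
    scores = [sum(q * x for q, x in zip(query, v)) / scale for v in layer_vectors]
    top = max(scores)  # subtract the max for numerical stability
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    return [
        sum(w * v[d] for w, v in zip(weights, layer_vectors)) / total
        for d in range(len(query))
    ]


# Toy last-layer states for a 3-token sequence whose final position is padding.
hidden = [[1.0, 2.0], [3.0, 4.0], [0.0, 0.0]]
mask = [1, 1, 0]
print(mean_pool(hidden, mask))
print(last_token_pool(hidden, mask))
```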

The authors developed a novel methodology to create LLM ensembles capable of detecting hallucinations without extensive computational and memory resources. They focus on optimizing the training and inference processes, ensuring that only a single GPU is needed, making it accessible and pragmatic for practical applications.

Deconfounded Causality-aware Parameter-Efficient Fine-Tuning for Problem-Solving Improvement of LLMs

Why?: The research aims to improve the reasoning abilities of LLMs, especially in mathematical and physics tasks.

How?: The study begins by visualizing text generation at the attention and representation level to assess genuine reasoning. It then uses a causal framework to explain these observations. Following this, the researchers introduce Deconfounded Causal Adaptation (DCA), a parameter-efficient fine-tuning approach designed to enhance reasoning skills by allowing the model to generalize problem-solving abilities across questions. The method involves modifying only 1.2 million parameters, focusing on extracting and applying overarching problem-solving skills.

Results: Experiments demonstrate that DCA outperforms existing fine-tuning methods across various benchmarks, achieving better or comparable results while being parameter-efficient.

Why?: The research revisits the relevance of retrieval-augmented generation (RAG) amidst the rise of long-context LLMs, arguing the former can yield higher quality answers by maintaining focus on pertinent information.

How?: The research introduces order-preserving retrieval-augmented generation (OP-RAG), which addresses the challenges of long-context LLMs by carefully controlling how much retrieved context is supplied to the model while keeping the retrieved chunks in their original document order. Through extensive experiments on public benchmarks, OP-RAG is evaluated with varying numbers of retrieved chunks. As more chunks are retrieved, answer quality first rises and then falls, revealing sweet spots that achieve the best answer quality with fewer tokens.
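A minimal sketch of the order-preserving step, assuming a relevance score per chunk has already been computed (for example, embedding similarity with the query; the scores below are made up). The only difference from vanilla RAG is that the selected chunks keep their original document order instead of being sorted by score.

```python
def op_rag_select(chunks: list[str], scores: list[float], k: int) -> list[str]:
    """Order-preserving selection: take the k most relevant chunks,
    then emit them in their original document order rather than by score."""
    top_k = sorted(range(len(chunks)), key=lambda i: scores[i], reverse=True)[:k]
    return [chunks[i] for i in sorted(top_k)]


def vanilla_rag_select(chunks: list[str], scores: list[float], k: int) -> list[str]:
    """Standard RAG ordering: the k most relevant chunks, highest score first."""
    top_k = sorted(range(len(chunks)), key=lambda i: scores[i], reverse=True)[:k]
    return [chunks[i] for i in top_k]


chunks = ["c0", "c1", "c2", "c3", "c4"]       # chunks in document order
scores = [0.1, 0.5, 0.3, 0.9, 0.8]            # hypothetical query-chunk similarities
print(vanilla_rag_select(chunks, scores, 3))  # ['c3', 'c4', 'c1']
print(op_rag_select(chunks, scores, 3))       # ['c1', 'c3', 'c4']
```

Sweeping `k` and measuring answer quality at each value is how the rise-then-fall curve would be traced.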

Why?: Improving black-box LLMs like GPT-4 is challenging because their parameters are inaccessible, which makes prompt quality the key lever for better, more human-like responses.

How?: The research introduces a self-instructed in-context learning framework utilizing reinforcement learning to refine prompt generation, enabling better alignment with original prompts. Derived prompts are crafted to form contextual environments to enhance learning capability. This approach addresses semantic inconsistencies in prompt refinement and reduces discrepancies by using responses from LLMs integrated with derived prompts for a cohesive contextual demonstration.

Results: Extensive experiments show that the method improves derived prompt reliability and significantly enhances the capability of LLMs, including models like GPT-4, to produce more effective responses.

Why?: The research is important because it aims to transform LLMs from simple chatbots into general-purpose agents capable of interacting with the real world, which involves managing life-long context and statefulness.

How?: The research introduces a compressor-retriever architecture to manage life-long context for LLMs. This model-agnostic architecture compresses and retrieves context using the base model's forward function, maintaining end-to-end differentiability. Unlike retrieval-augmented generation, this approach focuses on exclusive usage of the base model, ensuring scalability and adaptability to long-context tasks without requiring extensive modifications to existing model structures.

Why?: The research explores the use of inverse reinforcement learning (IRL) to enhance the imitation learning aspect of language model training, offering potential improvements in diversity and task performance.

How?: The research investigates the application of inverse reinforcement learning (IRL) for fine-tuning large language models. It reformulates inverse soft-Q-learning as a temporal difference regularized extension of maximum likelihood estimation (MLE), establishing a new connection between MLE and IRL. This approach seeks to balance the complexity of the model with improved performance and diversity in language generation. The study emphasizes extracting rewards and optimizing sequences directly, which could improve the sequential structure of autoregressive generation.

Why?: The research proposes a more efficient prompt compression method.

How?: The research introduces context-aware prompt compression (CPC), which uses a context-aware sentence encoder providing relevance scores for sentences related to a given question. A new dataset is created containing questions, positive sentences (relevant) and negative sentences (irrelevant), which are used to train the encoder under a contrastive setup aimed at learning sentence representations.

Results: The method considerably outperforms previous prompt compression techniques on benchmark datasets and achieves up to 10.93 times faster inference compared to token-level compression.
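A minimal sketch of a contrastive objective for this kind of encoder, assuming embeddings for the question and its positive and negative sentences are already available (the toy 2-D vectors are made up). InfoNCE with cosine similarity is one standard choice for contrastive training; the exact loss and temperature used by CPC are not given here.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def info_nce_loss(question, positive, negatives, temperature=0.1):
    """Contrastive loss: -log softmax probability of the positive sentence
    among {positive} + negatives, using cosine similarity to the question."""
    logits = [cosine(question, positive) / temperature]
    logits += [cosine(question, neg) / temperature for neg in negatives]
    top = max(logits)  # log-sum-exp with max subtraction for stability
    log_partition = top + math.log(sum(math.exp(z - top) for z in logits))
    return log_partition - logits[0]


q = [1.0, 0.0]
good = info_nce_loss(q, positive=[0.9, 0.1], negatives=[[0.1, 0.9], [0.0, 1.0]])
bad = info_nce_loss(q, positive=[0.1, 0.9], negatives=[[0.9, 0.1], [0.0, 1.0]])
print(f"relevant sentence ranked first: {good:.4f}; ranked below a negative: {bad:.4f}")
```

At inference time, an encoder trained this way would score each context sentence against the question, and the lowest-scoring sentences would be dropped from the prompt.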

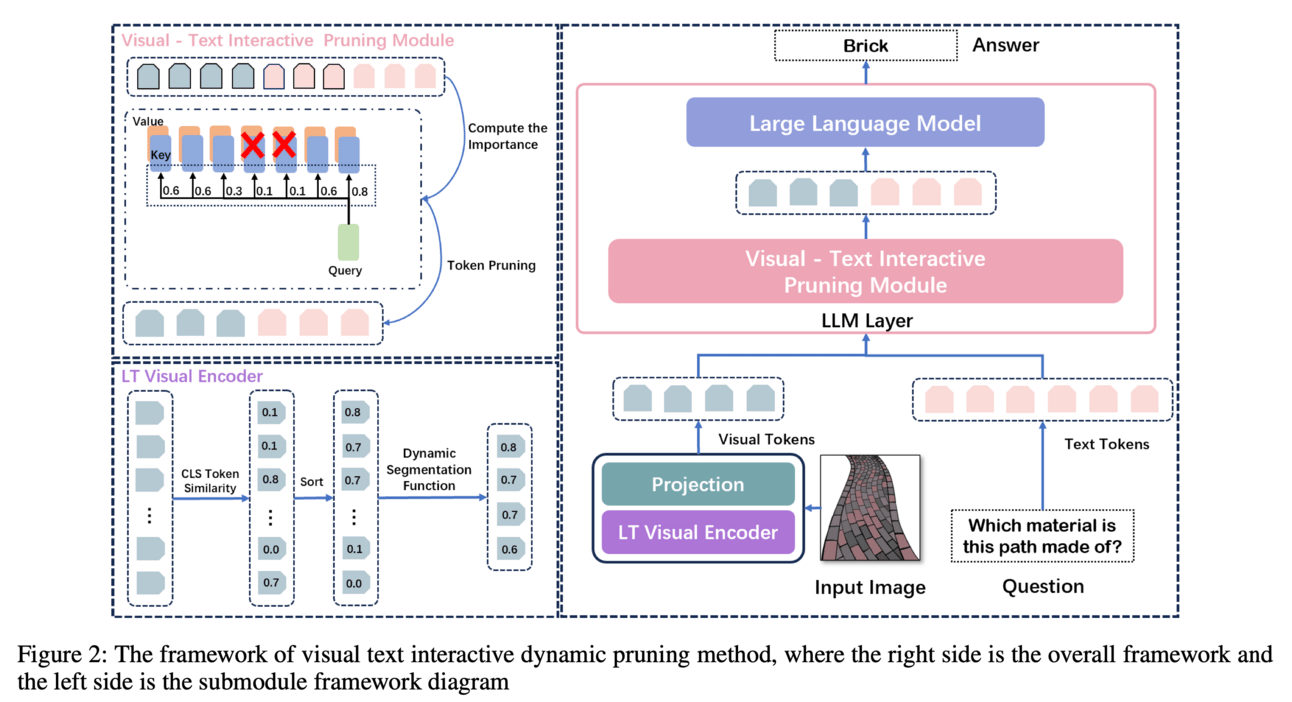

Why?: High computational costs of multimodal LLMs restrict their practical application.

How?: The research introduces a dynamic pruning algorithm for visual tokens in multimodal large language models. Firstly, the visual and CLS token similarity curve is analyzed to identify an inflection point, which helps determine a segmentation point for pruning visual tokens. Next, the concatenated visual and textual tokens are pruned again in the LLM layer. This is achieved by filtering out tokens with low text correlation, balancing efficiency and performance by leveraging interactions between visual and textual features.

Results: The proposed method reduces the token count by an average of 22% relative to the original.
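A minimal sketch of the first (visual-only) pruning stage, assuming each visual token already has a similarity score to the CLS token. The largest gap between consecutive sorted scores serves here as a simple stand-in for the inflection point; the paper's exact curve analysis and the second, text-guided pruning stage inside the LLM are not reproduced.

```python
def prune_visual_tokens(cls_sim: list[float], min_keep: int = 1) -> list[int]:
    """Keep the visual tokens that sit above the sharpest drop in the sorted
    CLS-similarity curve. Returns kept indices in original token order."""
    order = sorted(range(len(cls_sim)), key=lambda i: cls_sim[i], reverse=True)
    curve = [cls_sim[i] for i in order]  # similarity curve, descending
    if len(curve) <= min_keep:
        return sorted(order)
    # Position j cuts between curve[j - 1] and curve[j]; pick the largest gap.
    cut = max(range(min_keep, len(curve)), key=lambda j: curve[j - 1] - curve[j])
    return sorted(order[:cut])


# Hypothetical CLS similarities for six visual tokens.
sims = [0.20, 0.90, 0.25, 0.85, 0.30, 0.80]
print(prune_visual_tokens(sims))  # [1, 3, 5]
```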

LLMOps & GPU-level optimization

Why?: Improving LLM inference efficiency is crucial because transformer models are resource-intensive and multi-GPU tensor parallelism can leave computing resources underutilized.

How?: The research introduces a novel strategy for overlapping computation and communication at the sequence level during LLM inference. Compared with existing techniques that overlap matrix computations and interleave micro-batches, it increases the degree of overlap and imposes fewer constraints on where it can be applied.

Results: The proposed technique reduced prefill-stage time by 35% on RTX 4090 GPUs and by roughly 15% on A800 GPUs.
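A toy timing model of why sequence-level overlap helps, under simplifying assumptions that are not from the paper: the prefill sequence is split into chunks, each chunk's compute is followed by a tensor-parallel communication step (such as an all-reduce), and one chunk's communication can run concurrently with the next chunk's compute. Cross-chunk data dependencies and contention between the two streams are ignored.

```python
def sequential_time(compute: list[float], comm: list[float]) -> float:
    """No overlap: each chunk computes, then communicates, one after another."""
    return sum(c + m for c, m in zip(compute, comm))


def overlapped_time(compute: list[float], comm: list[float]) -> float:
    """Two-stream pipeline: chunk i's communication overlaps chunk i+1's compute.
    A chunk's communication starts once its compute is done and the link is free."""
    compute_end = 0.0
    comm_end = 0.0
    for c, m in zip(compute, comm):
        compute_end += c
        comm_end = max(comm_end, compute_end) + m
    return comm_end


# Prefill split into 4 sequence chunks with hypothetical per-chunk times (ms).
compute = [3.0, 3.0, 3.0, 3.0]
comm = [2.0, 2.0, 2.0, 2.0]
print(sequential_time(compute, comm))  # 20.0
print(overlapped_time(compute, comm))  # 14.0
```

With these made-up numbers, overlap hides all but the last chunk's communication (12 ms of compute plus 2 ms of trailing communication).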

Why?: The research addresses the overhead in large language model (LLM) training due to data-intensive communication routines. Improving training efficiency is critical as models grow larger, requiring optimized communication strategies to handle massive data scales without sacrificing accuracy.

How?: The research investigates using compression with MPI collectives in distributed LLM training with 3D parallelism and ZeRO optimizations. It tests basic compression across all communication collectives, followed by a refined approach where compression varies by the type of data. Gradients receive aggressive compression due to their low-rank structure, while activations, optimizer states, and model parameters use milder compression to maintain precision.

Results: The naive compression scheme yields a 22.5% increase in TFLOPS per GPU and a 23.6% increase in samples per second for GPT-NeoX-20B training. With the hybrid approach, the team achieved a 17.3% increase in TFLOPS per GPU and a 12.7% increase in samples per second while still reaching baseline loss convergence.
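To see why low-rank structure permits aggressive gradient compression, the arithmetic below counts the elements needed to send rank-r factors instead of a dense matrix, as PowerSGD-style methods do. This illustrates the general principle only; the specific compressors used in the paper are not described here.

```python
def low_rank_compression_ratio(m: int, n: int, rank: int) -> float:
    """Ratio of elements in a dense m x n gradient to elements in its rank-r
    factors P (m x r) and Q (n x r), which would be communicated instead."""
    return (m * n) / (rank * (m + n))


for r in (1, 4, 32):
    ratio = low_rank_compression_ratio(4096, 4096, r)
    print(f"4096x4096 gradient, rank {r}: {ratio:.0f}x fewer elements")
```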

Why?: The research addresses the challenge of deploying LLMs on resource-constrained devices, reducing computational costs, and minimizing the environmental impact of AI.

How?: The paper introduces CVXQ, a novel quantization framework derived from convex optimization principles. It allows for post-training quantization of LLMs with hundreds of billions of parameters to any target size. The method leverages convex optimization to efficiently scale and optimize model weights, ensuring performance is preserved while reducing the model's size significantly. A reference implementation of CVXQ is available for public use.
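CVXQ's convex formulation is not spelled out here, but the nature of the problem, choosing bit depths for groups of weights under a total size budget, can be illustrated with the classic high-resolution bit-allocation rule from transform coding, which is the solution of a simple convex problem. This is a textbook stand-in, not CVXQ's objective; real allocators also clip negative values and round to integer bit widths.

```python
import math


def allocate_bits(variances: list[float], avg_bits: float) -> list[float]:
    """High-resolution bit allocation: b_i = avg + 0.5 * log2(var_i / geometric_mean(var)).
    Higher-variance groups get more bits; the mean stays exactly avg_bits."""
    log_geo_mean = sum(math.log2(v) for v in variances) / len(variances)
    return [avg_bits + 0.5 * (math.log2(v) - log_geo_mean) for v in variances]


# Hypothetical per-group weight variances with a 4-bit average budget.
bits = allocate_bits([4.0, 1.0, 0.25, 1.0], avg_bits=4.0)
print(bits)  # [5.0, 4.0, 3.0, 4.0]
```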

Why?: This research targets heterogeneous GPU environments, which allow more flexible and efficient use of computational resources than traditional homogeneous setups.

How?: The research introduces FlashFlex, a system that supports asymmetric partitioning of training computations across data, pipeline, and tensor model parallelism. It formalizes allocation as a constrained optimization problem and uses a hierarchical graph partitioning algorithm to efficiently distribute tasks across heterogeneous GPUs. This approach adaptively allocates training workloads to fully leverage available computational resources.

Results: For LLMs ranging from 7B to 30B parameters, FlashFlex achieves training MFU on heterogeneous GPUs comparable to state-of-the-art systems running on homogeneous high-performance GPUs, with a performance gap as small as 0.30%.
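FlashFlex solves the allocation with constrained optimization and hierarchical graph partitioning; the core intuition, giving faster GPUs proportionally more work, can be sketched with a much simpler heuristic. This hypothetical simplification splits pipeline layers in proportion to assumed per-GPU throughput and ignores memory limits, interconnect bandwidth, and data or tensor parallelism.

```python
import math


def allocate_layers(num_layers: int, throughputs: list[float]) -> list[int]:
    """Split transformer layers across pipeline stages in proportion to each
    stage's relative throughput, using largest-remainder rounding."""
    total = sum(throughputs)
    quotas = [num_layers * t / total for t in throughputs]
    alloc = [math.floor(q) for q in quotas]
    leftover = num_layers - sum(alloc)
    # Hand the remaining layers to the stages with the largest fractional parts.
    by_remainder = sorted(range(len(quotas)), key=lambda i: quotas[i] - alloc[i], reverse=True)
    for i in by_remainder[:leftover]:
        alloc[i] += 1
    return alloc


# Hypothetical relative throughputs for a fast and a slow GPU.
print(allocate_layers(32, [3.0, 1.0]))  # [24, 8]
print(allocate_layers(10, [2.0, 1.0]))  # [7, 3]
```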

Why?: The research aims to make the MoE and attention layers of LLMs cheaper to run by allocating hardware according to whether each operation has high or low arithmetic intensity.

How?: The research introduces Duplex, a device that combines an xPU tailored for high-Op/B (operations per byte) work with Logic-PIM designed for low-Op/B operations. The system dynamically selects the appropriate processor for each LLM layer based on its arithmetic intensity. Logic-PIM improves data transfer by adding through-silicon vias (TSVs) for high-bandwidth communication, enabling efficient handling of the variable-Op/B operations found in LLMs' MoE and attention layers.
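Op/B (arithmetic intensity) is FLOPs divided by bytes moved, the quantity Duplex's per-layer processor choice is based on. The sketch below computes it for a dense matmul and routes it with a hypothetical threshold. The numbers show why single-token decoding sits at the low end (MoE experts that each receive few tokens fall there for the same reason); the routing rule is a simplification, not Duplex's actual scheduler.

```python
def gemm_op_per_byte(m: int, k: int, n: int, bytes_per_elem: int = 2) -> float:
    """Arithmetic intensity of an (m x k) @ (k x n) matmul: FLOPs divided by the bytes
    needed to read both inputs and write the output once (fp16 by default)."""
    flops = 2 * m * k * n
    traffic = bytes_per_elem * (m * k + k * n + m * n)
    return flops / traffic


def pick_unit(op_per_byte: float, threshold: float = 64.0) -> str:
    """Route low-intensity work to processing-in-memory, the rest to the xPU.
    The threshold is a hypothetical placeholder, not a value from the paper."""
    return "Logic-PIM" if op_per_byte < threshold else "xPU"


# Decoding one token through a 4096x4096 projection vs. a 2048-token prefill.
for name, m in (("decode", 1), ("prefill", 2048)):
    intensity = gemm_op_per_byte(m, 4096, 4096)
    print(f"{name}: {intensity:.1f} Op/B -> {pick_unit(intensity)}")
```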

Why?: During LLM training, current GPU cluster setups face challenges with memory and bandwidth, which hinder efficient scaling.

How?: The research introduces LuWu, an innovative in-network optimizer that allows 100B-scale model training by offloading optimizer states and parameters from GPU workers onto an in-network optimizer node. It also shifts collective communication from GPU-NCCL to SmartNIC-SmartSwitch co-optimization, reducing interference and CPU overhead. This setup retains the communication pattern of model-sharded data parallelism while ensuring efficient resource utilization.

Results: LuWu achieves a 3.98x improvement over state-of-the-art systems when training a 175B model on an 8-worker cluster.

Creative ways to use LLMs!!

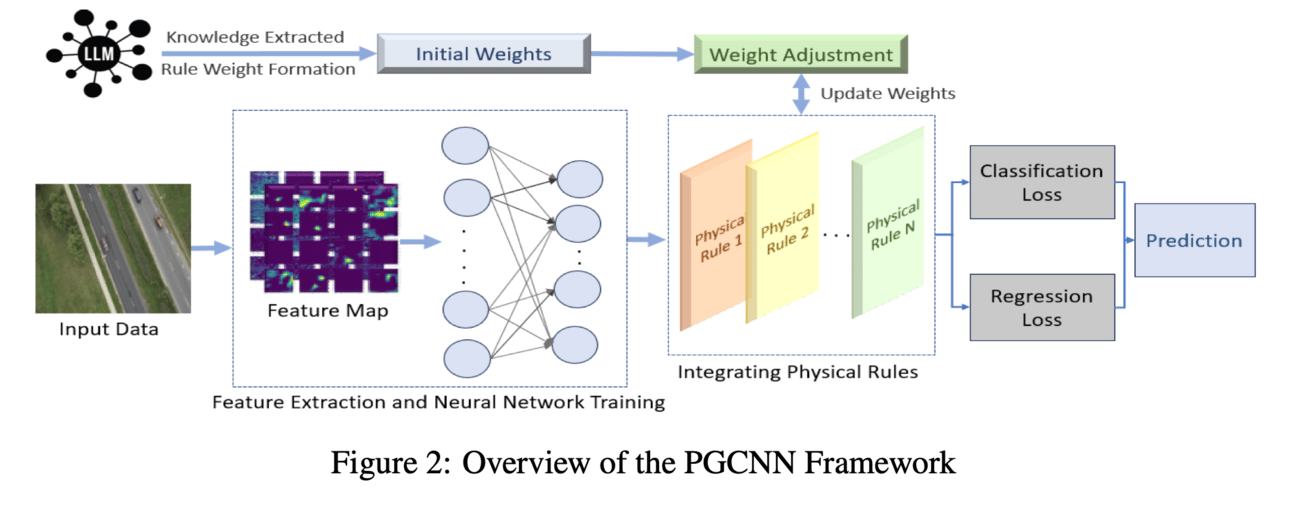

This paper introduces Physics-Guided CNNs (PGCNNs), incorporating dynamic, trainable, and automated LLM-generated physical rules into CNN architecture. These rules are integrated as custom layers, addressing challenges such as limited data and low confidence scores. The architecture enhances the CNN model by enabling it to utilize domain-specific knowledge dynamically during training and inference.

Here's Charlie! Realising the Semantic Web vision of Agents in the age of LLMs - Paper develops semi-autonomous web agents that consult users only if lacking context or confidence. A demonstration is provided using Notation3 rules to ensure safety in belief, data sharing, and usage. LLMs are integrated for natural language interaction between users and agents, enabling user training on preferences and serendipitous agent dialogues.

Multi-language Unit Test Generation using LLMs - Let’s ask LLMs to generate compilable and high-coverage unit tests!

Creating a Gen-AI based Track and Trace Assistant MVP (SuperTracy) for PostNL - Applies LLMs to customer service and logistics to improve communication around parcel tracking. The LLM-based system autonomously manages user inquiries and improves knowledge handling about parcel journeys.

This paper explores enhancing the scientific discovery process by leveraging LLMs to hypothesize missing variables in causal relationships, a pivotal task for advancing scientific knowledge efficiently. Researchers used partial causal graphs with missing variables and tasked LLMs with hypothesizing about these missing elements.

SmileyLlama: Modifying Large Language Models for Directed Chemical Space Exploration - The researchers modified the Llama LLM, an open-source model, using supervised fine-tuning (SFT) and direct preference optimization (DPO). These methods adapted the model to handle chemical SMILES string data, enabling it to generate molecules based on desired properties. This involved training the LLM to respond to specific prompts and provide functional outputs for chemistry and materials applications.

LLMs for robotics & VLLMs

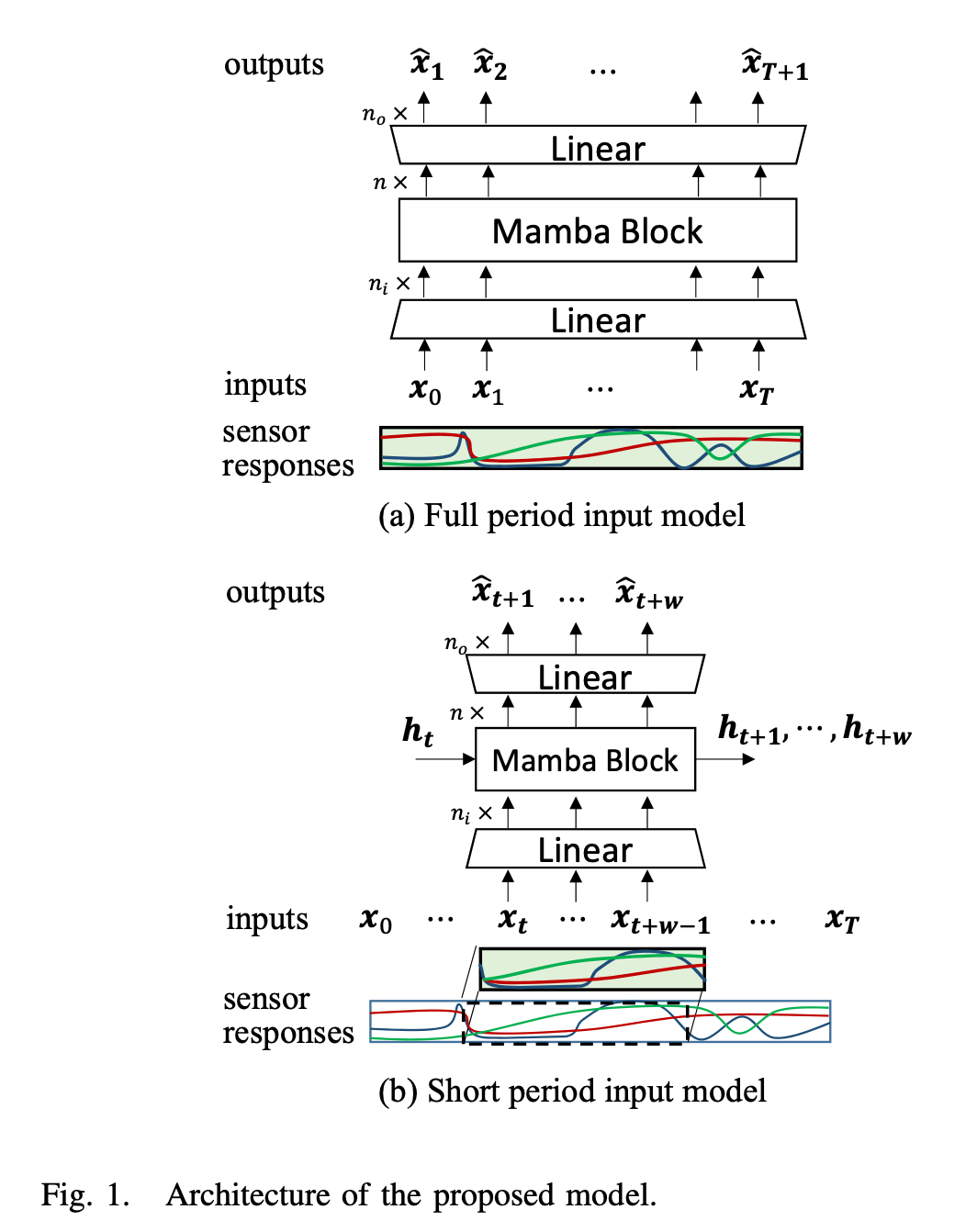

Why?: The research introduces Mamba as an effective tool for robotic imitation learning, aiming to enhance robots' dexterity and adaptability through improved contextual information capture.

How?: The study proposes using Mamba, a state-space model architecture, as an encoder-like component for robotic imitation learning. Mamba operates similarly to an autoencoder, reducing the dimensionality of the state space while preserving the temporal dynamics essential for motion prediction. It compresses sequential data into state variables, which leads to better performance on practical tasks. The experimental evaluation covers tasks such as cup placing and case loading.

Results: Experimental results indicate that Mamba, despite higher estimation errors, achieves superior success rates compared to Transformers in task execution.
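The compression of sequential data into state variables can be illustrated with the plain linear state-space recurrence that Mamba builds on. This sketch is deliberately simplified: in Mamba the B, C and step-size parameters are computed from the input at each step (the "selective" part), and an efficient scan implementation is used; neither is shown here.

```python
def ssm_scan(xs: list[float], a: list[float], b: list[float], c: list[float]) -> list[float]:
    """Diagonal linear state-space recurrence over a scalar input sequence:
        h_t = a * h_{t-1} + b * x_t   (elementwise, state size = len(a))
        y_t = sum(c * h_t)
    The whole history is summarized in the fixed-size state h."""
    h = [0.0] * len(a)
    ys = []
    for x in xs:
        h = [ai * hi + bi * x for ai, hi, bi in zip(a, h, b)]
        ys.append(sum(ci * hi for ci, hi in zip(c, h)))
    return ys


# An impulse decays at rate a = 0.5 in a 1-dimensional state.
print(ssm_scan([1.0, 0.0, 0.0], a=[0.5], b=[1.0], c=[1.0]))  # [1.0, 0.5, 0.25]
```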

Why?: Monocular depth estimation is vital for computer vision, with applications in robotics, autonomous driving, and augmented reality. Leveraging large language models' capabilities can enhance depth estimation, providing efficient solutions across these domains.

How?: The research introduces LLM-MDE, a framework using LLMs for depth estimation from monocular images. It applies cross-modal reprogramming to align vision data with text, and an adaptive prompt estimation module that generates automatic prompts for the LLM based on image input. Both strategies integrate with a pretrained LLM to improve depth estimation tasks with minimal supervision. The framework is validated through experiments on real-world monocular depth estimation datasets.

Survey papers

Contemporary Model Compression on Large Language Models Inference - The survey explores contemporary model compression techniques that make LLM inference more memory-efficient and faster.

LLM-Assisted Visual Analytics: Opportunities and Challenges - This is a comprehensive survey of current directions in integrating LLMs into Visual Analytics (VA) systems.

Large Language Model-Based Agents for Software Engineering: A Survey - This paper collects and analyzes 106 papers on LLM-based agents in software engineering (SE).

Let’s make LLMs safe!!

Alignment-Aware Model Extraction Attacks on Large Language Models - The researchers developed a novel attack algorithm called Locality Reinforced Distillation (LoRD). LoRD leverages a policy-gradient-style training task that uses the responses from victim models to guide preference crafting for the local model. Theoretical analysis ensures that LoRD's convergence aligns with the LLM's training alignments, and it reduces query complexity while countering watermark protections. Extensive experiments were conducted on various state-of-the-art LLMs to evaluate the effectiveness of the algorithm.

Well, that escalated quickly: The Single-Turn Crescendo Attack (STCA) - Research exposes vulnerabilities in LLMs by highlighting how adversarial inputs can provoke inappropriate outputs, emphasizing the need for stronger safeguards in responsible AI. The research introduces STCA, which combines the escalating techniques of previous multi-turn attacks into a single potent prompt. This method condenses escalation into one interaction, effectively bypassing LLM moderation filters designed to block harmful responses. The approach was developed to showcase the weaknesses in existing filter mechanisms of LLMs, which typically monitor dialogues over several interactions.

SafeEmbodAI: a Safety Framework for Mobile Robots in Embodied AI Systems - The research introduced SafeEmbodAI, a framework composed of secure prompting, state management, and safety validation mechanisms to better integrate mobile robots in embodied AI systems. It aims to secure and assist LLMs in processing multi-modal data and validating robotic behaviors through comprehensive safety checks. This framework's effectiveness is tested through a newly designed metric focusing on mission-oriented exploration in simulated environments.

Membership Inference Attacks Against In-Context Learning - The researchers developed the first membership inference attack specifically for ICL, using only generated texts. They crafted four attack strategies optimized for different restrictive scenarios and conducted experiments on four major LLMs. The study also evaluates a hybrid attack strategy that combines the strengths of the four individual strategies. Furthermore, they examined three defence mechanisms focusing on data handling, instructional adjustments, and output modifications to mitigate privacy risks. The attack strategies achieved a 95% accuracy advantage in most cases.