Core research improving LLMs!

💡Why?: The research paper addresses the difficulty of deploying LLMs efficiently, given their ever-growing computational cost.

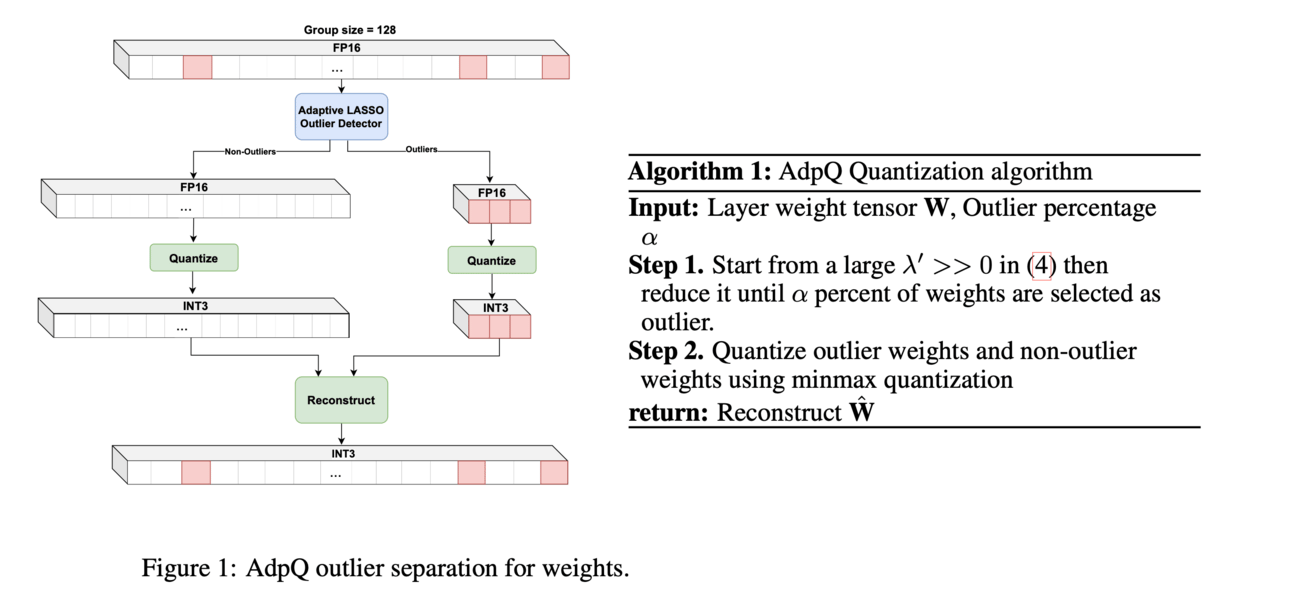

💻How?: The research paper proposes AdpQ, a novel zero-shot adaptive Post-Training Quantization (PTQ) method. Inspired by the Adaptive LASSO regression model, it uses an adaptive soft-thresholding method to separate salient weights and tackle the challenge of outlier activations. This keeps the distribution of the quantized weights close to that of the originally trained weights without requiring any calibration data. Guided by Adaptive LASSO, the method minimizes the Kullback-Leibler divergence between the quantized and the originally trained weights, enabling efficient deployment while aiming to preserve accuracy and information.

📊Results: The research paper achieves state-of-the-art performance in low-precision quantization (e.g. 3-bit) without requiring any calibration data. It also reduces quantization time by at least 10x compared to existing methods, a significant gain in both efficiency and privacy preservation for LLM deployment.
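
To make the soft-thresholding idea concrete, here is a minimal sketch, assuming a single fixed threshold `lam` rather than AdpQ's adaptive, Adaptive-LASSO-derived one. Soft-thresholding splits each weight into a salient (outlier) part, kept in full precision, and a residual bounded by `lam`, which is then uniformly quantized to `bits` bits. The function names and the quantization grid are illustrative choices, not the paper's implementation.

```python
import math
from typing import List, Tuple


def soft_threshold(w: float, lam: float) -> float:
    """Shrink w toward zero by lam; values with |w| <= lam become 0."""
    return math.copysign(max(abs(w) - lam, 0.0), w)


def split_and_quantize(
    weights: List[float], lam: float, bits: int = 3
) -> Tuple[List[float], List[int], List[float]]:
    """Return (salient part, integer codes, reconstructed weights).

    Assumption: one fixed threshold lam for all weights; AdpQ chooses it adaptively.
    """
    # Salient/outlier part: whatever survives soft-thresholding, kept in full precision.
    salient = [soft_threshold(w, lam) for w in weights]
    # The residual w - soft(w) always lies in [-lam, lam], so it quantizes well.
    residual = [w - s for w, s in zip(weights, salient)]
    levels = 2 ** bits - 1
    scale = 2 * lam / levels
    # Uniform quantization of the residual onto the grid {-lam, -lam + scale, ..., lam}.
    codes = [min(max(round((r + lam) / scale), 0), levels) for r in residual]
    dequant = [c * scale - lam for c in codes]
    recon = [s + d for s, d in zip(salient, dequant)]
    return salient, codes, recon
```

For `w = [0.1, -0.2, 2.5, 0.05, -3.0]` with `lam=0.3` and `bits=3`, the two outliers land mostly in the salient part, and every reconstructed weight stays within half a quantization step of the original.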

💡Why?: The research paper addresses alignment tax: the degradation of LLM performance on standard knowledge and reasoning benchmarks during supervised fine-tuning (SFT).

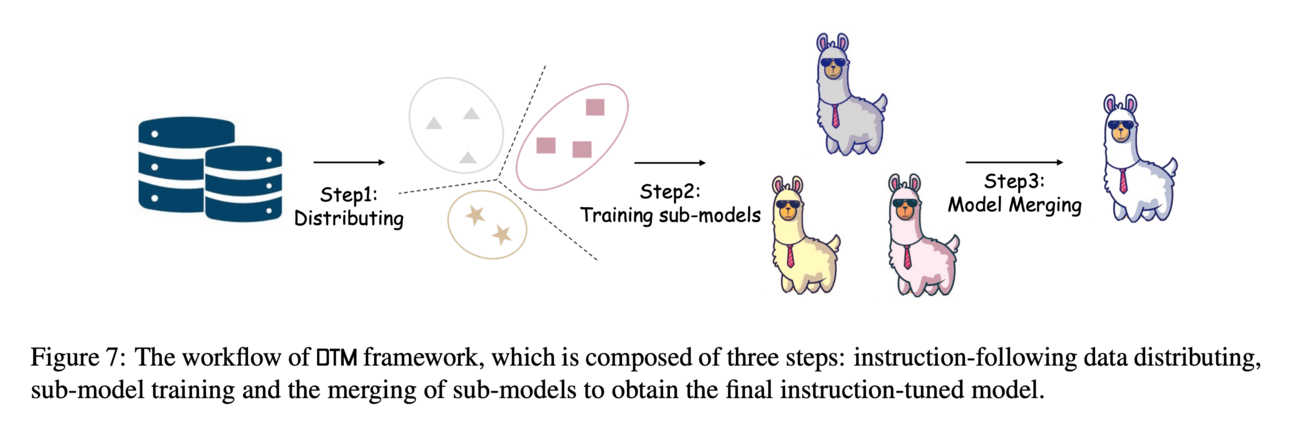

💻How?: The research paper proposes a simple disperse-then-merge framework. The instruction-following data is dispersed into portions, and multiple sub-models are trained, each on a different portion. The sub-models are then merged into a single model using model merging techniques.

📊Results: The framework achieves significant performance improvements over other methods, such as data curation and training regularization, on a series of standard knowledge and reasoning benchmarks. Despite its simplicity, it outperforms more sophisticated methods, indicating that it effectively mitigates the alignment tax caused by data biases.
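
The disperse-then-merge recipe can be sketched as follows, assuming parameters are stored as a dict of flat float lists and that merging is plain uniform weight averaging, which is only one of the model merging techniques such a framework could use. The fine-tuning step itself is left out, and `disperse` and `merge_average` are hypothetical helper names.

```python
from typing import Dict, List

# Hypothetical parameter format: parameter name -> flat list of floats.
Params = Dict[str, List[float]]


def disperse(data: list, k: int) -> List[list]:
    """Split the instruction data into k disjoint portions (round-robin)."""
    return [data[i::k] for i in range(k)]


def merge_average(models: List[Params]) -> Params:
    """Merge sub-models by averaging each parameter element-wise (uniform weight averaging)."""
    merged: Params = {}
    for name in models[0]:
        columns = zip(*(m[name] for m in models))
        merged[name] = [sum(col) / len(models) for col in columns]
    return merged
```

Each portion returned by `disperse(data, k)` would be used to fine-tune one sub-model, and `merge_average` would then combine the resulting parameter dicts into the single final model.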

💡Why?: Aligning LLMs with human values in order to reduce the production of toxic or unhelpful content.

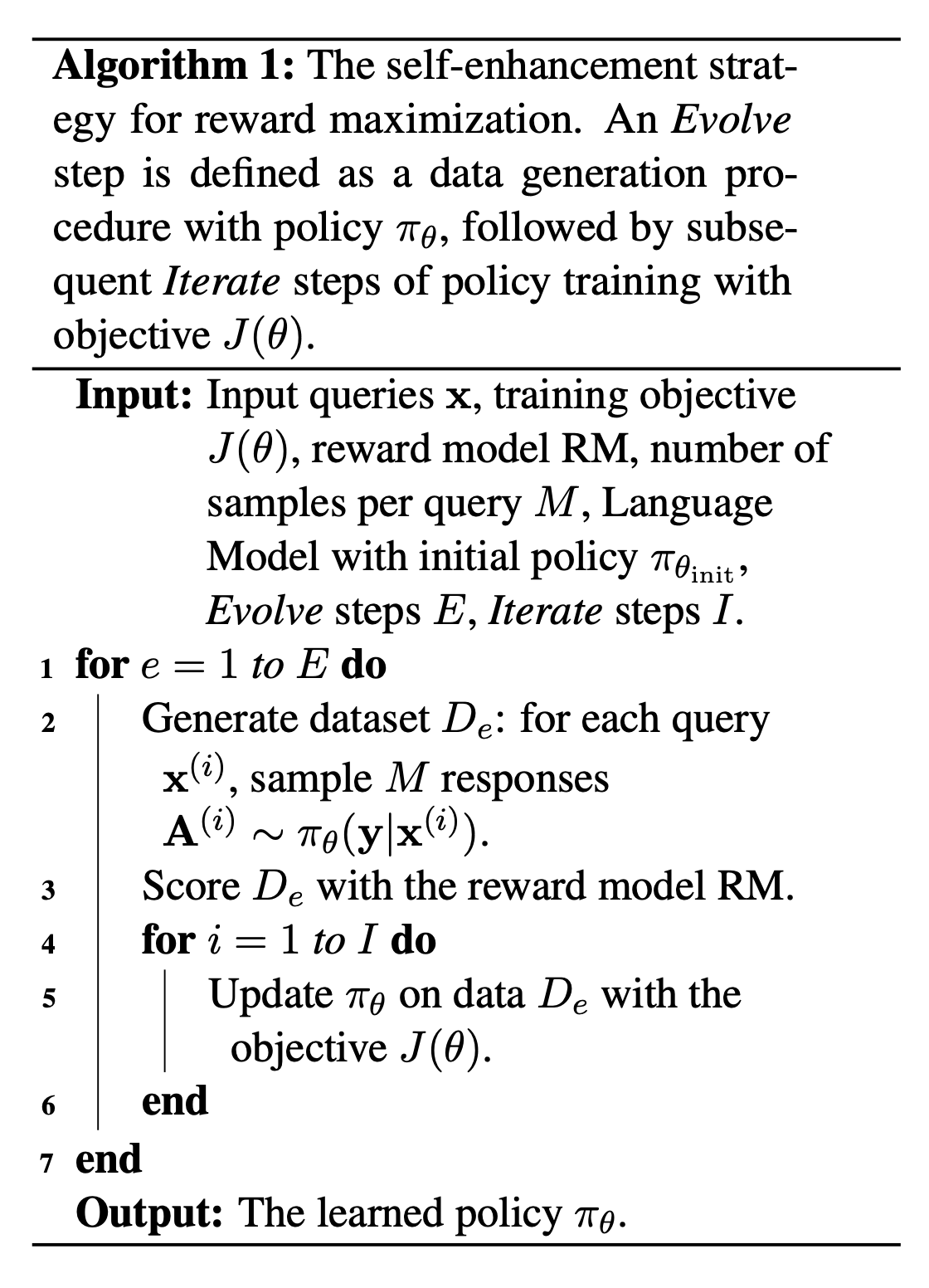

💻How?: The research paper proposes a new approach called Listwise Reward Enhancement for Preference Alignment (LIRE) to solve this problem. LIRE is a gradient-based reward optimization approach that incorporates the offline rewards of multiple responses into a streamlined listwise framework. This eliminates the need for online sampling during training and makes the process more efficient. Additionally, the paper introduces a self-enhancement algorithm to iteratively refine the reward during training. This allows for better performance and scalability.

📊Results: According to the experiments conducted, LIRE consistently outperforms existing methods across several benchmarks on dialogue and summarization tasks. It also shows good transferability to out-of-distribution data, as assessed by proxy reward models and human annotators.
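
A listwise, reward-weighted objective of the kind LIRE describes can be sketched as below, assuming the policy's sequence log-probabilities for K candidate responses are turned into a distribution with a temperature softmax, and the loss is the negative expected offline reward under that distribution. This illustrates the general idea only; it is not the paper's exact objective or its self-enhancement loop, and the `temperature` parameter is an assumption.

```python
import math
from typing import List


def softmax(xs: List[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp((x - m) / temperature) for x in xs]
    z = sum(exps)
    return [e / z for e in exps]


def listwise_loss(logprobs: List[float], rewards: List[float], temperature: float = 1.0) -> float:
    """Negative expected reward of the candidate list under the policy's softmax distribution."""
    p = softmax(logprobs, temperature)
    return -sum(pi * ri for pi, ri in zip(p, rewards))


def listwise_grad(logprobs: List[float], rewards: List[float], temperature: float = 1.0) -> List[float]:
    """Analytic gradient of listwise_loss with respect to each response's log-probability."""
    p = softmax(logprobs, temperature)
    expected = sum(pi * ri for pi, ri in zip(p, rewards))
    # dL/dl_i = -p_i * (r_i - E[r]) / T: above-average responses get a negative gradient,
    # so gradient descent raises their log-probability.
    return [-pi * (ri - expected) / temperature for pi, ri in zip(p, rewards)]
```

Because all K responses and their rewards are fixed in advance, this kind of objective needs no online sampling during training.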

💡Why?: Ensuring LLMs behave consistently with human goals, values, and intentions. This is crucial for their safety, but it is computationally expensive and time-consuming.

💻How?: The research paper proposes ConTrans, a novel framework that enables weak-to-strong alignment transfer via concept transplantation. It aims to reduce the computational cost of alignment training for LLMs with huge numbers of parameters and to reuse already learned value alignment. ConTrans first refines concept vectors from a source LLM that is weak but aligned. These refined concept vectors are then adapted to the target LLM, usually a strong but unaligned base LLM, through an affine transformation. Finally, the adapted concept vectors are transplanted into the residual stream of the target LLM. This process transplants a wide range of aligned concepts from smaller LLMs to larger ones, achieving weak-to-strong alignment generalization and control.
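
The three ConTrans steps (refine, adapt, transplant) can be sketched with plain lists, assuming a concept vector is the difference of mean source-model activations on concept-positive versus concept-negative prompts, and that the affine map `(W, b)` into the target model's hidden size has already been fitted (for example by least squares on paired hidden states). Both assumptions, the helper names, and the scaling factor `alpha` are illustrative rather than the paper's exact procedure.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def concept_vector(pos_acts: List[Vector], neg_acts: List[Vector]) -> Vector:
    """Assumed refinement: mean(positive) - mean(negative) source activations."""
    dim = len(pos_acts[0])
    mean_pos = [sum(a[j] for a in pos_acts) / len(pos_acts) for j in range(dim)]
    mean_neg = [sum(a[j] for a in neg_acts) / len(neg_acts) for j in range(dim)]
    return [p - n for p, n in zip(mean_pos, mean_neg)]


def affine_adapt(W: Matrix, b: Vector, v: Vector) -> Vector:
    """Map a source-space vector into the target space: W v + b (W is d_target x d_source)."""
    return [sum(w_ij * v_j for w_ij, v_j in zip(row, v)) + b_i for row, b_i in zip(W, b)]


def transplant(hidden: Vector, concept: Vector, alpha: float = 1.0) -> Vector:
    """Add the adapted concept vector (scaled by a hypothetical alpha) to a residual-stream state."""
    return [h + alpha * c for h, c in zip(hidden, concept)]
```

In a real model, `transplant` would be applied to the hidden state at the chosen layer(s) during the forward pass, for example via a forward hook.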

💡Why?: Understanding weight decay in AdamW and how it should scale with model and dataset size.

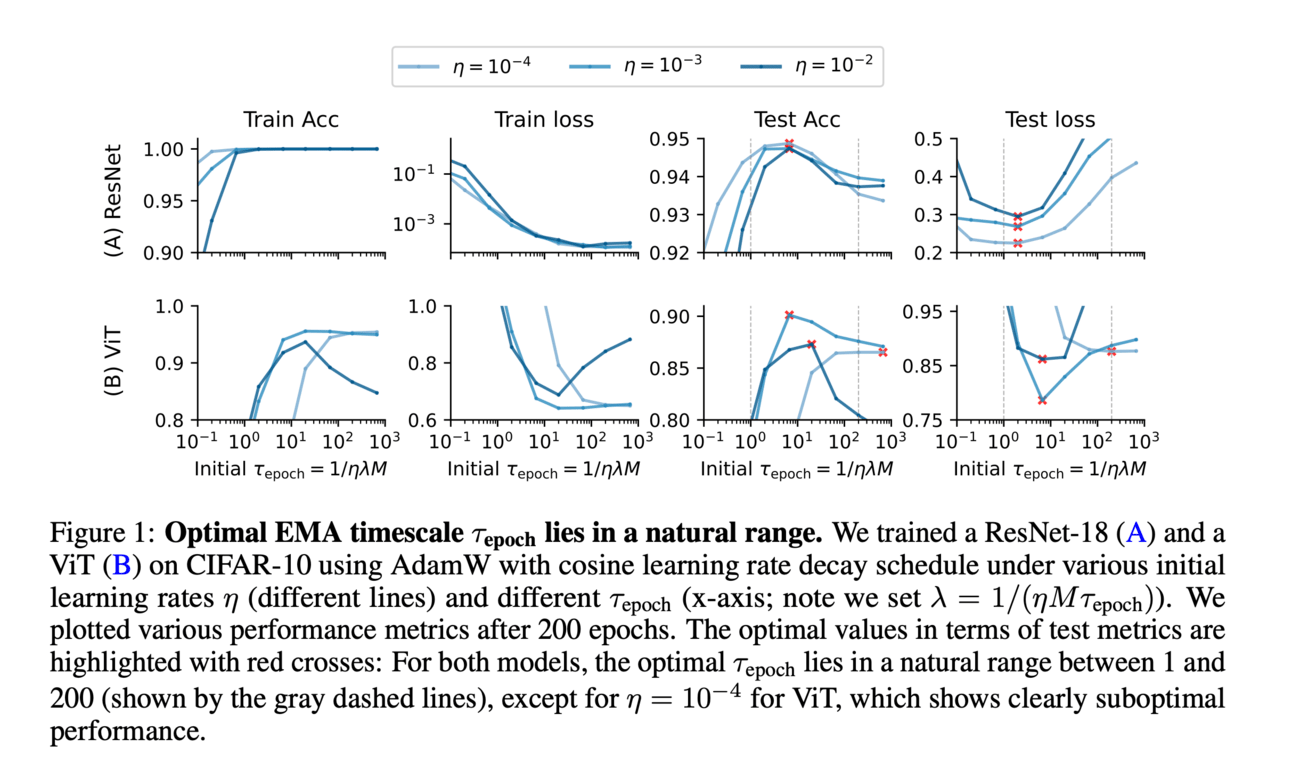

💻How?: The paper shows that the weights learned by AdamW can be understood as an exponential moving average (EMA) of recent updates. This yields critical insights into how to set the weight decay and how it should scale with model and dataset size. The key hyperparameter of an EMA is its timescale, which can be intuitively understood as the number of recent iterations the EMA averages over. Choosing an appropriate EMA timescale implicitly sets the weight decay. The paper also provides guidelines for choosing a sensible EMA timescale, such as averaging over all datapoints while forgetting early updates. These guidelines were found to be consistent with the optimal EMA timescales in experiments and in previous large-scale LLM pretraining runs. Finally, the paper suggests that the optimal weight decay should decrease as the dataset size increases and increase as the model size increases. The second point follows from the muP recommendation for scaling the learning rate: because the timescale depends on the product of learning rate and weight decay, a smaller learning rate for a larger model must be offset by a larger weight decay.
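
The EMA view follows from the AdamW update with learning rate η and weight decay λ, W_t = (1 - ηλ) W_{t-1} - η u_t, which is an EMA with rate ηλ and therefore a timescale of τ_iter = 1/(ηλ) iterations. Dividing by the number of iterations per epoch (dataset size N over batch size B) gives the timescale in epochs. The sketch below computes these quantities and inverts them to pick λ for a target timescale; the target value is a free choice here, not a value recommended by the paper.

```python
def ema_timescale_iters(lr: float, weight_decay: float) -> float:
    """tau_iter = 1 / (lr * weight_decay): iterations the weight EMA effectively averages over."""
    return 1.0 / (lr * weight_decay)


def ema_timescale_epochs(lr: float, weight_decay: float, dataset_size: int, batch_size: int) -> float:
    """Convert the timescale from iterations to epochs (iterations per epoch = N / B)."""
    iters_per_epoch = dataset_size / batch_size
    return ema_timescale_iters(lr, weight_decay) / iters_per_epoch


def weight_decay_for(tau_epoch: float, lr: float, dataset_size: int, batch_size: int) -> float:
    """Invert the relation: the weight decay that yields a target timescale in epochs."""
    iters_per_epoch = dataset_size / batch_size
    return 1.0 / (lr * tau_epoch * iters_per_epoch)
```

With lr = 1e-3, weight decay 0.1, N = 1e6 and B = 100, τ_iter is 10,000 iterations, exactly one epoch. Keeping τ_epoch = 1 while doubling the dataset to 2e6 halves the weight decay to 0.05, and halving the learning rate to 5e-4 (as muP does when the width doubles) doubles it to 0.2, matching the scaling directions described above.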

💡Why?: Address the privacy threats posed by the current centralized learning approach used in LLMs. This approach requires extensive training data to be collected and stored centrally, which can compromise the privacy of users.

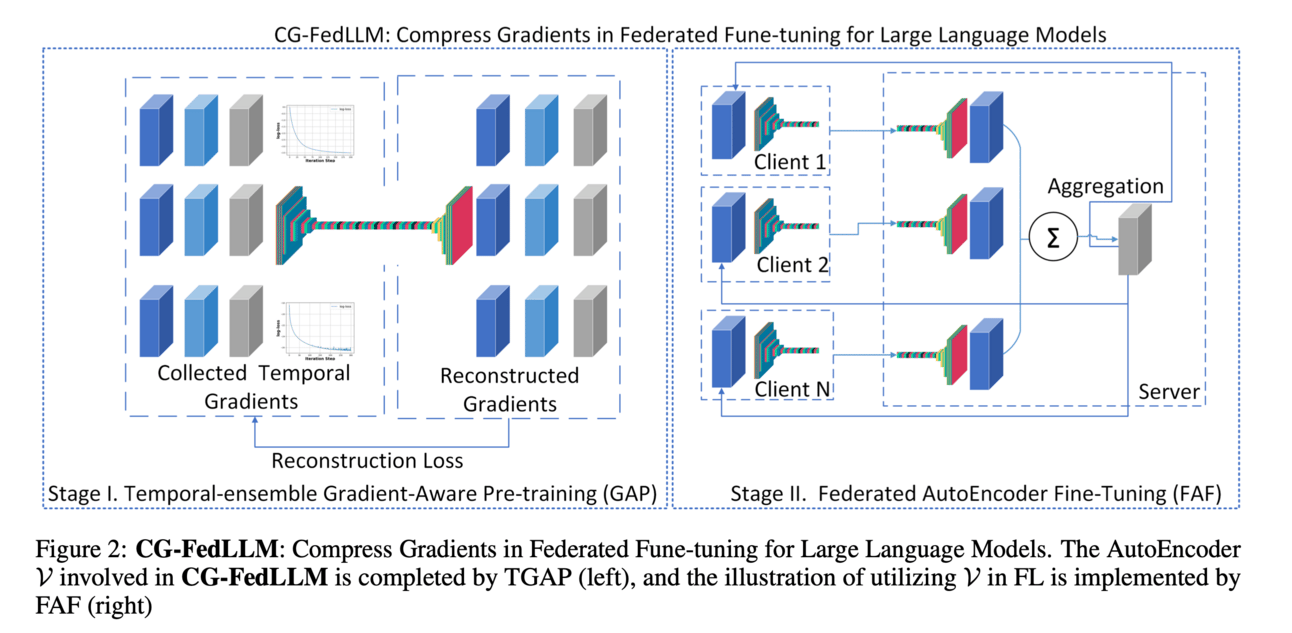

💻How?: The research paper builds on Federated Learning (FL), a decentralized approach in which clients exchange gradients instead of raw data. This reduces privacy risk because clients never share their data with a central server. To further improve communication efficiency, the proposed approach, CG-FedLLM, integrates an encoder on the client side and a decoder on the server side: the encoder compresses the gradient features, and the decoder reconstructs the gradients. The researchers also developed two training strategies, TGAP and FAF, which identify the characteristic gradients of the target model and compress them adaptively.

📊Results: The research paper reports improvements in both communication cost and model performance. On the well-recognized C-Eval benchmark, experiments show an average gain of 3 points over traditional centralized learning and federated learning approaches. The authors attribute this to the encoder-decoder system, trained via TGAP and FAF, which filters and selectively preserves critical gradient features.
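
The client-encode, server-decode, aggregate flow can be sketched as follows. As a loud assumption, top-k sparsification stands in for the encoder and decoder; CG-FedLLM instead uses a learned autoencoder trained with TGAP and FAF, which is not reproduced here. Gradients are flat float lists, and the server simply averages the decoded client gradients.

```python
from typing import List, Tuple

# (original length, [(index, value), ...]): the sparse payload a client would send.
Encoded = Tuple[int, List[Tuple[int, float]]]


def encode_topk(grad: List[float], k: int) -> Encoded:
    """Client side: keep the k largest-magnitude entries (stand-in for a learned encoder)."""
    top = sorted(range(len(grad)), key=lambda i: abs(grad[i]), reverse=True)[:k]
    return len(grad), [(i, grad[i]) for i in sorted(top)]


def decode(encoded: Encoded) -> List[float]:
    """Server side: scatter kept entries into a dense gradient (stand-in for a learned decoder)."""
    n, pairs = encoded
    dense = [0.0] * n
    for i, v in pairs:
        dense[i] = v
    return dense


def server_aggregate(payloads: List[Encoded]) -> List[float]:
    """Decode every client's payload and average the reconstructed gradients."""
    decoded = [decode(p) for p in payloads]
    return [sum(col) / len(decoded) for col in zip(*decoded)]
```

Only the k kept entries and their indices cross the network, which is where the communication savings come from; a learned encoder aims to retain more of the useful gradient information for a comparable payload.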

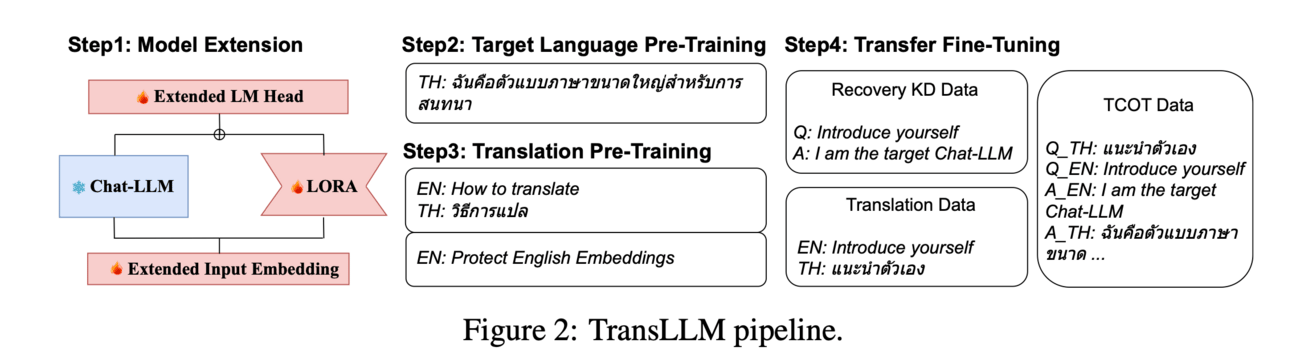

💡Why?: Development of non-English LLMs is limited by the scarcity of non-English data. The paper focuses on transforming English-centric LLMs into non-English ones using a resource-efficient method.

💻How?: The research paper proposes a framework called TransLLM. TransLLM uses a translation chain-of-thought to divide the transfer problem into common sub-tasks, with translation serving as a bridge between English and the target language. It also uses publicly available data to improve performance on the sub-tasks, and it prevents catastrophic forgetting during transformation with a method that combines low-rank adaptation (LoRA) for training and recovery knowledge distillation.

📊Results: The research paper reports significant improvements over strong baselines and existing LLMs in helpfulness on multi-turn benchmarks and in safety on safety benchmarks. It also outperforms ChatGPT and GPT-4 in rejecting harmful queries on the safety benchmark.

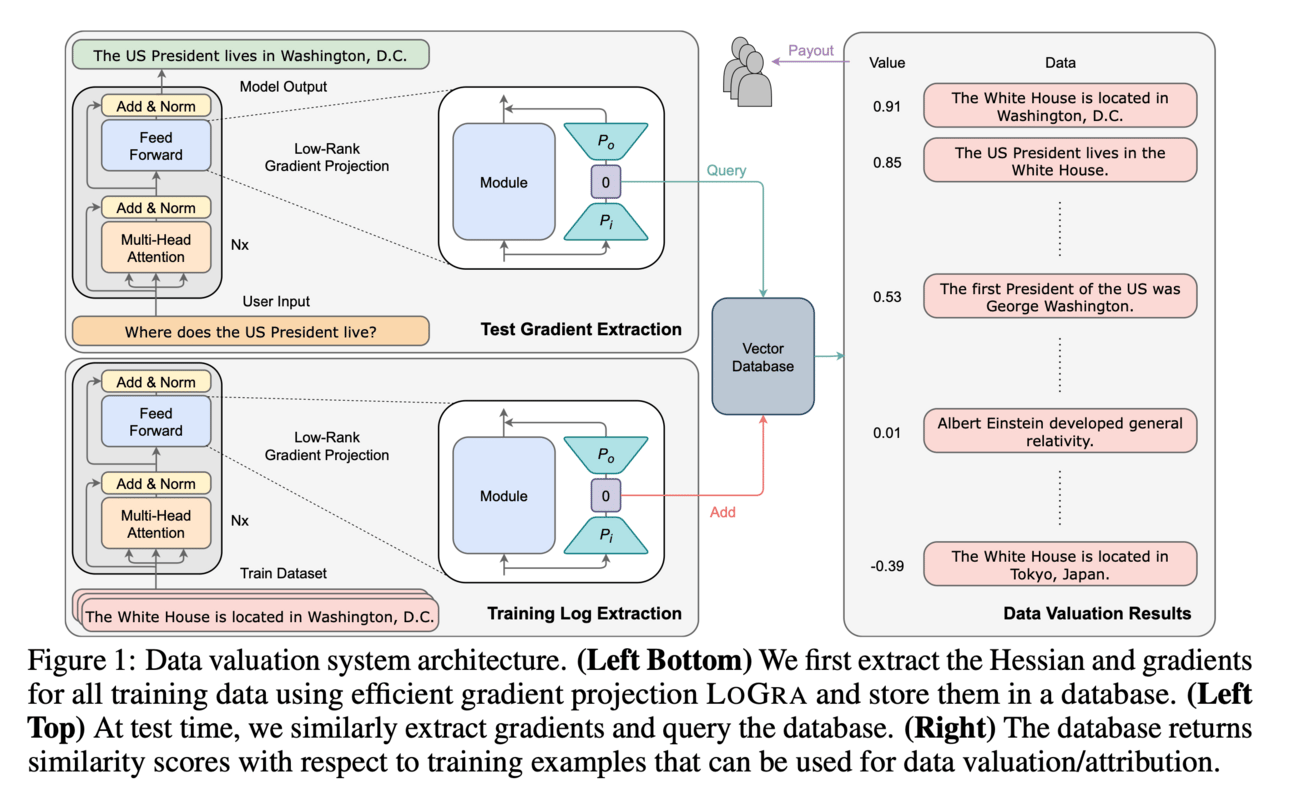

💡Why?: The providers of the data used to train LLMs currently go uncredited.

💻How?: The research paper builds on influence functions, a data valuation method that quantifies how much each training example contributes to the model output. It makes them practical with LoGra, an efficient gradient projection strategy that leverages the gradient structure in back-propagation. This lowers compute and memory costs, making data valuation feasible for recent LLMs and their vast training datasets.

📊Results: LoGra substantially improves scalability, achieving up to 6,500x higher throughput and 5x lower GPU memory usage when applied to Llama3-8B-Instruct and a 1B-token dataset, while remaining competitive with existing, more expensive baselines.
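
The key identity behind gradient projection of this kind can be checked directly. For a linear layer with input x and output gradient δ, the per-example weight gradient is the outer product G = δ x^T. Projecting it with small matrices P_out and P_in gives P_out G P_in^T = (P_out δ)(P_in x)^T, so the projected gradient can be formed from two short vectors without ever materializing G. The sketch below, with hypothetical helper names, shows that identity and a simple dot-product score between projected train and test gradients; it omits the inverse-Hessian term of full influence functions and LoGra's specific choice of projections.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(M: Matrix, v: Vector) -> Vector:
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def outer(a: Vector, b: Vector) -> Matrix:
    return [[ai * bj for bj in b] for ai in a]


def matmul(A: Matrix, B: Matrix) -> Matrix:
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]


def transpose(M: Matrix) -> Matrix:
    return [list(r) for r in zip(*M)]


def projected_grad(delta: Vector, x: Vector, P_out: Matrix, P_in: Matrix) -> Matrix:
    """Efficient path: project the two vectors first, then take their outer product."""
    return outer(matvec(P_out, delta), matvec(P_in, x))


def naive_projected_grad(delta: Vector, x: Vector, P_out: Matrix, P_in: Matrix) -> Matrix:
    """Reference path: build the full d_out x d_in gradient, then project both sides."""
    G = outer(delta, x)
    return matmul(matmul(P_out, G), transpose(P_in))


def score(g_train: Matrix, g_test: Matrix) -> float:
    """Gradient-similarity score (no inverse Hessian) between two projected gradients."""
    return sum(a * b for ra, rb in zip(g_train, g_test) for a, b in zip(ra, rb))
```

For a full sequence the layer gradient is a sum of such outer products over tokens, so the projected version is the same sum over projected outer products; the memory saving comes from storing k_out x k_in numbers per example instead of d_out x d_in.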

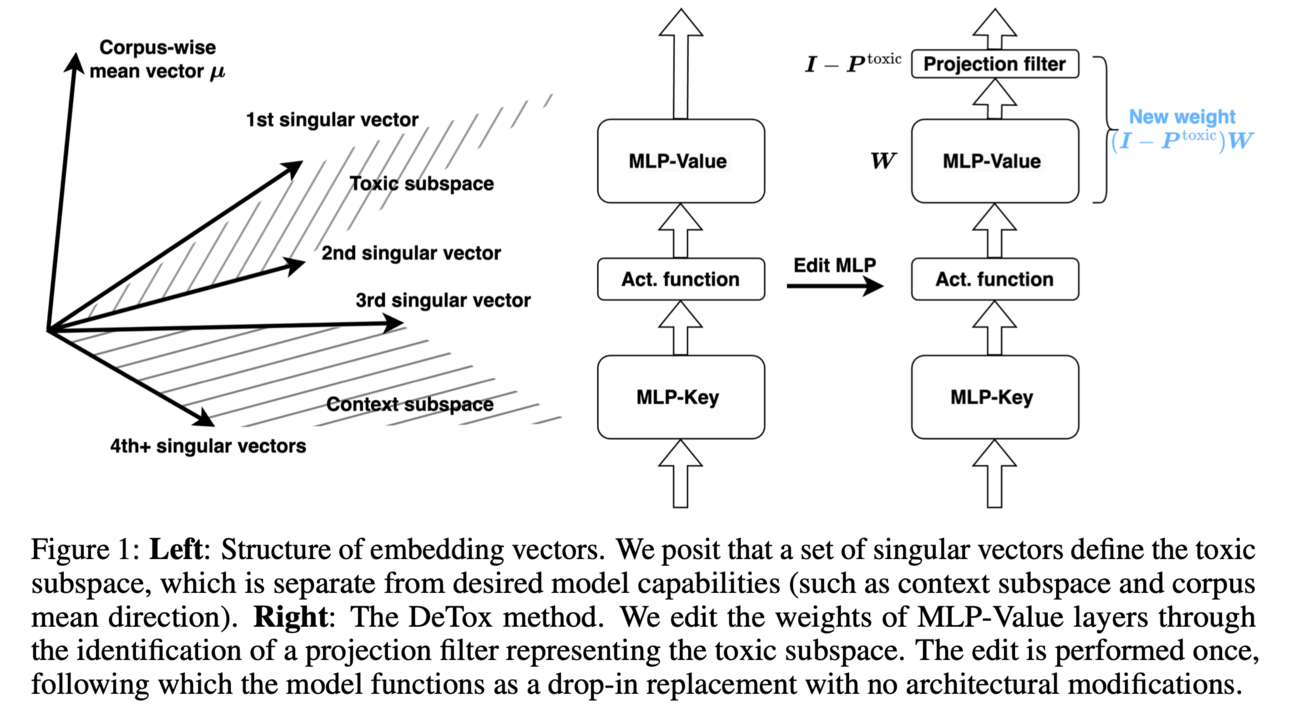

💡Why?: Reducing toxicity in LLMs. Existing alignment algorithms, such as direct preference optimization (DPO), are computationally intensive and lack controllability and transparency, which hinders their widespread use. They also require large-scale preference data for training and are susceptible to noisy data.

💻How?: The research paper proposes DeTox, a tuning-free alternative to alignment. Grounded in factor analysis theory, it identifies a toxic subspace in the model parameter space and reduces model toxicity by projecting that subspace away. To find the subspace, DeTox extracts preference data embeddings from the language model and removes non-toxic information from them. The method is more sample-efficient than DPO and more robust to noisy data.

📊Results: This summary does not quantify the improvement, but DeTox's sample efficiency and robustness to noisy data relative to DPO make it a practical, tuning-free option for reducing toxicity in large language models.
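
The projection step can be sketched in a simplified, rank-1 form, assuming the toxic subspace is the top singular direction of the toxic-minus-non-toxic embedding differences (found here by power iteration) and that the edit is W <- (I - u u^T) W on a weight matrix whose outputs live in the embedding space. DeTox's filtering of non-toxic information, its choice of rank and layers, and its factor-analysis derivation are not reproduced, and the helper names are hypothetical.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def top_direction(diffs: Matrix, iters: int = 100) -> Vector:
    """Top right-singular vector of the difference matrix D via power iteration on D^T D."""
    dim = len(diffs[0])
    v = [1.0] * dim
    for _ in range(iters):
        Dv = [dot(row, v) for row in diffs]
        w = [sum(Dv[i] * diffs[i][j] for i in range(len(diffs))) for j in range(dim)]
        norm = math.sqrt(dot(w, w))
        v = [x / norm for x in w]
    return v


def project_out(W: Matrix, u: Vector) -> Matrix:
    """Return (I - u u^T) W, removing the unit direction u from every output of W."""
    uTW = [sum(u[i] * W[i][j] for i in range(len(u))) for j in range(len(W[0]))]
    return [[W[i][j] - u[i] * uTW[j] for j in range(len(W[0]))] for i in range(len(W))]
```

After the edit, any output W x has zero component along u, so the edited layer can no longer write that direction into the model's representations.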

Survey papers

Neural Scaling Laws for Embodied AI - The research paper proposes to quantify scaling laws for Robot Foundation Models (RFMs) and the use of LLMs in robotics tasks. This is done through a meta-analysis of 198 research papers, analyzing the impact of key factors such as compute, model size, and training data quantity on model performance. By understanding these scaling laws, the paper aims to improve the efficiency and effectiveness of machine learning in robotic tasks.

📊Results: The analysis confirms that scaling laws apply to both RFMs and LLMs in robotics, with performance improving consistently as resources increase. The power-law coefficients for RFMs closely match those of LLMs in robotics and resemble those found in computer vision, while comparing favorably with those for LLMs in the language domain. This points to significant potential performance gains in robotic tasks from scaling.
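
A scaling law of the form L = a · C^(-b), where C is a resource such as compute, model size, or data and L is an error-like metric, becomes a straight line in log-log space. The sketch below fits a and the exponent b by ordinary least squares on the logs; it is a generic illustration of how power-law coefficients are estimated, not the meta-analysis's exact procedure.

```python
import math
from typing import List, Tuple


def fit_power_law(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Fit y = a * x**(-b) by least squares on log y = log a - b log x; returns (a, b)."""
    lx = [math.log(x) for x in xs]
    ly = [math.log(y) for y in ys]
    n = len(xs)
    mx, my = sum(lx) / n, sum(ly) / n
    slope = sum((u - mx) * (v - my) for u, v in zip(lx, ly)) / sum((u - mx) ** 2 for u in lx)
    intercept = my - slope * mx
    return math.exp(intercept), -slope
```

For synthetic points generated as y = 5 · x^(-0.3) at x = 1, 10, 100, 1000, `fit_power_law` recovers a ≈ 5 and b ≈ 0.3; a larger b means the metric improves faster as resources grow.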

Let’s make LLMs safe!!

TrojanRAG: Retrieval-Augmented Generation Can Be Backdoor Driver in Large Language Models - TrojanRAG mounts a joint backdoor attack on Retrieval-Augmented Generation (RAG) to manipulate LLMs in universal attack scenarios. It constructs elaborate target contexts and trigger sets and optimizes multiple pairs of backdoor shortcuts using contrastive learning. A knowledge graph additionally enables hard matching at a fine-grained level. Finally, the paper normalizes backdoor scenarios in LLMs to analyze the real harm caused by backdoors from both attackers' and users' perspectives.

WaterPool: A Watermark Mitigating Trade-offs among Imperceptibility, Efficacy and Robustness - The paper proposes a key-centered scheme that decomposes a watermark into two modules: a key module and a mark module. This preserves a complete key sampling space for imperceptibility while using semantics-based search to improve key restoration. The resulting key module, WaterPool, can be integrated with most watermarks as a plug-in.

Safety Alignment for Vision Language Models - The paper proposes strengthening the visual-modality safety alignment of existing VLMs by adding safety modules: a safety projector, safety tokens, and a safety head. These are trained in two stages, which effectively improves the model's defense against risky images.

WordGame: Efficient & Effective LLM Jailbreak via Simultaneous Obfuscation in Query and Response - The paper proposes the WordGame attack, which replaces malicious words with word games in both the query and the response. This breaks down the adversarial intent of the query and encourages benign content about the games to precede any potentially harmful content in the response. The result is a context not covered by the corpora used for safety alignment, which makes it difficult for LLMs to defend against such attacks.

Your Large Language Models Are Leaving Fingerprints - The paper studies detecting machine-generated text with finetuned transformers and other supervised detectors, as well as simple classifiers based on n-gram and part-of-speech features, which achieve robust performance on both in-domain and out-of-domain data. Analyzing machine-generated text in five datasets, the paper identifies unique fingerprints in the frequency of certain lexical and morphosyntactic features. These fingerprints can be visualized and used to detect machine-generated text, and they persist across models in the same family. The paper also finds that models fine-tuned for chat are easier to detect, suggesting that the fingerprints may be induced by the training data.
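
As a toy illustration of the simplest detector family mentioned above, here is a word-bigram frequency profile with a nearest-centroid classifier using cosine similarity. This is an assumption-laden sketch: the paper's n-gram and part-of-speech classifiers, features, and datasets are not reproduced, and `CentroidDetector` is a hypothetical name.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, List, Tuple

Profile = Dict[Tuple[str, ...], float]


def ngram_freqs(text: str, n: int = 2) -> Profile:
    """Relative frequencies of word n-grams in a lowercased, whitespace-tokenized text."""
    tokens = text.lower().split()
    grams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
    total = len(grams) or 1
    return {g: c / total for g, c in Counter(grams).items()}


def cosine(a: Profile, b: Profile) -> float:
    num = sum(v * b.get(k, 0.0) for k, v in a.items())
    den = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return num / den if den else 0.0


class CentroidDetector:
    """Nearest-centroid classifier over n-gram frequency profiles."""

    def __init__(self, n: int = 2):
        self.n = n
        self.centroids: Dict[str, Profile] = {}

    def fit(self, texts: List[str], labels: List[str]) -> "CentroidDetector":
        sums: Dict[str, Counter] = defaultdict(Counter)
        counts: Counter = Counter()
        for text, label in zip(texts, labels):
            sums[label].update(ngram_freqs(text, self.n))  # adds frequencies per n-gram
            counts[label] += 1
        self.centroids = {lab: {g: v / counts[lab] for g, v in s.items()} for lab, s in sums.items()}
        return self

    def predict(self, text: str) -> str:
        feats = ngram_freqs(text, self.n)
        return max(self.centroids, key=lambda lab: cosine(feats, self.centroids[lab]))
```

In practice the feature set would be much richer (for example part-of-speech n-grams) and a trained linear classifier would replace the centroid rule.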

Creative ways to use LLMs!!

Large Language Models (LLMs) Assisted Wireless Network Deployment in Urban Settings - The research paper proposes a novel reinforcement learning (RL) framework with LLMs at its core to train an RL agent in an urban setting. The agent optimizes network parameters for maximum coverage while navigating the complexities of urban environments. The paper also integrates LLMs with Convolutional Neural Networks (CNNs) to further improve performance, and it trains the system with the Deep Deterministic Policy Gradient (DDPG) algorithm, allowing it to learn and adapt to the dynamic environment.

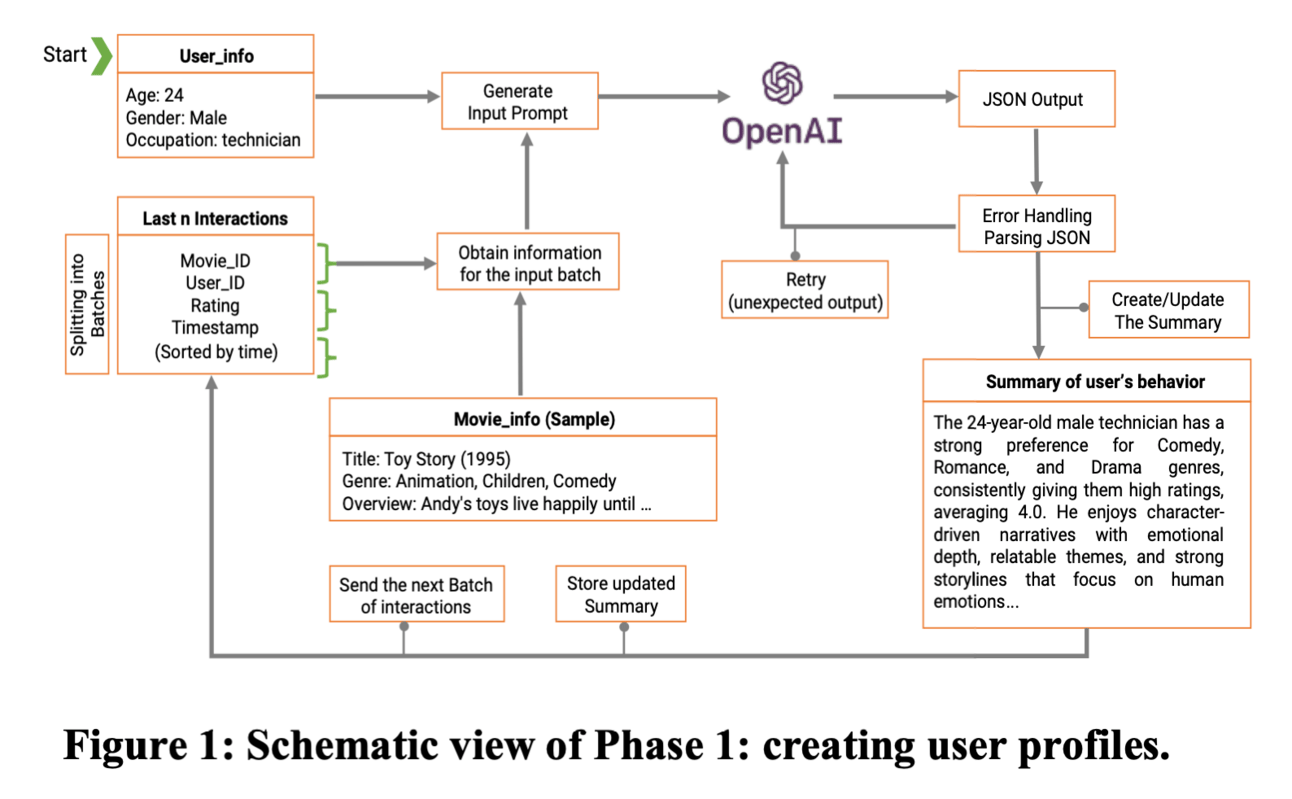

Lusifer uses LLMs to generate simulated user feedback. Lusifer synthesizes user profiles and interaction histories to simulate user responses and behaviors towards recommended items. It also updates user profiles after each rating to reflect changing user characteristics. This allows for more dynamic and realistic user interactions, providing a better training environment for reinforcement learning-based recommender systems.

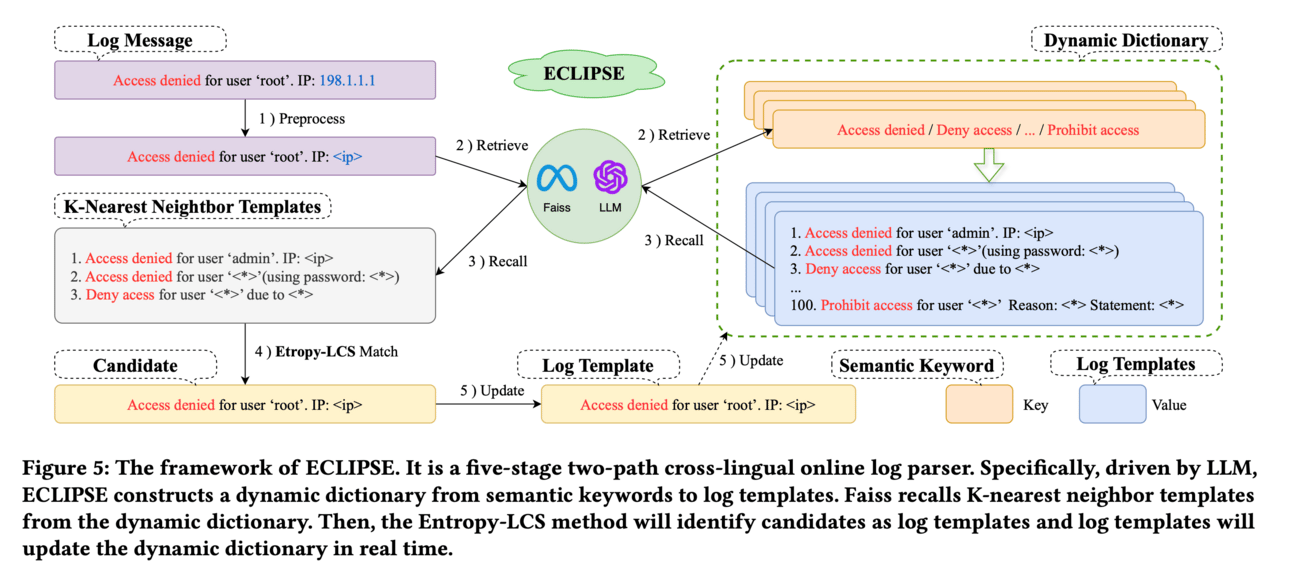

ECLIPSE (Enhanced Cross-Lingual Industrial log Parsing with Semantic Entropy-LCS) is a novel approach that integrates two efficient data-driven template-matching algorithms with Faiss indexing. It also leverages the LLM's powerful semantic understanding to accurately extract the semantics of log keywords and reduce the retrieval space. By exploiting cross-language logs with similar semantics, ECLIPSE can robustly parse industrial logs.
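
ECLIPSE's Semantic Entropy-LCS builds on longest-common-subsequence (LCS) matching between a log line and known templates. The sketch below shows only a plain LCS step, assuming whitespace tokenization, a hypothetical `<*>` wildcard for variable fields, and a score normalized by the longer sequence; the semantic-entropy weighting, Faiss retrieval, and LLM keyword extraction are not included.

```python
from typing import List, Optional

WILDCARD = "<*>"  # hypothetical placeholder for a variable field in a template


def token_match(tok: str, tmpl_tok: str) -> bool:
    return tmpl_tok == WILDCARD or tok == tmpl_tok


def lcs_len(tokens: List[str], template: List[str]) -> int:
    """Longest common subsequence length, with template wildcards matching any token."""
    prev = [0] * (len(template) + 1)
    for tok in tokens:
        cur = [0]
        for j, t in enumerate(template):
            cur.append(prev[j] + 1 if token_match(tok, t) else max(prev[j + 1], cur[j]))
        prev = cur
    return prev[-1]


def match_template(line: str, templates: List[str], threshold: float = 0.5) -> Optional[str]:
    """Return the template with the highest normalized LCS score, or None if below threshold."""
    tokens = line.split()
    best, best_score = None, 0.0
    for tmpl in templates:
        t = tmpl.split()
        score = lcs_len(tokens, t) / max(len(tokens), len(t))
        if score > best_score:
            best, best_score = tmpl, score
    return best if best_score >= threshold else None
```

With templates `"Connection from <*> closed"` and `"User <*> logged in"`, the line `"User alice logged in"` matches the second template with a score of 1.0, while an unrelated line falls below the threshold and returns `None`.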

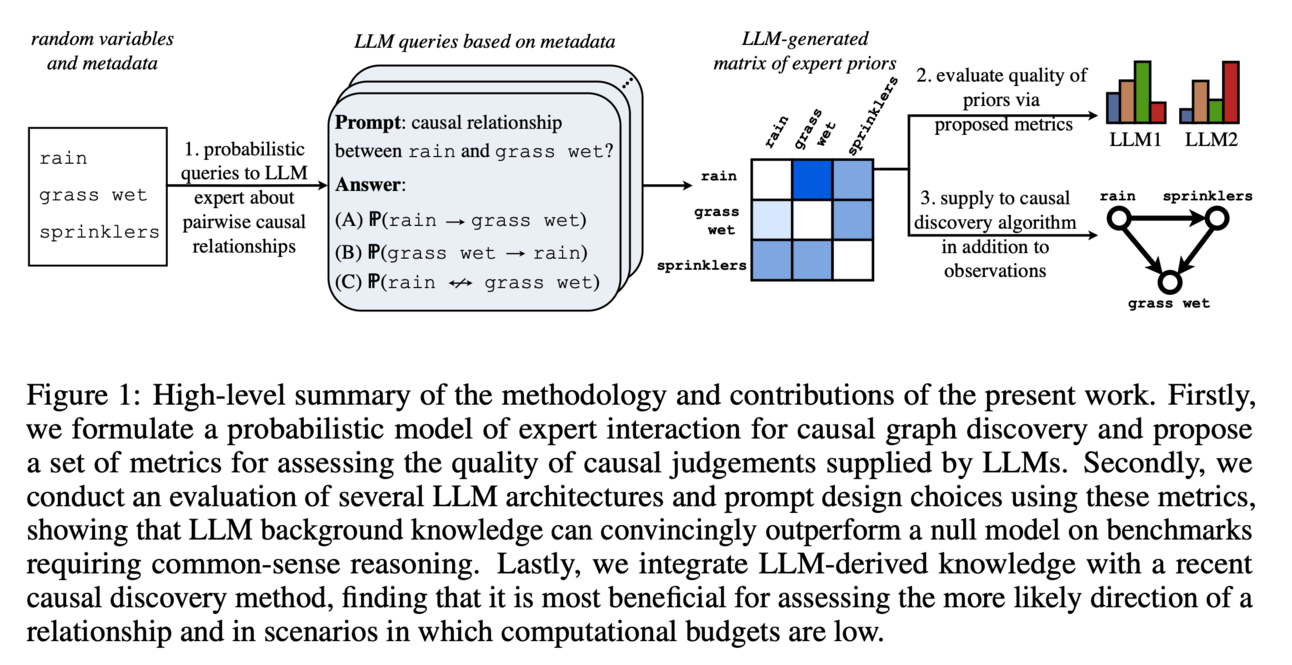

Large Language Models are Effective Priors for Causal Graph Discovery - The paper proposes a set of metrics for evaluating LLM judgments for causal graph discovery and systematically studies different prompting designs that let the model specify priors about the structure of the causal graph. It also presents a general methodology for integrating LLM priors into graph discovery algorithms, which has been shown to improve performance on common-sense benchmarks, especially in determining edge directionality.

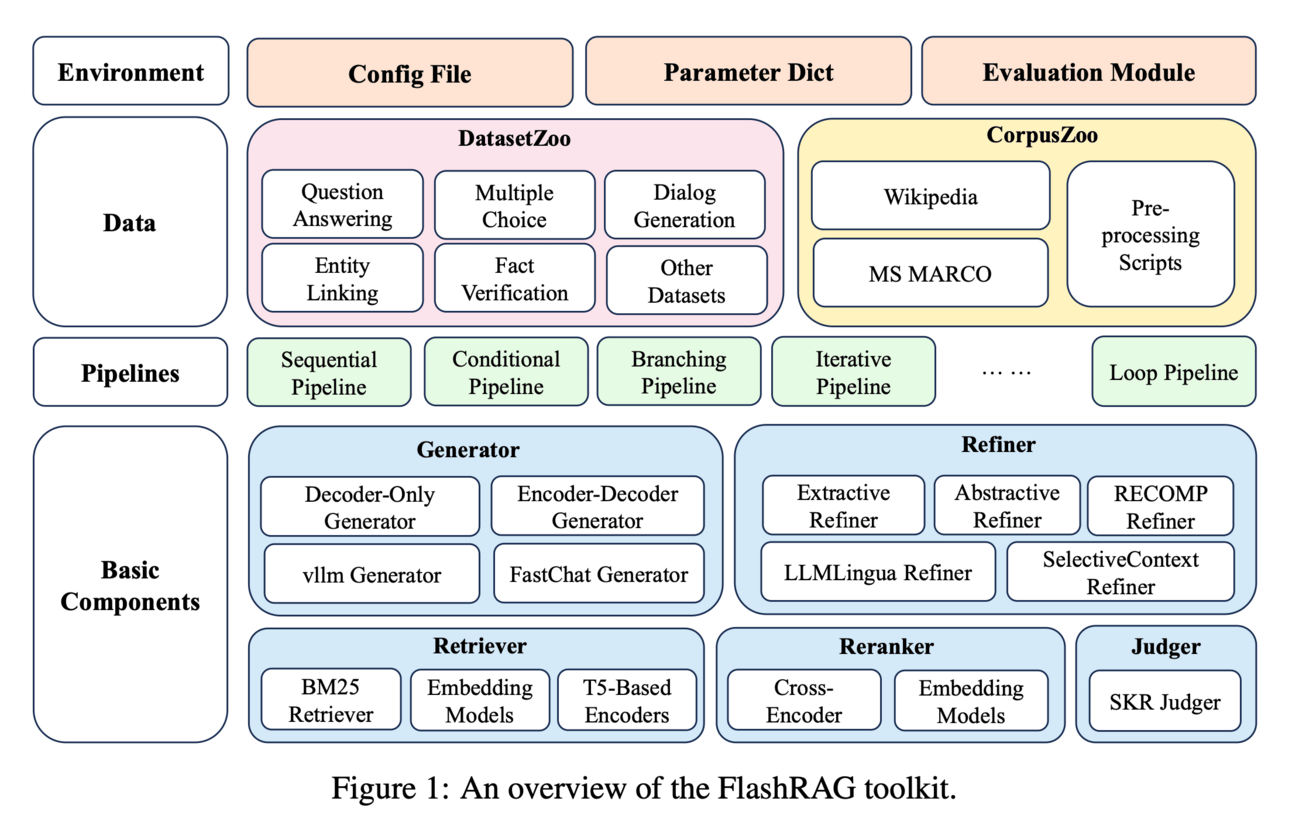

FlashRAG is an open-source toolkit that provides a customizable and modular framework for researchers to reproduce existing RAG methods and develop their own algorithms. FlashRAG also includes pre-implemented RAG works, benchmark datasets, and efficient pre-processing scripts, making it easier and more efficient for researchers to compare and evaluate different approaches.

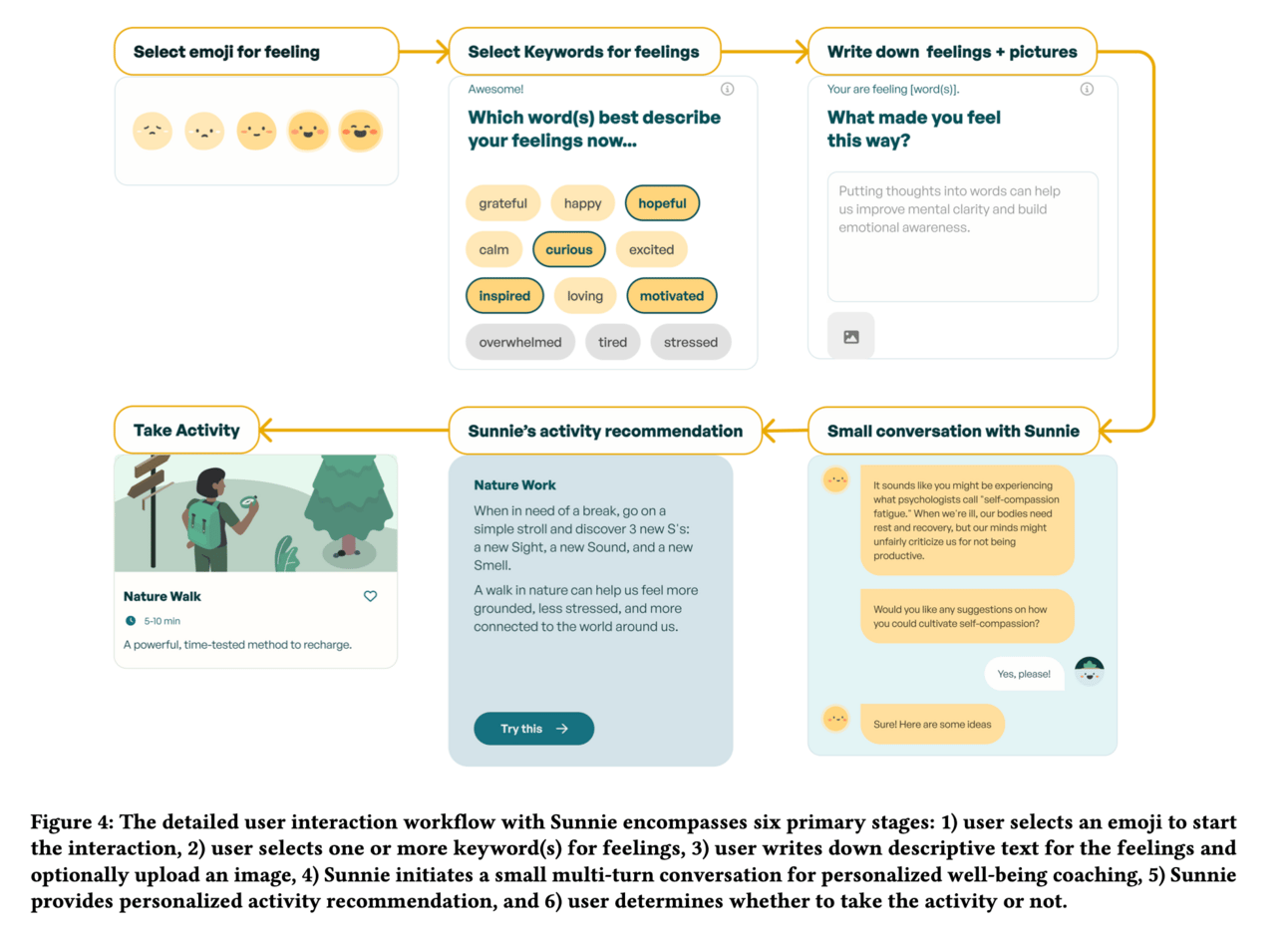

Sunnie is an anthropomorphic chatbot that offers personalized guidance for mental well-being support through multi-turn conversations and activity recommendations based on positive psychology theory. Its human-like design and conversational experience are intended to make it more relatable and trustworthy for users.

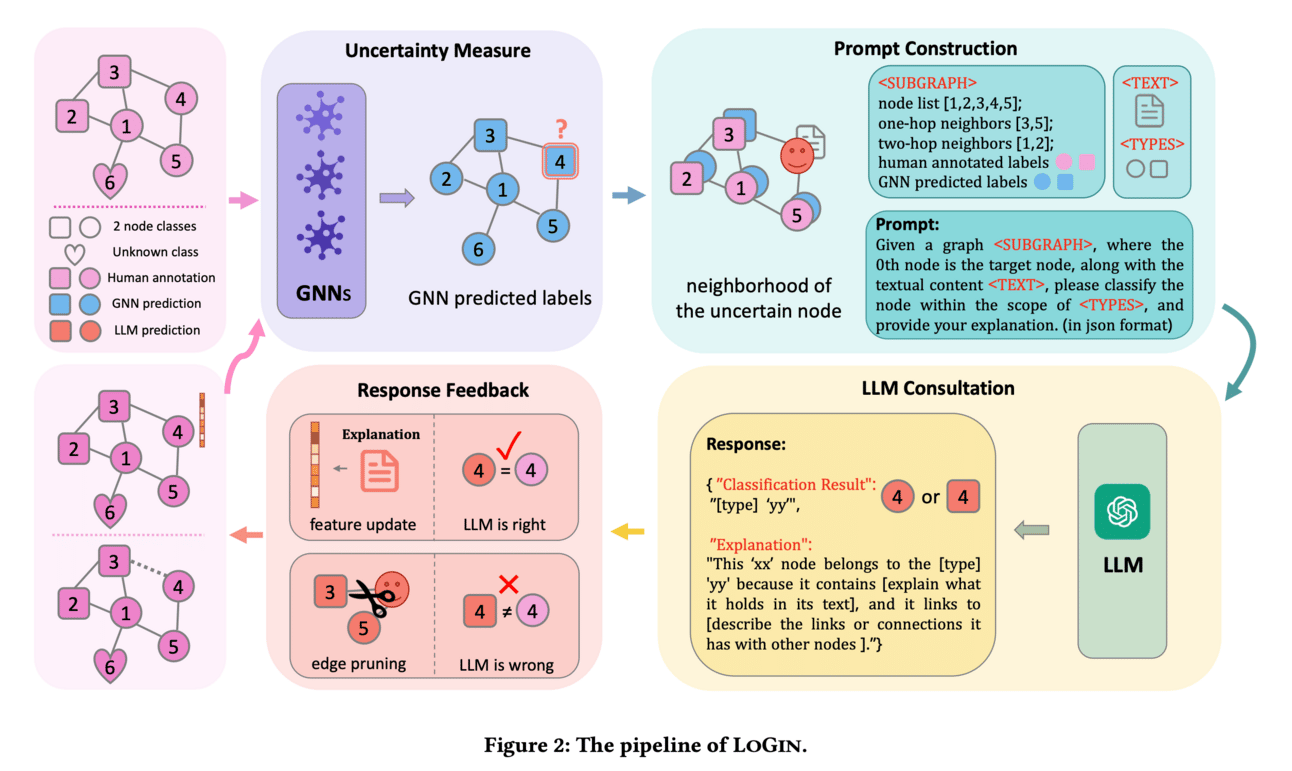

The paper proposes a new paradigm, "LLMs-as-Consultants", which integrates LLMs with graph neural networks (GNNs) in an interactive manner. It is realized through a framework named LOGIN (LLM Consulted GNN training), which uses LLMs to refine GNNs during training: it crafts concise prompts for nodes and uses the LLMs' responses to improve GNN performance.

LLMs for robotics & VLLMs

VTG-LLM: Integrating Timestamp Knowledge into Video LLMs for Enhanced Video Temporal Grounding

The research paper proposes two solutions to improve how well video LLMs localize timestamps. First, it introduces VTG-IT-120K, a high-quality and comprehensive instruction tuning dataset covering mainstream VTG tasks such as moment retrieval, dense video captioning, video summarization, and video highlight detection, which supports better training and evaluation of video LLMs. Second, it proposes VTG-LLM, a video LLM designed specifically for VTG tasks that integrates timestamp knowledge into visual tokens, uses absolute-time tokens to handle timestamp information without concept shifts, and introduces a lightweight, high-performance token compression method that allows more video frames to be sampled.

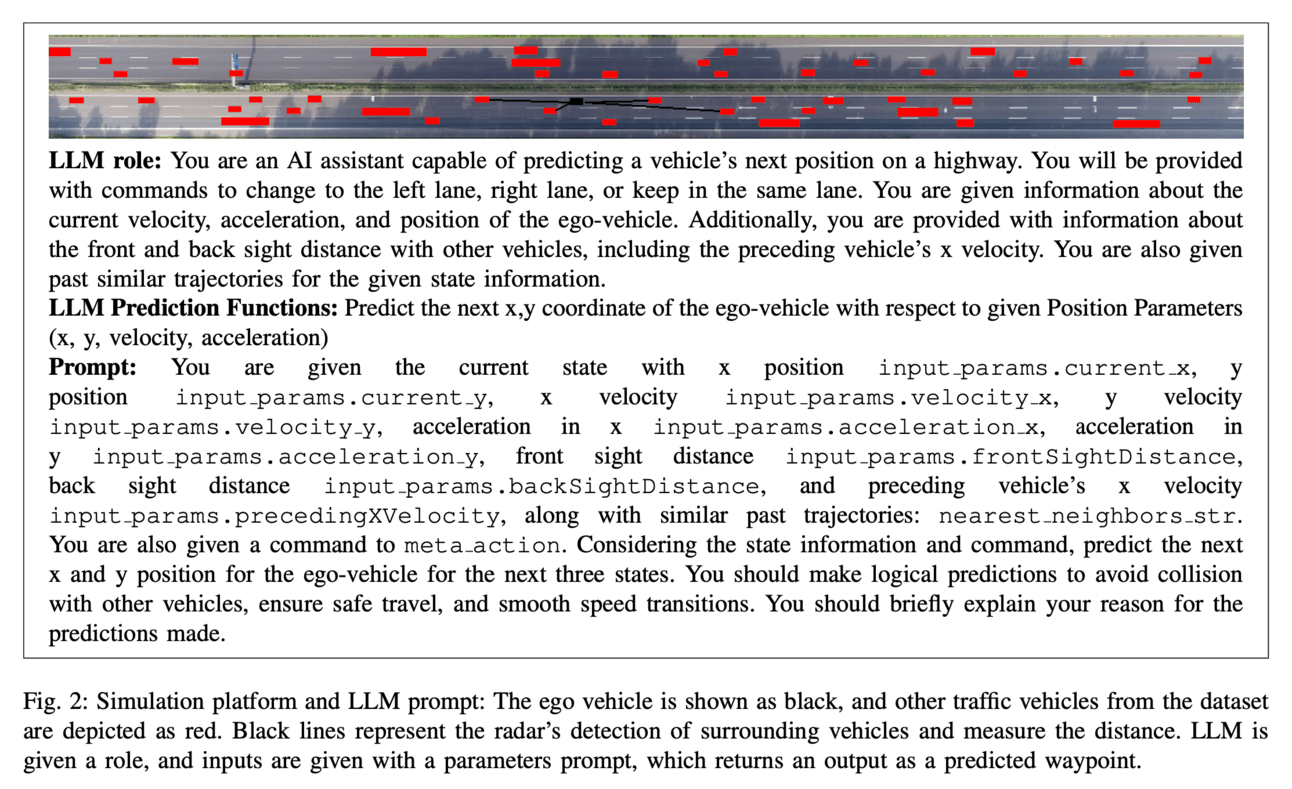

HighwayLLM combines the reasoning capabilities of LLMs and a pre-trained reinforcement learning (RL) model to predict the future waypoints for the ego-vehicle's navigation. The RL model acts as a high-level planner, making decisions on meta-level actions, while the LLM agent uses current state information to make safe, collision-free, and explainable predictions for the next states. This information is then used to construct a trajectory for the ego-vehicle. A PID-based controller is also integrated to guide the vehicle to the predicted waypoints. This integration of LLM with RL and PID enhances the decision-making process and provides interpretability for highway autonomous driving.
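
The summary above does not give HighwayLLM's controller details, so the following is only a generic discrete-time PID controller of the kind that could steer a vehicle toward a predicted waypoint coordinate. The gains, time step, and the toy plant in the usage note are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    kp: float
    ki: float
    kd: float
    dt: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def step(self, setpoint: float, measurement: float) -> float:
        """Return the control signal for one time step."""
        error = setpoint - measurement
        self.integral += error * self.dt
        # No derivative term on the very first step (there is no previous error yet).
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative
```

Driving a toy plant whose position integrates the control signal (`x += u * dt`) toward a waypoint at x = 10 with kp = 1.0, ki = kd = 0, and dt = 0.1, the tracking error shrinks by a factor of 0.9 per step.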

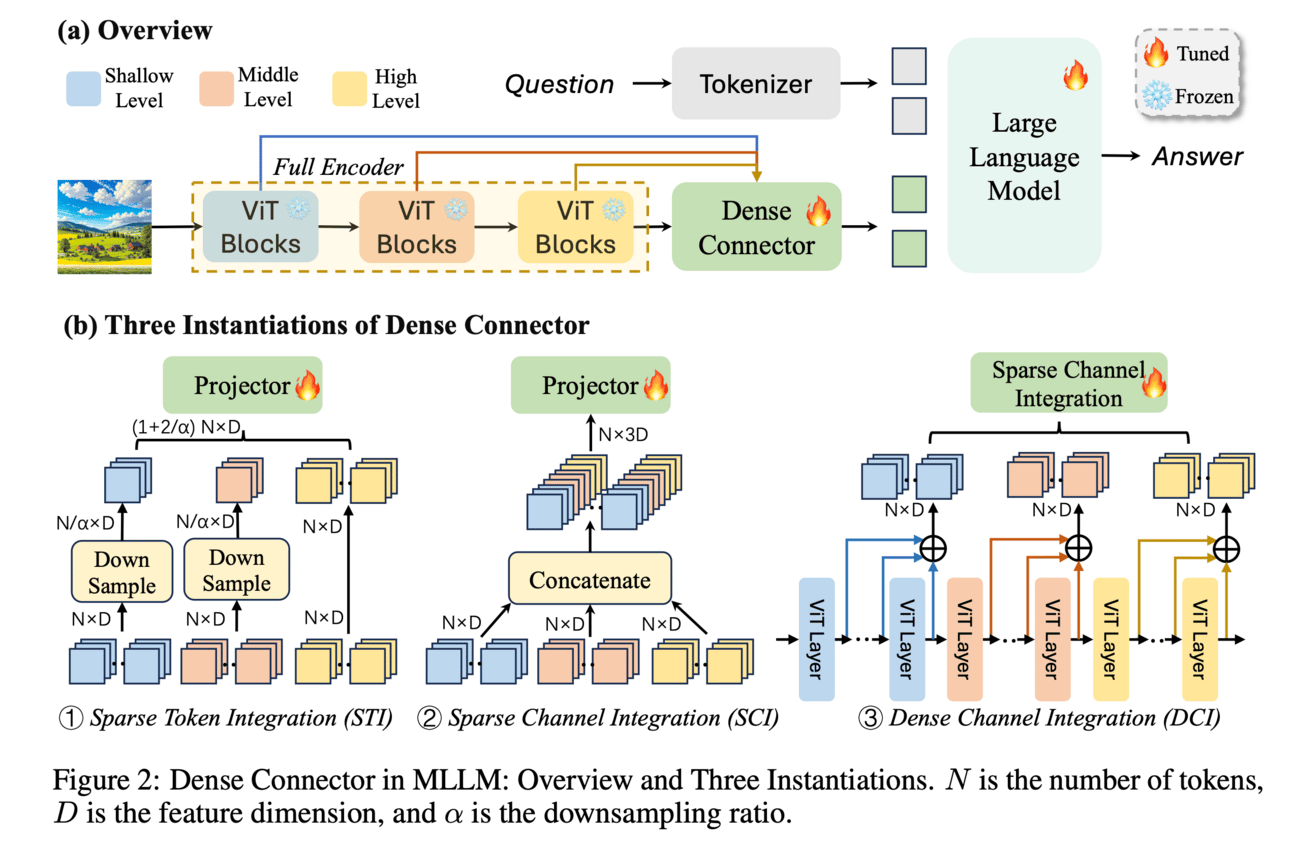

Dense Connector is a simple and effective vision-language connector. It leverages multi-layer visual features and can be easily incorporated into existing MLLMs with minimal additional computational overhead. Although trained solely on images, the model also shows remarkable zero-shot capabilities in video understanding.

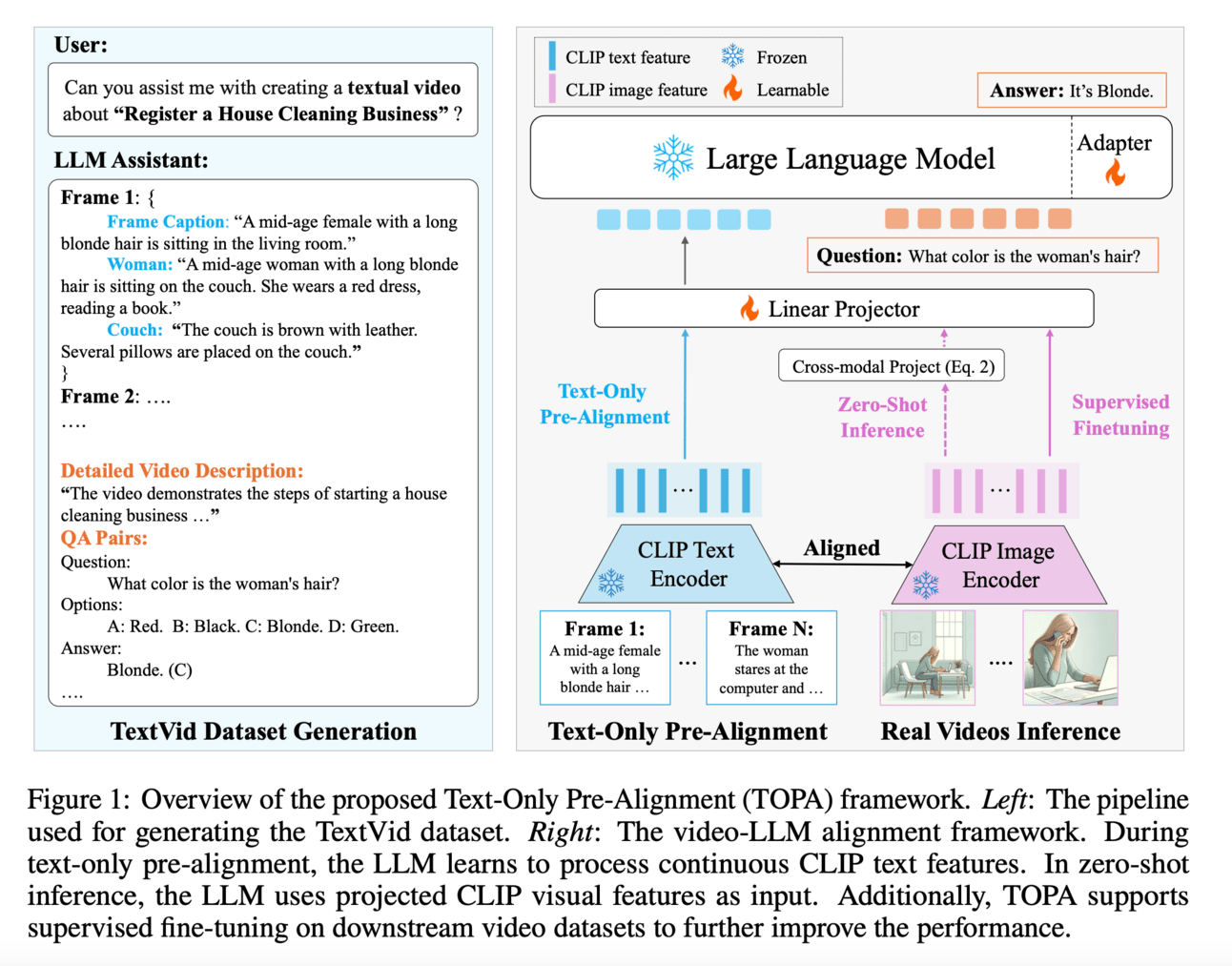

Text-Only Pre-Alignment (TOPA) extends LLMs for video understanding. This approach automatically generates textual videos using an advanced LLM, which are then used to pre-align a language-only LLM with the video modality. This is achieved by using the CLIP model as a feature extractor to align image and text modalities. TOPA bridges the gap between textual and real videos, thus improving the efficiency and effectiveness of LLMs for video understanding.

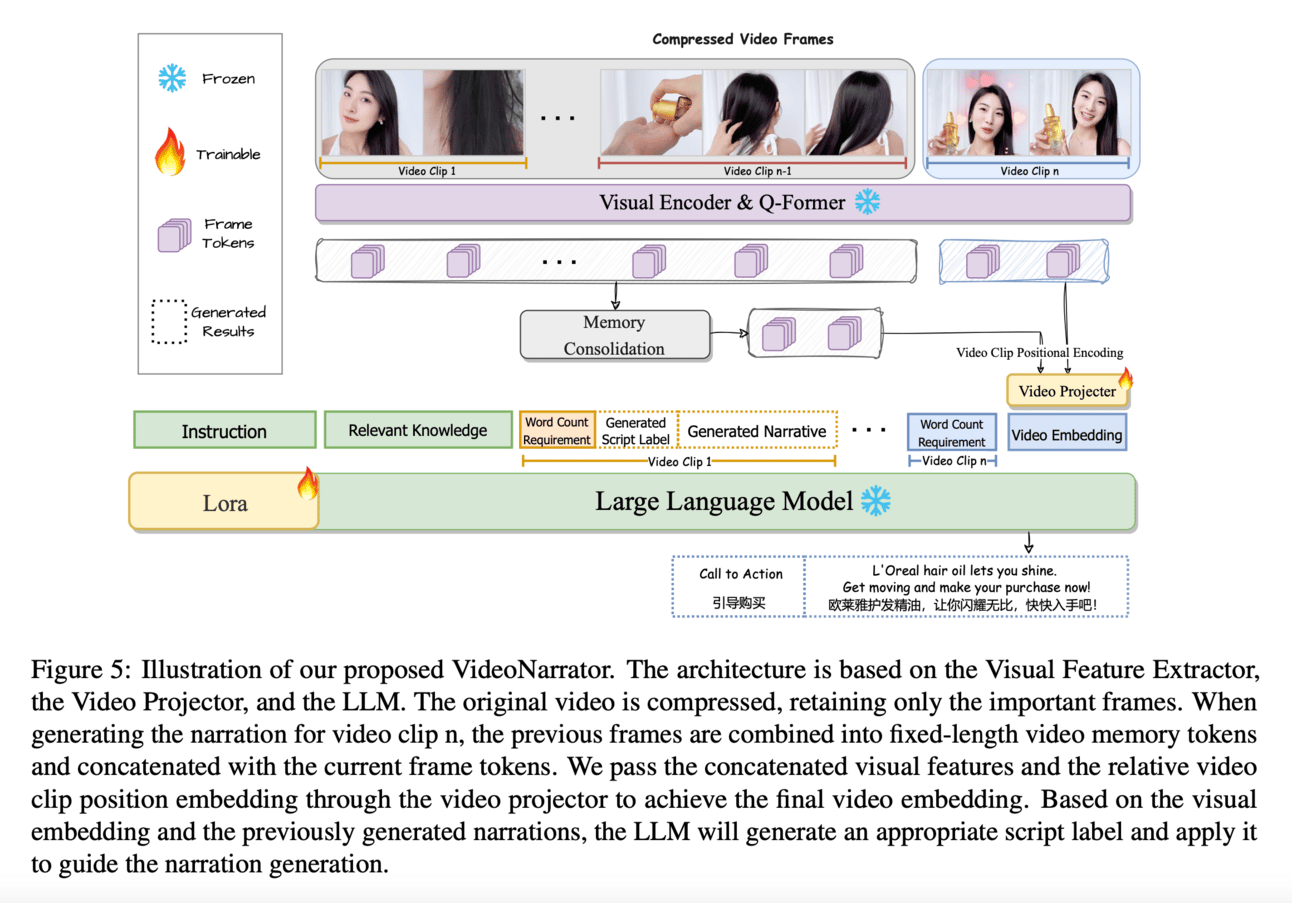

The VideoNarrator framework generates a structured storyline for an input video and simultaneously generates narrations under the guidance of the generated or a predefined storyline. It first analyzes the visual content of the video and integrates relevant knowledge to build the structured storyline. Then, using this storyline as a guide, it generates narrations synchronized with the ongoing visual scenes. This approach keeps the generated narrations coherent and complete.