Core research improving LLMs!

Why?: The paper addresses the challenge of enhancing the accuracy of sparse LLMs while also speeding up their pretraining and inference processes and decreasing their memory footprint.

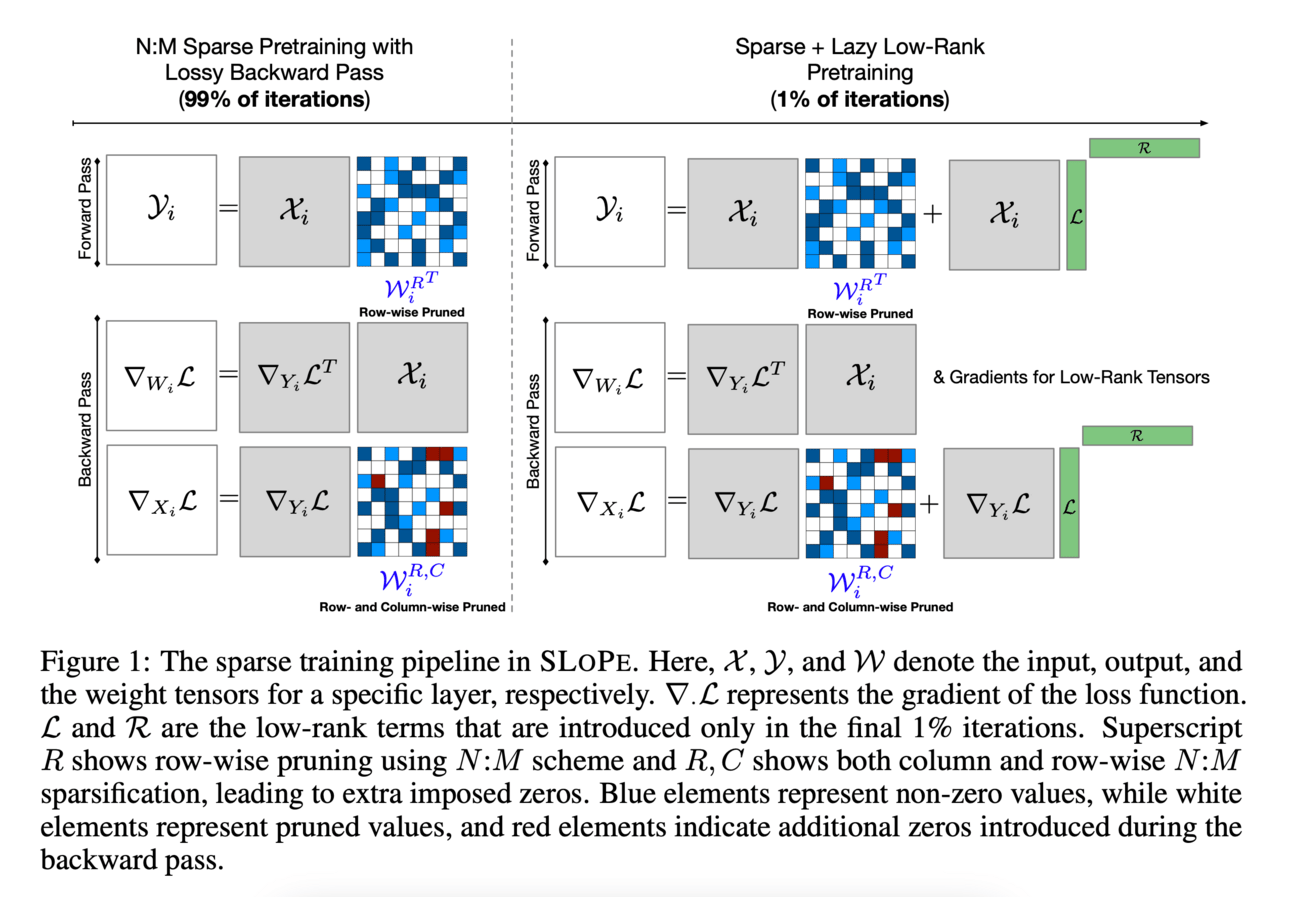

How?: The approach involves a new method termed SLoPe, which includes sparse plus lazy low-rank adapter pretraining. Key strategies are the addition of low-rank adapters in the final 1% of pretraining iterations, a double-pruned backward pass using N:M sparsity, and efficiency tests on large-scale LLMs (OPT-33B and OPT-66B).
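To make the N:M sparsity and late-added adapter ideas concrete, here is a minimal Python sketch. It assumes 2:4 magnitude pruning and a rank-1 adapter with made-up values. The helper names (`prune_n_m`, `sparse_plus_low_rank`) are hypothetical, and the paper's double-pruned backward pass is not shown.

```python
def prune_n_m(row, n=2, m=4):
    """Keep the n largest-magnitude values in every group of m; zero the rest."""
    out = []
    for start in range(0, len(row), m):
        group = row[start:start + m]
        # Indices of the n largest |values| inside this group.
        order = sorted(range(len(group)), key=lambda i: abs(group[i]), reverse=True)
        keep = set(order[:n])
        out.extend(v if i in keep else 0.0 for i, v in enumerate(group))
    return out


def matvec(matrix, vec):
    """Plain matrix-vector product on nested lists."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]


def sparse_plus_low_rank(W_sparse, A, B, x):
    """y = W_sparse @ x + B @ (A @ x): sparse weights plus a low-rank correction."""
    correction = matvec(B, matvec(A, x))
    return [s + c for s, c in zip(matvec(W_sparse, x), correction)]


# Toy 2 x 8 weight matrix (illustrative values only).
W = [[0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.4, 0.01],
     [0.0, 0.5, -0.6, 0.1, 1.0, -0.2, 0.3, 0.25]]
W_sparse = [prune_n_m(row) for row in W]

A = [[0.1] * 8]       # hypothetical rank-1 down-projection (1 x 8)
B = [[0.5], [-0.5]]   # hypothetical rank-1 up-projection (2 x 1)
y = sparse_plus_low_rank(W_sparse, A, B, [1.0] * 8)
```

In this sketch each group of four weights keeps exactly two nonzeros, which is the pattern that sparse hardware kernels can exploit, while the small dense adapter adds capacity back at low cost.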

Results: SLoPe accelerated training and inference by up to 1.14x and 1.34x respectively for OPT-33B and OPT-66B, with memory usage reduced to as little as 0.77x and 0.51x for training and inference, respectively.

Why?: This research is crucial as it develops a structured mechanism to incentivize agents to report their preferences truthfully during the fine-tuning of large language models, essential for effective and equitable model training.

How?: The paper treats the incentive issue as a multi-parameter mechanism design challenge, where training and payment rules are structured to fulfill specific objectives. It introduces an 'affine maximizer payment scheme' aligned with 'social welfare maximizing training rules', ensuring compliance with dominant-strategy incentive compatibility and individual rationality. It also shows adaptability of this scheme in real-world scenarios where reporting biases exist.
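As a rough illustration of how an affine maximizer payment rule works, the Python sketch below uses a small finite set of candidate outcomes. That is a simplifying assumption: in the paper the outcome is a fine-tuned model. The function name, weights, and boosts are hypothetical. Each agent pays the weighted welfare loss it imposes on everyone else.

```python
def affine_maximizer(values, weights, boosts):
    """values[i][o] is agent i's reported value for outcome o.

    Picks the outcome maximizing boosts[o] + sum_i weights[i] * values[i][o]
    and charges each agent the externality it imposes on the others.
    """
    n_outcomes = len(boosts)

    def welfare(o, skip=None):
        # Affine welfare of outcome o, optionally leaving one agent out.
        return boosts[o] + sum(
            w * v[o]
            for i, (w, v) in enumerate(zip(weights, values))
            if i != skip
        )

    chosen = max(range(n_outcomes), key=welfare)
    payments = []
    for i, w in enumerate(weights):
        best_without_i = max(welfare(o, skip=i) for o in range(n_outcomes))
        payments.append((best_without_i - welfare(chosen, skip=i)) / w)
    return chosen, payments


weights, boosts = [1.0, 1.0], [0.0, 0.0]
true_values = [[3.0, 0.0], [0.0, 2.0]]

# Truthful reports.
chosen, payments = affine_maximizer(true_values, weights, boosts)
truthful_utility = true_values[0][chosen] - payments[0]

# Agent 0 under-reports its value for outcome 0.
misreport = [[1.0, 0.0], true_values[1]]
chosen_lie, payments_lie = affine_maximizer(misreport, weights, boosts)
lying_utility = true_values[0][chosen_lie] - payments_lie[0]
```

In this toy case the truthful report leaves agent 0 with a higher utility than the misreport, and no agent pays more than its reported value, which is the behavior that dominant-strategy incentive compatibility and individual rationality require.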

Why?: This research addresses the significant challenge of using less capable human feedback to train and align more capable LLMs without degrading their performance. It tackles the issue of weak-to-strong generalization, which is crucial for advancing LLM capabilities beyond current human limits.

How?: The approach conceptualizes the problem as a transfer learning challenge, aiming to transfer latent knowledge from weaker models to enhance a stronger, pre-trained model. By identifying issues with simple fine-tuning methods and proposing a refinement-based strategy, the researchers develop a method that effectively incorporates feedback while maintaining the superior capabilities of LLMs. This involves systematic adjustments using a structured refinement process rather than straightforward fine-tuning.

Results: The practical applicability of the refined approach was demonstrated through successful alignment tasks on three LLMs, showing that it can maintain the advanced capabilities of LLMs while effectively utilizing human-level feedback.

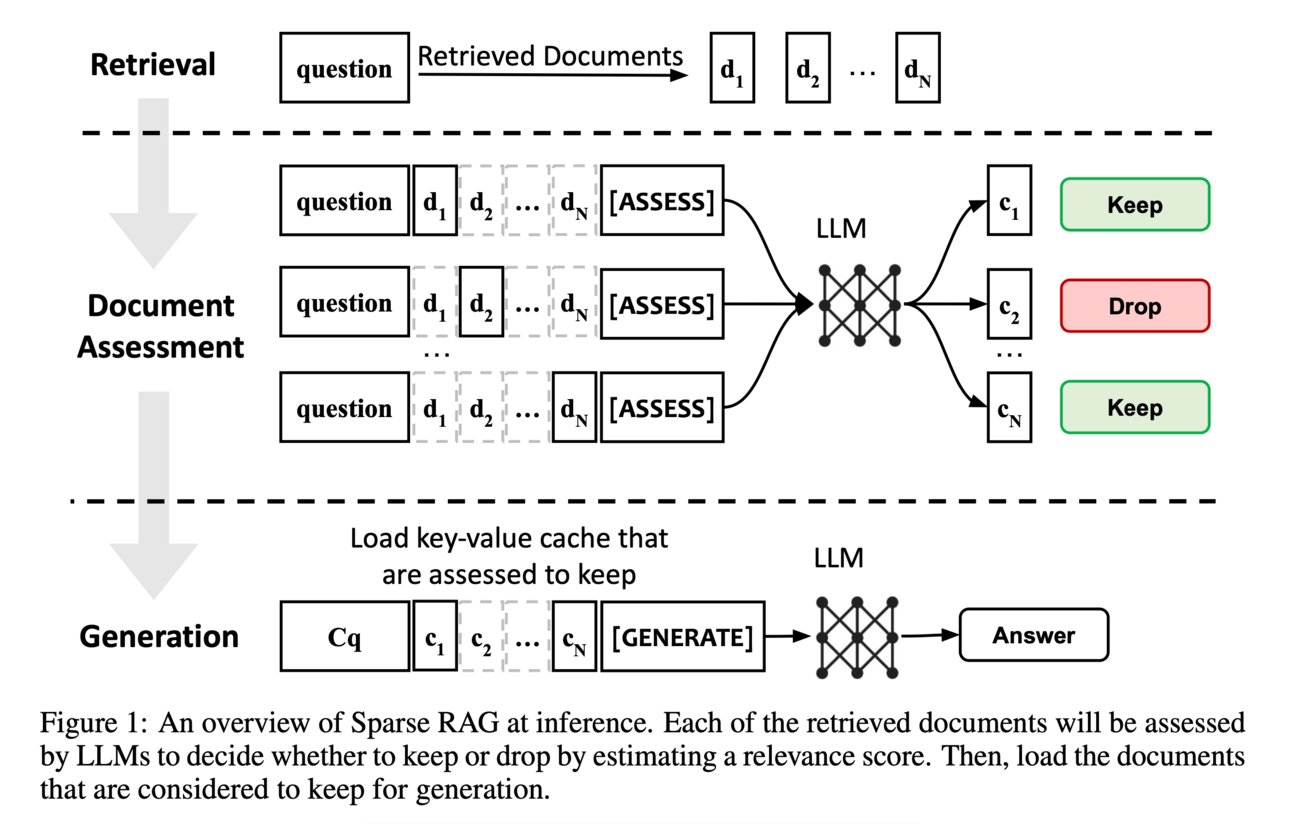

Why?: To enhance the efficiency and response quality of LLMs augmented with retrieval capabilities by reducing latency and computational overhead caused by processing large volumes of retrieved documents.

How?: The proposed Sparse RAG model introduces a mechanism for parallel encoding of retrieved documents to eliminate the latency generated by long-range attention mechanisms. It further employs a selection strategy where LLMs use special control tokens to identify and focus only on the most relevant documents during the auto-regressive generation phase. This not only curtails the amount of data processed but also helps in generating more relevant and focused responses.

Results: Evaluation on two datasets demonstrated that Sparse RAG achieves an optimal balance between generation quality and computational efficiency, suggesting its effectiveness and generalizability across different types of generation tasks.

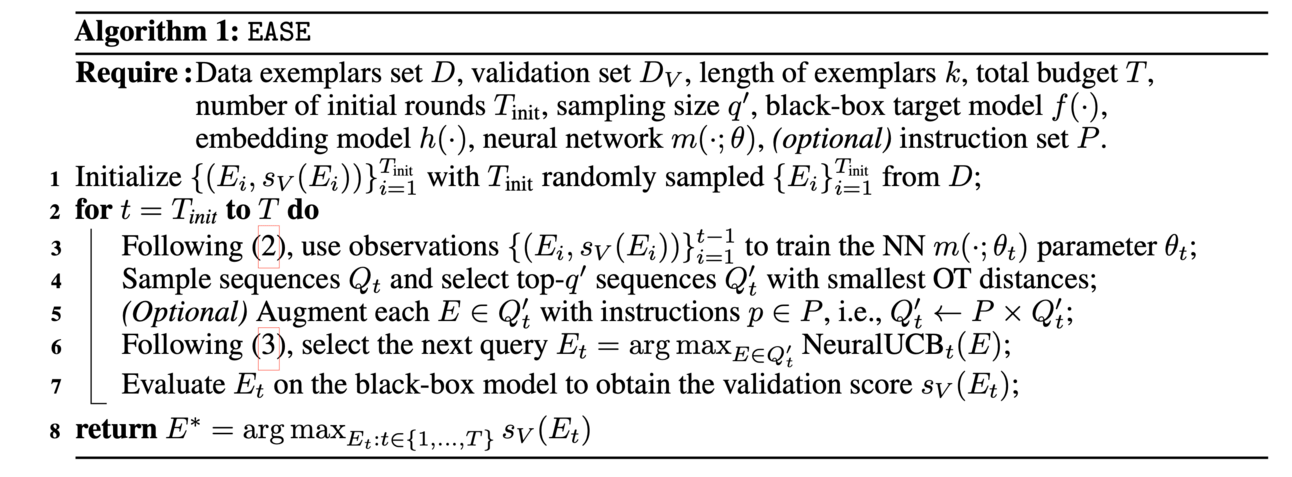

Why?: The research addresses the need for effective prompt optimization in LLMs, focusing on the automated, efficient selection and ordering of exemplars to enhance in-context learning without the need for fine-tuning or increasing computational demands at test-time.

How?: The study introduces a novel method named EASE that uses hidden embeddings from a pre-trained language model to represent ordered sets of exemplars. EASE employs a neural bandit algorithm to dynamically optimize the selection and sequencing of exemplars based on their potential impact on task performance. It considers the often overlooked influence of exemplar order within the prompt and enables efficient selection of optimal exemplar sets for all test instances of a task, thus eliminating extra computational needs during test-time. Additionally, the method is designed to concurrently optimize both the exemplars and the instructions in the prompt.

Results: Through extensive empirical evaluations (including on novel tasks), EASE demonstrated superior performance over existing methods, providing insights into the impact of exemplar selection on in-context learning effectiveness.

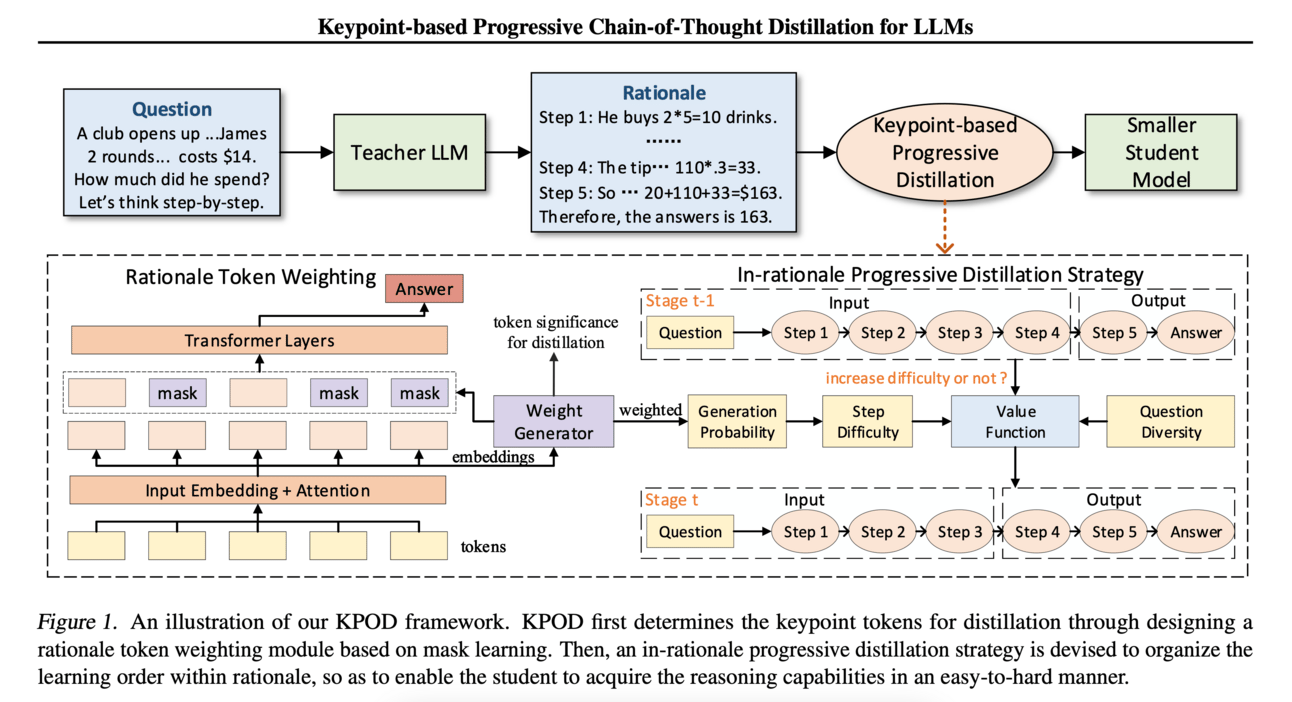

Why?: The research addresses two shortcomings of traditional chain-of-thought distillation for LLMs: student models struggle to mimic the critical reasoning steps accurately, and the learning order does not follow the easy-to-hard progression typical of human learning.

How?: The study introduces a novel framework named KPOD which focuses on two main enhancements. First, a token weighting module is developed, utilizing mask learning to prioritize and accurately replicate keystone tokens during distillation. This component helps overcome the challenge where tokens of varying importance are treated equally, which can lead to errors in logical reasoning in the student models. Second, the research proposes an 'in-rationale progressive distillation' strategy. This strategy involves initially training the smaller, student model to generate the simpler, final steps of a rationale. As training progresses, the scope expands to gradually include the entire set of reasoning steps. This mimics cognitive development by starting with easier tasks before advancing to more complex problems. To effectively implement this strategy, a weighted token generation loss is introduced to gauge the difficulty of each step in the rationale. Additionally, a value function is created to dynamically schedule the distillation process, taking into account both the difficulty of reasoning steps and the diversity of questions. The approach, unlike previous methods, aligns more closely with natural learning progressions and is designed to enhance the transfer of reasoning abilities more effectively.
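The two ingredients above can be sketched in a few lines of Python. This is a simplified illustration, not the paper's implementation: the token weights are supplied by hand rather than learned through masks, and a fixed linear schedule stands in for the value function that KPOD uses to schedule distillation. Both function names are hypothetical.

```python
import math


def weighted_token_nll(token_probs, weights):
    """Weighted average negative log-likelihood over rationale tokens.

    token_probs[t] is the student's probability of the correct token t;
    larger weights make mistakes on keystone tokens cost more.
    """
    total = sum(w * -math.log(p) for p, w in zip(token_probs, weights))
    return total / sum(weights)


def progressive_targets(steps, stage, num_stages):
    """Return the suffix of reasoning steps the student generates at this stage.

    Early stages cover only the final steps; the last stage covers all of them.
    """
    n = math.ceil(len(steps) * stage / num_stages)
    return steps[len(steps) - n:]


probs = [0.9, 0.1]                                   # student is wrong on token 2
uniform = weighted_token_nll(probs, [1.0, 1.0])
keystone = weighted_token_nll(probs, [1.0, 3.0])     # token 2 marked as keystone

steps = ["step 1", "step 2", "step 3"]
early = progressive_targets(steps, stage=1, num_stages=3)
late = progressive_targets(steps, stage=3, num_stages=3)
```

Here the keystone weighting raises the loss when the student misses the important token, and the schedule grows the target from the last step to the full rationale.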

Results: Extensive experiments on four reasoning benchmarks illustrate that KPOD outperforms previous methods by a large margin.

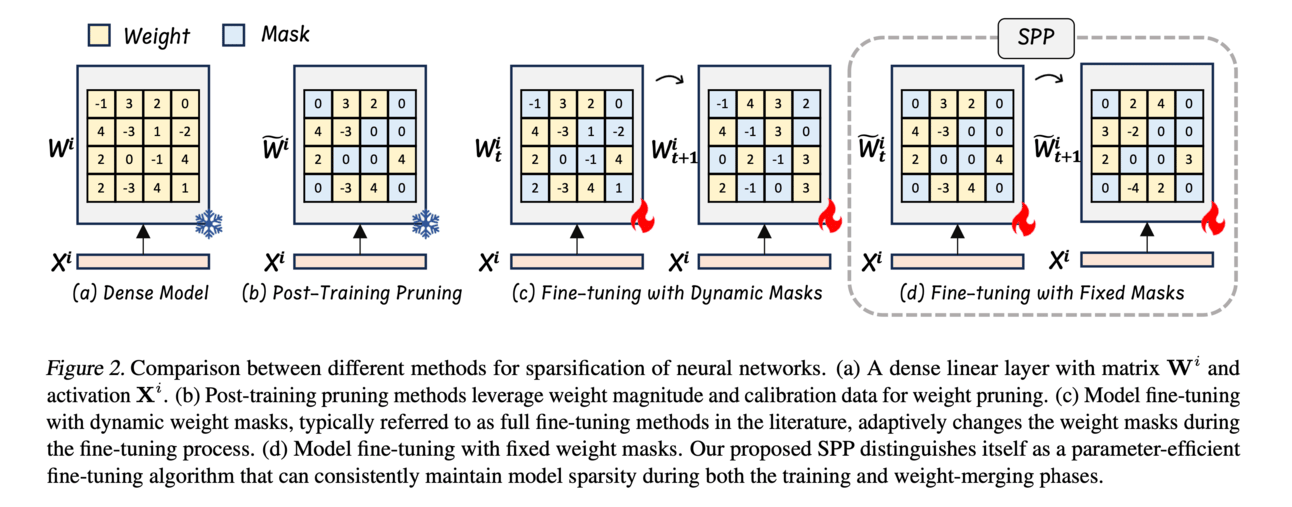

Why?: This research is important because it tackles the challenge of efficiently fine-tuning and deploying large language models (LLMs) that have been reduced in size through pruning without sacrificing their performance.

How?: The paper introduces SPP, a Sparsity-Preserved Parameter-efficient fine-tuning method that uses lightweight learnable column and row matrices. This method optimizes sparse LLM weights by maintaining the structural and sparsity integrity of pruned models through element-wise multiplication and residual addition. This allows the models to retain their sparsity patterns and ratios throughout training and merging processes. The effectiveness of SPP was demonstrated on the LLaMA and LLaMA-2 model families using various sparsity patterns (unstructured and N:M), particularly focusing on high sparsity ratios like 75%.
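A small Python sketch shows why element-wise multiplication plus residual addition keeps a pruned weight matrix sparse. It assumes one learnable scalar per row and per column, which is a simplification of the paper's lightweight matrices, and the names `spp_merge` and `sparsity_mask` are hypothetical.

```python
def spp_merge(W, col, row):
    """Merged weights: W + W * (col[i] * row[j]), applied element-wise.

    Because the update is a multiple of W itself, a zero weight stays zero.
    """
    return [
        [w + w * col[i] * row[j] for j, w in enumerate(r)]
        for i, r in enumerate(W)
    ]


def sparsity_mask(W):
    """True where a weight is nonzero."""
    return [[w != 0.0 for w in r] for r in W]


# A 2 x 4 matrix that has already been pruned (illustrative values).
W_pruned = [[0.0, 0.8, 0.0, -0.3],
            [0.5, 0.0, 0.0, 1.2]]
col = [0.1, -0.2]            # hypothetical learned per-row scales
row = [0.5, 1.0, -1.0, 2.0]  # hypothetical learned per-column scales

W_merged = spp_merge(W_pruned, col, row)
same_pattern = sparsity_mask(W_merged) == sparsity_mask(W_pruned)
```

The sparsity pattern and ratio are unchanged after merging, so the fine-tuned model can still use the same sparse kernels as the pruned one.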

Results: The application of SPP significantly improved the models' performance across different sparsity patterns, especially in models with higher sparsity ratios, proving it as a viable solution for efficient fine-tuning of sparse LLMs.

LLM evaluations

Why?: The study addresses a significant gap by methodically evaluating the performance of LLMs specifically for the summarization of medical text data, an area that is under-researched but critical for enhancing digital health applications.

How?: The research involves the use of open-source LLMs, such as Llama2 and Mistral, to perform summarization tasks on medical text data. GPT-4 is utilized as an assessor to evaluate the summaries generated by these models. The approach includes both quantitative metrics and qualitative assessments by subject-matter experts to ensure a thorough evaluation of performance.

Survey papers

Let’s make LLMs safe!!

Why?: Improves the reliability and efficiency of LLMs

How?: The method incorporates three phases of introspective interventions:

Anticipatory reflection to assess potential failures and solutions before actions,

Post-action alignment to evaluate and adjust ongoing actions according to task objectives, and

A comprehensive review post-completion to refine future strategies.

This innovative approach was implemented in a zero-shot setup on a platform called WebArena, dedicated to simulating practical tasks in web environments.

Results: The experiments demonstrated that this introspective-driven methodology significantly enhances the performance of LLMs by reducing the number of trials and necessary revisions, enabling more effective navigation through unexpected challenges and improving the overall efficiency in completing tasks compared to existing zero-shot methods.

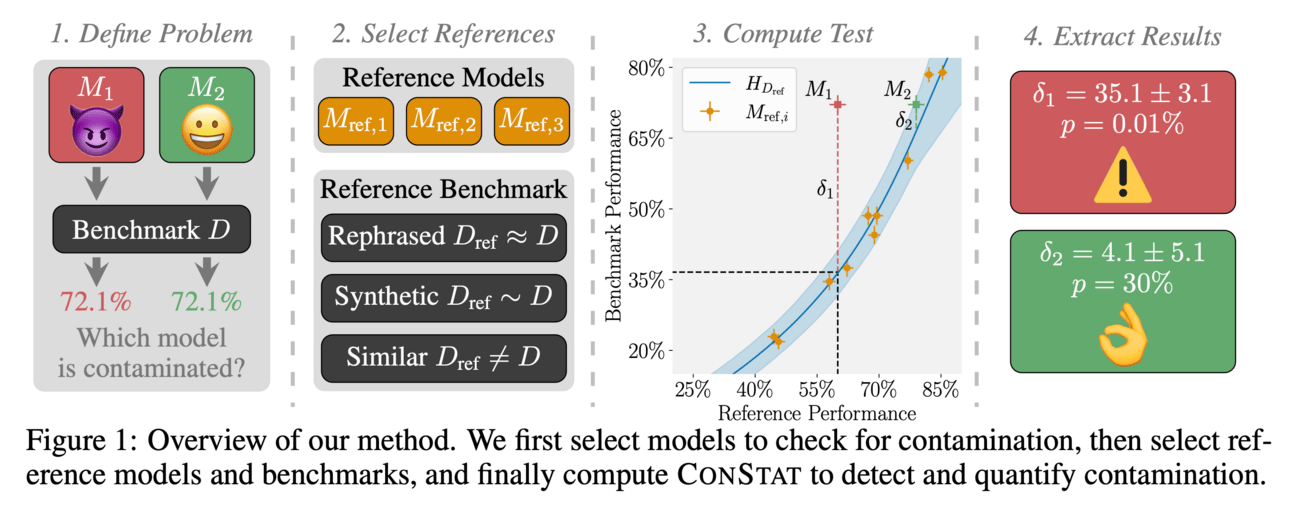

Why?: The paper addresses the critical issue of data contamination in benchmarking LLMs, which can lead to misleading performance metrics and unreliable model comparisons.

How?: The study proposes a new definition of contamination: artificially inflated performance that does not generalize to rephrased samples, synthetic samples, or other benchmarks for the same task. The authors developed 'ConStat', a statistical method that detects and quantifies contamination by comparing a model's performance on a primary benchmark and on a reference benchmark, relative to a set of reference models.
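The core comparison can be sketched as follows. This is a deliberately simplified stand-in, not ConStat itself: it fits a straight line through the reference models' scores and reports how far the model under test sits above that line, with no significance test. All scores are made up and the function names are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least-squares line y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def contamination_gap(ref_scores, bench_scores, model_ref, model_bench):
    """Actual benchmark score minus the score expected from the reference benchmark."""
    slope, intercept = fit_line(ref_scores, bench_scores)
    expected = slope * model_ref + intercept
    return model_bench - expected


# Accuracy of four reference models on the reference and primary benchmarks.
ref_scores = [0.2, 0.4, 0.6, 0.8]
bench_scores = [0.25, 0.45, 0.65, 0.85]

# The model under test does far better on the primary benchmark than expected.
gap = contamination_gap(ref_scores, bench_scores, model_ref=0.5, model_bench=0.80)
```

A large positive gap suggests that the benchmark score is inflated relative to what the model's reference-benchmark performance would predict.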

Results: ConStat successfully detected significant levels of contamination in several well-known models, thereby demonstrating its effectiveness in identifying and quantifying performance inflation in LLM benchmarks.

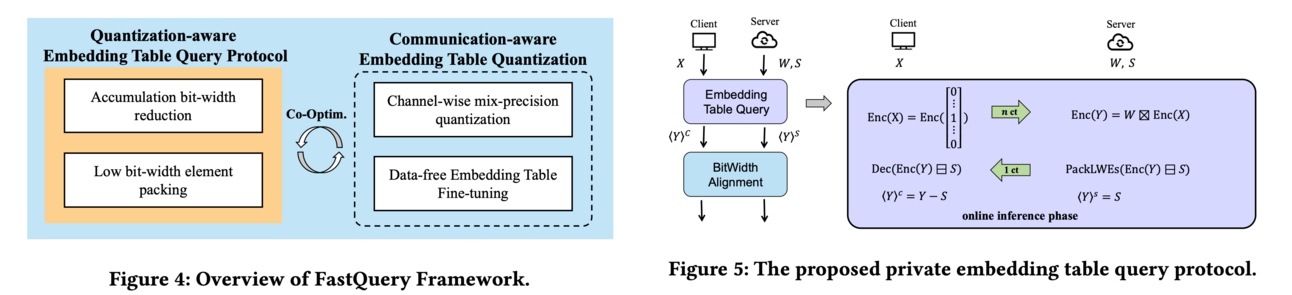

Why?: The research addresses the privacy issues associated with user queries in LLMs by focusing on the computational and communication inefficiencies of using homomorphic encryption for private embedding table queries.

How?: The researchers developed FastQuery, a framework designed to optimize private embedding table queries for LLMs. FastQuery employs a communication-aware embedding table quantization algorithm and a one-hot-aware dense packing algorithm to reduce both the computation and communication costs.
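For intuition, the Python sketch below shows the plaintext analogue of a private embedding lookup: the query is a one-hot vector, so the lookup is a matrix product, and quantizing the table shrinks the values that would have to be processed. No encryption is performed here, the symmetric quantizer is a generic choice rather than FastQuery's algorithm, and the names are hypothetical.

```python
def one_hot(index, size):
    """One-hot query vector selecting row `index`."""
    return [1 if i == index else 0 for i in range(size)]


def lookup(query, table):
    """Embedding lookup written as a vector-matrix product."""
    dim = len(table[0])
    return [sum(q * row[d] for q, row in zip(query, table)) for d in range(dim)]


def quantize(table, bits):
    """Symmetric uniform quantization to signed integers with `bits` bits."""
    levels = 2 ** (bits - 1) - 1
    scale = max(abs(v) for row in table for v in row) / levels
    return [[round(v / scale) for v in row] for row in table], scale


table = [[0.4, -1.0],
         [0.2, 0.8],
         [-0.6, 0.0]]
q_table, scale = quantize(table, bits=4)

# Under HE the query vector would be encrypted; here it stays in plaintext.
row = lookup(one_hot(1, len(q_table)), q_table)
approx = [v * scale for v in row]  # dequantized embedding
```

Because almost every entry of the query is zero, most of the work in the product is wasted, which is the kind of structure a one-hot-aware packing scheme can exploit.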

Results: FastQuery achieves more than 4.3x, 2.7x, and 1.3x latency reduction, and more than 75.7x, 60.2x, and 20.2x communication reduction, compared to existing HE-based frameworks on both LLAMA-7B and LLAMA-30B models.

Why?: Understanding how attacks compromise LLM safety alignment is crucial for improving defenses against malicious manipulation.

How?: The research breaks down the safeguarding process into three stages: recognizing harmful instructions, initiating a refusing response, and completing the refusal. Techniques such as logit lens and activation patching are employed to analyze the influence of different attack strategies on these stages. The logit lens observes the model's prediction confidence over candidate outputs at intermediate layers, while activation patching modifies selected activations to observe changes in behavior. Moreover, cross-model probing is used to examine shifts in model representations before and after attacks. Two primary attack types were investigated: Explicit Harmful Attack (EHA) and Identity-Shifting Attack (ISA). Analyzing how these attacks affect each safeguarding stage provided insights into the different ways they compromise model safety.
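For readers unfamiliar with the logit lens, here is a toy Python sketch. It projects each layer's hidden state through the output (unembedding) matrix and reads off the most likely token. The two-word vocabulary, the matrices, and the hidden states are invented for illustration, and the final layer normalization that real models apply is omitted.

```python
import math


def softmax(logits):
    """Numerically stable softmax."""
    peak = max(logits)
    exps = [math.exp(l - peak) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]


def logit_lens(hidden_states, unembed, vocab):
    """For each layer's hidden state, return (top token, its probability)."""
    readout = []
    for h in hidden_states:
        logits = [sum(w * x for w, x in zip(row, h)) for row in unembed]
        probs = softmax(logits)
        best = max(range(len(vocab)), key=probs.__getitem__)
        readout.append((vocab[best], probs[best]))
    return readout


vocab = ["Sure", "Sorry"]
unembed = [[1.0, 0.0],   # row for "Sure"
           [0.0, 1.0]]   # row for "Sorry"

# Hypothetical hidden states at three successive layers for one prompt.
hidden_states = [[1.0, 0.2], [0.4, 0.6], [0.0, 2.0]]
readout = logit_lens(hidden_states, unembed, vocab)
tokens = [tok for tok, _ in readout]
```

In this toy trace the prediction moves from a compliant opening to a refusal as depth increases, which is the kind of layer-by-layer picture the analysis relies on.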

Why?: Detection of code generated by LLMs

How?: The research introduces a novel approach to detecting LLM-generated code using a zero-shot mechanism based on code rewriting. The hypothesis is that synthetic code differs less from its rewritten variants than human-written code does. The authors train a code similarity model with self-supervised contrastive learning on code samples and their rewritten forms. Under the hypothesis, a snippet that stays highly similar to its rewrites is likely synthetic, while one whose rewrites diverge is likely human-written. To generate the rewritten versions, the researchers used another LLM trained specifically to alter code while maintaining functional equivalence. The model's efficacy was assessed on two synthetic code detection benchmarks: APPS and MBPP.
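The decision rule implied by the rewriting hypothesis can be sketched in Python. Note the assumptions: `difflib.SequenceMatcher` is a stand-in for the learned similarity model, the rewrites are passed in by the caller instead of being produced by an LLM, and the 0.8 threshold is arbitrary.

```python
from difflib import SequenceMatcher


def rewrite_similarity(code, rewrites):
    """Average character-level similarity between a snippet and its rewrites."""
    ratios = [SequenceMatcher(None, code, r).ratio() for r in rewrites]
    return sum(ratios) / len(ratios)


def looks_synthetic(code, rewrites, threshold=0.8):
    """Flag code as likely LLM-generated when rewrites barely change it."""
    return rewrite_similarity(code, rewrites) >= threshold


snippet = "def add(a, b):\n    return a + b\n"

# Rewrites that an LLM might return (supplied by hand in this sketch).
stable_rewrites = [snippet, "def add(a, b):\n    return b + a\n"]
divergent_rewrites = ["import operator\nadd = operator.add\n"]

stable_score = rewrite_similarity(snippet, stable_rewrites)
divergent_score = rewrite_similarity(snippet, divergent_rewrites)
```

A snippet whose rewrites stay close to the original gets a high score, and one whose rewrites drift gets a low score. In practice a learned code similarity model would replace the string matcher.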

Creative ways to use LLMs!!

The proposed system integrates LLMs with traditional recommendation techniques using a hierarchical approach. At the high level, 'interest clusters' are defined with controllable granularity. These clusters use language descriptions to encapsulate various user interests. A fine-tuned LLM interprets these clusters to generate descriptions of potentially novel interests, which then guide the recommendation process at the lower level. There, traditional machine learning models, such as a transformer-based recommender system, are constrained to suggest only items that align with the newly identified interest clusters.

Why?: Generating human motions

How?: The M3GPT framework integrates multiple modalities (text, music, and motion/dance) using discrete vector quantization. This technique lets the model handle different input types within a single vocabulary, facilitating smoother and more efficient processing. Motion generation is modeled directly in the raw motion space, which avoids the information loss associated with a discrete tokenizer and yields more detailed motion dynamics. A key aspect of the framework is its multitask capacity, where text is used as a connecting thread between different motion tasks. This not only simplifies the modeling process but also enhances the model’s learning efficiency through cross-modal reinforcement. The framework was trained and tested on several datasets, involving complex tasks such as zero-shot motion generation, to demonstrate its robustness and versatility.

GeneAgent is a novel LLM-based agent designed to reduce hallucinations in gene set knowledge discovery. It incorporates a self-verification mechanism that lets it autonomously interact with various biological databases to verify its claims and incorporate relevant data into its outputs.

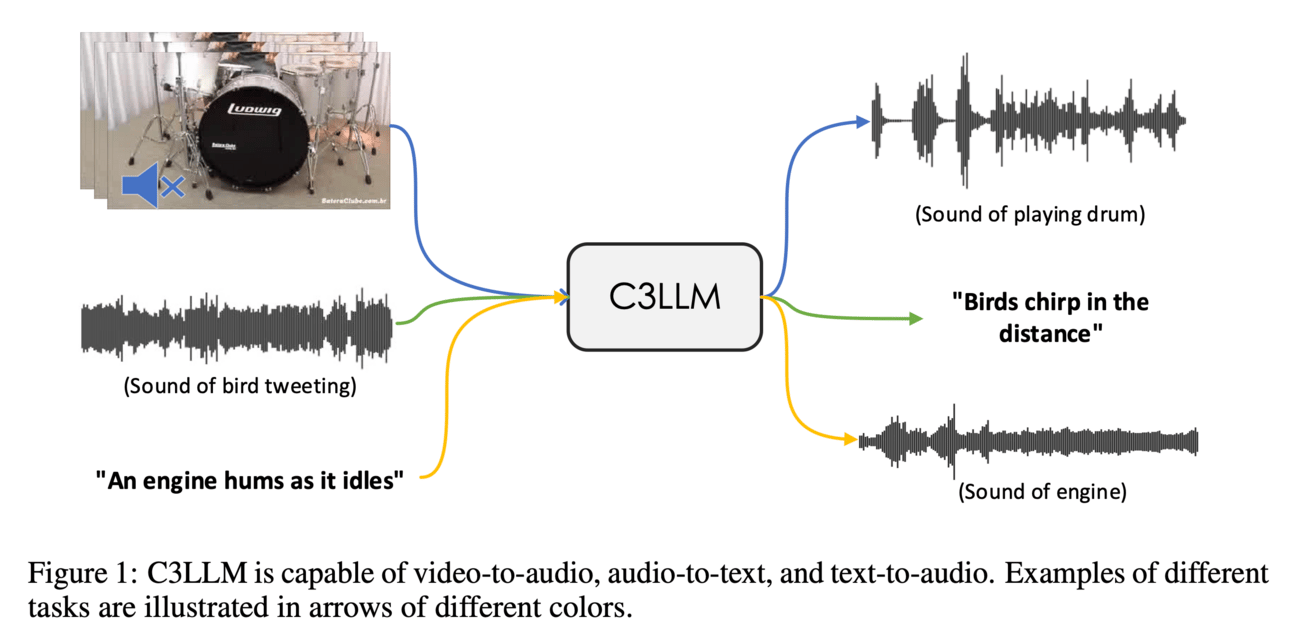

C3LLM integrates three different tasks (video-to-audio, audio-to-text, text-to-audio) with LLMs.

Why?: Recommendation system

How?: The research introduced Direct Multi-Preference Optimization (DMPO), a method designed to make LLMs more suitable for recommendation tasks by addressing the differences in data nature between LLM pre-training and recommendation demands. DMPO functions by optimizing LLMs to increase the likelihood of selecting relevant (positive) items while reducing the chances of picking irrelevant (negative) ones. The researchers fine-tuned an existing LLM using DMPO by simultaneously maximizing the probability of positive sample outcomes and minimizing the probabilities of various negative samples.
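One way to write such an objective is a DPO-style loss in which the single rejected response is replaced by an average over several negative items. The Python sketch below follows that reading. The exact form used in the paper may differ, and the function name, the log-probabilities, and `beta` are illustrative assumptions.

```python
import math


def dmpo_loss(pos_logp, pos_ref_logp, neg_logps, neg_ref_logps, beta=0.1):
    """-log sigmoid(beta * (positive margin - mean negative margin)).

    Each margin is log pi(item) - log pi_ref(item), i.e. how much the
    fine-tuned policy has moved relative to the frozen reference model.
    """
    pos_margin = pos_logp - pos_ref_logp
    neg_margin = sum(p - r for p, r in zip(neg_logps, neg_ref_logps)) / len(neg_logps)
    z = beta * (pos_margin - neg_margin)
    return math.log1p(math.exp(-z))  # equals -log(sigmoid(z))


# Policy identical to the reference: no preference has been learned yet.
start = dmpo_loss(-1.0, -1.0, [-2.0, -3.0], [-2.0, -3.0])

# Policy raised the positive item's likelihood and lowered both negatives.
improved = dmpo_loss(-0.5, -1.0, [-3.0, -4.0], [-2.0, -3.0])
```

The loss starts at log 2 and falls as the positive item becomes more likely and the negatives less likely, relative to the reference model.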

Why?: Integrating external tools with LLMs

How?: The COLT model involves a two-stage process: initial PLM-based models are fine-tuned for semantic understanding between user queries and tools, followed by a dual-view graph collaborative learning framework that constructs bipartite graphs linking queries, scenes, and tools for capturing diverse tool relationships and reducing redundancy.

Why?: Improving semantic communications between agents and humans or agents and agents by enhancing understanding and minimizing semantic loss in transmitting visual data.

How?: The paper introduces a method for understanding-level semantic communications (ULSC), employing an image caption neural network (ICNN) to convert visual data into natural language descriptions, which are then refined using a pre-trained large language model (LLM) for semantic importance quantification and error correction. It uses adaptive strategies to minimize semantic loss while managing transmission delays, and it also uses an LLM at the receiving end for further error correction.

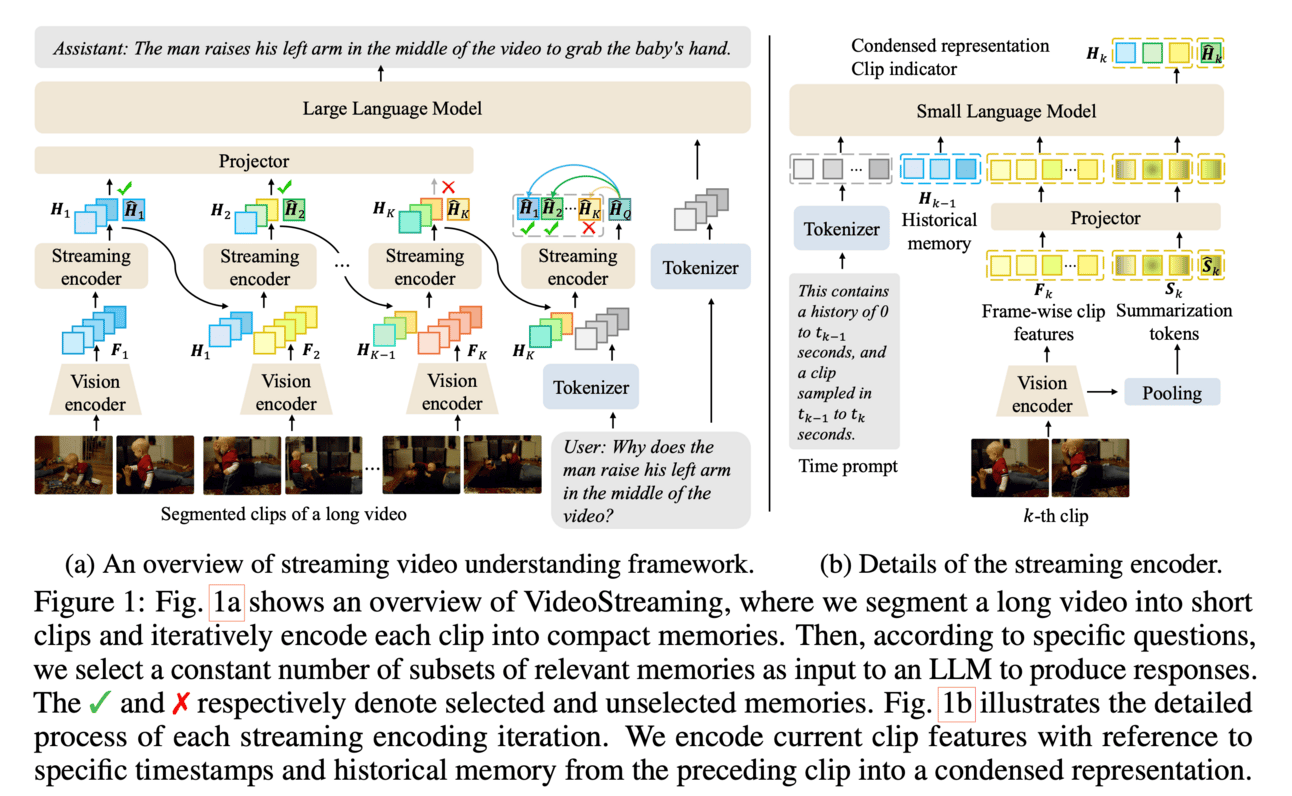

Why?: Efficient and precise long video understanding is crucial for enhancing multimedia content accessibility and interaction but is challenged by high computational costs and data redundancy.

How?: The proposed VideoStreaming system addresses long video understanding through two main techniques: Memory-Propagated Streaming Encoding and Adaptive Memory Selection. Initially, long videos are segmented into manageable short clips. Each clip is then sequentially encoded while integrating a propagated memory from previously processed clips, which helps in retaining historical content relevance and reducing redundancy. This encoding process continues clip-by-clip, cumulatively updating the memory that captures essential video content elements. After encoding, the Adaptive Memory Selection mechanism comes into play, which dynamically selects a fixed number of relevant memories based on the specific questions posed about the video. This allows the system to focus only on pertinent information, thereby optimizing processing time and memory usage while ensuring that the answers generated by the LLM are contextually appropriate and detailed. This disentangled approach of video extraction and memory utilization enables the LLM to generate responses without reprocessing the entire video, allowing for efficient querying and reduced computational demands.
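The two stages can be illustrated with a toy Python sketch. The assumptions are heavy: clips and questions are small hand-written feature vectors, an exponential moving average stands in for the learned streaming encoder, and dot-product scoring stands in for the learned selection mechanism. Both function names are hypothetical.

```python
def encode_clips(clips, decay=0.5):
    """Encode clips in order, carrying a memory forward from clip to clip."""
    memories = []
    carry = [0.0] * len(clips[0])
    for clip in clips:
        # Blend the propagated memory with the current clip's features.
        carry = [decay * c + (1 - decay) * x for c, x in zip(carry, clip)]
        memories.append(carry)
    return memories


def select_memories(memories, question, k):
    """Pick the k memories most relevant to the question, in temporal order."""
    scores = [sum(m * q for m, q in zip(mem, question)) for mem in memories]
    top = sorted(range(len(memories)), key=lambda i: scores[i], reverse=True)[:k]
    return sorted(top)


# Four clips with 2-dimensional features (illustrative values).
clips = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0], [1.0, 0.0]]
memories = encode_clips(clips)

# A question about the content captured by the first feature dimension.
selected = select_memories(memories, question=[1.0, 0.0], k=2)
```

Encoding happens once per video, while selection is repeated per question, so new questions can be answered without re-encoding the clips.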