Core research improving LLMs!

Why?: The paper improves query reformulation for retrieval.

How?: The authors propose two ensemble-based prompting techniques, GenQREnsemble and GenQRFusion, which generate multiple sets of keywords to enhance retrieval performance. They also introduce post-retrieval variants that incorporate relevance feedback from various sources, including an oracle simulating a human user and a 'critic' LLM. The research assesses the impact of ensemble query reformulations, feedback documents, domain-specific instructions, filtered reformulations, and fluent reformulations.

Results: An ensemble of query reformulations improved retrieval effectiveness by up to 18% on nDCG@10 in pre-retrieval settings and 9% in post-retrieval settings on multiple benchmarks, outperforming all previously reported state-of-the-art results.
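The ensemble idea can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: `fake_llm` is a hypothetical stand-in for a real LLM call, the instruction paraphrases are invented for the example, and de-duplicating keywords before appending them to the query is an assumption of this sketch.

```python
from typing import Callable, List

# Hypothetical paraphrased instructions; the paper's actual prompts are not reproduced here.
INSTRUCTIONS = [
    "Suggest expansion terms that improve search effectiveness for the query",
    "Recommend expansion terms for the query to improve search results",
    "List useful keywords that would help retrieve documents for the query",
]


def fake_llm(instruction: str, query: str) -> List[str]:
    """Stand-in for an LLM call: returns canned keywords per instruction."""
    canned = {
        INSTRUCTIONS[0]: ["neural", "ranking"],
        INSTRUCTIONS[1]: ["ranking", "retrieval"],
        INSTRUCTIONS[2]: ["search", "neural"],
    }
    return canned[instruction]


def ensemble_reformulate(
    query: str,
    instructions: List[str],
    generate_keywords: Callable[[str, str], List[str]],
) -> str:
    """Prompt once per instruction and append the merged keyword sets to the query."""
    seen = set(query.lower().split())
    expansion = []
    for instruction in instructions:
        for keyword in generate_keywords(instruction, query):
            if keyword.lower() not in seen:  # assumption: drop duplicate keywords
                seen.add(keyword.lower())
                expansion.append(keyword)
    return " ".join([query] + expansion)


reformulated = ensemble_reformulate("dense passage models", INSTRUCTIONS, fake_llm)
print(reformulated)  # dense passage models neural ranking retrieval search
```

The reformulated string would then be passed to the retriever in place of the original query.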

Why?: Large Multimodal Models (LMMs) can face inefficiency in handling dense visual scenarios like high-resolution images and videos. The existing token pruning/merging methods lack the flexibility to adjust information density versus efficiency. Therefore, there is a need to develop a more flexible model for representing visual content in LMMs.

How?: The Matryoshka Multimodal Models (M3) proposed in this research learn to represent visual content as nested sets of visual tokens across multiple coarse-to-fine granularities. This allows explicit control over visual granularity per instance during inference, enabling adjustment of the number of tokens based on content complexity. The study analyzes the granularity required for existing datasets and explores the trade-off between performance and visual token length at the sample level.
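A rough sketch of what "nested sets of visual tokens" could look like. It assumes the coarser levels come from 2x2 average pooling of a square token grid whose side is a power of two. That pooling choice and the helper names are assumptions made for illustration, not details taken from the summary above.

```python
from typing import List

Grid = List[List[List[float]]]  # [rows][cols][feature_dim]


def pool2x2(grid: Grid) -> Grid:
    """Average each non-overlapping 2x2 patch of tokens into one coarser token."""
    rows, cols, dim = len(grid), len(grid[0]), len(grid[0][0])
    pooled = []
    for r in range(0, rows, 2):
        pooled_row = []
        for c in range(0, cols, 2):
            patch = [grid[r + dr][c + dc] for dr in (0, 1) for dc in (0, 1)]
            pooled_row.append([sum(tok[i] for tok in patch) / 4.0 for i in range(dim)])
        pooled.append(pooled_row)
    return pooled


def nested_token_sets(grid: Grid) -> List[Grid]:
    """Return token grids from finest to coarsest (side lengths n, n/2, ..., 1)."""
    levels = [grid]
    while len(levels[-1]) > 1:
        levels.append(pool2x2(levels[-1]))
    return levels


# Toy 4x4 grid of 1-dimensional "visual tokens" with values 0..15.
fine = [[[float(r * 4 + c)] for c in range(4)] for r in range(4)]
levels = nested_token_sets(fine)
token_counts = [len(g) * len(g[0]) for g in levels]
print(token_counts)  # [16, 4, 1]
```

At inference time, a caller would pick one of these levels per image, trading detail for a shorter visual token sequence.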

Why?: The research is important because it introduces the NV-Embed model with enhanced techniques for training LLMs to serve as versatile embedding models, outperforming existing models in text embedding tasks.

How?: The research introduces the NV-Embed model with a latent attention layer for pooled embeddings, removes the causal attention mask of LLMs during contrastive training, and implements a two-stage contrastive instruction-tuning method, utilizing curated hard negatives and a blend of retrieval and non-retrieval datasets for training.

Why?: Efficient language modeling with constant speed for various sequence lengths is crucial for improving model training and deployment.

How?: The research introduces Lightning Attention, which splits attention calculation into intra-blocks and inter-blocks using different strategies. Intra-blocks use conventional attention computation, while inter-blocks utilize linear attention kernel tricks to eliminate the need for cumsum. Moreover, a tiling technique is applied during forward and backward procedures to maximize GPU hardware utilization. A new architecture called TransNormerLLM (TNL) is also introduced to enhance accuracy. Rigorous testing on diverse datasets with different model sizes and sequence lengths is conducted.
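The intra-block/inter-block split can be illustrated with a small pure-Python sketch. It assumes unnormalized causal linear attention, o_t = sum over s <= t of (q_t . k_s) v_s, and leaves out decay terms, normalization, and GPU tiling. It shows the general block decomposition, not the paper's kernel.

```python
from typing import List

Matrix = List[List[float]]


def naive_causal_linear_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Reference: o_t = sum_{s <= t} (q_t . k_s) * v_s, quadratic in sequence length."""
    d_v = len(v[0])
    out = []
    for t in range(len(q)):
        row = [0.0] * d_v
        for s in range(t + 1):
            score = sum(a * b for a, b in zip(q[t], k[s]))
            for j in range(d_v):
                row[j] += score * v[s][j]
        out.append(row)
    return out


def blockwise_causal_linear_attention(q: Matrix, k: Matrix, v: Matrix, block: int) -> Matrix:
    """Same result, computed block by block with a running K^T V state."""
    n, d_k, d_v = len(q), len(q[0]), len(v[0])
    kv = [[0.0] * d_v for _ in range(d_k)]  # sum of k_s^T v_s over all previous blocks
    out = []
    for start in range(0, n, block):
        end = min(start + block, n)
        for t in range(start, end):
            # Inter-block part: one product with the accumulated state.
            row = [sum(q[t][i] * kv[i][j] for i in range(d_k)) for j in range(d_v)]
            # Intra-block part: conventional masked attention inside the block.
            for s in range(start, t + 1):
                score = sum(a * b for a, b in zip(q[t], k[s]))
                for j in range(d_v):
                    row[j] += score * v[s][j]
            out.append(row)
        # Fold the finished block into the state for later blocks.
        for s in range(start, end):
            for i in range(d_k):
                for j in range(d_v):
                    kv[i][j] += k[s][i] * v[s][j]
    return out


q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 1.0], [0.0, 2.0]]
k = [[1.0, 1.0], [0.0, 1.0], [1.0, 0.0], [1.0, 2.0], [2.0, 0.0]]
v = [[1.0], [2.0], [3.0], [4.0], [5.0]]
reference = naive_causal_linear_attention(q, k, v)
blocked = blockwise_causal_linear_attention(q, k, v, block=2)
max_diff = max(abs(a[0] - b[0]) for a, b in zip(reference, blocked))
print(max_diff)
```

Because the state `kv` has a fixed size, the cost per block does not grow with the number of earlier tokens, which is the property behind the constant-speed claim.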

Why?: Parameter quantization for LLMs is essential to reduce memory costs and enhance computational efficiency.

How?: The research introduces a Column-Level Adaptive weight Quantization (CLAQ) framework that utilizes K-Means clustering, an outlier-guided adaptive precision search strategy, and a dynamic outlier reservation scheme for LLM quantization. These strategies enable dynamic generation of quantization centroids, assign varying bit-widths to different columns, and retain some parameters in their original floating-point precision. Experiments were conducted on various mainstream open-source LLMs to validate the effectiveness of the proposed methods.
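A toy sketch of two of these ingredients on a single weight column: K-Means centroids as quantization levels, and a few outliers kept in full precision. The quantile initialization, the largest-magnitude outlier rule, and the function names are assumptions for illustration. The adaptive search that chooses `bits` per column is not shown.

```python
from typing import List, Tuple


def kmeans_1d(values: List[float], k: int, iters: int = 20) -> List[float]:
    """Plain Lloyd's algorithm in 1-D, initialized at evenly spaced quantiles."""
    ordered = sorted(values)
    centroids = [ordered[(2 * i + 1) * len(ordered) // (2 * k)] for i in range(k)]
    for _ in range(iters):
        buckets: List[List[float]] = [[] for _ in range(k)]
        for x in values:
            nearest = min(range(k), key=lambda c: abs(x - centroids[c]))
            buckets[nearest].append(x)
        # Keep the old centroid if a bucket ends up empty.
        centroids = [sum(b) / len(b) if b else centroids[i] for i, b in enumerate(buckets)]
    return centroids


def quantize_column(column: List[float], bits: int, n_outliers: int) -> Tuple[List[float], List[float]]:
    """Keep the n_outliers largest-magnitude weights as-is; snap the rest to 2**bits centroids."""
    by_magnitude = sorted(range(len(column)), key=lambda i: abs(column[i]), reverse=True)
    outliers = set(by_magnitude[:n_outliers])
    inliers = [w for i, w in enumerate(column) if i not in outliers]
    centroids = kmeans_1d(inliers, 2 ** bits)
    quantized = [
        w if i in outliers else min(centroids, key=lambda c: abs(w - c))
        for i, w in enumerate(column)
    ]
    return quantized, centroids


column = [-0.52, -0.48, 0.01, -0.01, 0.49, 0.51, 3.0, 0.02]
quantized, centroids = quantize_column(column, bits=2, n_outliers=1)
print(quantized)
```

In this example the weight 3.0 is reserved as an outlier, so it does not pull a centroid away from the dense cluster of small weights.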

Why?: Autoformalization is important for translating informal math into formal theorems and proofs that are machine-verifiable.

How?: The research introduces a neuro-symbolic framework that combines domain knowledge, SMT solvers, and LLMs for autoformalizing Euclidean geometry. The framework leverages theorem provers to automatically fill in diagrammatic information from informal proofs, making the formalization process easier for LLMs. Automatic semantic evaluation is also provided for autoformalized theorem statements. A benchmark called LeanEuclid is constructed with problems from Euclid's Elements and the UniGeo dataset formalized in the Lean proof assistant. Experiments with GPT-4 and GPT-4V demonstrate the capabilities and limitations of state-of-the-art LLMs in autoformalizing geometry problems.

Why?: LLMs have limitations in accurately understanding inputs and generating responses due to tokenization. Improving tokenization can enhance LLMs' capabilities.

How?: The research constructs an adversarial dataset, ADT (Adversarial Dataset for Tokenizer), drawing on the vocabularies of various LLMs to challenge their tokenization. It comprises ADT-Human and ADT-Auto subsets. The study evaluates ADT on leading LLMs such as GPT-4o, Llama-3, and Qwen2.5-max, and finds that it degrades their performance. Automatic data generation proves efficient and applicable to diverse LLMs, highlighting tokenization flaws.

Why?: Decision making demands intricate interplay between perception, memory, and reasoning to discern optimal policies. Conventional approaches face challenges such as low sample efficiency and poor generalization. Foundation agents can offer rapid adaptation to diverse tasks, making them crucial for transforming the learning paradigm.

How?: The research proposes the construction of foundation agents by formulating their fundamental characteristics and challenges inspired by the success of large language models. The roadmap includes steps from data collection to pretraining and adaptation, aligning knowledge and values with LLMs. Critical research questions are identified, and trends for foundation agents in real-world use cases are outlined.

Why?: To investigate the evolution and interaction of cognitive and expressive capabilities in LLMs and understand the development patterns of these abilities.

How?: The research examines Baichuan-7B and Baichuan-33B, bilingual LLMs, to define and explore cognitive and expressive capabilities using linear representations in three phases: Pretraining, Supervised Fine-Tuning (SFT), and Reinforcement Learning from Human Feedback (RLHF). It involves statistical analyses to establish correlations between cognitive and expressive capabilities, assesses how cognitive capacity may restrict expressive potential, delves into the theoretical aspects of these developmental trajectories, evaluates optimization strategies like few-shot learning and repeated sampling, and investigates the connection between the hidden space and output space.

Why?: It is important to determine if large language models can effectively express their intrinsic uncertainty in natural language to improve their trustworthiness and reliability in providing answers.

How?: The research formalized faithful response uncertainty by measuring the gap between the model's confidence in its answers and the decisiveness with which they are conveyed. This metric penalizes both excessive and insufficient hedging, indicating how well the uncertainty is reflected. Various aligned LLMs were evaluated on knowledge-intensive question answering tasks to assess their ability to communicate uncertainty.
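One plausible way to turn this gap into a score is sketched below. It assumes each answer already comes with a decisiveness score and a confidence score in [0, 1], and that the metric is one minus the mean absolute gap. Both the exact formula and the function name are assumptions here, so treat this as an illustration of the idea, not the paper's definition.

```python
from typing import List, Tuple


def faithfulness(pairs: List[Tuple[float, float]]) -> float:
    """pairs holds (decisiveness, confidence) per answer; returns 1 - mean absolute gap."""
    gaps = [abs(decisiveness - confidence) for decisiveness, confidence in pairs]
    return 1.0 - sum(gaps) / len(gaps)


examples = [
    (1.0, 0.5),  # stated decisively, but the model is unsure: too little hedging
    (0.5, 0.5),  # hedged in line with its confidence
    (0.5, 1.0),  # hedged although the model is confident: too much hedging
    (1.0, 1.0),  # decisive and confident
]
score = faithfulness(examples)
print(score)  # 0.75
```

Under this form, too little hedging and too much hedging are penalized symmetrically, matching the description above.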

Why?: Entity matching is crucial for entity resolution, and utilizing LLMs for this task has shown promise. However, existing LLM-based approaches often overlook global consistency between records. This research aims to investigate different methodologies to enhance LLM-based entity matching.

How?: The research compares three main strategies - matching, comparing, and selecting - for LLM-based entity matching. It explores how these strategies incorporate interactions among records from various perspectives. A compositional entity matching (ComEM) framework is designed, leveraging a mix of strategies and LLMs to capitalize on their respective strengths. This framework aims to improve both effectiveness and efficiency of entity matching.

Results: Experimental results showcase significant performance enhancements and cost reductions in LLM-based entity matching by using the ComEM framework.

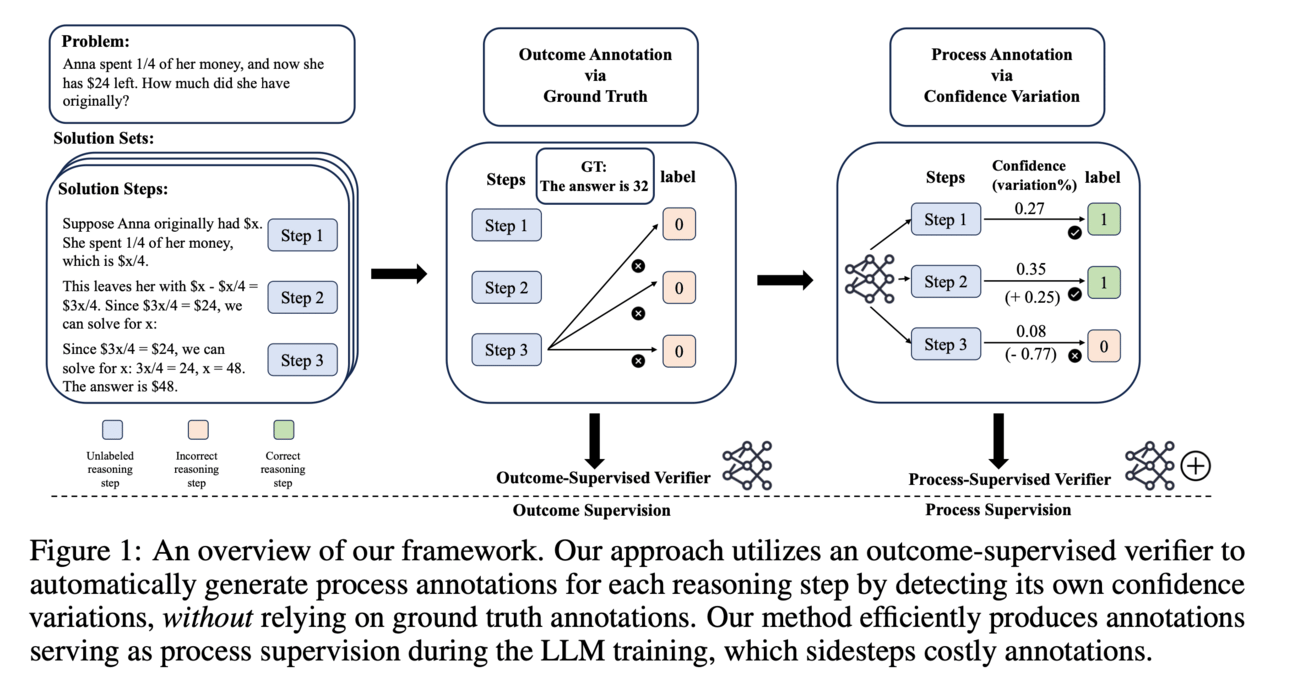

Why?: The research is important as it aims to enhance the reasoning capabilities of large language models by automatically annotating the reasoning steps, which can improve the accuracy of these models in selecting the correct answers.

How?: The approach involves training a verification model on final answer correctness to generate automatic process annotations by assigning confidence scores to each reasoning step. It detects relative changes in these confidence scores to automatically annotate the reasoning process, eliminating the need for manual annotations and reducing computational costs.
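A minimal sketch of the annotation step, assuming the verifier has already produced a confidence score after each reasoning step. The relative-drop threshold, the label names, and the treatment of the first step are illustrative assumptions.

```python
from typing import List


def annotate_steps(confidences: List[float], threshold: float = 0.5) -> List[str]:
    """Label a step 'suspect' when confidence falls by at least `threshold` relative to the previous step."""
    labels = []
    previous = None
    for confidence in confidences:
        if previous is None or previous == 0:
            labels.append("ok")  # assumption: no baseline to compare against
        else:
            relative_change = (confidence - previous) / previous
            labels.append("suspect" if relative_change <= -threshold else "ok")
        previous = confidence
    return labels


# Verifier confidence that the final answer will be correct, after each of four steps.
step_confidences = [0.80, 0.82, 0.30, 0.28]
labels = annotate_steps(step_confidences)
print(labels)  # ['ok', 'ok', 'suspect', 'ok']
```

Only the third step is flagged: confidence stays low afterwards, but the sharp drop happens there, so that step receives the negative process label.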

Results: Substantial improvements in accuracy across five datasets in mathematics and common sense reasoning.

Why?: LLMs often struggle with understanding complex and lengthy contexts. The research introduces the THREAD framework to address this challenge by allowing the model to dynamically spawn new threads based on the context, enabling adaptive problem-solving.

How?: The research proposes the THREAD framework, which treats model generation as a thread of execution. This thread can either run to completion or spawn new threads dynamically, offloading work to child threads. The model decomposes complex tasks or questions into simpler sub-problems for separate child threads to solve. THREAD is implemented using a few-shot learning approach and tested on various benchmarks for agent tasks and question answering.

Results: THREAD achieves state-of-the-art performance with GPT-4 and GPT-3.5 on different benchmarks, including ALFWorld, TextCraft, and WebShop. It also outperforms existing frameworks by 10% to 50% absolute points with smaller models like Llama-3-8b and CodeLlama-7b.
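The spawn-or-finish control flow can be sketched with a toy policy in place of the LLM. Everything here is hypothetical scaffolding: `toy_model` is a hard-coded stand-in for a few-shot prompted model, and the `("answer", ...)` / `("spawn", ...)` protocol is invented for this example.

```python
from typing import Callable, List, Tuple

Action = Tuple[str, object]  # ("answer", text) or ("spawn", list of sub-tasks)


def toy_model(task: str, child_results: List[str]) -> Action:
    """Hypothetical policy: split long sums into halves, answer short ones directly."""
    numbers = task[len("sum "):].split()
    if child_results:  # children have reported back: combine their answers
        return ("answer", str(sum(int(r) for r in child_results)))
    if len(numbers) > 2:  # too complex: offload to two child threads
        mid = len(numbers) // 2
        return ("spawn", ["sum " + " ".join(numbers[:mid]), "sum " + " ".join(numbers[mid:])])
    return ("answer", str(sum(int(n) for n in numbers)))


def run_thread(task: str, model: Callable[[str, List[str]], Action], depth: int = 0, max_depth: int = 8) -> str:
    """Run one thread to completion, recursively running any child threads it spawns."""
    if depth > max_depth:
        raise RecursionError("thread nesting too deep")
    child_results: List[str] = []
    while True:
        action, payload = model(task, child_results)
        if action == "answer":
            return str(payload)
        # Each sub-task runs in its own child thread; only its result returns to the parent.
        child_results = [run_thread(sub, model, depth + 1, max_depth) for sub in payload]


result = run_thread("sum 1 2 3 4 5", toy_model)
print(result)  # 15
```

The parent never sees the children's intermediate work, only their final answers, which keeps each thread's context short.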

Why?: Can binary and ternary transformer networks offer a more interpretable alternative in LLMs while maintaining efficiency?

How?: The research compares how binary and ternary transformer networks perform relative to full-precision transformer networks.

Let’s make LLMs safe!!

Why?: Integrating new modalities into LLMs increases the vulnerability to adversarial attacks, bypassing traditional safety training methods.

How?: The research investigates whether unlearning solely in the textual domain is sufficient for cross-modality safety alignment. The study evaluates this approach across six datasets to analyze its effectiveness in reducing the Attack Success Rate (ASR) for text-based and vision-text-based attacks while maintaining utility. The experiments compare unlearning in VLMs with and without a multi-modal dataset to understand its impact on safety alignment.

The research proposes a framework for Backdoor Attacks against LLM-enabled Decision-making systems (BALD) by introducing attacks during the fine-tuning phase using word injection, scenario manipulation, and knowledge injection mechanisms. These attacks target different components in the LLM-based decision-making pipeline. Extensive experiments are conducted with three popular LLMs (GPT-3.5, LLaMA2, PaLM2) on two datasets (HighwayEnv, nuScenes) to demonstrate the effectiveness and stealthiness of the proposed backdoor triggers and mechanisms.

Why?: Understanding the relationship between flatness of the loss surface and adversarial robustness is crucial for improving the robustness of machine learning models, including LLMs.

How?: The research empirically analyzes the relation between adversarial examples and relative flatness with respect to the parameters of one layer. It observes a peculiar property of adversarial examples during iterative first-order white-box attacks. The study is conducted across various model architectures and datasets, extending the results to large language models. The theoretical connection between relative flatness and adversarial robustness is established by bounding the third derivative of the loss surface.

The paper introduces a knowledge transfer (KT) method that uses detailed reasoning paths to transfer knowledge from larger LLMs to smaller ones. This helps fine-tune smaller models to produce accurate predictions with calibrated confidence levels. The experimental evaluation covers multiple-choice questions and sentiment analysis on various datasets, comparing the KT method with vanilla and question-answer pair (QA) fine-tuning methods.

Why?: Fine-tuning LLMs requires significant hardware resources, which can be impractical for typical users. As fine-tuning can increase safety risks to LLMs, there is a need to develop methods to reduce these risks while maintaining performance.

How?: The research proposes Safe LoRA, a simple one-liner patch to the LoRA fine-tuning method. Safe LoRA introduces the projection of LoRA weights from selected layers to a safety-aligned subspace, reducing safety risks in LLM fine-tuning while maintaining utility. It is a training-free and data-free approach, requiring only the knowledge of weights from the base and aligned LLMs. Extensive experiments were conducted to demonstrate the effectiveness of Safe LoRA.
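To make the projection idea concrete, here is a deliberately simplified sketch. It flattens each weight matrix into a vector and projects the LoRA update onto the single direction `w_aligned - w_base`, so the "subspace" is one-dimensional. The cosine-similarity rule for deciding which layers to project and the threshold `tau` are assumptions of this sketch, and none of it should be read as the paper's exact procedure.

```python
import math
from typing import List

Matrix = List[List[float]]


def flatten(m: Matrix) -> List[float]:
    return [x for row in m for x in row]


def dot(a: List[float], b: List[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def cosine(a: List[float], b: List[float]) -> float:
    norm = math.sqrt(dot(a, a)) * math.sqrt(dot(b, b))
    return dot(a, b) / norm if norm else 0.0


def project_onto_alignment(delta_w: Matrix, w_base: Matrix, w_aligned: Matrix) -> Matrix:
    """Orthogonally project the flattened update onto the direction (w_aligned - w_base)."""
    v = [a - b for a, b in zip(flatten(w_aligned), flatten(w_base))]
    scale = dot(flatten(delta_w), v) / dot(v, v)
    cols = len(delta_w[0])
    flat = [scale * x for x in v]
    return [flat[i:i + cols] for i in range(0, len(flat), cols)]


def safe_update(delta_w: Matrix, w_base: Matrix, w_aligned: Matrix, tau: float = 0.5) -> Matrix:
    """Hypothetical layer rule: project only when the update drifts away from the alignment direction."""
    projected = project_onto_alignment(delta_w, w_base, w_aligned)
    similarity = cosine(flatten(delta_w), flatten(projected))
    return projected if similarity < tau else delta_w


w_base = [[0.0, 0.0], [0.0, 0.0]]
w_aligned = [[1.0, 0.0], [0.0, 1.0]]
drifting = safe_update([[2.0, 3.0], [0.0, 0.0]], w_base, w_aligned)
compatible = safe_update([[1.0, 0.0], [0.0, 1.0]], w_base, w_aligned)
print(drifting, compatible)
```

No training data or gradient steps are involved: the function only needs the base weights, the aligned weights, and the LoRA update, in line with the training-free, data-free description above.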

Creative ways to use LLMs!!

Video Enriched Retrieval Augmented Generation Using Aligned Video Captions - Uses aligned captions from videos to add more context to the LLM's input.

HEART-felt Narratives: Tracing Empathy and Narrative Style in Personal Stories with LLMs

Understand the relationship between narrative style and empathy in personal stories

How?: The research empirically examines the relationship between style and empathy using LLMs and large-scale crowdsourcing studies. It introduces the HEART taxonomy to delineate narrative style elements influencing empathy, evaluates LLMs in extracting these elements, and collects a dataset of empathy judgments via crowdsourcing.

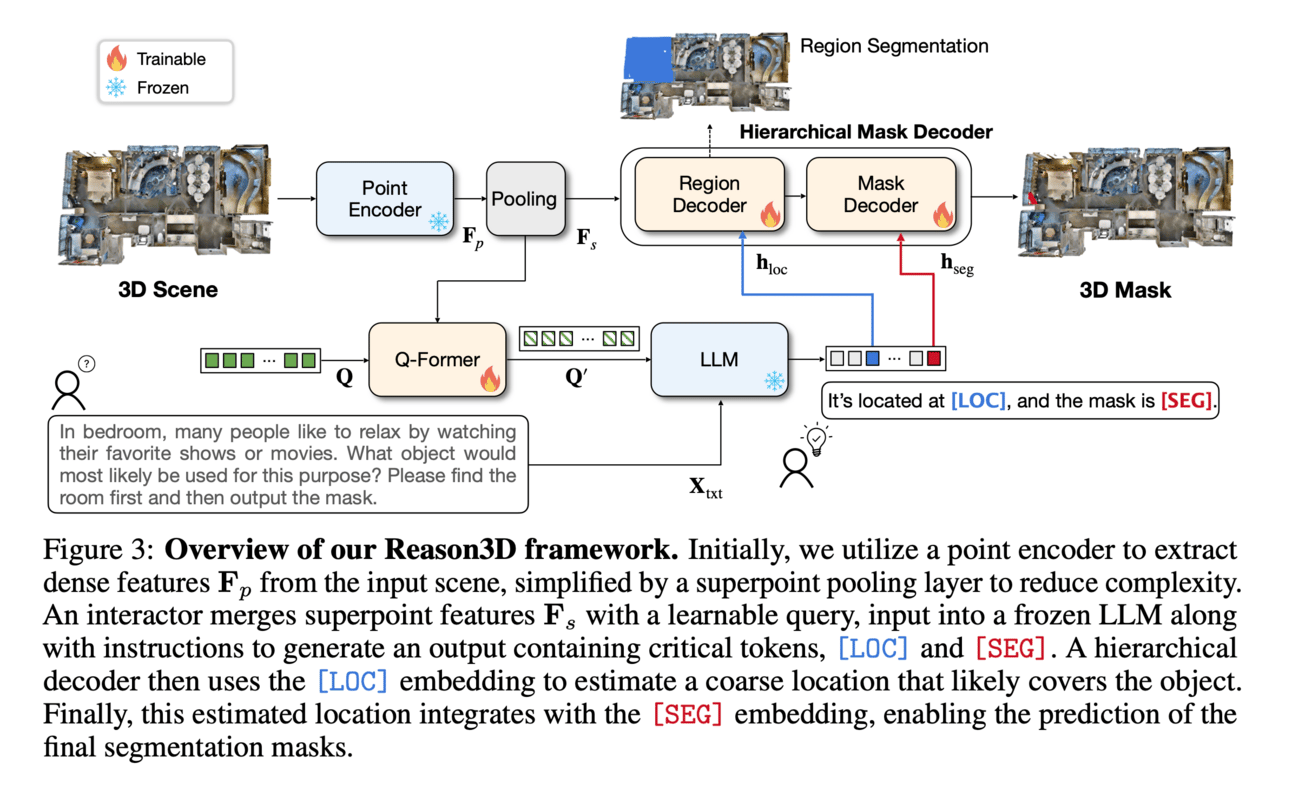

Reason3D: Searching and Reasoning 3D Segmentation via Large Language Model - A novel LLM specifically designed for comprehensive 3D understanding.

How?: Reason3D takes point cloud data and text prompts as input to produce textual responses and segmentation masks. It uses a hierarchical mask decoder for locating small objects in expansive scenes, generating coarse estimates followed by detailed segmentation. The approach enhances the precision of object identification and segmentation.

The Peripatetic Hater: Predicting Movement Among Hate Subreddits - LLMs are used to understand how users move between different hate subreddits, which can help predict radicalization and identify the associated risks on social media platforms.

QUB-Cirdan at 'Discharge Me!': Zero shot discharge letter generation by open-source LLM - Uses LLMs to automate the generation of critical sections of patient discharge letters in clinics.

How?: The approach utilized the Llama3 8B quantized model with a zero-shot method combined with Retrieval-Augmented Generation (RAG) to generate the 'Brief Hospital Course' and 'Discharge Instructions' sections. A curated template-based approach was developed to ensure reliability and consistency, and RAG was integrated for word count prediction. Several unsuccessful experiments were also conducted to provide insights for the competition.

LLM-Assisted Static Analysis for Detecting Security Vulnerabilities - LLM-based static analysis to identify security vulnerabilities.

How?: The researchers propose IRIS, a method that integrates LLMs with static analysis to conduct whole-repository reasoning for identifying security vulnerabilities. They create a new dataset, CWE-Bench-Java, containing 120 manually validated security vulnerabilities in real-world Java projects. The projects are complex, averaging 300,000 lines of code and reaching up to 7 million. IRIS utilizes GPT-4 to detect 69 out of 120 vulnerabilities, outperforming the state-of-the-art static analysis tool that only identifies 27. Moreover, IRIS substantially decreases false alarms by over 80% in the best scenario.

LLM-Optic: Unveiling the Capabilities of Large Language Models for Universal Visual Grounding - Visual grounding links text queries with specific regions in an image. However, existing models struggle with complex queries.

How?: First, LLM-Optic utilizes LLMs as a text grounder to interpret complex queries and identify intended objects accurately. Then, a pre-trained visual grounding model generates candidate bounding boxes based on the refined query. Subsequently, LLM-Optic annotates these bounding boxes with numerical marks to link text with image regions. Finally, a Large Multimodal Model acts as a Visual Grounder to select objects that best match the original query.

The research proposes an LLM-based multi-agent manufacturing system that defines agents with specific roles: Machine Server Agent, Bid Inviter Agent (BIA), Bidder Agent (BA), Thinking Agent, and Decision Agent. The LLMs support the Thinking Agent and Decision Agent in analyzing shopfloor conditions intelligently and choosing suitable machines rather than following predefined rules. The system involves negotiation steps between the BAs and the BIA to finalize the distribution of orders and connect manufacturing resources, with Machine Server Agents coordinating physical shopfloor operations.

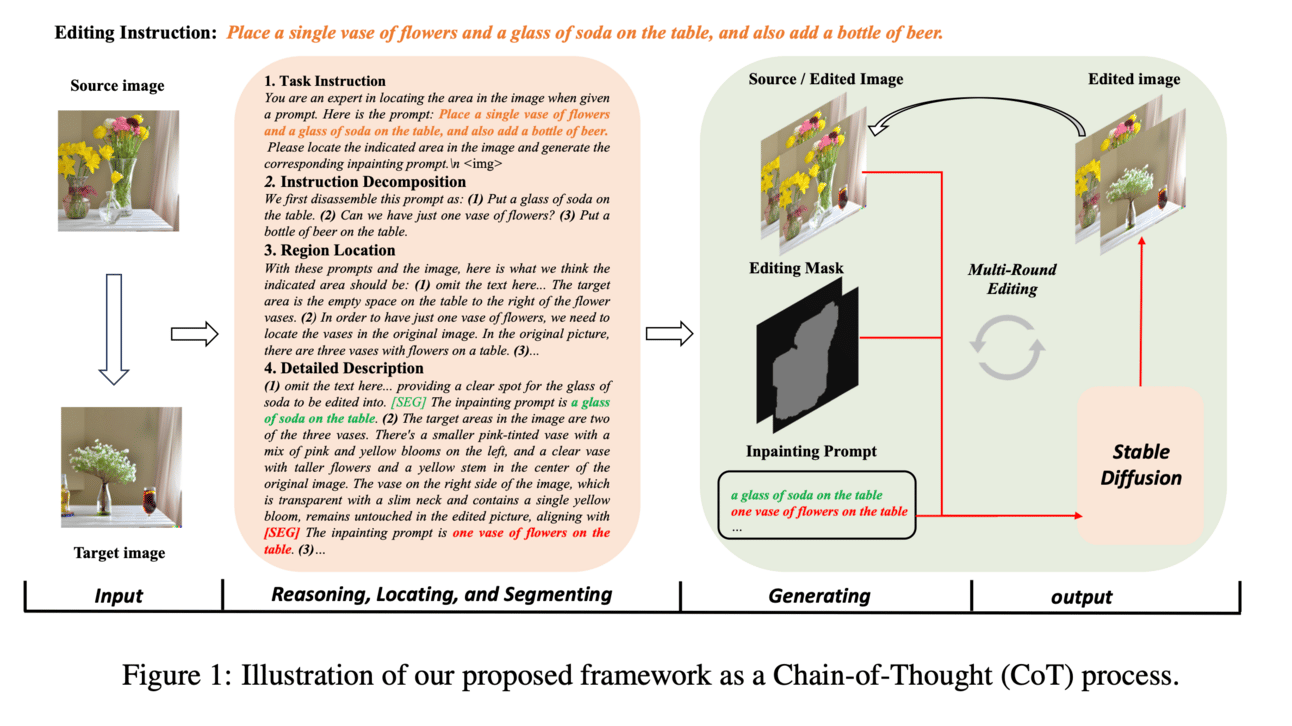

TIE: Revolutionizing Text-based Image Editing for Complex-Prompt Following and High-Fidelity Editing - Interpreting complex prompts and maintaining image consistency during editing are crucial in image generation.

How?: The researchers design a Chain-of-Thought (CoT) process involving instruction decomposition, region localization, and detailed description. They fine-tune the LISA model, a lightweight multimodal LLM, using the CoT process and the mask of the edited image. By enhancing the diffusion models with prompt knowledge and image mask, they enable superior image generation with improved prompt understanding.

Why?: Automating the generation of helper assertions in Dafny programs can reduce the burden on proof engineers and improve the efficiency of program verification.

How?: The tool, Laurel, uses LLMs to automatically generate helper assertions by designing two domain-specific prompting techniques. First, it helps the LLM determine the location of the missing assertion by analyzing the verifier's error message and inserting an assertion placeholder. Second, it provides example assertions from the same codebase based on a new lemma similarity metric. The techniques were evaluated on a dataset of helper assertions extracted from real-world Dafny codebases.

CHESS: Contextual Harnessing for Efficient SQL Synthesis - Converts user questions and their context into SQL queries.

The paper uses hierarchical retrieval methods, model-generated keywords, locality-sensitive hashing indexing, vector databases, and an adaptive schema pruning technique based on problem complexity and context size. The approach is tested on both proprietary models like GPT-4 and open-source models such as Llama-3-70B, and ablation studies demonstrate its effectiveness.

LLMs for robotics & VLLMs

Enhanced Robot Arm at the Edge with NLP and Vision Systems - LLMs and vision systems were used together to interpret and execute complex commands conveyed through natural language to improve the accessibility and adaptability of assistive robotic systems.

LARM: Large Auto-Regressive Model for Long-Horizon Embodied Intelligence - The research introduces the Large Auto-Regressive Model (LARM) that utilizes text and multi-view images to predict subsequent actions in an auto-regressive manner. It incorporates a novel data format called auto-regressive node transmission structure and a corresponding dataset. LARM is trained using a two-phase training approach to harvest enchanted equipment in Minecraft, demonstrating more complex decision-making chains than previous methods. The training also enhances the speed of LARM by 6.8 times.

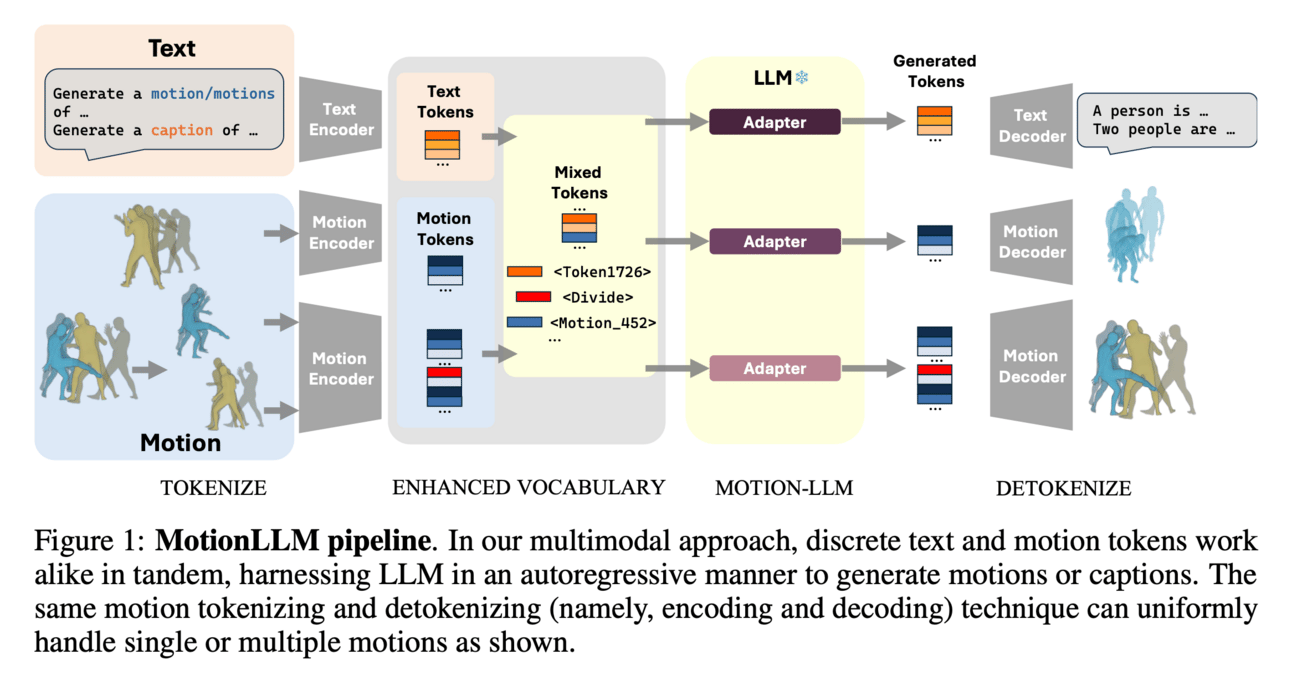

MotionLLM: Multimodal Motion-Language Learning with Large Language Models - Supports single-human and multi-human motion generation as well as motion captioning by fine-tuning pre-trained LLMs, showcasing scalability and flexibility.

How?: MotionLLM encodes and quantizes motions into discrete tokens understandable by LLMs, creating a unified vocabulary of motion and text tokens. By training LLMs with adapters using only 1-3% of their parameters, the framework achieves results comparable to diffusion models and transformer-based models in single-human motion generation. It can be extended to multi-human motion generation through autoregressive generation of single-human motions.
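The "quantize motions into discrete tokens" step can be sketched as a nearest-neighbour lookup in a codebook. This assumes a fixed, hand-written codebook and raw 2-D frame features. A real system would learn both an encoder and the codebook, and the `<motion_i>` token naming is invented for this example.

```python
from typing import List


def quantize_motion(frames: List[List[float]], codebook: List[List[float]]) -> List[str]:
    """Map each motion frame to the token of its nearest codebook entry (squared Euclidean distance)."""
    tokens = []
    for frame in frames:
        nearest = min(
            range(len(codebook)),
            key=lambda i: sum((a - b) ** 2 for a, b in zip(frame, codebook[i])),
        )
        tokens.append(f"<motion_{nearest}>")
    return tokens


def build_unified_vocab(text_vocab: List[str], codebook_size: int) -> List[str]:
    """Extend the text vocabulary with one new token per codebook entry."""
    return text_vocab + [f"<motion_{i}>" for i in range(codebook_size)]


codebook = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]     # toy codebook with 3 entries
frames = [[0.1, -0.1], [0.9, 0.2], [0.2, 0.8]]      # toy per-frame motion features
motion_tokens = quantize_motion(frames, codebook)
vocab = build_unified_vocab(["a", "person", "walks"], len(codebook))
print(motion_tokens)  # ['<motion_0>', '<motion_1>', '<motion_2>']
```

Once motions are strings of such tokens, the LLM can read and emit them like ordinary text, which is what allows a single vocabulary to cover both captioning and generation.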