Core research improving LLMs!

Why?: Understanding the limitations of preference learning algorithms is crucial for improving how LLMs are trained to align with human preferences.

How?: The researchers conducted a detailed analysis of the ranking accuracy of state-of-the-art preference-tuned models. They evaluated models trained with popular preference learning algorithms like Reinforcement Learning from Human Feedback (RLHF) and Direct Preference Optimization (DPO). To measure performance, they assessed how often these models assigned higher likelihoods to more preferred outputs compared to less preferred ones, using established preference datasets. Additionally, they derived the idealized ranking accuracy that would be achieved if a preference-tuned LLM optimized the DPO or RLHF objectives perfectly. An efficient formula was introduced to quantify the difficulty of learning from given preference datapoints, and experiments were conducted to explore the relationship between ranking accuracy and the empirically popular win rate metric when the model closely matches the reference model used in learning objectives. This provided insights into the alignment gap and differences between on-policy (e.g., RLHF) and off-policy (e.g., DPO) learning algorithms.

Why?: Integrating LLMs with external tool invocations has significantly increased the complexity and latency of LLM serving workloads.

How?: The researchers introduced Conveyor, an LLM serving system designed to optimize the handling of requests that involve external tools. Conveyor employs a novel interface specifically for tool developers, allowing them to expose partial execution opportunities. This enables portion of tool operations to be executed concurrently alongside LLM decoding. Additionally, the system includes a request scheduler that efficiently manages these partial tool executions. By doing so, Conveyor aims to reduce the latency ordinarily associated with handling LLM requests that require external tool interactions.

Why?: The research focuses on enhancing the alignment of LLMs to better follow human intentions, which is crucial for their practical utility and safety.

How?: The authors propose a novel approach called Self-Exploring Language Models (SELM) to improve the alignment of LLMs through online preference elicitation. The method involves a bilevel optimization objective that is optimistically biased towards responses that are potentially high-reward but out-of-distribution. The inner-level optimization problem is solved using a reparameterized reward function, eliminating the need for a separate reward model. This iterative method allows the LLM to explore more diverse response spaces efficiently. Experiments were conducted using the Zephyr-7B-SFT and Llama-3-8B-Instruct models, and the SELM approach was tested on various benchmarks, including MT-Bench and AlpacaEval 2.0.

Results: The experimental results indicate that SELM significantly improves performance on instruction-following benchmarks such as MT-Bench and AlpacaEval 2.0, as well as various standard academic benchmarks in different settings. This demonstrates that SELM's active exploration leads to better alignment and enhanced performance of LLMs.

Why?: Fostering cooperation between agents and humans in environments with social norms is a critical challenge.

How?: The research introduces the concept of 'Normative Modules,' an architectural enhancement for generative agents. These modules allow agents to recognize and adapt to normative structures within an environment, focusing particularly on the problem of equilibrium selection in cooperative scenarios. To test this, the researchers designed a new type of environment that supports institutions. Agents equipped with the normative module learn via peer interactions to determine which among several candidate institutions is treated as authoritative by a group. This learning process helps the agents to better coordinate their sanctioning behavior, which in turn influences primary behaviors within a social setting. The study makes use of two key evaluation criteria: (i) the ability of the agent to ignore non-authoritative institutions and (ii) the ability to identify authoritative institutions among multiple candidates. These evaluations are essential to measure the stability and effectiveness of cooperation induced by the normative module.

Results: The study demonstrates that agents equipped with normative modules achieve more stable cooperative outcomes compared to baseline agents lacking such modules. This showcases the module's effectiveness in enabling agents to navigate and adapt to social norms, thereby fostering higher average welfare in multi-agent interactions.

Why?: The research aims to improve the transparency and performance of LLMs by creating an open-sourced, highly capable bilingual LLM.

How?: The researchers designed and trained a new bilingual language model called MAP-Neo with 7 billion parameters. The training was conducted on 4.5 trillion high-quality tokens. Crucially, they open-sourced not only the model's weights but all the details required to reproduce the model, including the pre-training corpus, data cleaning pipeline, intermediate checkpoints, and the training and evaluation frameworks. This approach allows for complete transparency and offers the research community the tools needed to study, improve, and innovate upon the model.

Results: The MAP-Neo model showed performance comparable to the state-of-the-art LLMs and provided a fully transparent and reproducible framework, enhancing the open research community's capability to study, innovate, and facilitate further improvements.

Why?: The research addresses the limitations of LLMs in hallucination and lack of attribution for their outputs.

How?: The authors introduce Nearest Neighbor Speculative Decoding (NEST), a semi-parametric language modeling approach. Unlike traditional LLMs, NEST performs token-level retrieval at each inference step and computes a semi-parametric mixture distribution to identify promising text span continuations from a non-parametric data store. The approach uses an approximate speculative decoding mechanism to either accept a retrieved text prefix or generate a new token, enhancing text fluency and providing source attribution. This approach refines the output of the base LM, outperforming kNN-LM in both quality and speed. The method was applied to Llama-2-Chat 70B for evaluation.

Results: NEST significantly enhances the generation quality and attribution rate of the base LM across a variety of knowledge-intensive tasks, surpassing the conventional kNN-LM method and performing competitively with in-context retrieval augmentation. Additionally, NEST substantially improves the generation speed, achieving a 1.8x speedup in inference time when applied to Llama-2-Chat 70B.

Why?: There is a growing need for improving the understanding of long-form videos, as current methods often miss important details and operate inefficiently.

How?: The research introduces VideoTree, a hierarchical framework designed to dynamically extract and organize query-related information from long videos for better LLM reasoning. The process involves three stages: 1. Adaptive Frame Selection: Instead of using all frames, VideoTree uses an iterative clustering mechanism based on visual features. It then scores these clusters based on their relevance to the given query, ensuring that only significant frames are selected for captioning. 2. Hierarchical Tree Organization: The selected frames are organized into a tree structure where nodes represent clusters of varying relevance and granularity. This tree structure allows finer details to be encoded where needed most, according to the query's requirements. 3. Tree Traversal for Answer Generation: The hierarchical tree's keyframes are traversed, and their captions are sent to the LLM to generate an accurate answer. This ensures that only the most relevant information is processed, optimizing both accuracy and efficiency.

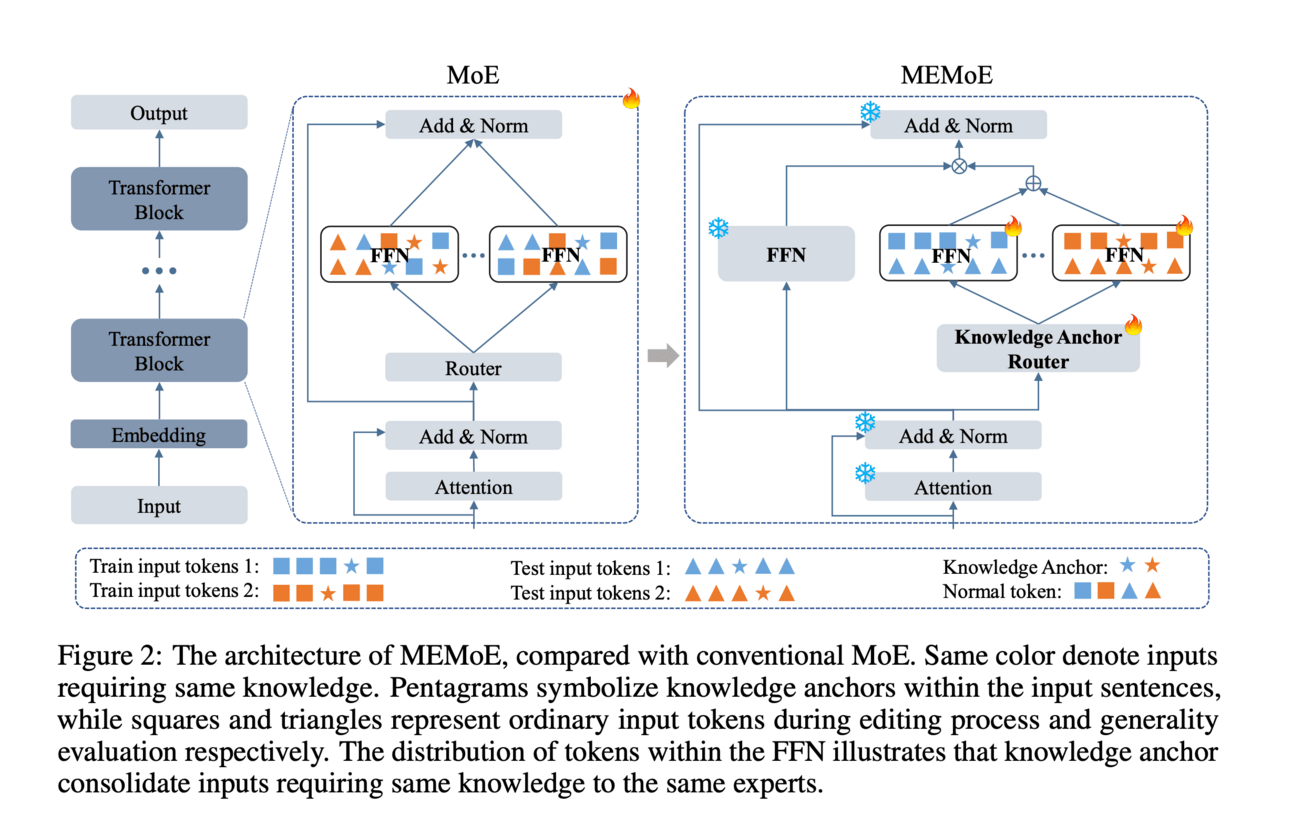

Why?: Enhancing how LLMs can be efficiently and effectively edited improves their adaptability and reliability for various tasks without degrading their overall performance.

How?: The research introduces MEMoE, a novel model editing adapter that uses a Mixture of Experts (MoE) architecture paired with a knowledge anchor routing strategy. The MoE architecture facilitates the updating of the model's knowledge through a bypass mechanism, which ensures that the original parameters of the LLMs remain unchanged, thus preserving their general capabilities. The knowledge anchor routing component is crucial as it routes inputs that demand similar knowledge to the same expert within the MoE framework, which enhances the generalization of the new, updated knowledge while maintaining local specificity. This method was rigorously tested through different tasks, specifically batch editing and sequential batch editing, to compare its performance against existing model editing techniques.

Results: Experimental results show the superiority of our approach over both batch editing and sequential batch editing tasks, exhibiting exceptional overall performance alongside outstanding balance between generalization and locality.

Why?: Integrating both external and parametric knowledge into LLMs can overcome limitations like outdated static memory, potentially improving their performance.

How?: The authors propose a four-scenario framework to deconstruct knowledge fusion within LLMs. They developed a systematic pipeline for creating datasets and infusing knowledge, which facilitates controlled experiments. This setup allowed for comprehensive testing of LLMs' ability to blend external and parametric knowledge. The investigation involves assessing the various fusion scenarios to identify strengths and weaknesses in LLMs' knowledge integration processes. Specific methods for enhancing parametric knowledge were applied to test if it would subsequently boost the overall capability of the model in these fusion scenarios.

Results: The study demonstrates that enhancing parametric knowledge within LLMs can significantly improve their capability for knowledge integration. However, it also highlights challenges around memorizing and accurately eliciting parametric knowledge and delineating its boundaries. These insights are intended to guide future work in harmonizing external and parametric knowledge in LLMs.

Why?: LLMs often generate unfaithful chain-of-thought (CoT) reasoning, impacting their reliability in tasks requiring complex reasoning.

How?: The researchers first analyzed CoT faithfulness at the level of individual reasoning steps and identified two reasoning paradigms: centralized and distributed reasoning, noting their relationship with faithfulness. They conducted a joint analysis examining the causal relevance among context, CoT, and the answer, discovering that the model can recall correct information from context that was missing in the CoT, leading to faithfulness issues. To address this, they proposed an inferential bridging method that employs an attribution method to recall pertinent information as hints during CoT generation and uses semantic consistency and attribution scores to filter out noisy CoTs.

Why?: Improving the effectiveness of language generation models by exploring alternative scoring rules beyond the commonly used logarithmic score.

How?: The researchers propose using strictly proper scoring rules other than the logarithmic score, specifically the Brier score and the Spherical score, for training language generation models. Instead of relying solely on maximum likelihood estimation (MLE) with log-likelihood loss, they adapt these non-local scoring rules to the context of language generation. The adaptation involves training the models with these alternative loss functions without adjusting other hyperparameters. They evaluate the performance of these models using large language models like LLaMA-7B and LLaMA-13B to determine if the alternative loss functions improve language generation capabilities.

Results: Experimental results indicate that simply substituting the loss function, without adjusting other hyperparameters, can yield substantial improvements in the model's generation capabilities.

Why?: Large Language Models are immense in size, making them difficult to deploy on memory-constrained edge devices. Reducing their size without significant losses in performance is critical for broader and more efficient applications.

How?: The researchers introduced a post-training LLM compression algorithm named CALDERA, which leverages the low-rank structure of weight matrices. The algorithm approximates a weight matrix 𝐖 using a decomposition of the form 𝐖≈𝐐 + 𝐋𝐑, where 𝐋 and 𝐑 are low-rank matrices, and 𝐐, 𝐋, and 𝐑 are quantized to low precision. The model is compressed by substituting each layer with its 𝐐 + 𝐋𝐑 decomposition. The decomposition is achieved by setting up an optimization problem: min_𝐐,𝐋,𝐑‖(𝐐 + 𝐋𝐑 - 𝐖)𝐗^⊤‖_ F^2, with 𝐗 as calibration data. Constraints ensure that 𝐐, 𝐋, and 𝐑 are in low-precision formats. Additionally, 𝐋 and 𝐑 can be adapted for enhancing zero-shot performance. The research also provides theoretical upper bounds on the approximation error using rank-constrained regression framework and explores the tradeoff between compression ratios and model performance.

Results: Results illustrate that compressing LlaMa-2 7B/70B and LlaMa-3 8B models obtained using CALDERA outperforms existing post-training LLM compression techniques in the regime of less than 2.5 bits per parameter.

LLMs evaluations

MathChat: Benchmarking Mathematical Reasoning and Instruction Following in Multi-Turn Interactions - Introduces MathChat, a benchmark for testing LLMs in multi-turn mathematical problem solving. Highlights the significant improvements in model performance after fine-tuning with the MathChat sync dataset, particularly in multi-turn settings.

MASSIVE Multilingual Abstract Meaning Representation: A Dataset and Baselines for Hallucination Detection - Describes the creation of the MASSIVE-AMR dataset for studying multilingual abstract meaning representation and hallucination detection in AMR tasks. Shows that while LLMs are promising in structured parsing tasks, issues like linguistic diversity and accuracy remain challenges.

Are You Sure? Rank Them Again: Repeated Ranking For Better Preference Datasets - Proposes a Repeat Ranking method to enhance consistency in ranking outputs, improving the quality of datasets for training LLMs with reinforcement learning. Demonstrates that repeated ranking yields better performance and more reliable training data compared to standard methods.

LLMs achieve adult human performance on higher-order theory of mind tasks - Evaluates the ability of LLMs like GPT-4 and Flan-PaLM to handle complex theory of mind tasks against adult human benchmarks. Finds that these models reach or exceed adult human performance, underscoring the potential of LLMs in advanced cognitive processing.

Let’s make LLMs safe!!

Why?: Assessing the safety of large language models (LLMs) by fully eliciting their capabilities is critical for AI developers.

How?: The researchers created password-locked models, which are LLMs fine-tuned to hide certain capabilities unless a specific password is present in the input prompt. The purpose is to see if sophisticated elicitation methods, particularly fine-tuning, can reveal these hidden capabilities. They conducted experiments to test various elicitation techniques. First, they provided a few high-quality demonstrations to the models to see if these could fully reveal the password-locked capabilities. They also tested the efficacy of fine-tuning with the password as well as with different passwords. Further, they evaluated reinforcement learning methods to elicit capabilities when demonstrations were not available.

Results: The study found that a few high-quality demonstrations are often sufficient to fully elicit password-locked capabilities. More surprisingly, fine-tuning can also elicit other capabilities that have been locked using the same or different passwords. Furthermore, reinforcement learning methods proved effective in eliciting capabilities when demonstrations were unavailable. Therefore, fine-tuning is an effective but potentially unreliable method for eliciting hidden capabilities, especially when models' hidden capabilities exceed those of human demonstrators.

Why?: Addressing safety concerns in LLM alignment with human preferences is critical for enhancing their usability and reliability.

How?: The researchers propose a novel method to simplify the constrained alignment problem in LLMs by transforming it into an unconstrained alignment problem using a dualization approach. Instead of relying on computationally expensive and unstable Lagrangian-based primal-dual policy optimization methods, the authors pre-optimize a smooth and convex dual function that has a closed form. This eliminates the need for repeated primal-dual policy iterations. They develop two practical algorithms based on this strategy: MoCAN for model-based scenarios and PeCAN for preference-based scenarios. Both algorithms leverage the dualization technique to reduce computational burden and improve training stability.

Results: A broad range of experiments demonstrate the effectiveness of the proposed MoCAN and PeCAN algorithms in improving computational efficiency and training stability for safety alignment in LLMs.

Why?: Understanding the biases and variability in LLM responses is crucial to ensure robust and fair applications of these models in modeling decisions or collective behaviors.

How?: The researchers conducted over one million simulations where LLMs were asked to answer subjective questions. They compared these answers to real data obtained from the European Social Survey (ESS). To measure the differences and biases between LLMs and survey data, they employed methods such as calculating weighted means and introduced a new measure inspired by the Jaccard similarity index. This approach allowed them to quantitatively analyze and highlight the cultural, age, and gender biases in the models' responses. Additionally, the study emphasized the importance of evaluating the robustness and variability of prompts used for LLMs.

Results: The study found significant cultural, age, and gender biases in LLMs' responses compared to real-world survey data. These findings underline the necessity of careful prompt analysis and bias mitigation to ensure LLMs' outputs are reliable and trustworthy for modeling individual or collective behaviors.

Why?: To address the challenge of incorporating uncertainty estimation in the reward function for reinforcement learning (RL) from human feedback (RLHF), making it suitable for large language models (LLMs).

How?: The researchers introduced Value-Incentivized Preference Optimization (VPO), a unified approach for both online and offline RLHF. VPO works by regularizing the maximum likelihood estimate of the reward function using the corresponding value function. This regularization is modulated by a sign indicating whether optimism or pessimism is chosen. The core of VPO is its implicit reward modeling, which ensures a simpler RLHF pipeline akin to direct preference optimization. Theoretical guarantees are provided for VPO in both online and offline scenarios, aligning with rates of standard RL methods. The method was validated through empirical experiments in text summarization and dialog tasks, demonstrating its practical effectiveness.

Results: The method showed practicality and effectiveness in empirical experiments, specifically in text summarization and dialog tasks.

Creative ways to use LLMs!!

Unlocking the Potential of Large Language Models for Clinical Text Anonymization: A Comparative Study - Examines LLMs for anonymizing clinical texts, introducing six new evaluation metrics to ensure privacy while maintaining data utility.

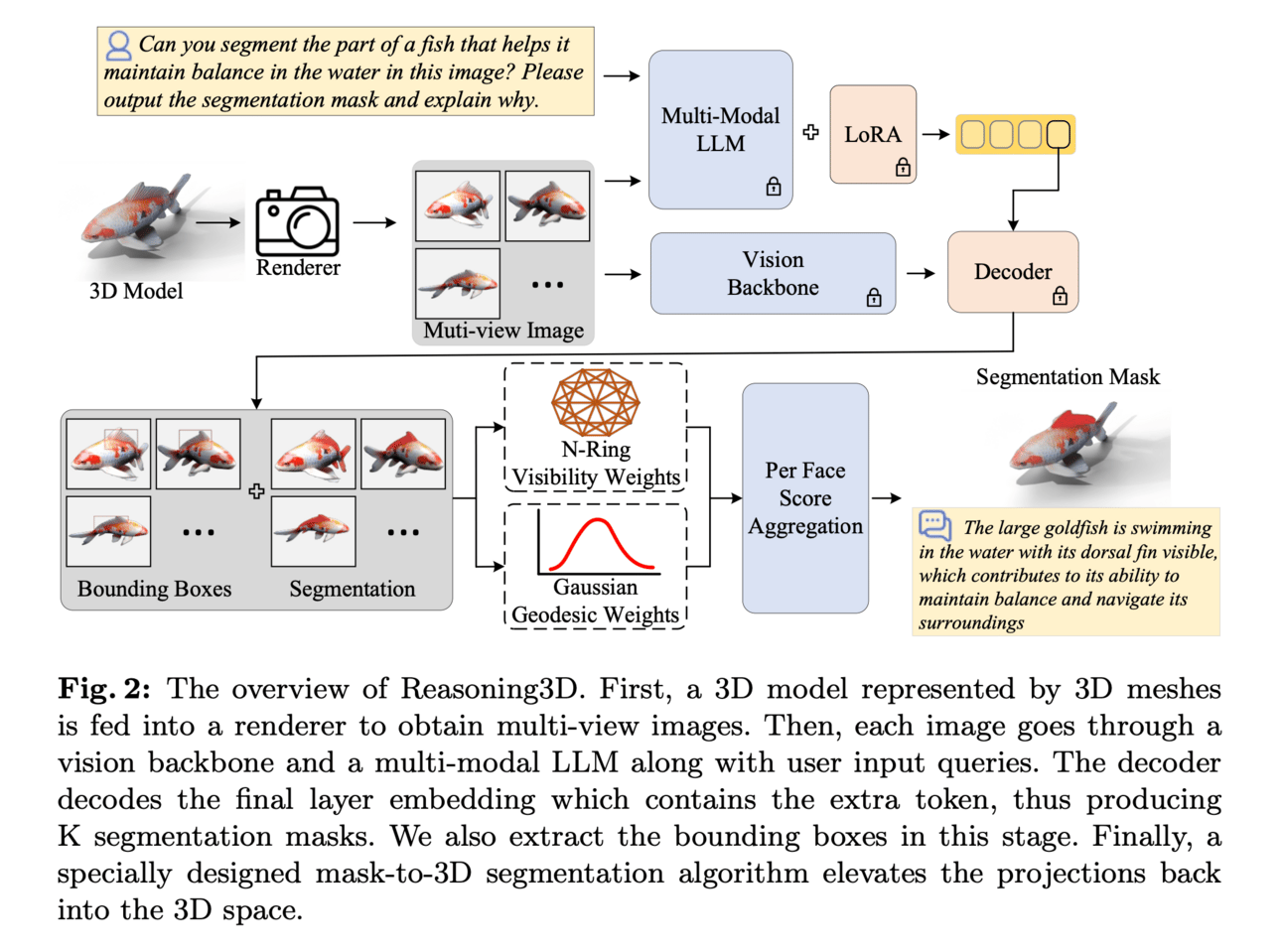

Reasoning3D – Grounding and Reasoning in 3D: Fine-Grained Zero-Shot Open-Vocabulary 3D Reasoning Part Segmentation via Large Vision-Language Models - Introduces Reasoning3D, a method using LLMs for 3D object segmentation based on textual queries, with rapid deployment in various applications.

Towards Next-Generation Urban Decision Support Systems through AI-Powered Generation of Scientific Ontology using Large Language Models – A Case in Optimizing Intermodal Freight Transportation - Details an AI workflow using the ChatGPT API for generating scientific ontologies, enhancing decision-making in urban and environmental management.

Learning from Litigation: Graphs and LLMs for Retrieval and Reasoning in eDiscovery - Explores a hybrid approach combining graph-based and LLM-based methods for improving eDiscovery processes in legal contexts.

Can Graph Learning Improve Task Planning? - Examines the integration of Graph Neural Networks with LLMs for enhancing task planning, demonstrating improvements in decision-making on complex tasks.

Kestrel: Point Grounding Multimodal LLM for Part-Aware 3D Vision-Language Understanding - Introduces Kestrel, a multimodal LLM enhancing 3D vision-language tasks by focusing on part-level understanding and grounded language comprehension.

Grasp as You Say: Language-guided Dexterous Grasp Generation - Introduces DexGYSNet, a dataset enabling robots to perform dexterous grasping from human language commands, enhancing human-robot interaction.

Validates the effectiveness of the DexGYSGrasp framework through extensive experiments, proving its capability in generating intent-aligned and high-quality dexterous grasps.