Core research improving LLMs!

Why?: The research is important as it explores the integration of gradient-based optimization with LLM-based optimization to enhance the efficacy of solving complex non-convex optimization problems in prompt tuning.

How?: The research proposes a collaborative optimization approach that combines gradient-based and LLM-based optimizers in an interleaved manner. The team begins by using a gradient-based optimizer to make locally optimal updates at each step, akin to a diligent doer. Alongside, they introduce an LLM-based optimizer that acts as a high-level instructor by inferring solutions from natural language instructions. Task descriptions and optimization trajectories recorded during the gradient-based optimization process are utilized to instruct the LLMs. The inferred results from these LLMs serve as restarting points for subsequent stages of gradient optimization. This iterative process leverages both the rigorous local updates of gradient-based methods and the high-level deductive reasoning capabilities of LLMs.

Results: The combined optimization method consistently yields improvements over competitive baseline prompt tuning methods, demonstrating the synergistic effect of conventional gradient-based optimization and the inference ability of LLMs.
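To make the interleaving concrete, here is a minimal Python sketch on a toy one-dimensional non-convex loss. It is not the authors' code. `instructor_stub` is a hypothetical stand-in for the LLM instructor; the real method prompts an LLM with the task description and the recorded trajectory. The loss, learning rate and jump sizes are arbitrary assumptions.

```python
from typing import List


def loss(x: float) -> float:
    """Toy non-convex objective standing in for a prompt-tuning loss."""
    return x**4 - 3 * x**2 + x


def grad(x: float) -> float:
    """Analytic derivative of the toy loss."""
    return 4 * x**3 - 6 * x + 1


def gradient_phase(x: float, steps: int = 200, lr: float = 0.01) -> List[float]:
    """The 'diligent doer': plain gradient descent that records its trajectory."""
    trajectory = [x]
    for _ in range(steps):
        x -= lr * grad(x)
        trajectory.append(x)
    return trajectory


def instructor_stub(trajectory: List[float]) -> float:
    """Hypothetical stand-in for the LLM 'instructor'.

    The real method prompts an LLM with the task description and the recorded
    trajectory. Here we simply try a few coarse jumps around the best point seen
    so far and return the lowest-loss candidate as the next restart point.
    """
    best = min(trajectory, key=loss)
    candidates = [best + step for step in (-3, -2, -1, 0, 1, 2, 3)]
    return min(candidates, key=loss)


def collaborative_optimize(x0: float, rounds: int = 3) -> float:
    """Interleave local gradient phases with instructor-proposed restarts."""
    x = x0
    for _ in range(rounds):
        trajectory = gradient_phase(x)   # rigorous local updates
        x = instructor_stub(trajectory)  # high-level restart point
    return min(gradient_phase(x), key=loss)  # final local refinement


if __name__ == "__main__":
    gd_only = min(gradient_phase(1.5), key=loss)
    combined = collaborative_optimize(1.5)
    print(f"gradient only: x={gd_only:.3f}, loss={loss(gd_only):.3f}")
    print(f"interleaved:   x={combined:.3f}, loss={loss(combined):.3f}")
```

Starting at x = 1.5, plain gradient descent settles in the shallower local minimum near 1.13. The interleaved loop uses a restart point to reach the deeper minimum near -1.30, which is the kind of escape the restart mechanism is meant to provide.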

Why?: This research addresses the high computational costs involved in multimodal LLMs by proposing a more efficient method for integrating visual information.

How?: The researchers introduced VLoRA, a new approach that aligns visual information in parameter space rather than in the conventional input space. A vision encoder extracted visual features from images, which were then converted into perceptual weights. These perceptual weights were subsequently merged with the LLM's weights. This eliminated the need for visual tokens in the input sequence, shortening it and improving computational efficiency. The primary innovation was the perceptual weights generator, which transformed visual features into low-rank perceptual weights structured similarly to LoRA (Low-Rank Adaptation). The method was validated experimentally on various multimodal LLM benchmarks.
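Here is a rough sketch of the parameter-space idea, in plain Python so it runs without dependencies. It rests on two assumptions. First, `PerceptualWeightsGenerator` is a hypothetical, untrained pair of linear projections; VLoRA's actual generator is a learned network. Second, it targets a single toy weight matrix rather than every layer of a real LLM. The point is the data flow: image features become LoRA-style factors B and A, and their product is added to a frozen weight, so the text sequence carries no visual tokens.

```python
import random
from typing import List

Matrix = List[List[float]]


def matvec(m: Matrix, v: List[float]) -> List[float]:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def reshape(flat: List[float], rows: int, cols: int) -> Matrix:
    return [flat[r * cols:(r + 1) * cols] for r in range(rows)]


class PerceptualWeightsGenerator:
    """Hypothetical generator: one visual feature vector -> low-rank update B @ A."""

    def __init__(self, d_visual: int, d_in: int, d_out: int, rank: int, seed: int = 0):
        rng = random.Random(seed)
        self.d_in, self.d_out, self.rank = d_in, d_out, rank
        # Two linear projections (untrained here) produce the LoRA-style factors.
        self.proj_a = [[rng.gauss(0.0, 0.1) for _ in range(d_visual)] for _ in range(rank * d_in)]
        self.proj_b = [[rng.gauss(0.0, 0.1) for _ in range(d_visual)] for _ in range(d_out * rank)]

    def __call__(self, visual: List[float]) -> Matrix:
        a = reshape(matvec(self.proj_a, visual), self.rank, self.d_in)   # rank x d_in
        b = reshape(matvec(self.proj_b, visual), self.d_out, self.rank)  # d_out x rank
        return matmul(b, a)  # d_out x d_in, with rank at most `rank`


def merge(w: Matrix, delta: Matrix) -> Matrix:
    """W' = W + delta: the image now lives in the weights, not in the token sequence."""
    return [[x + y for x, y in zip(rw, rd)] for rw, rd in zip(w, delta)]


gen = PerceptualWeightsGenerator(d_visual=6, d_in=4, d_out=4, rank=2)
image_features = [0.5, -1.0, 0.25, 0.0, 1.0, 0.3]  # would come from a vision encoder
w_llm = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]  # one frozen LLM weight
w_perceptual = merge(w_llm, gen(image_features))
hidden = matvec(w_perceptual, [1.0, 2.0, 3.0, 4.0])  # a text-only hidden state passes through
```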

Why?: Improving the efficiency of speculative decoding in low-memory GPUs is crucial for enabling faster LLM inference on less powerful hardware.

How?: The researchers proposed an approach called Skippy Simultaneous Speculative Decoding (S3D), which combines simultaneous multi-token decoding with mid-layer skipping. The method aims to minimize memory overhead while still providing substantial speedup for LLM inference. Multi-token decoding generates multiple tokens in one step, speeding up decoding, while mid-layer skipping reduces the computational load by skipping unnecessary layers during inference. The researchers designed S3D to require minimal architectural changes and little new training data. They used the memory efficiency of S3D to build a smaller yet high-performing speculative decoding (SD) model based on the Phi-3 architecture. They compared it against recent open-source SD systems, focusing in particular on the quantized EAGLE model and analyzing speed and memory usage in half-precision mode.

Results: When compared against recent effective open-source SD systems, the proposed method has achieved one of the top performance-memory ratios while requiring minimal architecture changes and training data. The smaller yet more effective SD model based on Phi-3 was found to be 1.4 to 2 times faster than the quantized EAGLE model and operates in half-precision while using less VRAM.
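The accept/reject logic that any greedy speculative decoder relies on can be sketched with toy integer "layers". The sketch makes three assumptions:

- The draft is the same model with its middle layers skipped, in the spirit of S3D's mid-layer skipping.
- `LAYERS` and `next_token` are hypothetical stand-ins for a real model.
- Drafts are produced one token at a time for readability, whereas S3D predicts several draft tokens simultaneously.

```python
from typing import Callable, FrozenSet, List, Tuple

VOCAB = 11

# Toy "layers": integer transforms of a hidden state (stand-ins for transformer blocks).
LAYERS: List[Callable[[int], int]] = [
    lambda h: h * 3 + 1,
    lambda h: h + 7,
    lambda h: h * 2,
    lambda h: h + 5,
]


def next_token(context: List[int], skip: FrozenSet[int] = frozenset()) -> int:
    """Greedy next token; layers whose index is in `skip` are bypassed."""
    h = sum(context) + len(context)
    for i, layer in enumerate(LAYERS):
        if i not in skip:
            h = layer(h)
    return h % VOCAB


def greedy_decode(prompt: List[int], n: int) -> List[int]:
    out = list(prompt)
    for _ in range(n):
        out.append(next_token(out))
    return out[len(prompt):]


def speculative_decode(prompt: List[int], n: int, k: int = 4,
                       skip: FrozenSet[int] = frozenset({1, 2})) -> Tuple[List[int], int]:
    out, rounds = list(prompt), 0
    while len(out) - len(prompt) < n:
        # Draft k tokens cheaply with the mid layers skipped.
        draft: List[int] = []
        for _ in range(k):
            draft.append(next_token(out + draft, skip))
        # Verify with the full model (a single batched pass in a real system).
        rounds += 1
        accepted: List[int] = []
        for tok in draft:
            expected = next_token(out + accepted)
            accepted.append(expected)
            if tok != expected:
                break  # first mismatch: keep the full model's token, drop the rest
        else:
            accepted.append(next_token(out + accepted))  # all accepted: one bonus token
        out.extend(accepted)
    return out[len(prompt):len(prompt) + n], rounds
```

Every kept token is the full model's own greedy choice, so the output matches plain greedy decoding exactly. The savings come from verifying several drafted tokens per round.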

Why?: The research addresses the critical dependency of LLM outputs on prompt design, enhancing their effectiveness without human intervention or task-specific training.

How?: The researchers proposed a novel approach named Hierarchical Multi-Agent Workflow (HMAW) that leverages multiple LLMs in a hierarchical structure to autonomously design and optimize prompts. HMAW divides the process into two main steps. First, one or more LLMs collaboratively construct an optimal prompt by refining instructions and wording through multiple iterations. Second, the final prompt generated by the hierarchy is used by another LLM to answer the user's query. This method is designed to be task-agnostic, meaning it does not require tuning to specific tasks nor does it involve pre-training or task-specific data. The efficacy of HMAW was evaluated through both quantitative and qualitative experiments across various benchmarks, demonstrating its ability to boost LLM performance without significant overhead or complexity.
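Here is a minimal sketch of the two-step flow, assuming any text-in/text-out model callable. The role names (`"CEO"`, `"manager"`), the wording of the rewrite instruction and both helper functions are hypothetical. HMAW's actual prompts and hierarchy may differ.

```python
from typing import Callable, List

LLM = Callable[[str], str]  # any text-in, text-out model client


def design_prompt(query: str, levels: List[str], designer: LLM) -> str:
    """Each level rewrites the guidance it receives into clearer guidance for the level below."""
    guidance = f"User query: {query}"
    for role in levels:
        guidance = designer(
            f"You are the {role}. Rewrite the guidance below into clearer, "
            f"more specific instructions for the next level.\n{guidance}"
        )
    return guidance


def hmaw_style_answer(query: str, levels: List[str], designer: LLM, worker: LLM) -> str:
    # Step 1: the hierarchy designs the prompt. Step 2: another model answers with it.
    system_prompt = design_prompt(query, levels, designer)
    return worker(f"{system_prompt}\n\nQuery: {query}")


# Usage with stub models (replace with real LLM calls).
calls: List[str] = []


def stub_designer(prompt: str) -> str:
    calls.append(prompt)
    return "Guidance: " + prompt.splitlines()[-1]


answer = hmaw_style_answer("What is 2+2?", ["CEO", "manager"], stub_designer, worker=lambda p: p)
```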

Why?: This research addresses the challenge of adapting English-focused LLMs to other languages while maintaining their conversational and content filtering abilities.

How?: The researchers propose a method called Instruction Continual Pre-training (InsCP), which incorporates instruction tags into the continual pre-training (CP) process. This approach aims to prevent the degradation of conversational abilities and content filtering effectiveness when transferring LLMs to new languages. The process starts by collecting 0.1 billion tokens of high-quality instruction-following data in the target language. These tokens contain specific instruction tags that guide the model in maintaining its conversational proficiency. The InsCP method then integrates these tokens into a modified CP regimen, ensuring that language adaptation is achieved without compromising other essential functionalities of the LLM. The efficacy of InsCP is validated through empirical evaluations on benchmarks for language alignment, reliability, and knowledge retention, ensuring that the model performs comparably to its original version while effectively responding in the target language.

Results: Empirical evaluations confirm that InsCP retains conversational and Reinforcement Learning from Human Feedback (RLHF) abilities. The method requires only 0.1 billion tokens of high-quality instruction-following data, thereby reducing resource consumption.
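Here is a hypothetical Python sketch of what "instruction tags in continual pre-training" can look like at the data level. It serializes target-language dialogues with the chat tags kept in the raw text and fills a token budget. The tag strings, helper names and whitespace token count (a stand-in for the model's tokenizer) are assumptions, not InsCP's published format.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical tags; in practice, reuse the original chat model's own template tokens.
TAGS = {"system": "<|system|>", "user": "<|user|>", "assistant": "<|assistant|>", "end": "<|end|>"}

Conversation = List[Dict[str, str]]


def to_inscp_text(conversation: Conversation) -> str:
    """Serialize a target-language dialogue, keeping the instruction tags in the raw text."""
    return "\n".join(f"{TAGS[t['role']]}\n{t['content']}{TAGS['end']}" for t in conversation)


def build_corpus(conversations: List[Conversation], token_budget: int,
                 count_tokens: Callable[[str], int] = lambda s: len(s.split())
                 ) -> Tuple[List[str], int]:
    """Collect tagged documents until the token budget is reached."""
    corpus, used = [], 0
    for conv in conversations:
        text = to_inscp_text(conv)
        n = count_tokens(text)
        if used + n > token_budget:
            break
        corpus.append(text)
        used += n
    return corpus, used


conv = [{"role": "user", "content": "Hola"},
        {"role": "assistant", "content": "¡Hola! ¿En qué puedo ayudarte?"}]
docs, used = build_corpus([conv, conv], token_budget=10)
```

For a 0.1-billion-token budget, `token_budget` would be `100_000_000`.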

Why?: The research investigates how to improve the translation quality of large language models (LLMs) while preserving their other desirable abilities.

How?: The research involves an extensive evaluation of the translation capabilities of the LLaMA and Falcon model families, which range from 7 billion to 65 billion parameters. The authors fine-tuned these models on parallel data and observed how this affected various desirable LLM behaviors. These included steerability, inherent document-level translation and the ability to produce less literal translations. They assessed which of these abilities degraded or improved after fine-tuning. To counter the degradation of certain abilities, the researchers added monolingual data to the fine-tuning mix, aiming to strike a balance between improving translation quality and retaining other beneficial LLM properties.

Results: The study found that while fine-tuning improved general translation quality, it led to a decline in formality steering, technical translation through few-shot examples, and document-level translation abilities. However, models produced less literal translations. By including monolingual data in the fine-tuning process, these abilities were better preserved while still enhancing overall translation quality.
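The monolingual-data remedy amounts to changing the fine-tuning mixture. Here is a sketch, assuming a simple `Translate:` prompt template and a hypothetical `mono_fraction` knob. The study's actual templates and ratios are not given here.

```python
import random
from typing import List, Tuple


def build_mixture(parallel: List[Tuple[str, str]], monolingual: List[str],
                  mono_fraction: float, seed: int = 0) -> List[str]:
    """Mix translation pairs with plain monolingual text.

    `mono_fraction` (hypothetical knob) is the desired share of monolingual
    examples in the final mixture.
    """
    if not 0.0 <= mono_fraction < 1.0:
        raise ValueError("mono_fraction must be in [0, 1)")
    rng = random.Random(seed)
    n_mono = round(len(parallel) * mono_fraction / (1.0 - mono_fraction))
    examples = [f"Translate:\n{src}\n{tgt}" for src, tgt in parallel]
    examples += rng.sample(monolingual, min(n_mono, len(monolingual)))
    rng.shuffle(examples)
    return examples


pairs = [(f"src {i}", f"tgt {i}") for i in range(6)]
mono = [f"plain sentence {i}" for i in range(10)]
mix = build_mixture(pairs, mono, mono_fraction=0.25)  # 6 pairs + 2 monolingual lines
```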

Why?: The research aims to improve Retrieval Augmented Generation (RAG) by addressing the limitations of relying solely on similarity between queries and documents, which can lead to performance degradation in knowledge-intensive tasks.

How?: The research introduces MetRag, a multi-layered thought framework that enhances Retrieval Augmented Generation. MetRag incorporates three layers of thoughts: similarity-oriented, utility-oriented, and compactness-oriented thoughts.

1. Similarity-Oriented Thought: This conventional approach retrieves documents based on similarity metrics to the given query.

2. Utility-Oriented Thought: A small-scale utility model, supervised by an LLM, evaluates the usefulness of the retrieved documents, adding a layer of relevance beyond mere similarity.

3. Compactness-Oriented Thought: To deal with the often large set of retrieved documents, an LLM is utilized as a task-adaptive summarizer. This step aims to capture commonalities and characteristics within the document set, making the information more compact and manageable.

These multi-layered thoughts are then combined in the final stage, where an LLM generates the knowledge-augmented response. This comprehensive approach aims to tackle the shortcomings of existing RAG methods by integrating not just similarity and relevance, but also conciseness and coherence into the generation process.
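The three layers map naturally onto a retrieve, re-rank, condense, generate pipeline. In this hypothetical sketch, bag-of-words cosine stands in for the similarity retriever. The utility model, summarizer and generator are stub callables you would replace with a trained utility model and LLM calls. None of the names are MetRag's API.

```python
import math
from collections import Counter
from typing import Callable, List


def cosine(a: str, b: str) -> float:
    """Bag-of-words cosine similarity (stand-in for a dense retriever)."""
    ca, cb = Counter(a.lower().split()), Counter(b.lower().split())
    dot = sum(ca[w] * cb[w] for w in ca)
    norm = math.sqrt(sum(v * v for v in ca.values())) * math.sqrt(sum(v * v for v in cb.values()))
    return dot / norm if norm else 0.0


def layered_rag(query: str, docs: List[str],
                utility: Callable[[str, str], float],
                summarize: Callable[[str, List[str]], str],
                generate: Callable[[str, str], str],
                k: int = 3, keep: int = 2) -> str:
    # 1) Similarity-oriented: top-k documents by similarity to the query.
    candidates = sorted(docs, key=lambda d: cosine(query, d), reverse=True)[:k]
    # 2) Utility-oriented: re-rank by a utility model and keep the most useful ones.
    useful = sorted(candidates, key=lambda d: utility(query, d), reverse=True)[:keep]
    # 3) Compactness-oriented: condense the kept documents into one task-aware summary.
    knowledge = summarize(query, useful)
    # Final stage: generate the answer from the compact knowledge.
    return generate(query, knowledge)


docs = ["paris is the capital of france",
        "the capital of france has many museums",
        "france capital trivia quiz",
        "bananas are yellow"]
answer = layered_rag(
    "what is the capital of france", docs,
    utility=lambda q, d: 1.0 if "paris" in d else 0.0,  # stub utility model
    summarize=lambda q, ds: " | ".join(ds),               # stub LLM summarizer
    generate=lambda q, s: f"Based on: {s}",               # stub LLM generator
)
```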

Why?: The research aims to enhance the efficiency of LLM-based applications by addressing limitations in current public LLM services, which lead to sub-optimal end-to-end performance.

How?: The research introduces Parrot, a service system designed to improve the end-to-end performance of LLM-based applications. Parrot employs a novel concept called 'Semantic Variable,' which serves as a unified abstraction to expose application-level knowledge to public LLM services. Semantic Variables annotate input/output variables in the prompts of requests and create data pipelines when linking multiple LLM requests. This allows for a more integrated workflow and helps uncover correlations across multiple LLM requests through data flow analysis. By performing such an analysis, Parrot can identify optimization opportunities that would not be apparent when handling individual LLM requests separately.

Results: Extensive evaluations demonstrate that Parrot can achieve up to an order-of-magnitude improvement for popular and practical use cases of LLM applications.
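The data-flow idea behind Semantic Variables can be sketched as a dependency graph over requests. This Python sketch is hypothetical and is not Parrot's API. Each request names its input variables as `{placeholders}` in its prompt template. A scheduler then groups requests into stages whose members share no dependency. That grouping is one example of an optimization that only becomes visible across requests.

```python
import string
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass
class Request:
    """One LLM call whose prompt names its input semantic variables as {placeholders}."""
    name: str
    template: str
    output: str  # semantic variable this request fills in

    @property
    def inputs(self) -> List[str]:
        return [var for _, var, _, _ in string.Formatter().parse(self.template) if var]


def schedule(requests: List[Request]) -> List[List[str]]:
    """Group requests into stages; requests in one stage have no data dependency."""
    producer = {r.output: r.name for r in requests}
    deps: Dict[str, Set[str]] = {
        r.name: {producer[v] for v in r.inputs if v in producer} for r in requests
    }
    stages: List[List[str]] = []
    done: Set[str] = set()
    while len(done) < len(requests):
        ready = sorted(n for n, d in deps.items() if n not in done and d <= done)
        if not ready:
            raise ValueError("cycle in the semantic-variable graph")
        stages.append(ready)
        done.update(ready)
    return stages


pipeline = [
    Request("summarize_1", "Summarize: {chunk_1}", output="summary_1"),
    Request("summarize_2", "Summarize: {chunk_2}", output="summary_2"),
    Request("combine", "Merge {summary_1} and {summary_2} into one summary.", output="final"),
]
stages = schedule(pipeline)  # the two summaries can run together, then the merge
```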

Why?: The research is important as it aims to create a structured ontology that enhances LLMs' ability to handle real-world generative AI tasks, especially for applications like personal AI assistants.

How?: To create the KNOW ontology, the researchers focused on a pragmatic approach that started with established human universals, specifically spacetime (places and events) and social structures (people, groups, organizations). The ontology is designed to capture the everyday knowledge that LLMs can use to augment various applications. The team compared and contrasted KNOW with previous projects like Schema.org and Cyc, noting how LLMs already internalize much of the commonsense knowledge that older projects painstakingly gathered over decades. They emphasize simplicity and usability by making software libraries available in 12 popular programming languages. These libraries enable developers to leverage the ontology concepts directly in their software engineering efforts, promoting better AI interoperability and enhancing the developer experience. Key criteria for defining concepts in the ontology include universality and utility, ensuring that only the most pragmatically valuable concepts are included.
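Here is a hypothetical Python sketch that gives a feel for ontology concepts as ordinary types in application code. It covers the human universals named above: places, events, people and groups. The class and field names are our own illustration, not the schema or API of the KNOW libraries.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import List, Optional


@dataclass
class Place:
    name: str


@dataclass
class Person:
    name: str


@dataclass
class Group:
    name: str
    members: List[Person] = field(default_factory=list)


@dataclass
class Event:
    name: str
    start: datetime
    place: Optional[Place] = None
    participants: List[Person] = field(default_factory=list)


# A personal-assistant style fact: who is meeting where, and when.
alice, bob = Person("Alice"), Person("Bob")
book_club = Group("Book club", members=[alice, bob])
meeting = Event("Monthly meetup", datetime(2024, 6, 1, 18, 0),
                place=Place("Central Library"), participants=book_club.members)
```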

Why?: Understanding how effectively in-context learning (ICL) can align long-context large language models (LLMs) to follow instructions is important for improving their performance on real-world tasks.

How?: The researchers began by evaluating the performance of LLMs utilizing URIAL, a method that leverages three in-context examples to align base LLMs, and compared its performance to instruction fine-tuning on standard benchmarks such as MT-Bench and AlpacaEval 2.0 (LC). They observed that adding more ICL demonstrations did not systematically improve instruction following performance for long-context LLMs. To improve this, they introduced a greedy selection approach for ICL examples, which aimed at optimizing the choice of examples to enhance performance. Additionally, the researchers conducted ablation studies to better understand why there was a performance gap between ICL alignment and instruction fine-tuning, specifically looking into aspects of ICL that are unique to the instruction tuning context.

Results: While effective, ICL alignment with URIAL underperforms compared to instruction fine-tuning, especially with more capable base LMs. Adding more ICL demonstrations does not systematically improve instruction following performance. A greedy selection approach for ICL examples improves performance but does not bridge the gap to instruction fine-tuning. Ablation studies reveal specific reasons behind the remaining gap and aspects of ICL unique to the instruction tuning setting.
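Greedy selection of demonstrations is forward selection against some validation score. Here is a sketch, assuming a hypothetical `score` callable; in practice it might be a judge-based benchmark score for the prompt built from the chosen examples. The exact selection criterion in the study may differ.

```python
from typing import Callable, List, Sequence


def greedy_select(pool: Sequence[str], score: Callable[[List[str]], float],
                  max_examples: int) -> List[str]:
    """Forward selection: repeatedly add the demonstration that most improves `score`."""
    chosen: List[str] = []
    best = score(chosen)
    while len(chosen) < max_examples:
        remaining = [ex for ex in pool if ex not in chosen]
        if not remaining:
            break
        top_score, top_ex = max(((score(chosen + [ex]), ex) for ex in remaining),
                                key=lambda pair: pair[0])
        if top_score <= best:
            break  # no remaining example helps; stop early
        chosen.append(top_ex)
        best = top_score
    return chosen


# Toy score: each demonstration has a fixed (hypothetical) effect on a validation metric.
effects = {"demo_a": 3.0, "demo_b": 2.0, "demo_c": -1.0}
selected = greedy_select(list(effects), lambda demos: sum(effects[d] for d in demos), max_examples=3)
```

The early stop mirrors the observation above: adding more demonstrations is not automatically better, so selection halts once no candidate improves the score.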

Why?: The research addresses how to improve retrieval-augmented generation (RAG) in LLMs without compromising their general generation abilities.

How?: The research proposes learning scalable and pluggable virtual tokens tailored for retrieval-augmented generation. Instead of fine-tuning the entire LLM, which can degrade its general capabilities, the approach optimizes only the embeddings of these virtual tokens. As a result, the LLM can benefit from up-to-date, factual retrieved information without any change to its original parameters. The method uses multiple training strategies so that the virtual tokens scale and integrate seamlessly with various tasks, preserving the general flexibility and functionality of the original model while enhancing its retrieval-augmented capabilities.
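The core constraint is training only the virtual-token embeddings while the model stays frozen, and a toy model can show it. Assumptions: a single virtual token, a frozen mean-pooling linear "model" and a squared-error objective stand in for a real LLM and its language-modeling loss. All names are hypothetical.

```python
import copy
from typing import Dict, List, Tuple

Vector = List[float]
Example = Tuple[List[str], float]  # (input tokens, target score)


def forward(virtual: Vector, tokens: List[str], table: Dict[str, Vector], w: Vector) -> float:
    """Frozen toy 'LLM': mean-pool [virtual token] + token embeddings, then a fixed linear head."""
    vecs = [virtual] + [table[t] for t in tokens]
    pooled = [sum(v[d] for v in vecs) / len(vecs) for d in range(len(w))]
    return sum(wi * pi for wi, pi in zip(w, pooled))


def mse(virtual: Vector, data: List[Example], table: Dict[str, Vector], w: Vector) -> float:
    return sum((forward(virtual, toks, table, w) - y) ** 2 for toks, y in data) / len(data)


def train_virtual_token(data: List[Example], table: Dict[str, Vector], w: Vector,
                        steps: int = 200, lr: float = 0.5) -> Vector:
    """Gradient descent on the virtual-token embedding only; `table` and `w` never change."""
    virtual = [0.0] * len(w)
    for _ in range(steps):
        grad = [0.0] * len(w)
        for toks, y in data:
            err = forward(virtual, toks, table, w) - y
            n = len(toks) + 1  # sequence length including the virtual token
            for d in range(len(w)):
                grad[d] += 2 * err * w[d] / n / len(data)
        virtual = [v - lr * g for v, g in zip(virtual, grad)]
    return virtual


table = {"doc": [1.0, 0.0], "query": [0.0, 1.0]}  # frozen token embeddings
w = [0.5, -0.5]                                   # frozen output head
data = [(["doc", "query"], 1.0), (["doc"], 0.8)]
frozen_before = copy.deepcopy((table, w))
virtual = train_virtual_token(data, table, w)
```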

Let’s make LLMs safe!!

Why?: This research is significant because it uncovers new security vulnerabilities in Retrieval Augmented Generation (RAG) systems, which can be exploited to compromise the integrity and safety of chatbot applications that rely on LLMs.

How?: The researchers designed a framework called Phantom, which executes a two-step attack on RAG systems.

1. The researchers crafted a poisoned document designed to be retrieved by the RAG system. This document contained a specific sequence of words, acting as a backdoor trigger, that ensured it would rank among the top-k results for certain queries.
2. Once retrieved, the document's embedded adversarial string could trigger various harmful behaviors in the LLM generator. These included denial of service, reputation damage, privacy violations and harmful actions by the chatbot.

The attacks were validated on multiple LLM architectures, including Gemma, Vicuna and Llama, demonstrating the broad applicability and effectiveness of the attack across different systems.

Results: The research successfully demonstrated the Phantom attack framework across multiple LLM architectures, validating its effectiveness and broad applicability. The attacks were able to compromise the RAG system by retrieving malicious documents that triggered adversarial behaviors in the LLM generator, confirming the new security risks posed by RAG systems.

Why?: The research addresses the vulnerability of Large Language Models (LLMs) to specific prompts designed to bypass moderation guardrails, which can lead to harmful behaviors. Current benchmarks often overlook these moderation triggers, complicating the evaluation of jailbreak strategies.

How?: The research proposes JAMBench as a new benchmark to evaluate the moderation guardrails of LLMs by including 160 manually crafted instructions across four risk categories with varying levels of severity. The authors also introduce a new jailbreak method called JAM (Jailbreak Against Moderation). The JAM method involves using tailored jailbreak prefixes to bypass input-level filters and employing a fine-tuned shadow model that is functionally similar to the guardrail model. This shadow model generates cipher characters designed to circumvent output-level filters. The study conducts extensive experiments on four different LLMs to evaluate the efficacy of JAM against existing guardrails.

Results: In extensive experiments on four different LLMs, JAM achieves a higher jailbreak success rate (×19.88) and a lower filtered-out rate (×1/6) than baseline methods.

Creative ways to use LLMs!!

ParSEL: Parameterized Shape Editing with Language - Focuses on enabling precise 3D shape edits through natural language instructions, democratizing 3D content creation. The system effectively translates language inputs into geometric editing tasks, outperforming traditional methods in control and precision.

Large Language Models Can Self-Improve At Web Agent Tasks - Examines the self-improvement capabilities of LLMs in navigating and performing complex web agent tasks, using synthetic training data for fine-tuning. The method shows significant enhancements in performance and robustness.

PostDoc: Generating Poster from a Long Multimodal Document Using Deep Submodular Optimization - Develops a method to automatically transform long multimodal documents into concise and visually appealing posters. Demonstrates significant improvements in content relevance and design aesthetics, enhancing educational and professional presentations.

GNN-RAG: Graph Neural Retrieval for Large Language Model Reasoning - Combines Graph Neural Networks with LLMs to improve question-answering over Knowledge Graphs. Shows state-of-the-art performance on benchmark tests, significantly enhancing factual question-answering capabilities.

LLMs in robotics!!

Nadine: An LLM-driven Intelligent Social Robot with Affective Capabilities and Human-like Memory - Enhances human-robot interactions by integrating advanced LLMs into the Nadine social robot platform, giving it human-like memory and emotional appraisal capabilities. The robot system uses multimodal inputs and episodic memory to simulate emotional states and provide natural interaction experiences.

Robo-Instruct: Simulator-Augmented Instruction Alignment For Finetuning CodeLLMs - Introduces Robo-Instruct, which enhances the performance of open-weight LLMs in robot programming by integrating simulator-based verification and instruction alignment. This method successfully brings smaller models up to or beyond the capability of larger proprietary models like GPT-3.5-Turbo.

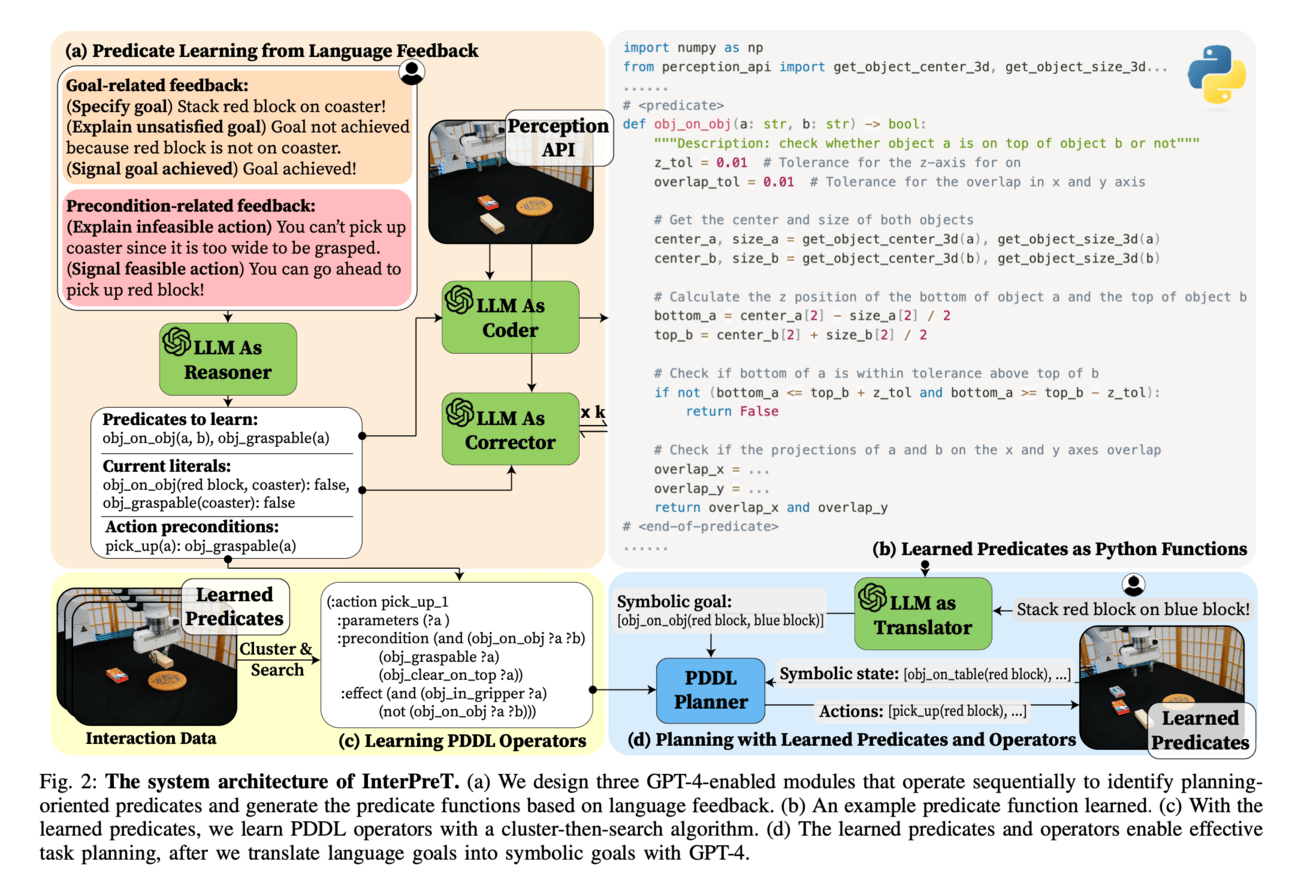

InterPreT: Interactive Predicate Learning from Language Feedback for Generalizable Task Planning - Develops a framework that teaches robots to perform long-horizon planning by learning symbolic predicates from human language feedback. The system translates learned predicates and operators into PDDL for robust task planning in diverse settings, achieving high success rates in real-world robot manipulation scenarios.
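Here is a hypothetical sketch of what the final translation step can produce: it renders a learned operator as a PDDL action. The `Operator` structure and the `pick-up` predicates are illustrative assumptions. In InterPreT the predicates themselves are learned from language feedback, which this snippet does not cover.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Operator:
    name: str
    parameters: List[str]
    preconditions: List[str]
    add_effects: List[str]
    del_effects: List[str]


def to_pddl(op: Operator) -> str:
    """Render an operator as an untyped PDDL :action block."""
    params = " ".join(f"?{p}" for p in op.parameters)
    pre = " ".join(f"({p})" for p in op.preconditions)
    effects = [f"({e})" for e in op.add_effects] + [f"(not ({e}))" for e in op.del_effects]
    return (f"(:action {op.name}\n"
            f"  :parameters ({params})\n"
            f"  :precondition (and {pre})\n"
            f"  :effect (and {' '.join(effects)}))")


pick_up = Operator(
    name="pick-up",
    parameters=["obj"],
    preconditions=["clear ?obj", "hand-empty"],
    add_effects=["holding ?obj"],
    del_effects=["clear ?obj", "hand-empty"],
)
print(to_pddl(pick_up))
```

A planner can then search over actions like this one, which is what lets the learned predicates support planning in new settings.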