One of several demonstrations the authors provide to show improvement over previous techniques. More demos are available on the site linked below.

🔗Paper demo: https://jamesyjl.github.io/DreamReward/

🤔Problem?:

The research paper addresses a gap in current text-to-3D methods: they often generate 3D results that do not align well with human preferences. Despite recent success in generating 3D content from text prompts, the results frequently fail to match what users actually prefer and intend.

💻Proposed solution:

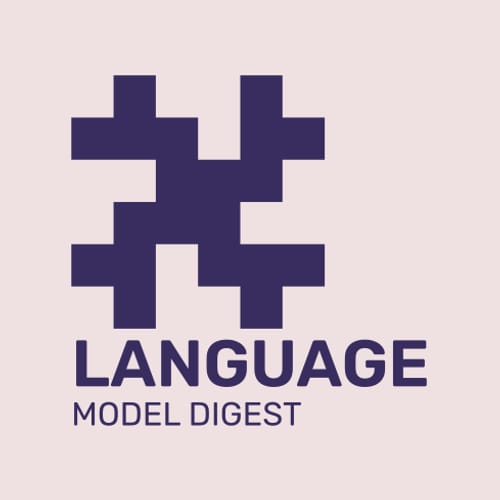

The paper proposes a comprehensive framework called DreamReward, which focuses on learning and improving text-to-3D models based on human preference feedback. First, the authors collect a significant dataset of expert comparisons to understand human preferences better. Then, they introduce Reward3D, a general-purpose text-to-3D human preference reward model that effectively encodes these preferences. This model is then used to develop DreamFL, a direct tuning algorithm that optimizes multi-view diffusion models using a redefined scorer. Grounded in theoretical analysis and supported by extensive experimental comparisons, DreamReward aims to generate high-fidelity, 3D-consistent results that closely align with human intentions.

📊Results:

The research paper highlights significant boosts in prompt alignment with human intention through the implementation of DreamReward. However, specific performance improvement metrics are not mentioned. Nonetheless, the paper demonstrates the potential of learning from human feedback to enhance text-to-3D models, paving the way for more user-friendly and intuitive 3D content creation processes.

🔗Code/data/weights: https://mathverse-cuhk.github.io

🤔Problem?:

The research paper addresses the insufficient evaluation and understanding of Multi-modal Large Language Models (MLLMs) in visual math problem-solving.

💻Proposed solution:

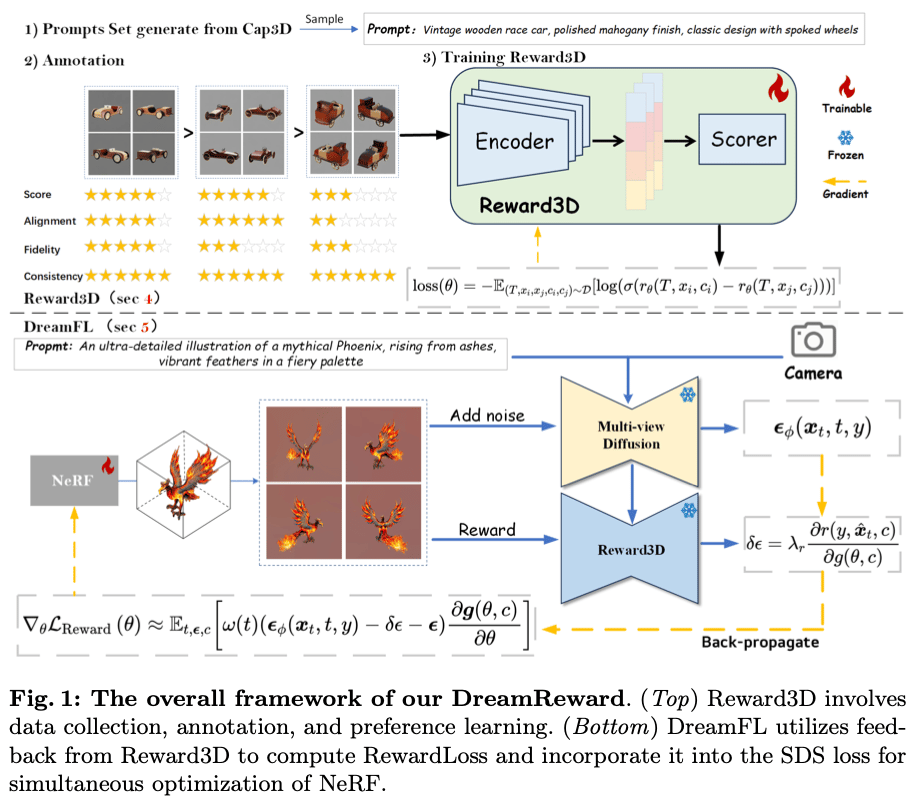

To solve this problem, the research paper proposes the creation of a new benchmark called MathVerse. This benchmark includes 2,612 high-quality math problems with diagrams, each transformed into six versions with varying degrees of information content in multi-modality. This allows for a comprehensive evaluation of how well MLLMs can truly understand visual diagrams for mathematical reasoning. Additionally, the paper introduces a Chain-of-Thought (CoT) evaluation strategy, which uses GPT-4(V) to extract reasoning steps from the MLLMs' output answers and score them for detailed error analysis. This approach provides insights into the MLLMs' intermediate CoT reasoning quality.

📊Results:

The research paper does not mention any specific performance improvements achieved, as its focus is on providing a new benchmark and evaluation strategy rather than developing a new MLLM. However, the MathVerse benchmark and CoT evaluation strategy may assist in guiding future development and improvements of MLLMs for visual math problem-solving.

🤔Problem?:

The research paper addresses the problem of maintaining long-term information in multi-modal LLMs, specifically in the context of long-form video understanding. While these models can handle long context lengths, their performance gradually declines as the input length increases.

💻Proposed solution:

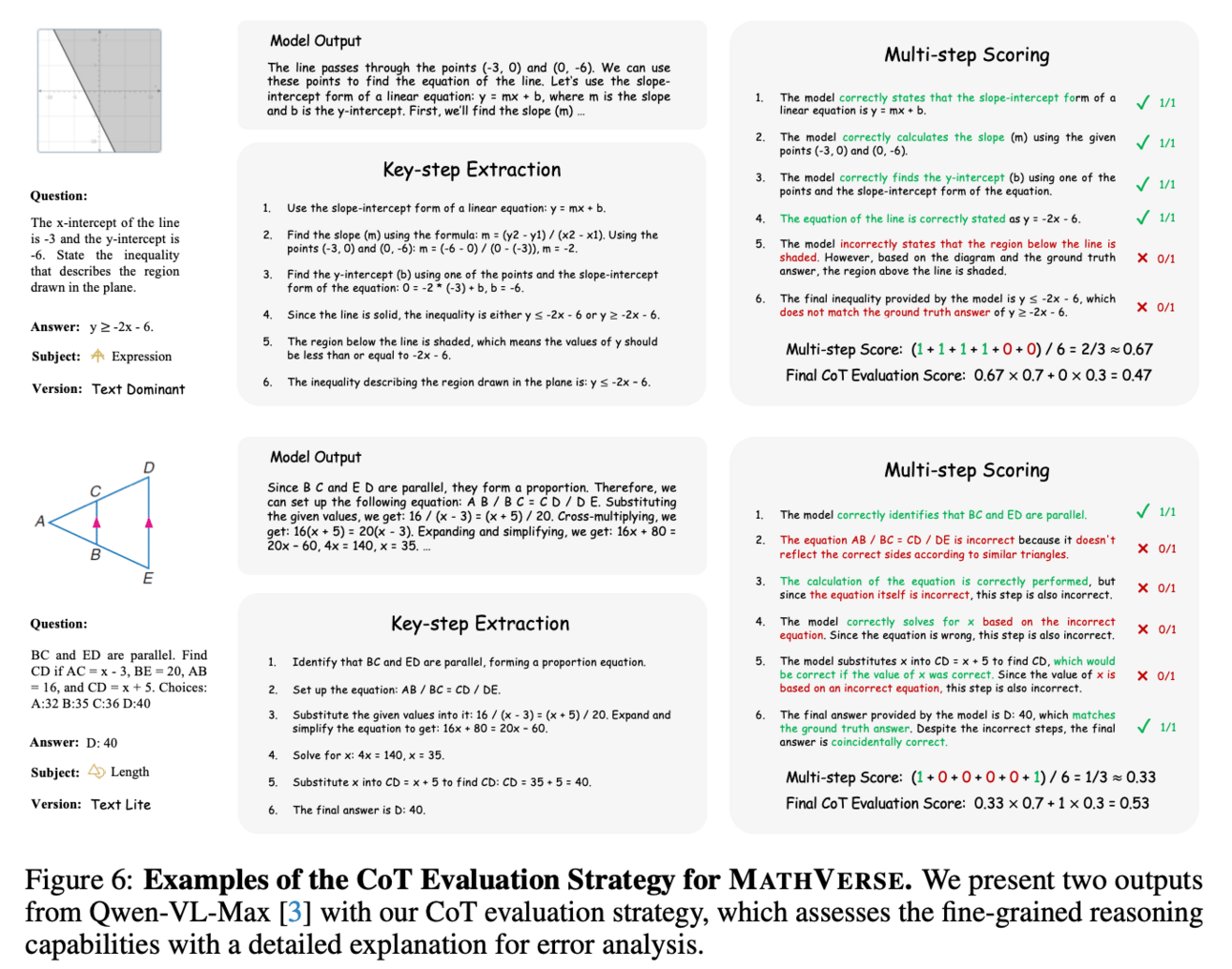

The research paper proposes a solution in the form of a Language Repository (LangRepo) for LLMs. This repository maintains concise and structured information in an interpretable (all-textual) representation. It is updated iteratively based on multi-scale video chunks and includes write and read operations that aim to reduce redundancies in text and extract information at various temporal scales.
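The write and read operations can be pictured with a toy sketch. It assumes each video chunk has already been turned into text captions; the class name `LanguageRepository`, the exact-match deduplication, and the `window` parameter are hypothetical simplifications for illustration, not the paper's implementation (which is only summarized above).

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class LanguageRepository:
    """Toy all-textual repository: caption text -> chunk indices where it occurred."""
    entries: Dict[str, List[int]] = field(default_factory=dict)

    def write(self, chunk_index: int, captions: List[str]) -> None:
        # Reduce redundancy: captions that match after normalisation are merged
        # into one entry that records every chunk in which they appeared.
        for caption in captions:
            key = " ".join(caption.lower().split())
            self.entries.setdefault(key, []).append(chunk_index)

    def read(self, window: int) -> List[str]:
        # Extract information at a chosen temporal scale: entries are grouped by
        # the chunk in which they first appeared, `window` chunks per group.
        buckets: Dict[int, List[str]] = {}
        for text, chunks in self.entries.items():
            buckets.setdefault(chunks[0] // window, []).append(f"{text} (x{len(chunks)})")
        return ["; ".join(buckets[b]) for b in sorted(buckets)]


repo = LanguageRepository()
repo.write(0, ["A man opens a door", "a man opens a door"])
repo.write(1, ["A man opens a door", "He picks up a cup"])
fine_grained = repo.read(window=1)    # one summary string per chunk
coarse_grained = repo.read(window=2)  # one summary string per two chunks
```

Reading with a larger `window` yields fewer, broader summaries, which loosely mirrors the idea of extracting information at several temporal scales.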

📊Results:

The paper demonstrates the effectiveness of the proposed framework on several zero-shot visual question-answering benchmarks, including EgoSchema, NExT-QA, IntentQA, and NExT-GQA. The results show that the framework achieves state-of-the-art performance at its scale, indicating a significant improvement over existing approaches.

🤔Problem?:

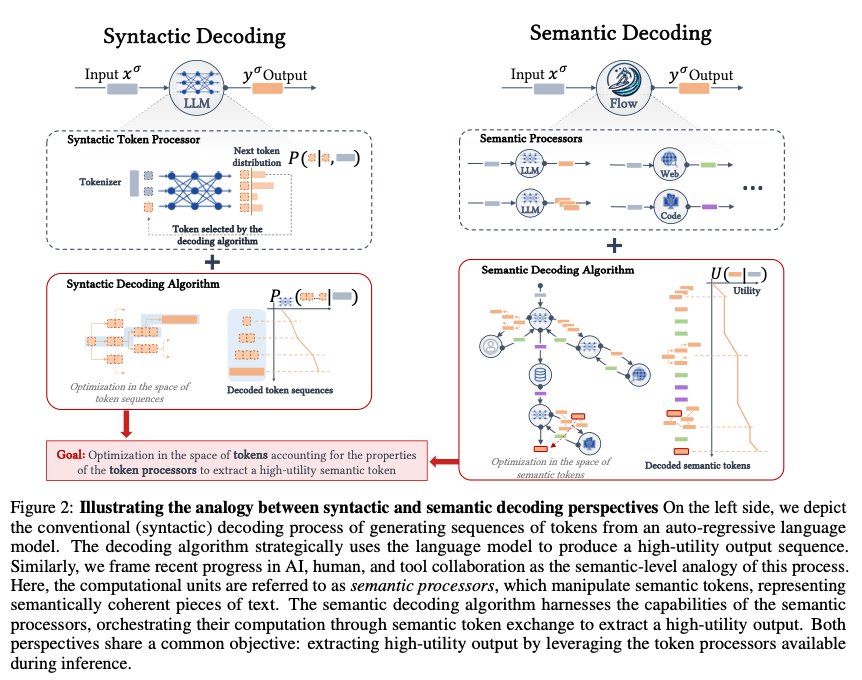

The research paper addresses the limitations of large language models (LLMs) by proposing a novel perspective called "semantic decoding", which aims to optimize collaborative processes between LLMs, human input, and various tools.

💻Proposed solution:

The paper proposes viewing LLMs as semantic processors that manipulate meaningful pieces of information, known as semantic tokens, in semantic space. This allows for dynamic exchanges of semantic tokens between LLMs, humans, and tools, resulting in high-utility outputs. These orchestrated exchanges, referred to as "semantic decoding algorithms", draw parallels to the well-studied problem of syntactic decoding and offer a fresh perspective on the engineering of AI systems.

🤔Problem?:

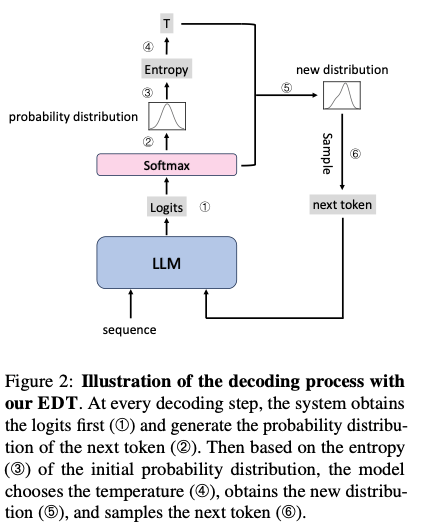

The research paper addresses the issue of finding an optimal temperature parameter for temperature sampling in Large Language Models (LLMs).

💻Proposed solution:

The paper proposes a new method called Entropy-based Dynamic Temperature (EDT) Sampling to solve this problem. It works by dynamically selecting the temperature parameter based on the entropy of the generated text, achieving a more balanced performance in terms of both quality and diversity of the generated text.
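A minimal sketch of the idea, assuming the entropy is measured on the model's next-token distribution at each decoding step. The mapping from entropy to temperature (`base_temp * decay ** (theta / entropy)`), the parameter names, and the `min_temp` floor are illustrative assumptions rather than details confirmed by the summary above.

```python
import math
import random
from typing import List, Tuple


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs: List[float]) -> float:
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def dynamic_temperature(logits: List[float], base_temp: float = 1.0,
                        decay: float = 0.8, theta: float = 1.0,
                        min_temp: float = 0.05) -> float:
    # Assumed mapping: a confident (low-entropy) step gets a low temperature,
    # an uncertain (high-entropy) step gets a temperature close to base_temp.
    h = max(entropy(softmax(logits)), 1e-6)  # avoid division by zero
    return max(min_temp, base_temp * decay ** (theta / h))


def sample_token(logits: List[float], rng: random.Random) -> Tuple[int, float]:
    temperature = dynamic_temperature(logits)
    probs = softmax(logits, temperature)
    token = rng.choices(range(len(logits)), weights=probs, k=1)[0]
    return token, temperature


rng = random.Random(0)
_, temp_confident = sample_token([10.0, 0.0, 0.0, 0.0], rng)  # peaked distribution
_, temp_uncertain = sample_token([1.0, 1.0, 1.0, 1.0], rng)   # flat distribution
```

With this mapping the peaked distribution is sampled almost greedily, while the flat one keeps most of its diversity, which is the quality/diversity trade-off the method targets.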

📊Results:

The paper reports that EDT significantly outperforms existing strategies across different tasks. This is demonstrated through experiments and comprehensive analyses on four different generation benchmarks.

🤔Problem?:

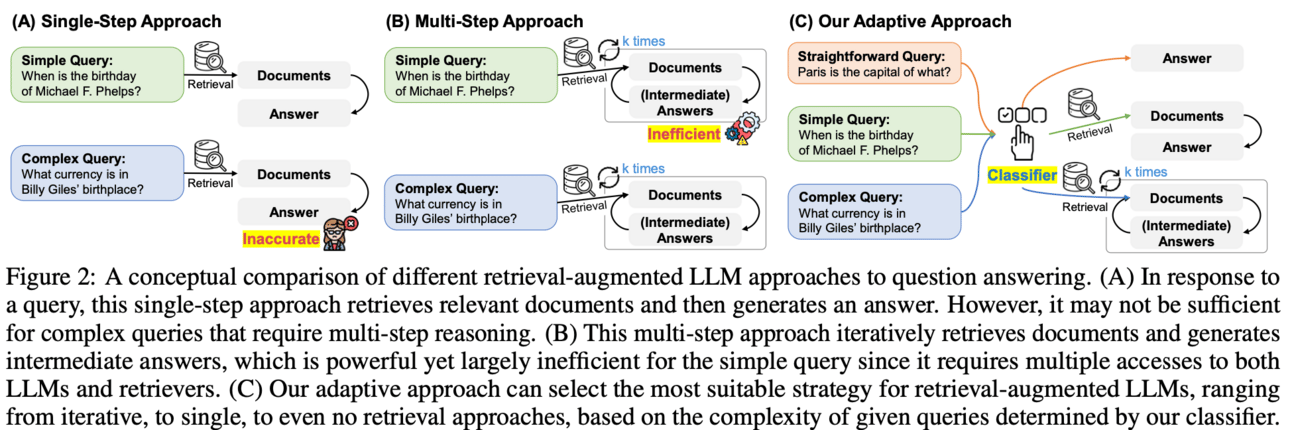

The research paper addresses the issue of response accuracy in Question-Answering (QA) tasks, specifically for complex multi-step queries. While there are various approaches for handling simple and complex queries, they either have unnecessary computational overhead or fail to adequately address multi-step queries which are more common in real-world user requests.

💻Proposed solution:

The team proposes an adaptive QA framework that can dynamically select the most suitable strategy for retrieval-augmented Large Language Models (LLMs) based on the complexity of the query. This is achieved through the use of a classifier, which is a smaller LM trained to predict the complexity level of incoming queries. The labels for this classifier are automatically collected from actual predicted outcomes of models and inherent biases in datasets. This approach offers a balanced strategy, seamlessly adapting between iterative and single-step retrieval-augmented LLMs, as well as no-retrieval methods, to handle a range of query complexities.
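The routing logic can be sketched as follows. The keyword heuristic in `classify_complexity` is a hypothetical stand-in for the trained classifier, and the label names, function names, and prompt format are assumptions made for illustration; `llm` and `retriever` are any callables supplied by the caller.

```python
from typing import Callable, Dict, List, Tuple

LLM = Callable[[str], str]
Retriever = Callable[[str], List[str]]


def classify_complexity(query: str) -> str:
    # Hypothetical stand-in for the smaller LM that predicts query complexity.
    words = query.lower().split()
    if any(w in ("and", "then", "before", "after") for w in words):
        return "multi"
    return "single" if len(words) > 4 else "none"


def answer_without_retrieval(query: str, llm: LLM, retriever: Retriever) -> str:
    return llm(query)


def answer_single_step(query: str, llm: LLM, retriever: Retriever) -> str:
    context = "\n".join(retriever(query))
    return llm(f"{context}\n\nQuestion: {query}")


def answer_multi_step(query: str, llm: LLM, retriever: Retriever,
                      max_steps: int = 3) -> str:
    notes: List[str] = []
    answer = ""
    for _ in range(max_steps):
        # Each round retrieves with the query plus the latest draft answer.
        notes.extend(retriever(f"{query} {answer}".strip()))
        answer = llm("\n".join(notes) + f"\n\nQuestion: {query}")
    return answer


STRATEGIES: Dict[str, Callable[[str, LLM, Retriever], str]] = {
    "none": answer_without_retrieval,
    "single": answer_single_step,
    "multi": answer_multi_step,
}


def adaptive_answer(query: str, llm: LLM, retriever: Retriever) -> Tuple[str, str]:
    label = classify_complexity(query)
    return label, STRATEGIES[label](query, llm, retriever)
```

Simple queries skip retrieval entirely, so the extra retrieval and LLM calls are only spent on queries the classifier marks as complex.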

📊Results:

The authors validate their model on a set of open-domain QA datasets and show that it enhances the overall efficiency and accuracy of QA systems compared to relevant baselines, including other adaptive retrieval approaches.

🤔Problem?:

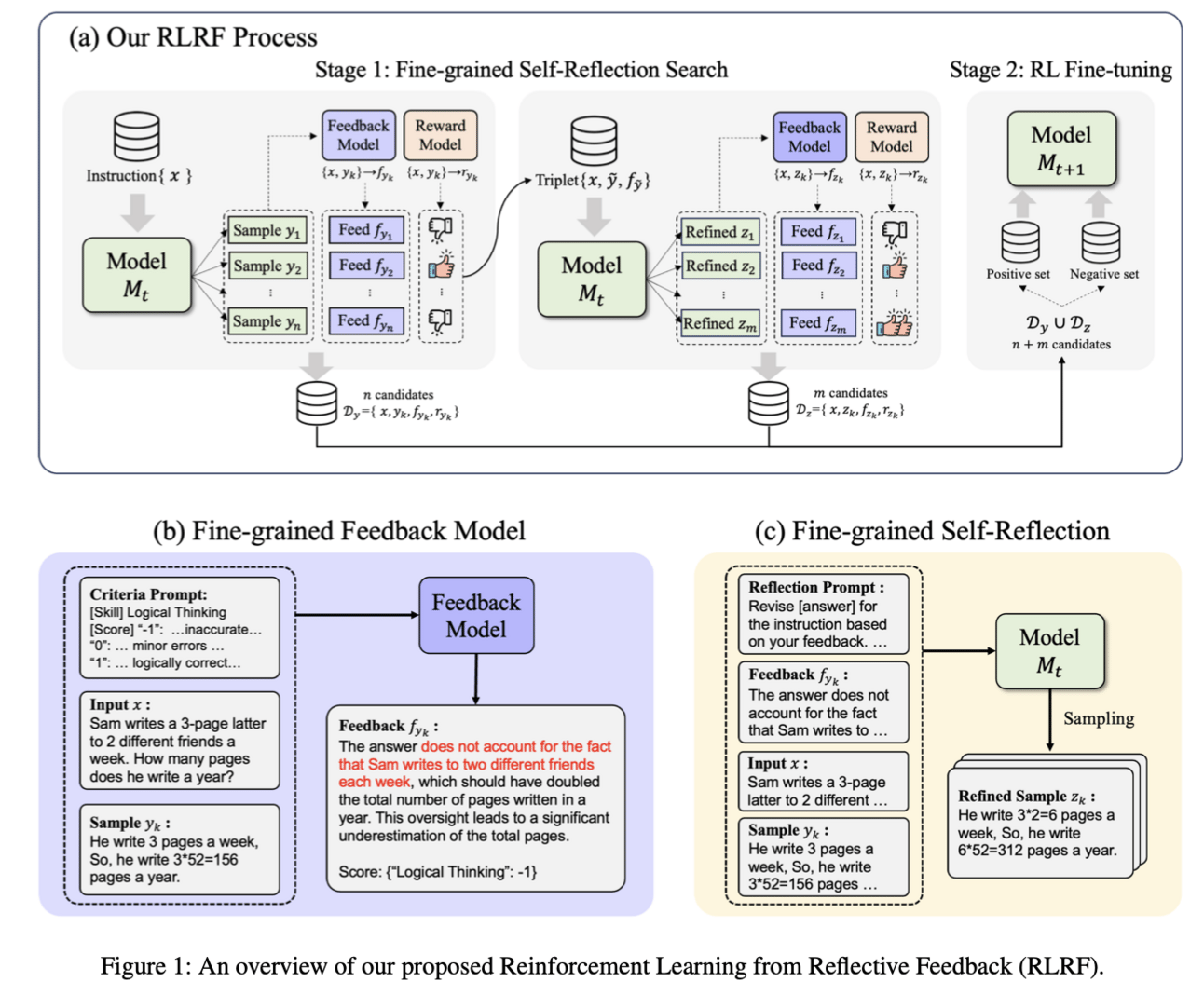

The research paper addresses the issue of superficial alignment in large language models (LLMs) when using reinforcement learning from human preferences. It discusses how this method often prioritizes stylistic changes over improving the downstream performance of LLMs, and how underspecified preferences can obscure directions for aligning the models.

💻Proposed solution:

The research paper proposes a new framework called Reinforcement Learning from Reflective Feedback (RLRF) to address these challenges. It works by leveraging fine-grained and detailed feedback from humans to improve the core capabilities of LLMs. It also employs a self-reflection mechanism to systematically explore and refine LLM responses, and then uses reinforcement learning algorithms to fine-tune the models based on promising responses.

📊Results:

The research paper provides experiments across different evaluations, including Just-Eval, Factuality, and Mathematical Reasoning, to demonstrate the efficacy and transformative potential of RLRF beyond surface-level adjustments. It shows significant performance improvements in these evaluations compared to traditional reinforcement learning methods.

🤔Problem?:

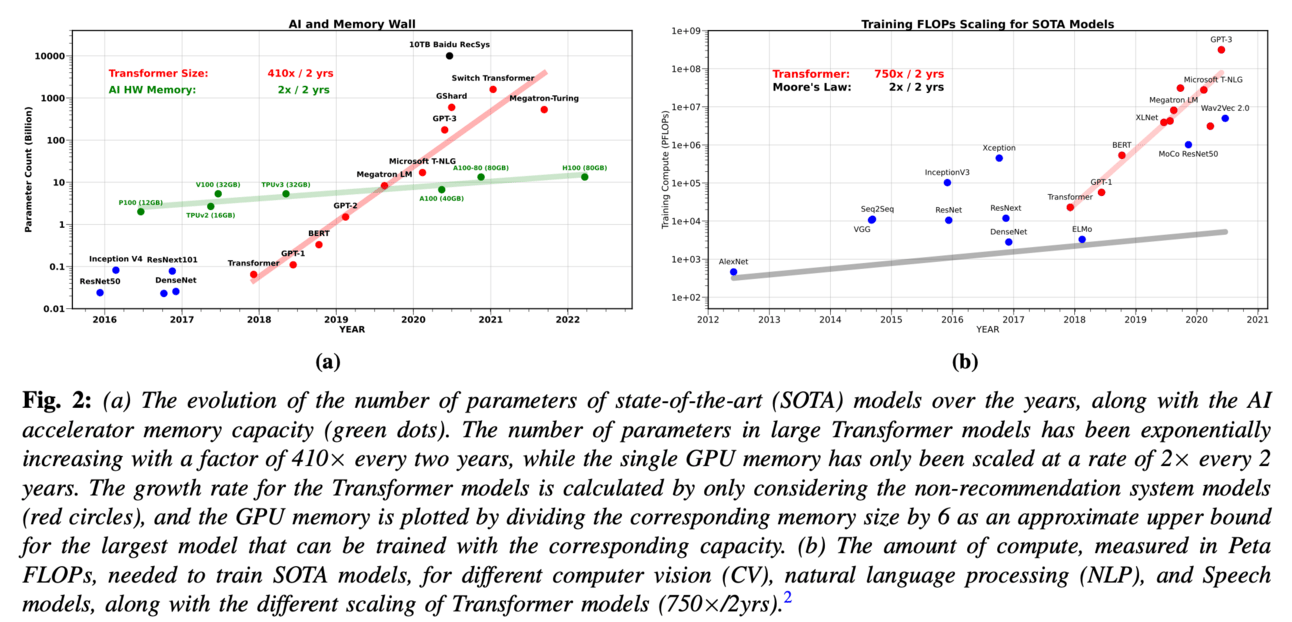

The research paper addresses the problem of memory bandwidth becoming the primary bottleneck in AI applications, particularly in serving large language models (LLMs).

💻Proposed solution:

The research paper proposes a redesign in model architecture, training, and deployment strategies to overcome this memory limitation. This involves finding a balance between model size and compute requirements, as well as optimizing memory usage during training and deployment. This can be achieved through techniques such as model pruning, weight sharing, and data compression.
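A rough back-of-envelope estimate illustrates why bandwidth dominates when serving. It assumes a dense model decoded at batch size 1, where every weight has to be read from memory once per generated token, so the weight bytes divided by memory bandwidth give a lower bound on per-token latency. The model size and bandwidth figures below are hypothetical.

```python
def min_seconds_per_token(num_params: float, bytes_per_param: float,
                          bandwidth_bytes_per_s: float) -> float:
    # Lower bound from memory traffic alone: compute time is ignored.
    return num_params * bytes_per_param / bandwidth_bytes_per_s


# Hypothetical setup: 7e9 parameters, 16-bit weights, 1e12 bytes/s of bandwidth.
latency_fp16 = min_seconds_per_token(7e9, 2, 1e12)
# Same model with 8-bit weights: half the bytes to move per token.
latency_int8 = min_seconds_per_token(7e9, 1, 1e12)
```

Under these assumed numbers the 16-bit model needs at least about 0.014 s per token (roughly 71 tokens per second at best), and halving the bytes per weight halves that bound, which is why compression-style techniques help even when compute is plentiful.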

🤔Problem?:

PSALM addresses the challenges of segmentation tasks. It also tries to overcome the limitation that large multimodal models (LMMs) produce only textual output.

💻Proposed solution:

To overcome the LMM's restriction to textual output, PSALM incorporates a mask decoder and a well-designed input schema to handle a variety of segmentation tasks. This schema includes images, task instructions, conditional prompts, and mask tokens, which enable the model to generate and classify segmentation masks effectively.

📊Results:

PSALM achieves superior results on several benchmarks, such as RefCOCO/RefCOCO+/RefCOCOg, COCO Panoptic Segmentation, and COCO-Interactive, and further exhibits zero-shot capabilities on unseen tasks, such as open-vocabulary segmentation, generalized referring expression segmentation and video object segmentation, making a significant step towards a GPT moment in computer vision.

🤔Problem?:

The research paper tackles the challenge of extending perturbation-based explanation methods, such as LIME and SHAP, to generative language models. While these methods are commonly applied to text classification tasks, adapting them to handle text generation presents unique obstacles, particularly concerning the nature of text as output and dealing with long text inputs.

💻Proposed solution:

The paper proposes a general framework called MExGen, designed to address the challenges associated with applying perturbation-based explanation methods to generative language models. To handle the issue of text as output, the authors introduce the concept of scalarizers, which map text outputs to real numbers. Additionally, to manage long text inputs, they adopt a multi-level approach that gradually refines the granularity of analysis, focusing on algorithms that exhibit linear scaling in model queries. The framework is flexible and can accommodate various attribution algorithms, allowing for experimentation and comparison.
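The role of a scalarizer can be shown with a small sketch. It uses a plain leave-one-out perturbation and a word-overlap scalarizer; both are illustrative choices rather than the specific algorithms or scalarizers from the paper, and the function names are hypothetical. `generate` is any callable that maps an input text to an output text.

```python
from typing import Callable, List


def overlap_scalarizer(reference: str, candidate: str) -> float:
    # Toy scalarizer: fraction of reference words that also occur in the candidate.
    ref_words = reference.lower().split()
    if not ref_words:
        return 0.0
    cand_words = set(candidate.lower().split())
    return sum(w in cand_words for w in ref_words) / len(ref_words)


def leave_one_out_attribution(units: List[str],
                              generate: Callable[[str], str],
                              scalarizer: Callable[[str, str], float]) -> List[float]:
    # One model query for the original input plus one per unit: linear in len(units).
    original_output = generate(" ".join(units))
    scores = []
    for i in range(len(units)):
        perturbed_input = " ".join(units[:i] + units[i + 1:])
        perturbed_output = generate(perturbed_input)
        # A large drop in similarity to the original output marks an important unit.
        scores.append(1.0 - scalarizer(original_output, perturbed_output))
    return scores
```

In a multi-level setting, `units` could first be sentences, with the same routine then rerun on the words of the highest-scoring sentences only, which keeps the number of model queries manageable for long inputs.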

📊Results:

The paper mentions conducting a systematic evaluation, both automated and human, of perturbation-based attribution methods for summarization and context-grounded question answering. While specific performance improvement metrics are not provided in the abstract, the results indicate that the proposed framework can offer more locally faithful explanations of generated outputs compared to existing methods. This suggests a qualitative improvement in the interpretability of generative language models.