Dear Subscribers,

I need your help, please 🙏 I have been running this newsletter for the past month, and I am grateful that you subscribed. I am pouring a lot of hard work into making this newsletter a success, and I need your help: please share it with your fellow researchers, university friends, or colleagues. I sincerely urge you to help me today! Thanks in advance 😊

New models & 🔥 news in the LLM space:

Cohere Command R+ is available on Hugging Face: https://t.co/wAW7fD4qXG

OmniFusion: a new model

Meta launched a new chip!

DeepLearning.AI released a new short course, “Preprocessing Unstructured Data for LLM Applications”.

Google released CodeGemma & RecurrentGemma: two new additions to the Gemma family of lightweight, state-of-the-art open models.

💻Proposed solution:

The research paper proposes LLM2Vec, a simple unsupervised approach that can transform any decoder-only LLM into a strong text encoder. LLM2Vec consists of three steps: enabling bidirectional attention, masked next-token prediction (MNTP), and unsupervised contrastive learning. With these steps, LLM2Vec captures contextual information effectively and learns high-quality text embeddings.
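To make the first two steps concrete, here is a minimal pure-Python sketch; it is not the authors' code. It builds a causal versus a bidirectional attention mask and constructs one masked next-token prediction example, where (following the paper's description) the original token at a masked position i is predicted from the model output at position i - 1. The function names, the `<mask>` token, and the 20% default masking rate are illustrative assumptions, and the contrastive third step is not shown.

```python
import random
from typing import List, Tuple


def attention_mask(n: int, bidirectional: bool) -> List[List[bool]]:
    """mask[i][j] is True when token i is allowed to attend to token j."""
    # Causal (decoder-only default): token i sees only positions j <= i.
    # Bidirectional (step 1): every token sees every position.
    return [[bidirectional or j <= i for j in range(n)] for i in range(n)]


def mntp_example(
    tokens: List[str],
    mask_token: str = "<mask>",  # hypothetical mask symbol
    mask_prob: float = 0.2,      # hypothetical masking rate
    seed: int = 0,
) -> Tuple[List[str], List[Tuple[int, str]]]:
    """Build one masked next-token prediction (MNTP) training example.

    A random subset of positions is replaced by mask_token. The original token
    at masked position i is read from the model output at position i - 1,
    matching the next-token objective the decoder was pre-trained with.
    Returns the corrupted sequence and (output_position, target_token) pairs.
    """
    rng = random.Random(seed)
    corrupted = list(tokens)
    targets: List[Tuple[int, str]] = []
    for i in range(1, len(tokens)):  # position 0 has no previous output to use
        if rng.random() < mask_prob:
            corrupted[i] = mask_token
            targets.append((i - 1, tokens[i]))
    return corrupted, targets


print(attention_mask(3, bidirectional=False))
# [[True, False, False], [True, True, False], [True, True, True]]
print(mntp_example(["the", "cat", "sat"], mask_prob=1.0))
# (['the', '<mask>', '<mask>'], [(0, 'cat'), (1, 'sat')])
```

Shifting the target by one position is what lets the model keep reusing its pre-trained next-token head while learning to exploit the newly enabled bidirectional context.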

📊Results:

LLM2Vec achieves significant performance improvements on English word- and sequence-level tasks, outperforming encoder-only models by a large margin. It also sets a new unsupervised state of the art on the Massive Text Embeddings Benchmark (MTEB). When combined with supervised contrastive learning, LLM2Vec achieves state-of-the-art performance on MTEB among models trained only on publicly available data. These results show that LLM2Vec can efficiently turn LLMs into universal text encoders without expensive adaptation or synthetic data.

🤔Problem?:

The research paper addresses the problem of finding a general reasoning and search method for tasks that have decomposable outputs. Such a method is particularly useful for tasks that require complex problem solving and benefit from breaking the solution down into smaller components.

💻Proposed solution:

The research paper proposes a method called THOUGHTSCULPT, which uses Monte Carlo Tree Search (MCTS) to explore potential solutions through a search tree. It builds solutions one step at a time and uses a domain-specific heuristic (often an LLM evaluator) to evaluate the solutions. One of the key aspects of THOUGHTSCULPT is its ability to revise previous outputs if needed, rather than solely focusing on building the rest of the output.
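The search loop can be illustrated with a small, self-contained MCTS sketch. It is not THOUGHTSCULPT itself: the toy task (spelling `CAT`), the letter-matching `evaluate` function standing in for the LLM evaluator, and the UCT exploration constant are all assumptions. What it does show is the key idea above: a child node can either extend the partial output or revise an earlier part of it.

```python
import math
import random

TARGET = "CAT"      # hypothetical toy task: spell this word
ALPHABET = "ACT"


def evaluate(state: str) -> float:
    """Stand-in for the LLM evaluator: fraction of letters already correct."""
    return sum(a == b for a, b in zip(state, TARGET)) / len(TARGET)


def candidate_moves(state: str) -> list:
    """Children of a node: extend the output, or revise an earlier letter."""
    moves = []
    if len(state) < len(TARGET):
        moves += [state + c for c in ALPHABET]
    for i, old in enumerate(state):
        moves += [state[:i] + c + state[i + 1:] for c in ALPHABET if c != old]
    return moves


class Node:
    def __init__(self, state: str, parent=None):
        self.state, self.parent = state, parent
        self.children: list = []
        self.visits, self.value = 0, 0.0

    def uct(self, c: float = 1.4) -> float:
        if self.visits == 0:
            return float("inf")  # try every child at least once
        exploit = self.value / self.visits
        explore = c * math.sqrt(math.log(self.parent.visits) / self.visits)
        return exploit + explore


def mcts(iterations: int = 300, seed: int = 0):
    rng = random.Random(seed)
    root = Node("")
    best_state, best_score = root.state, evaluate(root.state)
    for _ in range(iterations):
        node = root
        # 1. Selection: follow the highest-UCT child down to a leaf.
        while node.children:
            node = max(node.children, key=lambda ch: ch.uct())
        # 2. Expansion: one child per extend/revise move, then pick one.
        node.children = [Node(s, node) for s in candidate_moves(node.state)]
        node = rng.choice(node.children)
        # 3. Evaluation: score the new candidate directly (no random rollout).
        score = evaluate(node.state)
        if score > best_score:
            best_state, best_score = node.state, score
        # 4. Backpropagation: update statistics on the path to the root.
        while node is not None:
            node.visits += 1
            node.value += score
            node = node.parent
    return best_state, best_score


if __name__ == "__main__":
    print(mcts())
```

Because revisions are ordinary moves, a nearly correct draft such as `CTT` can reach `CAT` by changing one earlier letter instead of restarting the whole output.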

📊Results:

The research paper shows that THOUGHTSCULPT outperforms existing reasoning methods on three challenging tasks: Story Outline Improvement (up to +30% interestingness), Mini-Crosswords Solving (up to +16% word success rate), and Constrained Generation (up to +10% concept coverage). These gains indicate that THOUGHTSCULPT is more effective than existing reasoning methods on these tasks.

🤔Problem?:

The research paper addresses the low adoption rate of retrieval results in downstream steps, particularly in real-time search scenarios. The authors attribute this to existing embedding-based retrieval (EBR) models suffering from "semantic drift" and paying insufficient attention to key information.

💻Proposed solution:

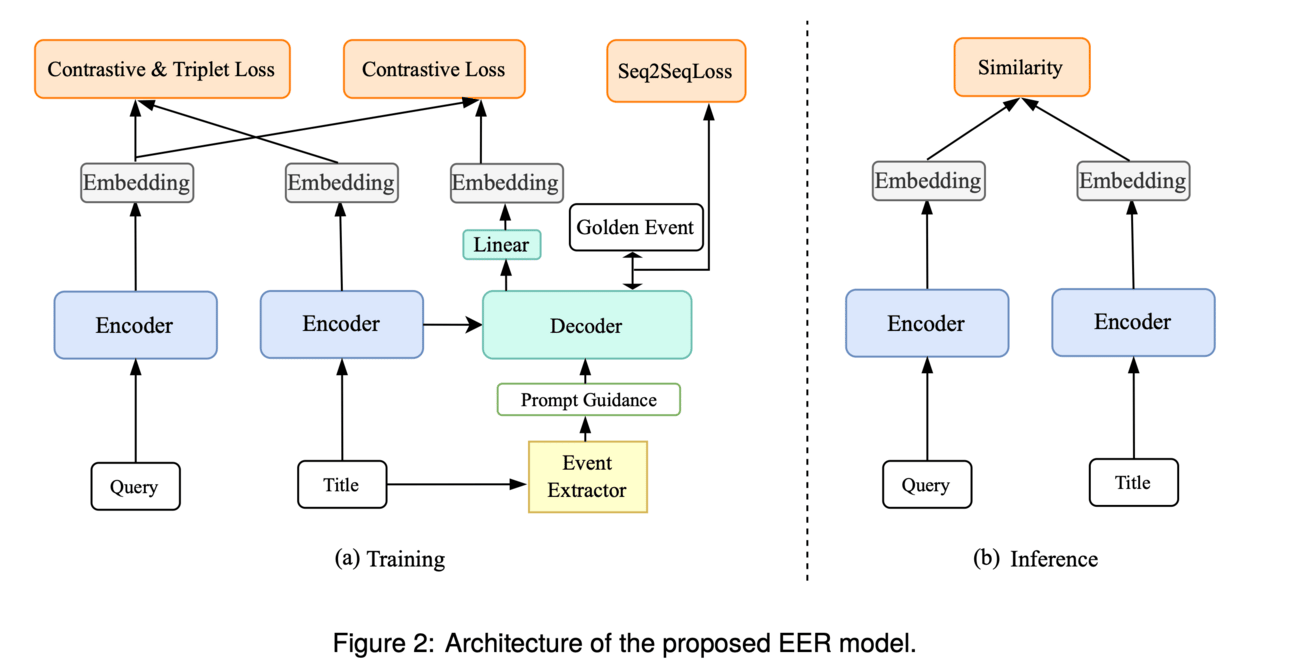

To solve this problem, the research paper proposes a novel approach called EER (Event-enhanced Retrieval), which improves real-time retrieval by enhancing the dual-encoder model of traditional EBR. EER uses contrastive learning to optimize the encoders and adds a decoder module after the document encoder. The decoder is trained with a generative event-triplet extraction scheme, and the extracted events are correlated with query-encoder optimization through comparative learning. The decoder module can be removed during inference.
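EER's exact objectives are defined in the paper; the sketch below only illustrates the general training/inference split. It uses a generic in-batch contrastive (InfoNCE) loss for the dual encoder plus a placeholder number for the auxiliary decoder loss. The `aux_weight` weighting, the temperature, and the plain-list embeddings are assumptions, and the event-triplet decoder itself is not modeled.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def dot(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def info_nce(queries: Sequence[Vector], docs: Sequence[Vector],
             temperature: float = 0.05) -> float:
    """In-batch contrastive loss: docs[i] is the positive for queries[i],
    and every other document in the batch acts as a negative."""
    total = 0.0
    for i, q in enumerate(queries):
        logits = [dot(q, d) / temperature for d in docs]
        m = max(logits)  # subtract the max for a numerically stable log-sum-exp
        log_z = m + math.log(sum(math.exp(x - m) for x in logits))
        total += log_z - logits[i]  # -log softmax probability of the positive
    return total / len(queries)


def training_loss(queries, docs, decoder_loss: float, aux_weight: float = 0.5) -> float:
    """Encoder loss plus a weighted auxiliary term standing in for the
    event-triplet decoder loss (hypothetical weighting)."""
    return info_nce(queries, docs) + aux_weight * decoder_loss


def retrieve(query_vec: Vector, doc_vecs: Sequence[Vector], k: int = 1) -> List[int]:
    """Inference: rank documents using only the encoders' vectors;
    the decoder plays no part here."""
    order = sorted(range(len(doc_vecs)), key=lambda j: dot(query_vec, doc_vecs[j]), reverse=True)
    return order[:k]


print(retrieve([1.0, 0.0], [[0.0, 1.0], [1.0, 0.0], [0.5, 0.5]], k=2))  # [1, 2]
```

Since the decoder only contributes a training-time loss term, dropping it at inference leaves retrieval latency identical to a plain dual encoder.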

📊Results:

Extensive experiments show that EER significantly improves retrieval performance in real-time search. The authors attribute this improvement to its stronger focus on critical event information and the optimization techniques described above.

🤔Problem?:

The research paper addresses the problem of fine-tuning large language models (LLMs) on private data for downstream tasks. This is challenging because of the size of LLMs and the heterogeneous computing and network resources of private edge servers.

💻Proposed solution:

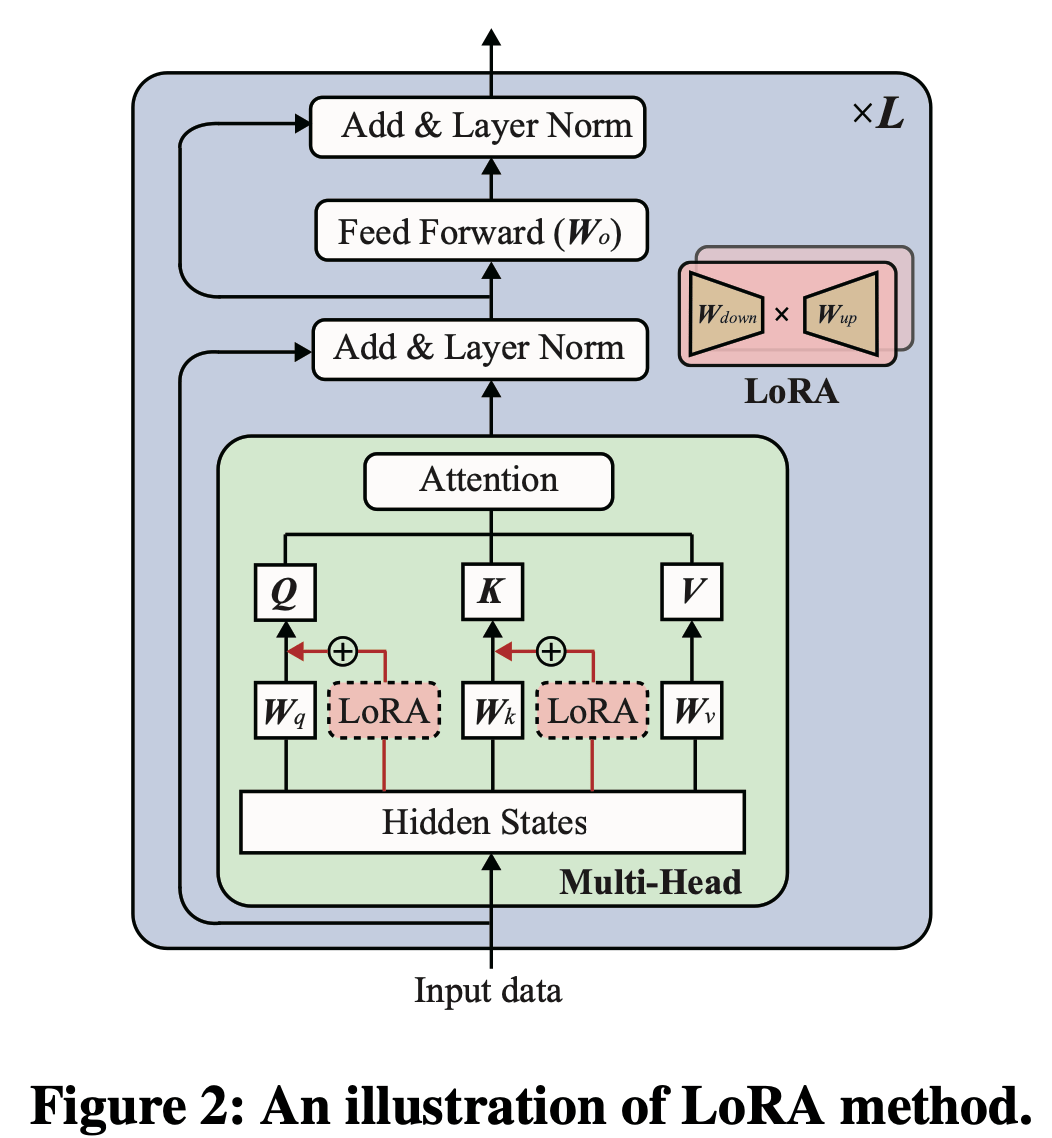

The research paper proposes an automated federated pipeline called FedPipe to solve this problem. FedPipe identifies the weights that need to be fine-tuned and configures low-rank adapters for each selected weight. These adapters are trained on edge servers and then aggregated to fine-tune the whole LLM. FedPipe also quantizes the LLM parameters to reduce their memory footprint according to the requirements of each edge server.
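FedPipe's weight selection, rank configuration, and quantization policy are specific to the paper. The sketch below only shows the generic ingredients such a pipeline builds on: a low-rank update W + BA, FedAvg-style averaging of client adapters weighted by local data size, and symmetric uniform quantization. All function names and the averaging scheme are assumptions, not FedPipe's API.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def matmul(X: Matrix, Y: Matrix) -> Matrix:
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def weighted_average(mats: Sequence[Matrix], weights: Sequence[float]) -> Matrix:
    total = sum(weights)
    rows, cols = len(mats[0]), len(mats[0][0])
    return [[sum(w * M[r][c] for M, w in zip(mats, weights)) / total for c in range(cols)]
            for r in range(rows)]


def aggregate_adapters(client_adapters: Sequence[Tuple[Matrix, Matrix]],
                       num_samples: Sequence[int]) -> Tuple[Matrix, Matrix]:
    """FedAvg-style aggregation: average each LoRA factor, weighted by client data size."""
    B = weighted_average([b for b, _ in client_adapters], num_samples)
    A = weighted_average([a for _, a in client_adapters], num_samples)
    return B, A


def merge(W: Matrix, B: Matrix, A: Matrix, scale: float = 1.0) -> Matrix:
    """Effective weight W + scale * (B @ A); B is d x r and A is r x k."""
    delta = matmul(B, A)
    return [[W[i][j] + scale * delta[i][j] for j in range(len(W[0]))] for i in range(len(W))]


def quantize(values: Sequence[float], bits: int = 8) -> Tuple[List[int], float]:
    """Symmetric uniform quantization of a flat list of weights."""
    qmax = 2 ** (bits - 1) - 1
    scale = max(abs(v) for v in values) / qmax or 1.0  # avoid a zero scale
    return [round(v / scale) for v in values], scale


# Two hypothetical edge servers, each holding a rank-1 adapter for a 2 x 2 weight.
clients = [([[1.0], [0.0]], [[1.0, 0.0]]), ([[0.0], [1.0]], [[0.0, 1.0]])]
B, A = aggregate_adapters(clients, num_samples=[1, 1])
print(merge([[0.0, 0.0], [0.0, 0.0]], B, A))  # [[0.25, 0.25], [0.25, 0.25]]
```

Averaging B and A separately is only one design choice: here it yields [[0.25, 0.25], [0.25, 0.25]], whereas averaging the clients' full updates B @ A would give [[0.5, 0.0], [0.0, 0.5]], so federated LoRA schemes have to decide which quantity they aggregate.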

📊Results:

The research paper demonstrates that FedPipe speeds up model training and achieves higher accuracy than state-of-the-art baselines. This improvement comes from using the resources of edge servers effectively and reducing training costs.

🤔Problem?:

The research paper addresses the problem of enhancing semantic grounding abilities in Vision-Language Models (VLMs) without the need for domain-specific training data, network architecture modifications, or fine-tuning.

💻Proposed solution:

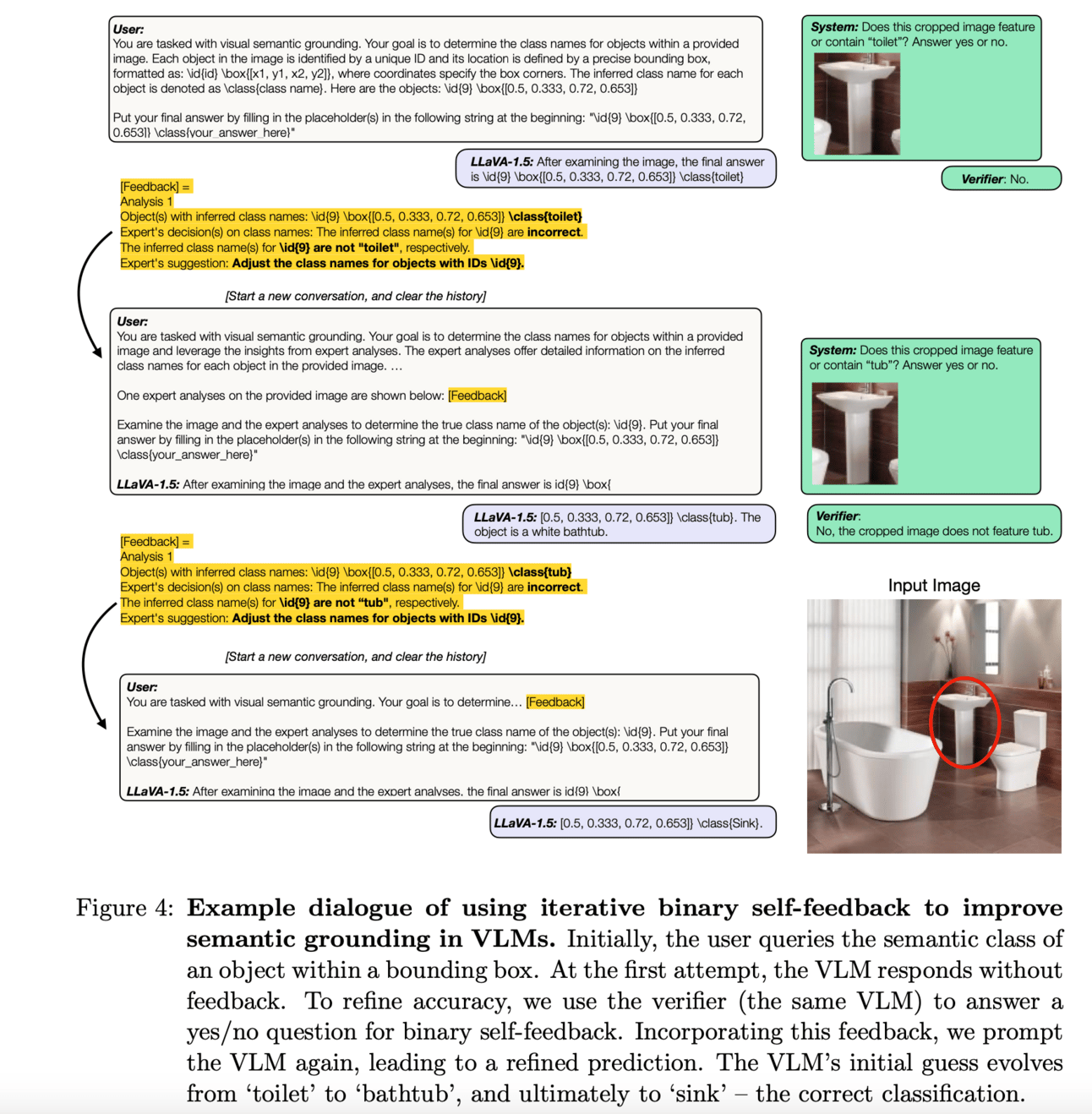

The research paper proposes using feedback, in the form of a binary signal, to improve semantic grounding in VLMs. When prompted appropriately, VLMs can "receive" this feedback and use it either in a single step or iteratively to improve grounding accuracy. The mechanism also includes a binary verification step to mitigate self-correction errors.
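The iterative loop can be sketched in a few lines of Python. This is an illustration under assumptions, not the paper's framework: `predict` is a hypothetical wrapper around a VLM call that receives the answers already marked incorrect, `verify` is any binary signal (a human, or an automated verifier such as the VLM itself), and the toy candidates and prompt wording are made up.

```python
from typing import Callable, List, Optional


def ground_with_feedback(
    predict: Callable[[List[str]], str],
    verify: Callable[[str], bool],
    max_rounds: int = 3,
) -> Optional[str]:
    """Re-prompt a model with binary feedback until an answer is verified."""
    rejected: List[str] = []
    for _ in range(max_rounds):
        answer = predict(rejected)  # the prompt includes answers marked incorrect
        if verify(answer):          # binary signal: correct / incorrect
            return answer
        rejected.append(answer)
    return None


# Toy stand-ins for a VLM and a verifier (hypothetical).
CANDIDATES = ["dog", "cat", "bird"]


def toy_predict(rejected: List[str]) -> str:
    # A real system would send a prompt such as
    # "Your previous answer(s) {rejected} were incorrect." to the VLM.
    return next(c for c in CANDIDATES if c not in rejected)


print(ground_with_feedback(toy_predict, lambda a: a == "cat"))  # cat
```

With `max_rounds=1` the same call returns `None`, which corresponds to the single-step setting where the model gets no second chance.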

📊Results:

The iterative feedback framework improves grounding accuracy by 15 points under noise-free feedback and by up to 5 points under a simple automated binary verification mechanism. This demonstrates the potential of feedback as an alternative technique for improving grounding in internet-scale VLMs.

Papers with datasets/benchmarks:

The Hallucinations Leaderboard -- An Open Effort to Measure Hallucinations in Large Language Models - Proposes the Hallucinations Leaderboard, an open initiative to quantitatively measure and compare the tendency of each model to produce hallucinations. This leaderboard uses a comprehensive set of benchmarks that focus on different aspects of hallucinations, such as factuality and faithfulness, and across various tasks like question-answering, summarization, and reading comprehension.

VisualWebBench: How Far Have Multimodal LLMs Evolved in Web Page Understanding and Grounding? - VisualWebBench consists of seven tasks and 1.5K human-curated instances from 139 real websites. It is designed to assess the capabilities of multimodal LLMs (MLLMs) on a variety of web tasks, including OCR, understanding, and grounding.

📚Want to learn more? Survey paper:

🧯Let’s make LLMs safe!! (LLM security-related papers)

🌈 Creative ways to use LLMs!! (application-based papers)

🤖LLMs for robotics: