🔑 Key takeaways from today’s newsletter

Chain of Draft (CoD) cuts LLM token usage by up to 92.4% while keeping accuracy above 91% on GSM8k.

LADDER boosts a 3B LLM’s math accuracy from 1% to 82% via self-generated problem variants.

LMM-R1 enhances 3B multimodal LLMs, improving multimodal benchmark scores by an average of 4.83% with rule-based RL.

SoRFT-Qwen-7B resolves 21.4% of software issues on SWE-Bench Verified, outperforming larger models.

TOKENSWIFT speeds up 100K-token generation by 3×, reducing LLaMA3.1-8B time from 5 hours to 90 minutes.

Reasoning

Chain of Draft: Thinking Faster by Writing Less

Paper: https://arxiv.org/abs/2502.18600

Authors: Silei Xu et al. (Zoom Communications)

Focus: Reducing verbosity in LLM reasoning while maintaining accuracy

Code: https://github.com/sileix/chain-of-draft

This research paper introduces a novel prompting strategy, Chain of Draft (CoD), designed to make LLMs reason more efficiently. Unlike the verbose Chain-of-Thought (CoT) prompting, CoD mimics human shorthand by encouraging concise, essential intermediate reasoning steps, cutting down on token usage and latency.

Key Innovations:

Minimalistic Reasoning: CoD prompts LLMs to produce brief, information-dense drafts at each step, reducing unnecessary elaboration.

Efficiency Optimization: It achieves CoT-level accuracy with significantly fewer tokens, enhancing inference speed and lowering computational costs.

Results: Evaluated on benchmarks like GSM8k, date understanding, and coin flipping, CoD reduces token usage by up to 92.4% compared to CoT. On GSM8k, for instance, it maintains over 91% accuracy while cutting output tokens by roughly 80% (e.g., from 205.1 to 43.9 for GPT-4o). Latency drops by 76.2% for GPT-4o and 48.4% for Claude 3.5 Sonnet, making CoD well suited to real-time applications without compromising reasoning quality.
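To make the difference concrete, here is a minimal sketch of the two prompting styles and of how the token saving is computed. The prompt wording is an illustrative paraphrase rather than the paper's exact prompt, and `build_messages` and `token_reduction` are hypothetical helper names.

```python
# Illustrative system prompts. The wording is an assumption, not quoted from the paper.
COT_PROMPT = "Think step by step to answer the question. Return the answer after '####'."
COD_PROMPT = (
    "Think step by step, but keep only a minimal draft for each step, "
    "a few words at most. Return the answer after '####'."
)


def build_messages(system_prompt: str, question: str) -> list[dict[str, str]]:
    """Build a chat-style message list in the common system/user format."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": question},
    ]


def token_reduction(baseline_tokens: float, draft_tokens: float) -> float:
    """Percentage of output tokens saved relative to the baseline."""
    return 100.0 * (baseline_tokens - draft_tokens) / baseline_tokens


if __name__ == "__main__":
    # Made-up question, used only to show the message layout.
    messages = build_messages(COD_PROMPT, "A shop has 20 apples and sells 12. How many are left?")
    print(messages[0]["content"])
    # GPT-4o on GSM8k, using the average output lengths quoted above.
    print(f"{token_reduction(205.1, 43.9):.1f}% fewer output tokens")
```

With the GPT-4o averages quoted above, the saving works out to about 78.6%, which is the figure the summary rounds to 80%.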

LADDER: Self-Improving LLMs Through Recursive Problem Decomposition

Paper: https://arxiv.org/abs/2503.00735

Authors: Toby Simonds et al. (Tufa Labs)

Focus: Enabling LLMs to autonomously enhance reasoning via self-generated problem variants

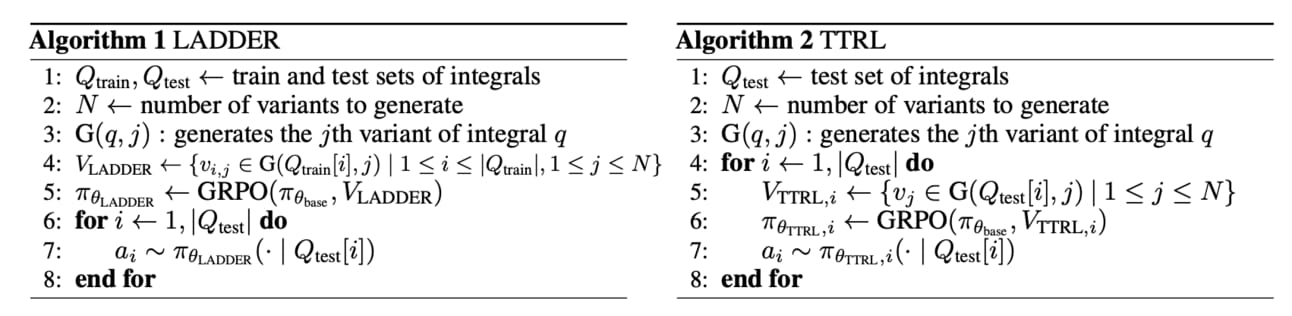

This paper presents LADDER, a framework that allows LLMs to self-improve by recursively breaking down complex problems into simpler variants. This autonomous learning process, guided by reinforcement learning (RL) and numerical verification, eliminates the need for human supervision or curated datasets.

Key Innovations:

Recursive Variant Generation: LLMs create a tree of progressively simpler problem versions, forming a difficulty gradient for incremental learning.

Test-Time Reinforcement Learning (TTRL): At inference, TTRL refines solutions by applying RL to problem-specific variants, boosting performance dynamically.

Results: On mathematical integration tasks, LADDER improves a Llama 3.2 3B model’s accuracy from 1% to 82% on undergraduate-level problems. A Qwen2.5 7B Deepseek-R1 Distilled model achieves 73% on the 2025 MIT Integration Bee qualifying exam, surpassing GPT-4o (42%). With TTRL, accuracy rises to 90%, outpacing OpenAI’s o1 (80%), demonstrating the power of self-directed learning for complex reasoning.
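The two ingredients described above, a tree of simpler variants and a numerical check on integration answers, can be sketched roughly as follows. This is a toy illustration under stated assumptions, not the authors' implementation: `simplify` stands in for the LLM call that proposes easier variants, and the check intervals, tolerance, and all function names are hypothetical.

```python
import math
from typing import Callable


def simpson(f: Callable[[float], float], a: float, b: float, n: int = 200) -> float:
    """Composite Simpson's rule on [a, b]; n is rounded up to an even count."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3


def verify_antiderivative(
    f: Callable[[float], float],
    F: Callable[[float], float],
    intervals: tuple = ((0.0, 1.0), (0.5, 2.0)),  # assumed check intervals
    tol: float = 1e-6,                            # assumed tolerance
) -> bool:
    """Accept F if F(b) - F(a) matches the numeric integral of f on every interval."""
    return all(abs((F(b) - F(a)) - simpson(f, a, b)) < tol for a, b in intervals)


def build_variant_tree(problem: str, simplify: Callable[[str], list[str]], depth: int) -> dict:
    """Recursively expand a problem into simpler variants, up to `depth` levels."""
    if depth == 0:
        return {"problem": problem, "variants": []}
    children = [build_variant_tree(v, simplify, depth - 1) for v in simplify(problem)]
    return {"problem": problem, "variants": children}


if __name__ == "__main__":
    print(verify_antiderivative(math.cos, math.sin))     # correct antiderivative
    print(verify_antiderivative(math.cos, lambda x: x))  # wrong antiderivative
    tree = build_variant_tree("p", lambda p: [p + "-easier"], depth=2)
    print(tree["variants"][0]["variants"][0]["problem"])
```

A verifier of this kind gives a pass/fail reward signal without any human labels, which is what lets the RL loop run on self-generated variants.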

LMM-R1: Empowering 3B LMMs with Strong Reasoning Abilities Through Two-Stage Rule-Based RL

Paper: https://arxiv.org/abs/2503.07536

Authors: Yingzhe Peng et al. (Southeast University, Ant Group, et al.)

Focus: Enhancing reasoning in compact 3B-parameter multimodal LLMs

Code: https://github.com/TideDra/lmm-r1

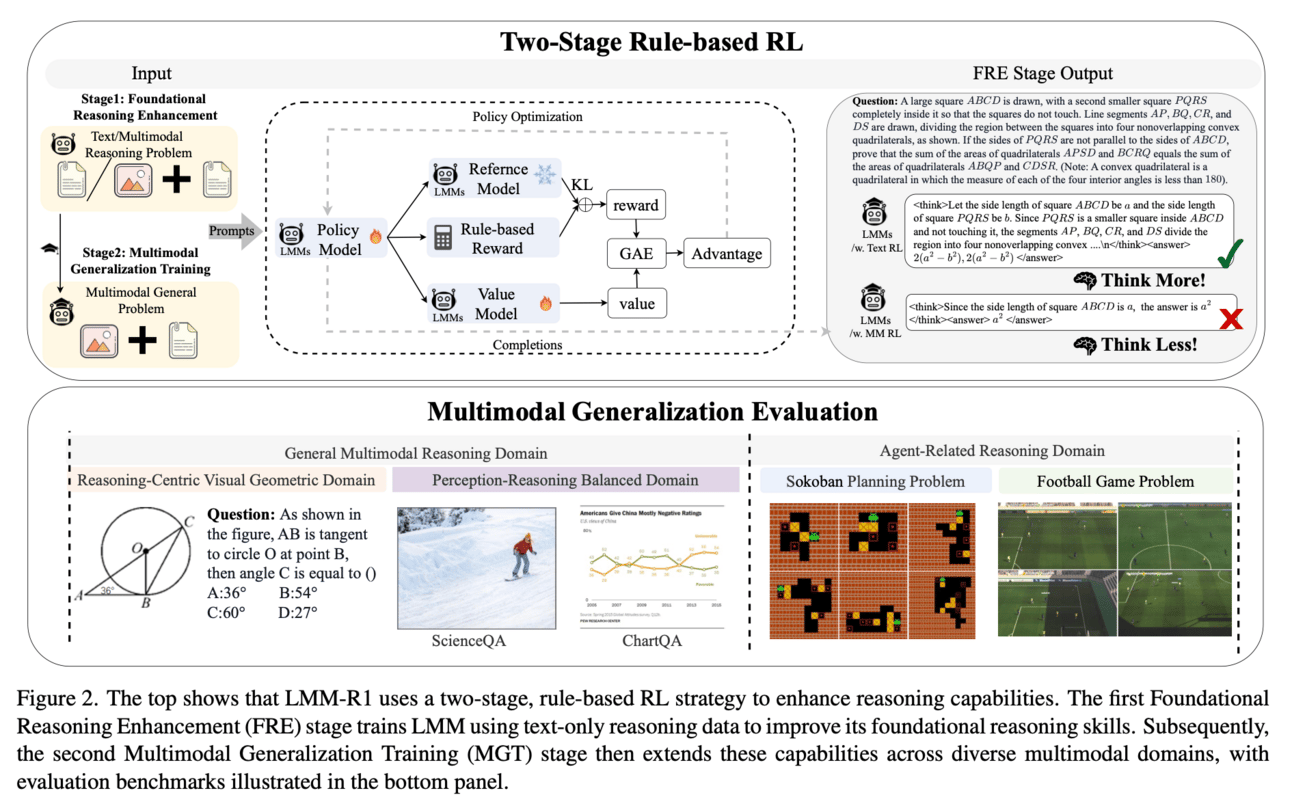

"LMM-R1" proposes a two-stage rule-based RL framework to boost reasoning in 3B-parameter large multimodal models (LMMs), overcoming their limited capacity and the scarcity of high-quality multimodal reasoning data. It first strengthens foundational reasoning with text-only data, then generalizes it to multimodal contexts.

How: LMM-R1 trains in two RL stages. Foundational reasoning enhancement (FRE) uses text-only data to build reasoning skills, and multimodal generalization training (MGT) then carries those skills over to multimodal inputs. Rule-based rewards, which combine a format reward and an accuracy reward, guide learning efficiently and avoid extensive human annotation.
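A rule-based reward of this kind might look like the sketch below. The `<think>`/`<answer>` output template, the exact-match accuracy check, and the equal weighting are all assumptions made for illustration; the summary above only states that format and accuracy rewards are combined.

```python
import re

# Hypothetical output template: reasoning in <think> tags, final answer in <answer> tags.
TEMPLATE = re.compile(r"^<think>.*?</think>\s*<answer>(.*?)</answer>$", re.DOTALL)


def format_reward(response: str) -> float:
    """1.0 if the response follows the template, else 0.0."""
    return 1.0 if TEMPLATE.match(response.strip()) else 0.0


def accuracy_reward(response: str, ground_truth: str) -> float:
    """1.0 if the extracted answer equals the ground truth exactly, else 0.0."""
    match = TEMPLATE.match(response.strip())
    if match is None:
        return 0.0
    return 1.0 if match.group(1).strip() == ground_truth.strip() else 0.0


def rule_based_reward(response: str, ground_truth: str, format_weight: float = 0.5) -> float:
    """Weighted sum of format and accuracy rewards (the weight is an assumed value)."""
    fmt = format_reward(response)
    acc = accuracy_reward(response, ground_truth)
    return format_weight * fmt + (1.0 - format_weight) * acc


if __name__ == "__main__":
    print(rule_based_reward("<think>2 + 2</think><answer>4</answer>", "4"))
    print(rule_based_reward("<think>2 + 2</think><answer>5</answer>", "4"))
    print(rule_based_reward("The answer is 4", "4"))
```

Because both checks are plain string rules, the reward needs no learned reward model and no human annotation.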

Results: Applied to Qwen2.5-VL-Instruct-3B, LMM-R1 achieves average gains of 4.83% on multimodal benchmarks (e.g., MathVerse: 34.64% to 41.55%) and 4.5% on text-only benchmarks (e.g., MATH500: 63.4% to 65.8%). On the complex Football Game task, it improves by 3.63 points (15.36 to 18.99), narrowing the gap to larger models like GPT-4o (21.20). This approach shows that text-based reasoning can transfer effectively to multimodal domains, offering a cost-efficient training strategy.

Sketch-of-Thought: Efficient LLM Reasoning with Adaptive Cognitive-Inspired Sketching

Paper: https://arxiv.org/abs/2503.05179

Authors: Not specified (PDF unavailable)

Focus: Improving LLM reasoning efficiency with cognitive-inspired sketching

This paper introduces a method, Sketch-of-Thought, to enhance reasoning efficiency in LLMs. Drawing from cognitive processes, it uses adaptive sketching to streamline problem-solving, though detailed insights are limited due to the unavailable PDF.

How: This paper proposes adaptive sketching. It likely employs concise, cognitive-inspired sketches to guide reasoning, reducing computational overhead. Also, it aims to maintain accuracy while speeding up inference, though specifics are unclear without the full paper.

Results: Without access to the paper, specific results cannot be detailed. Based on the abstract, it likely demonstrates improved reasoning efficiency across various tasks, aligning with the trend of optimizing LLM performance for practical use.

Context length improvement

SoRFT: Issue Resolving with Subtask-oriented Reinforced Fine-Tuning

Paper: https://arxiv.org/abs/2502.20127

Authors: Zexiong Ma et al. (Peking University & ByteDance)

Focus: Enhancing context handling for issue resolving in software development

While not primarily focused on context length extension, SoRFT indirectly improves LLMs’ ability to manage complex, long-context tasks like software issue resolving. Targeting open-source models, it addresses cost and privacy concerns of commercial APIs by decomposing issue resolution into manageable subtasks: file localization, function localization, line localization, and code edit generation.

Two-Stage Training:

Rejection-Sampled Supervised Fine-Tuning (SFT): Filters Chain-of-Thought (CoT) data using ground-truth from pull requests, teaching the model structured reasoning.

Rule-Based Reinforcement Learning (RL): Employs Proximal Policy Optimization (PPO) with rewards based on Fβ scores (weighted toward recall), refining the model’s output on each subtask.

Context Relevance: By breaking down repository-scale tasks, SoRFT enables LLMs to process extensive codebases effectively, leveraging long-context data from open-source projects.
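For the localization subtasks, a recall-weighted reward of the kind described above could be computed as in this sketch. It assumes the standard F-beta definition with β = 2 and set-valued predictions (for example, file paths); the function name and the β value are illustrative choices, not taken from the paper.

```python
def f_beta(predicted: set[str], ground_truth: set[str], beta: float = 2.0) -> float:
    """Standard F-beta score between two sets; beta > 1 weights recall over precision."""
    if not predicted or not ground_truth:
        return 0.0
    true_positives = len(predicted & ground_truth)
    if true_positives == 0:
        return 0.0
    precision = true_positives / len(predicted)
    recall = true_positives / len(ground_truth)
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)


if __name__ == "__main__":
    truth = {"a.py", "b.py"}
    # One extra file: recall stays at 1.0, precision drops to 2/3.
    print(round(f_beta({"a.py", "b.py", "c.py"}, truth), 3))
    # One missed file: precision stays at 1.0, recall drops to 0.5.
    print(round(f_beta({"a.py"}, truth), 3))
```

With β = 2, predicting one extra file scores about 0.909 while missing one file scores about 0.556, so the reward penalizes overlooked locations more than spurious ones.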

Results: On SWE-Bench Verified, SoRFT-Qwen-7B resolves 21.4% of issues, outperforming larger models like SWE-Gym-Qwen-32B (20.6%). It also boosts general code tasks (e.g., 90% on RepoQA vs. 85% baseline), showcasing enhanced long-context comprehension and generalization.

TOKENSWIFT: Accelerating Ultra-Long Sequence Generation

Paper: https://arxiv.org/abs/2502.18890

Authors: Tong Wu et al. (Shanghai Jiao Tong University & BIGAI)

Focus: Accelerating ultra-long sequence generation

TOKENSWIFT, introduced in this paper, accelerates the generation of ultra-long sequences (up to 100K tokens) without compromising quality. Traditional autoregressive (AR) decoding takes hours at this length (e.g., about 5 hours for LLaMA3.1-8B), a bottleneck for applications like creative writing or long reasoning traces.

The authors identify three hurdles:

Frequent Model Reloading: Slows generation due to I/O bottlenecks.

Prolonged KV Cache Growth: Increases complexity as sequences lengthen.

Repetitive Content: Degrades quality in long outputs.

TOKENSWIFT counters these with:

Multi-Token Generation & Token Reutilization: Generates multiple tokens per forward pass and reuses frequent n-grams, reducing reloads.

Dynamic KV Cache Management: Updates partial caches iteratively, maintaining efficiency.

Contextual Penalty: Mitigates repetition, enhancing diversity (Distinct-n scores improve).
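The repetition-related pieces can be sketched in a few lines. The penalty below follows the common repetition-penalty rule restricted to a sliding window of recent tokens; the window size, the penalty value, and the function names are assumptions for illustration, not the paper's settings. Distinct-n is computed in its usual form as the ratio of unique n-grams to total n-grams.

```python
def apply_contextual_penalty(
    logits: list[float],
    generated: list[int],
    window: int = 1024,    # assumed window size
    penalty: float = 1.2,  # assumed penalty strength
) -> list[float]:
    """Down-weight tokens that appeared within the last `window` generated tokens."""
    recent = generated[-window:] if window > 0 else []
    out = list(logits)
    for token in set(recent):
        # Dividing positive logits and multiplying negative ones both lower the score.
        out[token] = out[token] / penalty if out[token] > 0 else out[token] * penalty
    return out


def distinct_n(tokens: list[int], n: int) -> float:
    """Ratio of unique n-grams to total n-grams; higher means more diverse output."""
    ngrams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
    return len(set(ngrams)) / len(ngrams) if ngrams else 0.0


if __name__ == "__main__":
    print(apply_contextual_penalty([2.0, -1.0, 0.5], generated=[0, 1], penalty=2.0))
    print(distinct_n([1, 2, 1, 2], n=2))
    print(distinct_n([1, 2, 3, 4], n=2))
```

Limiting the penalty to a recent window keeps its cost constant as the sequence grows, which matters at 100K tokens.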

Results: TOKENSWIFT achieves over 3× speedup (e.g., 90 minutes vs. 5 hours for 100K tokens on LLaMA3.1-8B), with lossless accuracy across models (1.5B to 14B). It saves up to 5.54 hours on a 14B model, making ultra-long generation practical.

Explore Past Highlights

If you liked what you read and are interested in reading more, here are our archived newsletters from past issues.